Transaction merging, locks, background threads and performance

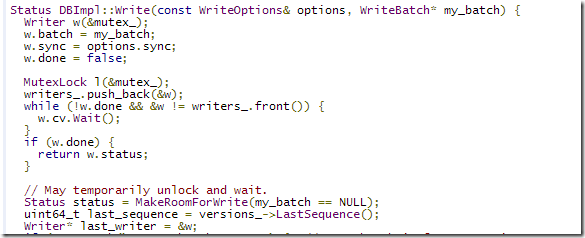

The following code is taken from the leveldb codebase:

This code is responsible for merging concurrent transactions. It operates using a mutex and conditional variables. And the idea really impressed me when I first saw it.

I implemented that in Voron because I think that this is a great way to gain higher concurrency rates while still having a single writer mode (which is much easier to work with).

However, as it turned out, this isn’t a really good idea in practice. The way conditional variables work, you lock the mutex, check your condition, and then wait on the conditional variable. Waking up from a conditional variable means that you have re-acquired the mutex.

What I want to happen:

- Multiple concurrent threads will try to write at the same time.

- One of them goes through and start writing, it takes all the pending writes and write them to disk.

- It then releases all the waiting threads whose work it already completed.

- They all move on from there without having to wait on one another.

However, because of the way conditional variables are implemented, what will actually happen is that they each will wake up one at a time, acquiring the mutex lock, then releasing it. However, while they are being released, they compete with one another, and they also compete with the next write.

We profiled this approach, and the result was that we could see how we were spending pretty much all of our time in just synchronizing threads.

Instead, we moved to a different approach, in which the first write will actually start off a new thread, dedicated to writing batches. The way it works, when a thread want to write a batch, it will add that to a queue and wake up the background writer thread, then wait on an event. The background writer will read all the current batches and merge them into a single write transaction.

When it is done, it will wake up all the sleeping writers. We tried to use TaskCompletionSource for that, initially, but we found that the inline nature of tasks made that too expensive to use. Instead, we use ManualResetEventSlim, and we explicitly wait / wake them. We even reuse events, so we don’t have to keep creating and disposing them.

The end result is that we have a pretty sequential process for actually doing concurrent writes, which turn it into a simple producers/consumer problem from threading perspective, and the actual writes into a simple write things out as fast as you can process them.

This also gives us the chance to do some re-ordering of operations to get better performance overall.

Comments

A single dedicated writer is always, simpler, cleaner solution. In .NET world, EventStore shows the way to go.

Looks like you got rid of the mutex because you don't need to synchronize concurrent access to actual data writing code. This could save you some thread context switching for multiple concurrent writers, but will slow you down when you have only a single writer thread. The leveldb implementation using cond variables and a mutex is quite elegant. Maybe you could keep it but improve, maybe by using lockless synchronization primitives? I'm also curious how you implemented thread synchronization for putting items into write queue and retrieving them by the writer thread. Obviously you couldn't lock the queue for the whole retrieval operation, because this wouldn't be an improvement over leveldb. Is this why you've been talking about immutable lists recently?

Rafal, I am not sure that I am following you. We do need to sync data writing code. We just moved it to a separate thread, so it can't compete with itself. We don't do context switching here, we are simply adding to the queue and waiting on the background thread to complete. There cannot be multiple writers threads, we don't support it. The queue is just a standard ConcurrentQueue.

Rafal, The leveldb approach locks very elegant, but it means that you have a big convoy here. With my approach, you have each thread only waiting for their own writes, not waiting for other writes.

I also liked the LevelDB idea, but not the mutex locks, so quite a while ago I experimented with a few designs that attempt to minimize contention and be mostly lockess. It may be that this is what Rafal is hinting at.

I added the (somewhat ugly) result of one of those proof of concept experiments in a gist (https://gist.github.com/anonymous/8099257). If I remember well, it performed quite reasonable. It is based on some ideas from Joe Duffy's reader writer spinlock that I was reading about at the time.

Maybe i went too far with speculation. The main point is that it doesn't matter which thread handles write operations as long as it has to be single threaded - you need to suspend all other threads while writing is done. So alex is right, this is what i was thinking about. Maybe some clever wait implementation would reduce lock contention without requiring a dedicated writer thread, and without paying context switch penalty when there's only one transaction to be written. ConcurrentQueue is already lock free, what's left is a lock-free decision if a current thread should do the writing or should wait until someone else does it.

ps Alex, I had too much beer today to be able to understand your code clearly, but i think it has same lock contention problem as the leveldb implementation - it's the same Monitor pattern. I wonder if lock free implementation is possible...

Rafal, I am not sure if it is due to too much beer ... I agree the code of this PoC is a bit of a kludge .

It is not the same monitor pattern as LevelDB's though, because each writer thread has its own condition variable (i.e. not one that is shared between threads) that it enqueues and blocks on if it fails to acquire write responsibility. The thread that did acquire write responsibility will wake each of these blocked threads when it has performed the write for them and set the result.

Ok, let's try, my quick and dirty attempt at illustrating what I was talking about. This code is not meant to be compiled or executed ;) https://gist.github.com/lafar6502/8105682

@Rafal, that quick and dirty attempt is quite similar in approach to my PoC kludge. Concurrent queue instead of "thread id indexed" non-sharing array, but same purpose. And a single event instead of multiple thread specific condition variables. But similar in idea anyway.

You do need to be running the "DoSynchronized" in a loop though, because the thread with writer responsibility may be at line 24, at the time when a thread that failed to acquire write responsibility is at line 18, and would therefore block at line 39 until a next thread calls "DoSynchronized".

yeah, i've noticed and fixed some obvious logic errors, but what's most important is that this code should not have a single lock contention problem as long as you provide a separate wait event for each thread. Enough coding for today, hope this will still make sense tomorrow ;)

alex, https://gist.github.com/anonymous/8099257#file-gatedbatchwriter-cs-L27 - looks suspicious. You appear to want to threat the array elements as volatile, not the array itself. https://gist.github.com/anonymous/8099257#file-gatedbatchwriter-cs-L91 - bug waiting to happen. What happen if I have 1000 threads and only 4 cores? https://gist.github.com/anonymous/8099257#file-gatedbatchwriter-cs-L156 - Under busy scenario, your first writer is now busy doing the writes for everyone else, and its work is delayed forever. You are also going to have a lot of waking / sleeping threads, as they check their own status. Consider that we need to issue IO here, which can take tens of ms. That means that you'll constantly be thread switching just to fall asleep again.

Rafal, The reason I am requiring single thread writer is that we are writing to the end of the file, and that is pretty much a single threaded operation by definition. And we kind of need the lock there. We need to wait until the operation is actually completed before we can continue on with other stuff.

Rafal, We ended up with something similar, but instead of using one of the user's threads to do so, we are doing it in a separate background thread. That made it simpler to work with in general.

Ok, I fixed more errors in my 'gateway' and was able to run some comparison with 'separate thread' version. The verdict is that lockfree is much faster for single thread, but with increasing number of threads the performance difference becomes negligible. 1 thread: 0.07 secs vs 1.07 secs 10 threads: 1.49 secs vs 1.96 secs 100 threads (or whatever is the threadpool limit): 16.12 sec vs 16.71 sec Two implementations I compared are at https://gist.github.com/lafar6502/8105682

Rafal, In your scenario, ConcurrentGateway still have the race condition. But more interestingly, you aren't bounding the amount of work. You can't have a thread just wait forever while it is doing other's threads work. Next, you are waking up ALL threads, all the time. Assume that you are limited to 4 merged work items. And you have 16 threads doing work. and on every work completed, you will wake all of them, which will compete for CPU time just to go back tto sleep again.

Yeah, i realized that quickly after posting here - both race condition and the starvation problem. And both these problems aren't easy to solve. Condition variables are protected by mutex for a reason... PS but why do you think i'm waking all threads? There's one wait event per thread...

Rafal, I am sorry, I didn't notice it was thread static.

Also, What would be the result when you are actually doing some no trivial amount of work (such as I/O) in those actions?

With IO or any other significant work you'd be quite likely to have a context switch inside the action, which probably nullifies performance benefits from lockless operation. Thread synchronization cost is very small compared to I/O time (so I wonder why it appeared to be a problem in your leveldb tests).

Rafal, The problem with leveldb is that each thread comes up, takes the lock (preventing anyone else from doing anything). That gives us a pretty poor scenario for handling things in general, especially under a lot of pressure. Because you may have 20 threads waiting for work to complete, it is completed, but now you have to have each of those threads in turn take a turn at the lock, realize it is over, and release it.

so, are you saying leveldb could be significantly improved with lockless code discussed here (+ some fixes)?

@ayende hmm ... it appears you woke up with your "code review" goggles on :D

As I mentioned, the posted code is the result of a proof of concept experiment, not production ready code. And as a proof of concept (i.e. for working around the LevelDB shared mutex issue, by using lockless/contentionless constructs) it served its purpose quite well.

The issues you mentioned can be easily resolved, while retaining the same ideas as incorporated in this PoC. So challenge accepted. A somewhat refactored version that fixes the issues you mentioned can be found here: https://gist.github.com/anonymous/8112801

That single writer thread does it write synchronously?

Rafal, I think so, yes.

Comment preview