Reviewing FASTER: Let’s start with managed code

My last post commented on the FASTER paper; now I’m getting to reading the code. This is a pleasant change of scenery for me, since FASTER is written in C# (there is a C++ port that I’ll also be going through later). As someone who spends most of their time in C#, that makes it much easier to go through the code. On the other hand, this was clearly written by someone who spent most of their time in C++. This means that naming conventions and the general approach to writing the code sometimes directly contradict C# conventions. Some of that really bugs me.

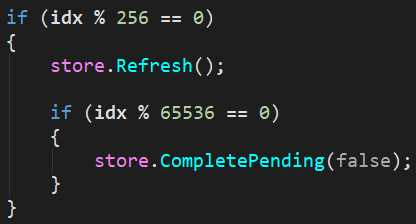

The first thing I did was to try to run the benchmark on my own machine, to get relative numbers. It died with an AccessViolationException, which was a disappointment. I’m going to ignore that and just read through the code. One thing that I did notice when reading through the benchmark code is this piece:

This maps closely to some of the things they mentioned in the paper, where each thread refreshes every 256 ops and completes pending operations every 65536 ops. The reason I call this out is that having this done in what is effectively client code is a bad idea in terms of design. The benchmark code is operating under a continuous stream of operations and can afford to amortize such things. However, real code needs to deal with client code that isn’t well behaved, and you can’t assume that you’ll be operating in this kind of environment.
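To make the pattern concrete, here is a minimal sketch of what such an amortizing client loop looks like. The `IStoreSession` interface, `CountingSession` and `BenchmarkLoop` are hypothetical names I made up for illustration; they are not the FASTER API, only the 256 / 65536 intervals come from the text above.

```csharp
using System;

// Hypothetical stand-in for a per-thread store session (NOT the FASTER API).
public interface IStoreSession
{
    void Upsert(long key, long value);
    void Refresh();          // let this thread catch up with shared state
    void CompletePending();  // drain operations that could not finish inline
}

// Test double that only counts calls, so the cadence is observable.
public sealed class CountingSession : IStoreSession
{
    public long Upserts, Refreshes, Completions;
    public void Upsert(long key, long value) => Upserts++;
    public void Refresh() => Refreshes++;
    public void CompletePending() => Completions++;
}

public static class BenchmarkLoop
{
    public const int RefreshInterval = 256;           // from the paper / benchmark
    public const int CompletePendingInterval = 65536; // from the paper / benchmark

    // The caller, not the store, is responsible for the periodic maintenance.
    public static void Run(IStoreSession session, long operations)
    {
        for (long i = 1; i <= operations; i++)
        {
            session.Upsert(i, i);
            if (i % RefreshInterval == 0) session.Refresh();
            if (i % CompletePendingInterval == 0) session.CompletePending();
        }
    }
}
```

The thing to notice is that the maintenance only happens if the caller keeps issuing operations. A thread that stops after 200 operations never calls `Refresh()` at all in this shape, which is exactly the badly behaved client scenario.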

Based on the semantics discussed in the paper and what the code is showing me, I would assume that the general model for actually using this is to spawn off a bunch of threads and then listen to some data source. For example, a socket. On each network read, the thread would apply a batch of operations. Note that this means that you have to deal with threads that may be arbitrarily delayed. I would expect that under such a scenario, you’ll see a very different performance profile. You won’t have all threads working in tandem and staying close to one another.

When getting to the code itself, I started with the native portions, trying to figure out what FASTER is doing at the lowest level of the system. It is mostly advanced file operations (things like telling the OS not to defrag files, allowing disk space to be allocated without zeroing it first, etc). As far as I can see, this isn’t actually being called from the current code, but I assume that they at least tested out this approach. Another piece of native code they have is related to calling __rdtsc(), which they use in the HiResTimer class.

This can be replaced completely by the .NET Stopwatch class and I believe that dropping the adv-file-ops and readtsc native projects is possible and straightforward, allowing for a fully managed FASTER impl. Note that it is still using a lot of interop calls, it seems, but at least so far, I think that most of them are not necessary. To be fair, given the way the code is structured, a lot of the native code is about I/O with pointers, which until the Great Spanification in .NET Core was a PITA to deal with.
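As a rough sketch of the Stopwatch replacement, something like the following would cover high resolution timing without any native code. `ManagedTimer` is a name I invented here; only `Stopwatch.GetTimestamp()` and `Stopwatch.Frequency` are real .NET APIs, and I’m assuming the timer is only used to measure elapsed intervals.

```csharp
using System;
using System.Diagnostics;

// Hypothetical managed replacement for a native rdtsc-based timer.
public static class ManagedTimer
{
    // Stopwatch ticks are not TimeSpan ticks; scale by Stopwatch.Frequency
    // (ticks per second) to get wall-clock units.
    public static double ToMicroseconds(long stopwatchTicks) =>
        stopwatchTicks * 1_000_000.0 / Stopwatch.Frequency;

    // Measures how long the given delegate takes, in microseconds.
    public static double MeasureMicroseconds(Action work)
    {
        long start = Stopwatch.GetTimestamp();
        work();
        long end = Stopwatch.GetTimestamp();
        return ToMicroseconds(end - start);
    }
}
```

`Stopwatch.IsHighResolution` can be checked at startup if you want to verify that the platform actually provides a high resolution counter.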

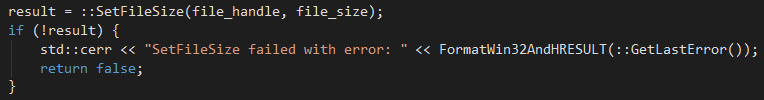

In general, by the way, the code reads more as a proof of concept than a production codebase. I notice this in particular with the general approach to error handling. Here are two examples:

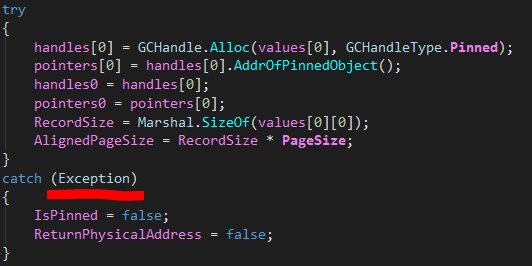

This is from the native code, from which it is a PITA to return errors. However, writing to the console from a library is not an error reporting strategy. What really bugged me was this code, from the MallocFixedPageSize code:

If there is any error in the process of pinning memory, just ignore it? Leave the memory pinned?
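For contrast, here is a minimal sketch of how I would expect pinning to be handled so that a failure does not leave memory pinned forever. `PinnedBuffer<T>` is a hypothetical type of my own, not something from the FASTER code; `GCHandle.Alloc`, `AddrOfPinnedObject` and `Free` are the real APIs.

```csharp
using System;
using System.Runtime.InteropServices;

// Hypothetical wrapper: owns an array and keeps it pinned until disposed.
public sealed class PinnedBuffer<T> : IDisposable where T : unmanaged
{
    private GCHandle _handle;
    public T[] Items { get; }
    public IntPtr Address { get; }

    public PinnedBuffer(int length)
    {
        Items = new T[length];
        _handle = GCHandle.Alloc(Items, GCHandleType.Pinned);
        try
        {
            Address = _handle.AddrOfPinnedObject();
        }
        catch
        {
            // Do not swallow the error and do not leak the pin:
            // release the handle, then let the exception propagate.
            _handle.Free();
            throw;
        }
    }

    public void Dispose()
    {
        // Safe to call more than once; Free() is only valid on a live handle.
        if (_handle.IsAllocated) _handle.Free();
    }
}
```

The point is simply that the error is surfaced to the caller and the pin has a single, obvious owner that releases it.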

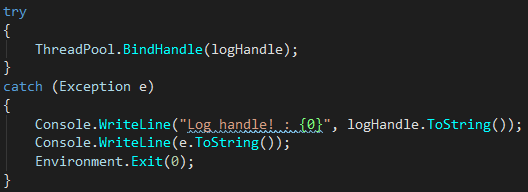

Here is another example that made me cringe:

Moving on to the CodeGen directory, it gets interesting. The paper talks about dynamic code generation, but I didn’t expect to see such wide usage of this. In particular, large sections of the code (13 files, to be exact, over 6000 lines of code) are dynamically loaded, transformed and compiled on the fly when you create the hashtable.

I understand the reasoning for this: you want to get the JIT to compile the best possible code that it can for the specific operations you execute. However, this makes it pretty hard to follow what is going on there. In particular, code generating code makes it harder to follow what ends up actually going on. There are also better ways to do it: either through generic struct parameters to specialize the code, or by only generating the dedicated connecting methods as needed and not recompiling large portions on the fly.
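Here is a small sketch of the generic struct parameter approach. All the names (`IUpdateFunctions`, `SumFunctions`, `TinyStore`) are made up for illustration, and the dictionary is just a stand-in for the real hash table. The relevant fact is that the runtime compiles a separate native instantiation of generic code for each value type argument, so the calls on `_functions` are direct (and inlinable) rather than interface dispatch.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical callback contract for user-defined update logic.
public interface IUpdateFunctions
{
    long InitialValue(long input);
    long Merge(long existing, long input);
}

// Implemented as a struct so that TinyStore<SumFunctions> gets its own
// specialized machine code, with no boxing and no virtual calls.
public readonly struct SumFunctions : IUpdateFunctions
{
    public long InitialValue(long input) => input;
    public long Merge(long existing, long input) => existing + input;
}

public sealed class TinyStore<TFunctions> where TFunctions : struct, IUpdateFunctions
{
    // Stand-in for the real hash table; only the specialization matters here.
    private readonly Dictionary<long, long> _data = new Dictionary<long, long>();
    private readonly TFunctions _functions = default;

    public void ReadModifyWrite(long key, long input)
    {
        _data[key] = _data.TryGetValue(key, out long existing)
            ? _functions.Merge(existing, input)
            : _functions.InitialValue(input);
    }

    public long Read(long key) => _data[key];
}
```

You get the specialization from the JIT for free this way, and the code you read in the repository is the code that actually runs.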

The Device directory isn’t really interesting. Mostly pretty trivial I/O operations, so I’m not going to discuss it in depth.

Next, getting to the Epoch, which was really interesting to read about in the paper. The actual implementation raises a few questions. The Epoch value is a 32-bit integer, which means that it will wrap fairly quickly. It looks like the Epoch is bumped every time you need a new page. Given the operation rates that are reported, I would expect it to happen on a regular basis (this is also required for the system to progress properly and sync up). My reading is that wrapping of the Epoch counter will result in Bad Things Going On.
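To illustrate why wrapping worries me, here is a small sketch. The `EpochWrap` class and its methods are hypothetical and are not taken from the FASTER code; the bump rate passed to `TimeToWrap` is an arbitrary number you supply, not a measurement. The wrap-aware comparison is one common way to tolerate overflow, shown only for contrast.

```csharp
using System;

public static class EpochWrap
{
    // Bumping a 32-bit epoch; past int.MaxValue it silently wraps to int.MinValue.
    public static int Bump(int epoch) => unchecked(epoch + 1);

    // Naive ordering check: "was this item retired at or before the safe epoch?"
    public static bool IsSafeNaive(int itemEpoch, int safeEpoch) =>
        itemEpoch <= safeEpoch;

    // Wrap-tolerant variant: compares the signed difference instead, which is
    // correct as long as the two epochs are less than 2^31 apart.
    public static bool IsSafeWrapAware(int itemEpoch, int safeEpoch) =>
        unchecked(safeEpoch - itemEpoch) >= 0;

    // How long until a counter starting at zero reaches int.MaxValue,
    // for a caller-supplied (assumed) bump rate.
    public static TimeSpan TimeToWrap(double bumpsPerSecond) =>
        TimeSpan.FromSeconds(int.MaxValue / bumpsPerSecond);
}
```

With the naive check, an item retired at `int.MaxValue` is never considered safe once the epoch has been bumped past it, because the new epoch is a large negative number. Widening the counter to 64 bits is the other obvious way out.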

Then there is this:

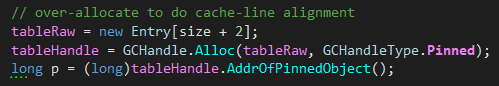

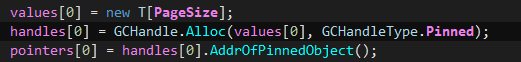

Size, in this case, is always set to 128. The size of Entry is 64 bytes, this means that this call will allocate 8,320 bytes in Gen0, immediately pin it and never let it go. This is going to result in memory fragmentation. It would be better to allocate this on the Large Object Heap and avoid the issue. In fact, the same issue can be seen in the memory allocation, where the code does:

In this case, however, PageSize is 65,536, and for any element type larger than a single byte, this will automatically go to the Large Object Heap anyway. I’m going to be a bit ungenerous here, but I’m not sure if this was intentional or not.
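The arithmetic behind both cases can be sketched like this. `HeapPlacement` is a hypothetical helper of mine, and it deliberately ignores the array header overhead, so treat it as an approximation of the 85,000 byte Large Object Heap threshold rather than an exact predictor.

```csharp
using System;

public static class HeapPlacement
{
    // Objects of 85,000 bytes or more are allocated on the Large Object Heap.
    public const int LargeObjectThresholdBytes = 85_000;

    // Approximate payload size of an array (header overhead is ignored).
    public static long PayloadBytes(int elementCount, int elementSizeBytes) =>
        (long)elementCount * elementSizeBytes;

    public static bool LikelyOnLargeObjectHeap(int elementCount, int elementSizeBytes) =>
        PayloadBytes(elementCount, elementSizeBytes) >= LargeObjectThresholdBytes;
}
```

The 8,320 byte epoch table is far below the threshold, so it starts life in Gen0 and gets pinned there. A page of 65,536 elements only stays out of the Large Object Heap if each element is a single byte (65,536 bytes); at two bytes per element it is already 131,072 bytes.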

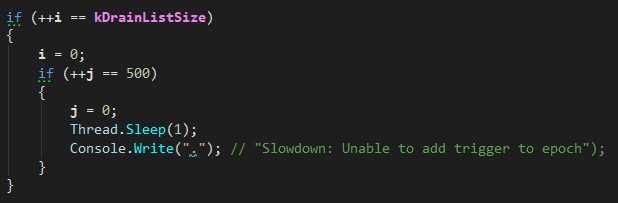

I’m firmly convinced that this is pure POC code, here is the snippet that finally did it for me, from the BumpCurrentEpoch() code.

Note that I don’t mind the Thread.Sleep() here, it makes sense in context, but the Console.WriteLine cinched the deal for me. This is not something that you can take and use, but something to look at as you implement this properly.

I have to say that I find it very hard to go through the code, mostly because it’s in C# and there are certain expectations that I have from the code which are routinely violated. I think that I’m going to stop reading the C# codebase and switch over to the C++ implementation. I expect this to be more idiomatic, both because it is the second time this was written and because the people writing the code are clearly more used to writing in C++.

More posts in "Reviewing FASTER" series:

- (06 Sep 2018) Summary

- (05 Sep 2018) When the data hits the disk

- (04 Sep 2018) Reading data from disk

- (03 Sep 2018) The hash structure

- (31 Aug 2018) Working with the file system

- (30 Aug 2018) Digging into the C++ impl

- (29 Aug 2018) Let’s check these numbers

- (28 Aug 2018) Let’s start with managed code

- (27 Aug 2018) Reading the paper
