Reviewing FASTER: The hash structure

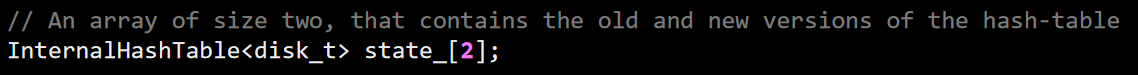

Continuing my stroll through the FASTER codebase, I want to try to get to the core stuff, not just the periphery. This is a bit hard, because there is a lot of code here and it seems to be hard to find where the real action is happening. I decided to find how FASTER is handling Get and Put operations. There is something called InternalHashTable inside FasterKv, which seems promising, so I’m going to follow up on that. Interestingly enough, it shows up as:

So there is some funky stuff here to consider too, but we’ll deal with that later. For now, I want to understand what it is doing with the InternalHashTable. Inside that class, there is the notion of buckets:

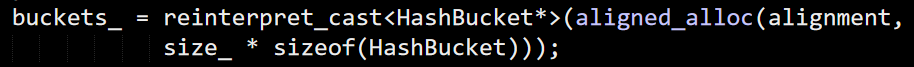

And a bucket is:

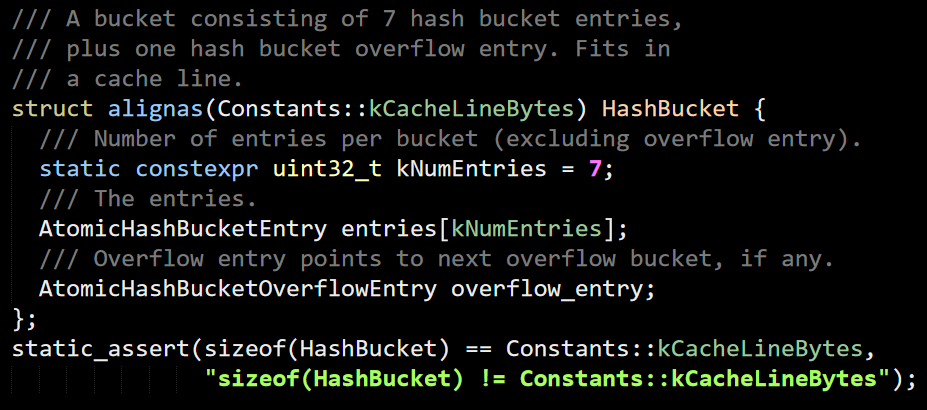

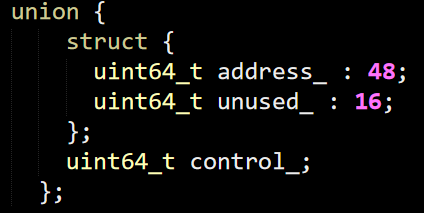

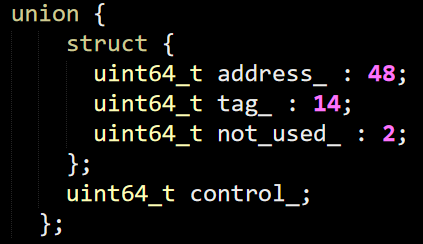

This starts to get interesting for me, so let’s dig deeper into HashBucketEntry, where they use the same union trick I talked about in my previous posts. This allows them to easily define an atomic version of it with very little effort.

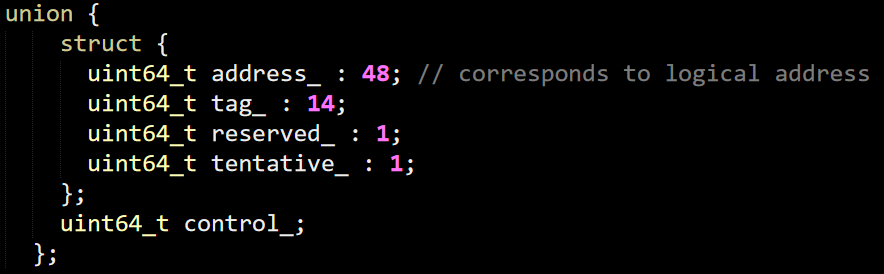

There is also the overflow entry definition, which looks like this:

I’ve got to say, I’m really liking this approach for handling data packing as well as multi-threading concerns. It also plays very well with the actual cache line architecture of modern CPUs.
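To make the packing idea concrete, here is a minimal sketch of my own, not the FASTER code. I'm assuming a layout of 48 address bits, 14 tag bits, one unused bit and one tentative bit, and a bucket of seven entries plus one overflow pointer. The names `PackedEntry`, `Bucket` and `TryInstall` are hypothetical. I also use explicit shifts and masks rather than a union of bit-fields, since bit-field layout and union type punning are compiler dependent in C++.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Assumed layout, for illustration only:
// 48 address bits | 14 tag bits | 1 unused bit | 1 tentative bit.
constexpr uint64_t kAddressBits = 48;
constexpr uint64_t kTagBits = 14;
constexpr uint64_t kAddressMask = (uint64_t{1} << kAddressBits) - 1;
constexpr uint64_t kTagMask = (uint64_t{1} << kTagBits) - 1;
constexpr uint64_t kTentativeBit = uint64_t{1} << 63;

struct PackedEntry {
  uint64_t control = 0;  // the whole entry as one word; 0 means "unused slot"

  static PackedEntry Make(uint64_t address, uint16_t tag, bool tentative) {
    PackedEntry e;
    e.control = (address & kAddressMask) |
                ((uint64_t{tag} & kTagMask) << kAddressBits) |
                (tentative ? kTentativeBit : 0);
    return e;
  }
  uint64_t address() const { return control & kAddressMask; }
  uint16_t tag() const {
    return static_cast<uint16_t>((control >> kAddressBits) & kTagMask);
  }
  bool tentative() const { return (control & kTentativeBit) != 0; }
  bool unused() const { return control == 0; }
};

// Seven 8-byte entries plus one 8-byte overflow word is 64 bytes,
// which is the cache line size on most current x86-64 CPUs.
struct alignas(64) Bucket {
  static constexpr size_t kNumEntries = 7;
  std::atomic<uint64_t> entries[kNumEntries];
  std::atomic<uint64_t> overflow;
};
static_assert(sizeof(Bucket) == 64, "bucket should fill exactly one cache line");

// Publishes `desired` into `slot` only if the slot still holds `expected`.
// Because the entry is a single word, one compare-and-swap updates the
// address, tag and tentative flag together.
inline bool TryInstall(std::atomic<uint64_t>& slot, PackedEntry expected,
                       PackedEntry desired) {
  uint64_t exp = expected.control;
  return slot.compare_exchange_strong(exp, desired.control);
}
```

The point of the exercise is that all the fields travel together in a single 64-bit word, so a writer never exposes a half-updated entry to a concurrent reader.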

There is also the KeyHash struct, whose heart is this:

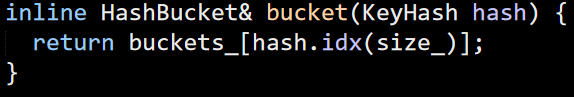

So far, this lines up very neatly with how the FASTER paper talks about things. Given a KeyHash, here is how you get its bucket:

This simply indexes into the buckets_ array by masking the hash with (size_ - 1), which keeps only the low bits of the hash. That tells me that the FASTER structure is very sensitive to the choice of hash function that will be used. This SO answer has some amazing detail on the analysis of the output of different hash functions, which can give you some idea about why this matters so much. This post is an in-depth discussion of this, as well as of why the choice of hash function matters. This is enough for me to stop everything and try to find what kind of hash function is actually being used here.
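A small sketch of why masking makes the hash quality matter so much. This is my own illustration and it assumes the table size is a power of two. `BucketIndex` and `IdentityHash` are hypothetical names, not FASTER functions.

```cpp
#include <cassert>
#include <cstdint>

// True when n is a power of two (the mask trick below depends on it).
constexpr bool IsPowerOfTwo(uint64_t n) { return n != 0 && (n & (n - 1)) == 0; }

// Keeps only the low log2(table_size) bits of the hash.
// For a power-of-two size this is the same as hash % table_size.
inline uint64_t BucketIndex(uint64_t hash, uint64_t table_size) {
  assert(IsPowerOfTwo(table_size));
  return hash & (table_size - 1);
}

// A deliberately bad hash: the key is its own hash.
inline uint64_t IdentityHash(uint64_t key) { return key; }
```

With a table of 1024 buckets and keys that happen to be multiples of 1024 (aligned offsets, for example), `BucketIndex(IdentityHash(key), 1024)` is 0 for every key, so everything piles into a single bucket. Only the low bits are ever consulted, so the hash function has to push the entropy of the key down into them.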

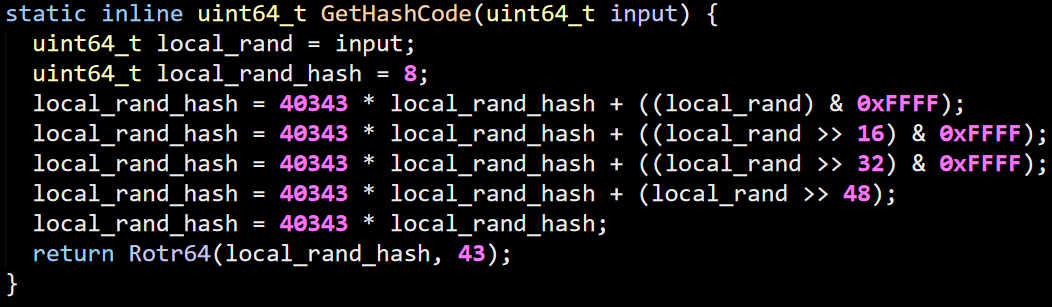

The user gets to define their own hash function, and if they do so badly (for example, using the identity function since they have an 8-byte value and they need an 8-byte hash) that is going to be fun. The function that they seem to be using in their examples is this one:

A bit of searching on the interweb didn’t narrow it down to something specific, it may be something that they wrote specifically for that. Given that the paper doesn’t mention this, it doesn’t seem to be something special.

Given that 40343 is a prime, it seems like the pretty common pattern of multiplying by a prime for each 16-bit portion of the key. The idea is that the multiplication by a prime will spread the bits around. I have no idea how high the quality of this hash function is, since actual analysis would take at least a few hours. At least at a glance, it doesn’t seem to be awful, but I do wonder whether something like FNV-1 would do better. In fact, this is very similar to FNV-1, but with a different prime and offset, and with addition instead of XOR.
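To show the comparison with FNV-1 side by side, here is a sketch of the two patterns. The first function is my own illustration of "multiply by a prime, then add the next 16-bit chunk" and is not a copy of the function used in the FASTER examples. The seed value and the name `MultiplyAddHash` are assumptions. The second is 64-bit FNV-1 with its standard offset basis and prime, applied to the eight bytes of the key.

```cpp
#include <cassert>
#include <cstdint>

// Illustrative only: multiply by a prime, then ADD each 16-bit chunk of the
// key, lowest chunk first. The default seed is an arbitrary assumption.
inline uint64_t MultiplyAddHash(uint64_t key, uint64_t seed = 8) {
  constexpr uint64_t kPrime = 40343;
  uint64_t h = seed;
  for (int shift = 0; shift < 64; shift += 16) {
    h = kPrime * h + ((key >> shift) & 0xFFFF);
  }
  return h;
}

// 64-bit FNV-1 over the 8 bytes of the key: multiply by the FNV prime,
// then XOR in one byte at a time (least significant byte first).
inline uint64_t Fnv1Hash(uint64_t key) {
  constexpr uint64_t kOffsetBasis = 14695981039346656037ull;
  constexpr uint64_t kFnvPrime = 1099511628211ull;
  uint64_t h = kOffsetBasis;
  for (int shift = 0; shift < 64; shift += 8) {
    h *= kFnvPrime;
    h ^= (key >> shift) & 0xFF;
  }
  return h;
}
```

Structurally the two loops are nearly identical. The differences are the constants, the chunk size (16 bits versus 8), and whether each chunk is mixed in with addition or XOR.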

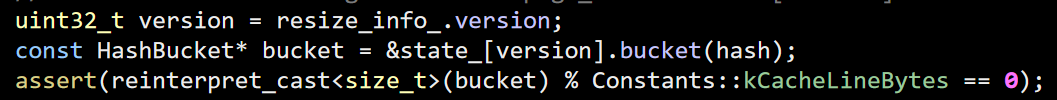

The actual InternalHashTable class doesn’t actually do much. There are a few functions around checkpointing and recovery, but they aren’t very interesting at this point. I’m going back to actually working with the hash table and looking at how reads work. They end up in the InternalRead method, which does the majority of the work inside the FindEntry function. The first thing that happens there is:

The first line is there to handle growing the hash table on the fly and will be ignored for now. As you can see, this basically just gives me the relevant bucket. Here is the next stage: once we have a bucket, we need to find the relevant entry that matches what we are looking for. Here is the code:

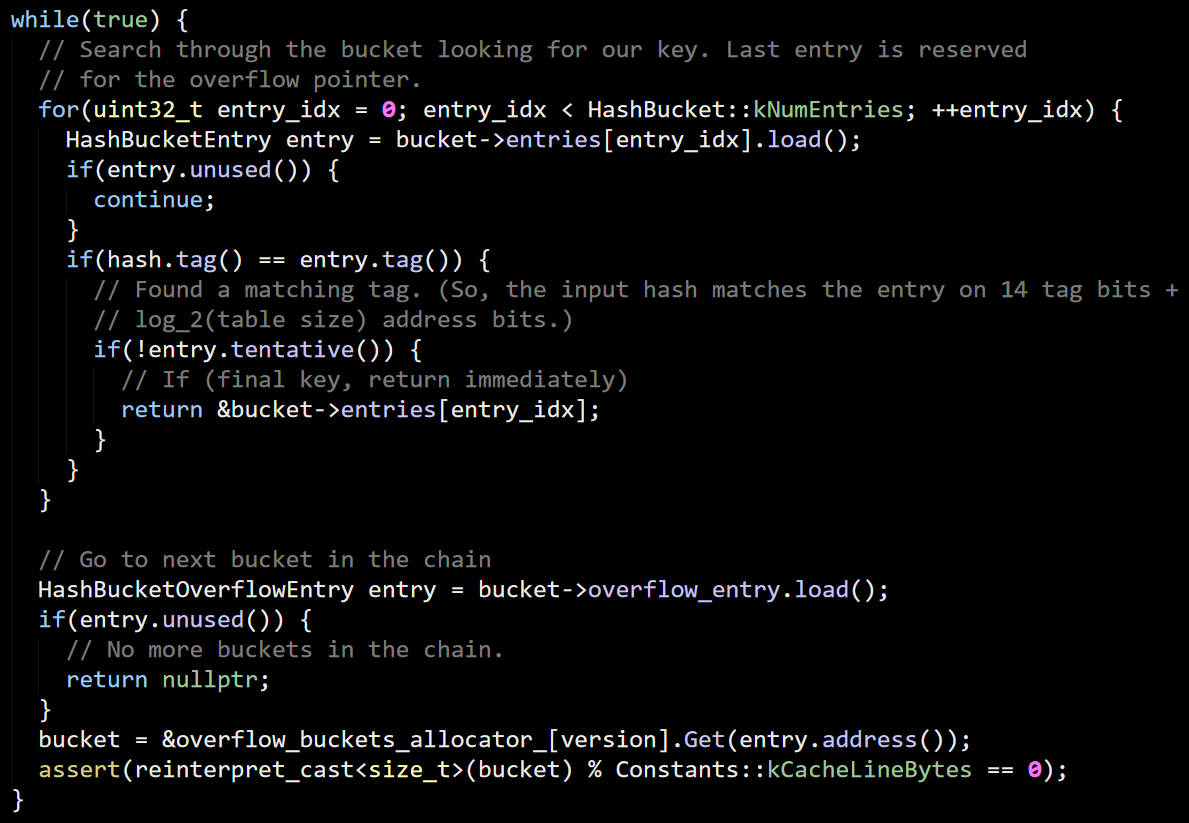

You can see that there is a bunch of stuff going on here. First, we run over the known entries in the bucket, trying to find an entry with the same tag. You can see the tentative usage, which is used to sync up between concurrent readers and writers. There may be more items in the bucket than we have space for, so there is also the concept of overflow. This is basically a linked list of 7 items at a time, and it is likely to be pretty fast (frequently already in the higher tiers of the cache for commonly accessed data).

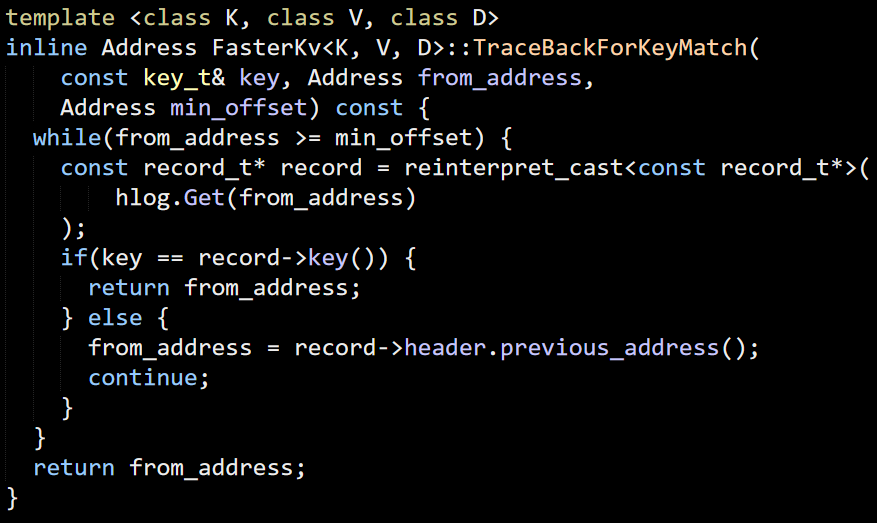
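The lookup described above can be sketched roughly like this. It is a simplified, single-threaded stand-in of my own, with hypothetical names (`Entry`, `Bucket`, `FindTag`) and plain fields instead of the packed atomic word. I'm assuming that an address of 0 marks an unused slot and that tentative entries are skipped by readers.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Simplified stand-ins, not the FASTER types.
struct Entry {
  uint64_t address = 0;    // 0 marks an unused slot (assumption)
  uint16_t tag = 0;
  bool tentative = false;  // set while a writer is still claiming the slot
};

struct Bucket {
  static constexpr size_t kNumEntries = 7;
  Entry entries[kNumEntries];
  Bucket* overflow = nullptr;  // next chunk of 7 entries, if any
};

// Scans the 7 entries of each bucket in the overflow chain and returns the
// first used, non-tentative entry whose tag matches, or nullptr if none does.
inline const Entry* FindTag(const Bucket* bucket, uint16_t tag) {
  for (; bucket != nullptr; bucket = bucket->overflow) {
    for (const Entry& e : bucket->entries) {
      if (e.address != 0 && e.tag == tag && !e.tentative) return &e;
    }
  }
  return nullptr;
}
```

Each hop along the overflow chain is one more cache line to pull in, which is why a short chain of 7-entry chunks stays cheap for commonly accessed data.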

Now that we have the entry, let’s see what is done with it. I’m currently reading through the InternalRead code. Just finding the matching hash doesn’t really help us, since we may have hash collisions. This is handled here:

This is the first time that we actually run into the hybrid log (hlog here). But the basic idea is pretty obvious. Either the key matches, or we have a pointer to the previous entry. I’m not seeing any handling of a complete mismatch, though. I’m pretty sure that this is a bug (the behavior is different in the C# version).
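For reference, this is roughly what I would expect a collision walk to look like, including the complete mismatch case. It is a toy model of my own, not FASTER's code: the "log" is just a vector, an address is an index into it, and `Record`, `Log`, `TraceBack` and `kInvalidAddress` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

constexpr uint64_t kInvalidAddress = 0;  // assumed sentinel for "no record"

struct Record {
  uint64_t key;
  uint64_t value;
  uint64_t previous_address;  // older record that shared the same hash entry
};

// Toy stand-in for the hybrid log: an address is an index into the vector.
// Index 0 is reserved so that kInvalidAddress never names a real record.
using Log = std::vector<Record>;

// Follows previous_address links until the key matches or the chain ends.
inline const Record* TraceBack(const Log& log, uint64_t address, uint64_t key) {
  while (address != kInvalidAddress) {
    const Record& r = log[address];
    if (r.key == key) return &r;
    address = r.previous_address;
  }
  return nullptr;  // complete mismatch: no record in the chain has this key
}
```

With a chain of three records that collided on the same hash entry, `TraceBack` returns the newest record whose key matches, and `nullptr` when the chain runs out without a match.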

This is enough for now. From here, the InternalRead code starts to do things with the hlog, state machine, etc. I’m going to handle that in a separate post.

More posts in "Reviewing FASTER" series:

- (06 Sep 2018) Summary

- (05 Sep 2018) When the data hits the disk

- (04 Sep 2018) Reading data from disk

- (03 Sep 2018) The hash structure

- (31 Aug 2018) Working with the file system

- (30 Aug 2018) Digging into the C++ impl

- (29 Aug 2018) Let’s check these numbers

- (28 Aug 2018) Let’s start with managed code

- (27 Aug 2018) Reading the paper
