FASTER is an interesting project, with some unique approaches to solving its tasks that I haven’t encountered before. When I initially read the paper about a year or so ago, I was impressed with what they were doing, even though I didn’t quite grasp exactly what was going on. After reading the code, this is now much clearer. I don’t remember where I read it, but I remember reading a Googler talking about the difference between Microsoft and Google with regards to publishing technical papers. Google would only publish something after it has been in production for a while (and is probably ready to sunset), while Microsoft would publish papers about software that hasn’t been deployed yet.

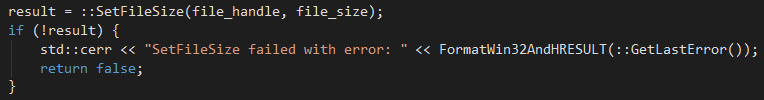

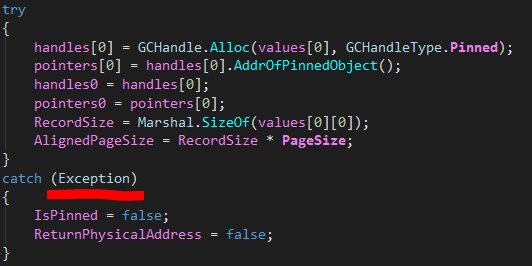

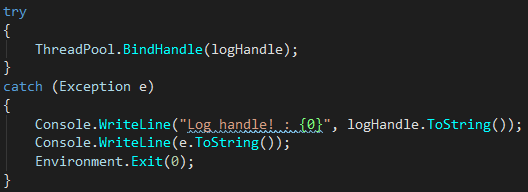

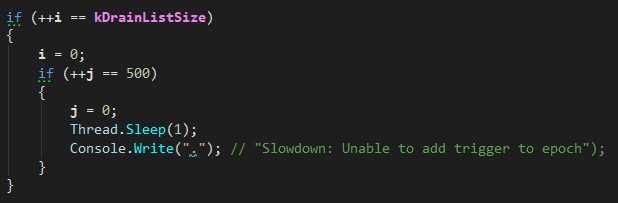

The reason I mention this is that FASTER isn’t suitable for production. Not by a long shot. I’m talking about issues such as swallowing errors, writing to the console as an error handling approach, calling sleep(), and a lack of logging / tracing / visibility into what is going on in the system. In short, FASTER looks like it was produced to support the paper. It is proof of concept / research code, not something that you can take and use.

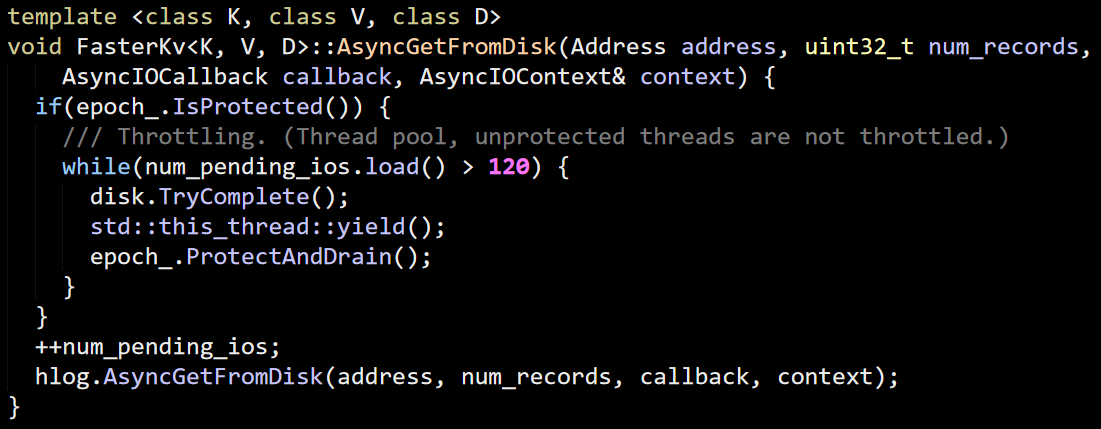

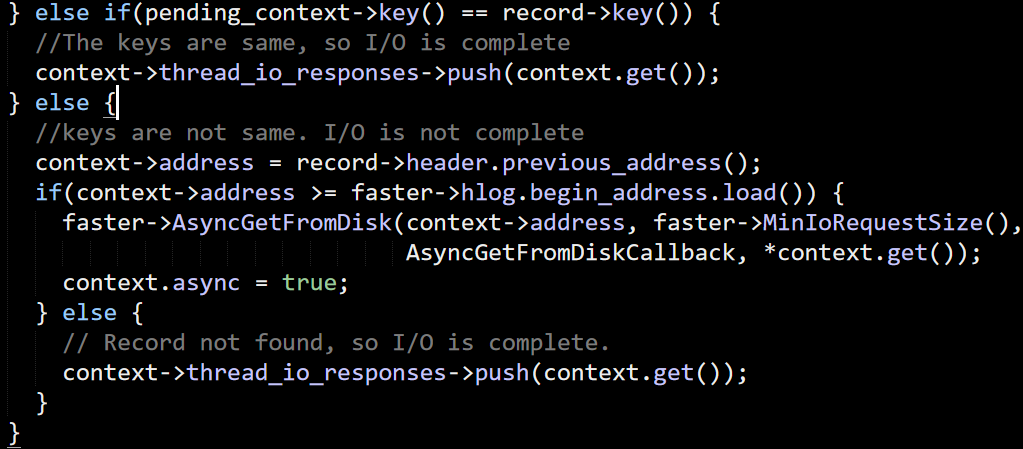

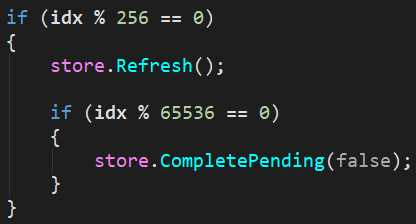

You can see it clearly in the way that the system is designed to be operated. You have dedicated threads that process requests as fast as they possibly can. However, there is no concept of working in any kind of operational environment. You can’t start using FASTER from an ASP.Net MVC app, for example. The models are just too different. I can think of a few ways to build a server using the FASTER model, but they are all pretty awkward and very specialized. This leads to the next issue with the project: it is a highly specialized solution.

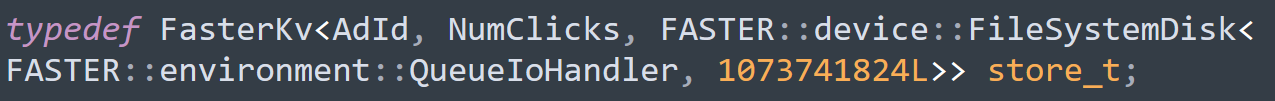

It isn’t meant for general consumption. In fact, as far as I can figure out, this is perfect if you have a relatively small working set that you do a lot of operations on. The examples I have seen given are related to tracking ads, which is a great example. If you want to store impressions on an ad, the set of active ads is going to be pretty small, but you are going to have a lot of impressions on them. For other stuff, I don’t see a lot of usage scenarios.

FASTER is limited in the following ways:

- Get / Set / Update only – no way to query

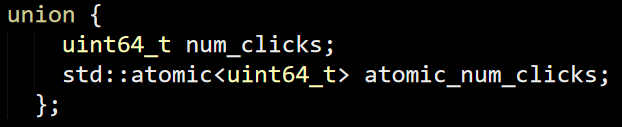

- No support for atomic operations on more than a single key

- Can support fixed length values only

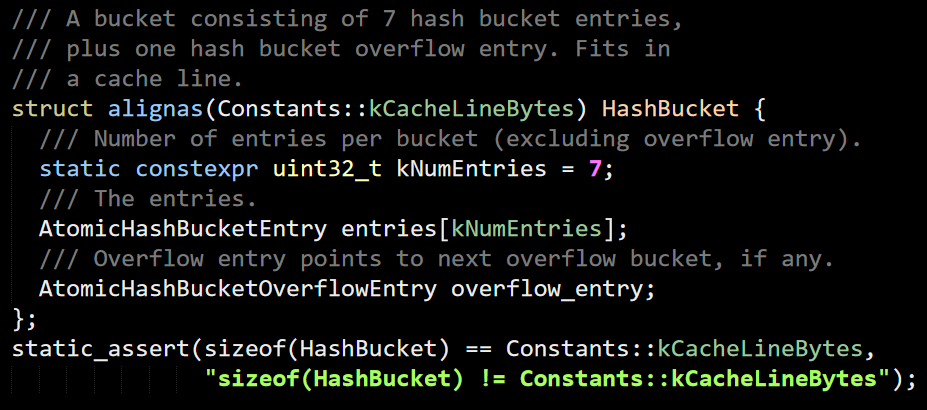

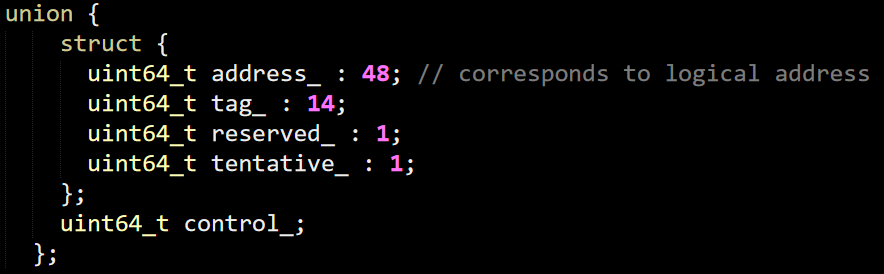

- Crash means data loss (of the most recent 14.4 GB, usually)

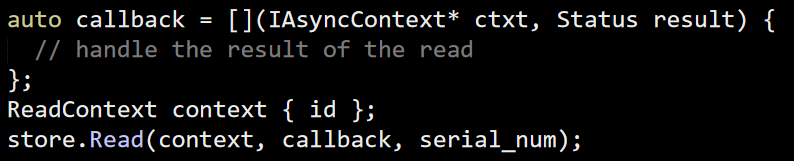

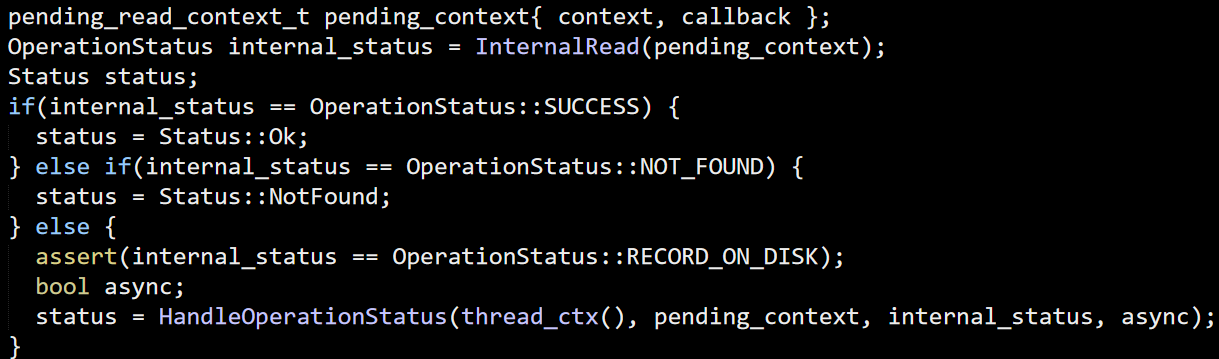

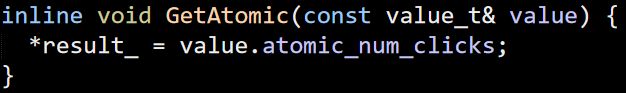

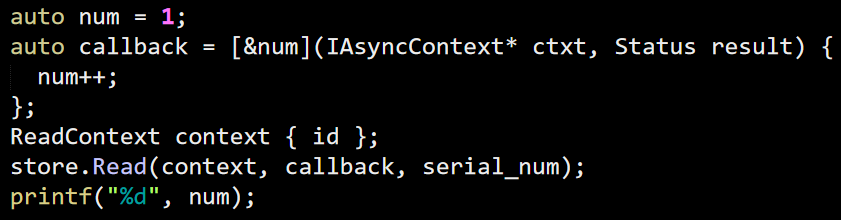

- The API is awkward to use, and you need to write a bit of (non-trivial) code for each key/value type you want to store.

- No support for compaction of data beyond dropping the oldest entries

Some of these issues can be mitigated. For example, compaction can be implemented, and you can force data to be written to disk faster if you want to, but those aren’t in the box and require careful handling.

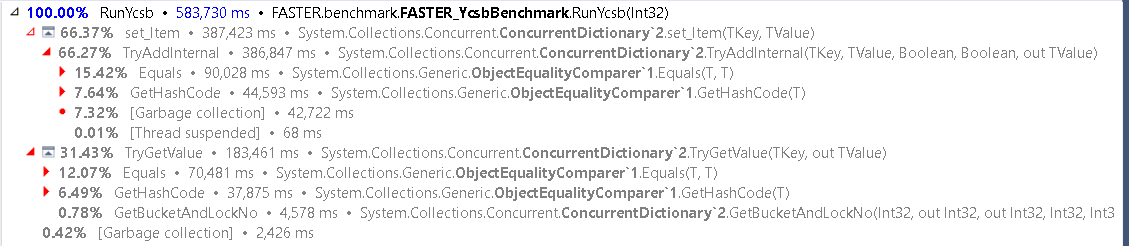

Now that I have gone over the code, I’m not quite sure what the point was, to be honest. In terms of performance, you can get about 25% of the achieved performance by just using ConcurrentDictionary in .NET, and I’m pretty sure that you can do better by literally just using a concurrent hash map in C++. This isn’t something that you can use as a primary data store, after all, so I wonder why not just keep all the data in memory and be done with it.
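To make the "just keep it all in memory" alternative concrete, here is a minimal sketch of an ad impression counter. It is written in Python rather than .NET, so a lock-striped dictionary stands in for what `ConcurrentDictionary.AddOrUpdate` would give you. The names `ImpressionCounter`, `record`, `get` and `simulate` are made up for this illustration and are not part of FASTER or any library. It says nothing about relative performance; it only shows how little code the in-memory approach needs.

```python
import threading
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor


class ImpressionCounter:
    """In-memory counter keyed by ad id (hypothetical, for illustration only)."""

    def __init__(self, stripes=16):
        # Lock striping: each key maps to one of a few locks, so updates
        # to different ads rarely contend with each other.
        self._locks = [threading.Lock() for _ in range(stripes)]
        self._shards = [defaultdict(int) for _ in range(stripes)]

    def _index(self, ad_id):
        return hash(ad_id) % len(self._locks)

    def record(self, ad_id, count=1):
        i = self._index(ad_id)
        with self._locks[i]:
            self._shards[i][ad_id] += count

    def get(self, ad_id):
        i = self._index(ad_id)
        with self._locks[i]:
            # .get() avoids inserting a zero entry for unknown ads.
            return self._shards[i].get(ad_id, 0)


def simulate(counter, ad_ids, impressions_per_ad, workers=4):
    """Each worker records the same number of impressions for every ad."""
    def work(_worker_id):
        for ad_id in ad_ids:
            for _ in range(impressions_per_ad):
                counter.record(ad_id)

    with ThreadPoolExecutor(max_workers=workers) as pool:
        list(pool.map(work, range(workers)))


counter = ImpressionCounter()
simulate(counter, ["ad-1", "ad-2", "ad-3"], impressions_per_ad=1000)
print(counter.get("ad-1"))  # 4 workers * 1000 impressions = 4000
```

Like FASTER without an explicit flush, this loses everything on a crash. The difference is that it makes no pretense of being anything other than an in-memory structure.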

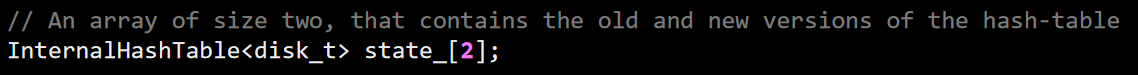

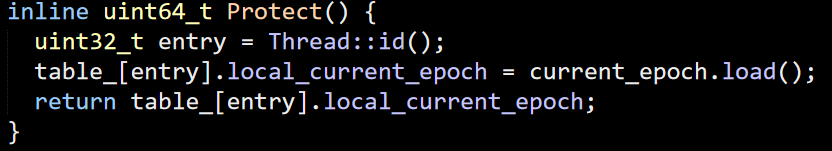

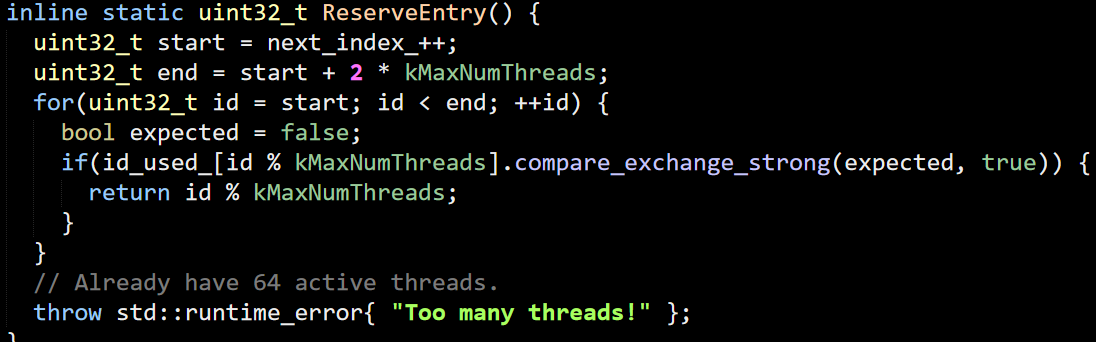

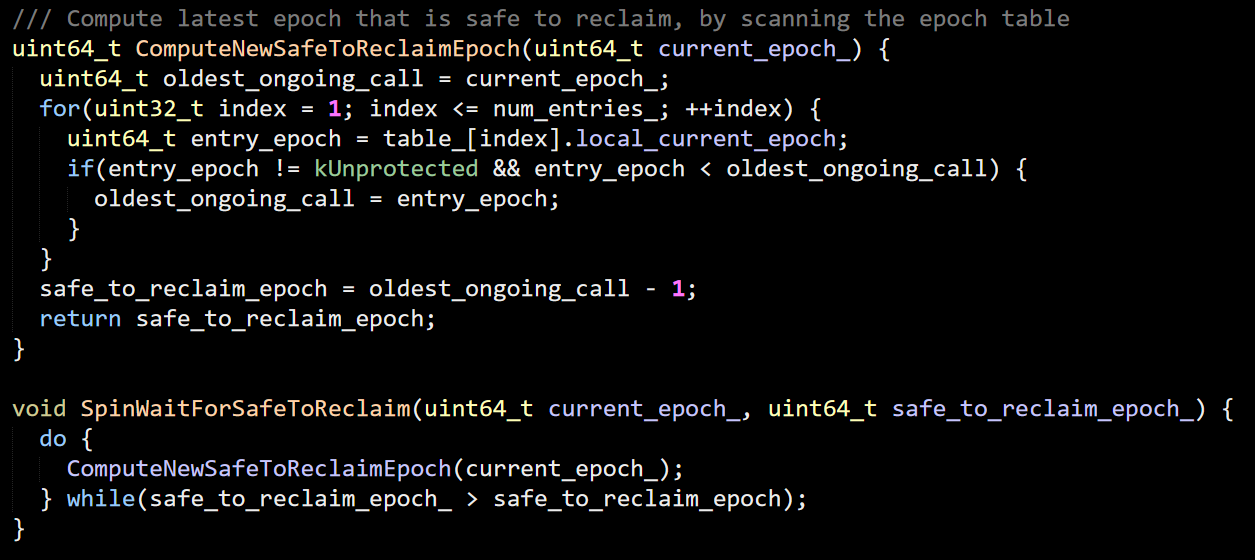

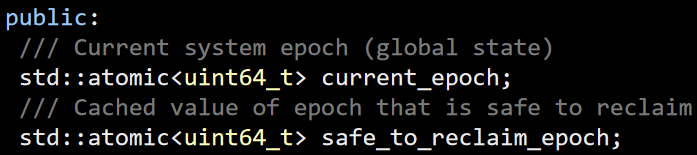

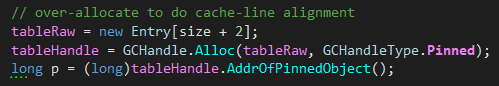

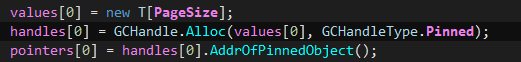

I liked the mutable / read only portions of the log, that is certainly a really nice way to do it, and I’m sure that the epoch idea simplified things during the implementation with the ability to not worry about concurrent accesses. However, the code is complex and I’m pretty sure that it is not going to be fun to debug / work with in real world scenarios.
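The mutable / read only split can be illustrated with a toy model. The sketch below is single threaded and keeps everything in a Python list, so it leaves out epochs, concurrency and flushing to disk entirely, and it is not how FASTER is actually implemented. It only shows the basic rule as described in the paper: a record that still sits in the mutable tail is updated in place, while a record that has fallen into the read only region gets a new copy appended at the tail. `HybridLogSketch` and its members are hypothetical names.

```python
class HybridLogSketch:
    """Toy single-threaded log with a read-only region and a mutable tail."""

    def __init__(self, mutable_size=4):
        self.log = []      # append-only list of [key, value] records
        self.index = {}    # key -> address (position in the log) of the latest record
        self.mutable_size = mutable_size

    @property
    def read_only_address(self):
        # Records below this address are treated as immutable.
        return max(0, len(self.log) - self.mutable_size)

    def upsert(self, key, value):
        addr = self.index.get(key)
        if addr is not None and addr >= self.read_only_address:
            # The record is in the mutable tail: update it in place.
            self.log[addr][1] = value
            return "in-place"
        # New key, or the latest record is read only: append a new version.
        self.log.append([key, value])
        self.index[key] = len(self.log) - 1
        return "append"

    def read(self, key):
        addr = self.index.get(key)
        return None if addr is None else self.log[addr][1]


hlog = HybridLogSketch(mutable_size=2)
steps = [
    hlog.upsert("a", 1),  # new key, appended at address 0
    hlog.upsert("a", 2),  # address 0 is still mutable
    hlog.upsert("b", 1),  # appended at address 1
    hlog.upsert("c", 1),  # appended at address 2, address 0 becomes read only
    hlog.upsert("a", 3),  # "a" is read only now, so a new copy is appended
]
print(steps)           # ['append', 'in-place', 'append', 'append', 'append']
print(hlog.read("a"))  # 3
```

In this model hot keys keep getting updated in place near the tail, which matches the small, heavily updated working set described earlier. The stale copy of "a" at address 0 is exactly the kind of garbage that a compaction step would have to deal with.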

To sum it up, interesting codebase and approaches, but I would caution against using it for real. The perf numbers are something to salivate over, but the manner in which the benchmark was written means that they are not really applicable to any real world scenario.