Reviewing FASTER: Reading data from disk

One of the things that FASTER claims is that it is suitable for larger-than-memory datasets, thanks to its hybrid log approach. I briefly went over that code during my review, but I want to dedicate this post to discussing how FASTER works with the disk.

FASTER uses async I/O on Linux and Windows, which presents its own challenges. In particular, how do you handle an operation that needs to hit the disk (to read old data)? Another thing that I would like to discover is how it saves the data to disk. We’ll start with reading data.

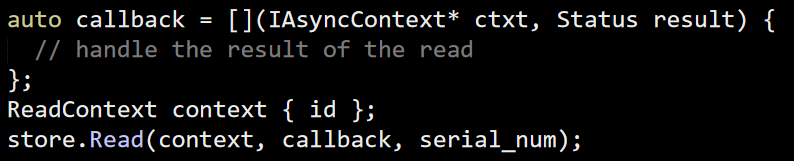

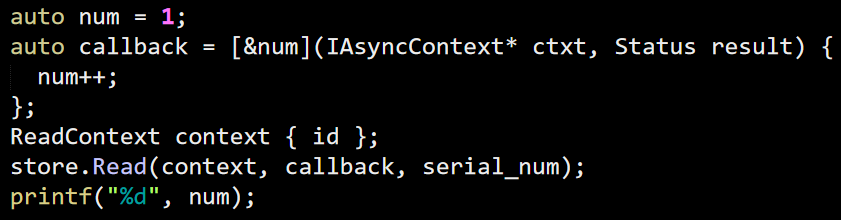

Reading in FASTER looks like this:

You pass a context, a callback and a serial number. I’m not sure what the serial number is about. I think it is related to checkpoints, but I’m not sure. You’ll be called with the results once the operation has executed.

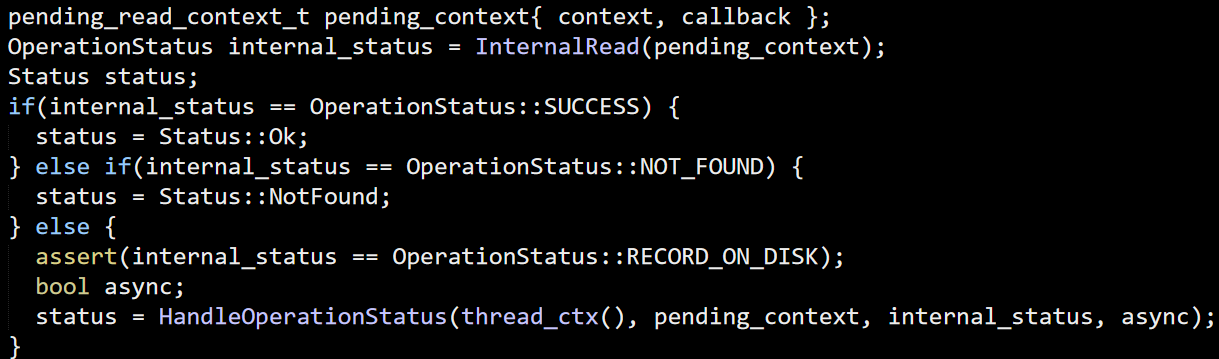

Here is the core of the Read method:

FASTER first checks the in-memory details, and if the value isn’t there, it is either not found or on disk. This is actually really interesting, because it implies that FASTER keeps track of all of the data items purely in memory. Let’s go to InternalRead and figure out how that works. We already looked into most of that in the previous post. FindEntry is called to find the entry by its hash. FASTER keeps all the hashes in memory, even while it is writing entries to disk. This way, it can easily answer whether an entry exists or not. Note that, as I read things, once FASTER holds more than a certain number of values, the hash collision rate is going to get high, often requiring expensive I/O to figure out whether the value exists.
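To make the "hashes in memory, records possibly on disk" idea concrete, here is a minimal sketch. It is not FASTER's actual index: the `HashIndex` class, the bucket layout and the use of address 0 as "no entry" are all assumptions made for illustration. The point it shows is that a miss in the index is definitive, while a hit only gives you a candidate address that still has to be checked against the real key.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Assumption for this sketch: address 0 means "no entry in this bucket".
constexpr uint64_t kInvalidAddress = 0;

// Hypothetical in-memory index: one slot per bucket, holding the log address
// of the most recent record whose key hashed into that bucket.
class HashIndex {
 public:
  explicit HashIndex(std::size_t buckets) : slots_(buckets, kInvalidAddress) {
    assert(buckets > 0);
  }

  // Remember where the latest record for this hash lives in the log.
  void Put(uint64_t hash, uint64_t address) {
    slots_[hash % slots_.size()] = address;
  }

  // Returns false when the key is definitely absent. Returns true with a
  // candidate address otherwise; the caller must still compare the key,
  // because different keys can share a bucket.
  bool FindEntry(uint64_t hash, uint64_t* address) const {
    uint64_t candidate = slots_[hash % slots_.size()];
    if (candidate == kInvalidAddress) return false;
    *address = candidate;
    return true;
  }

 private:
  std::vector<uint64_t> slots_;
};
```

With 8 buckets, hashes 42 and 50 land in the same slot, so a lookup for 50 returns the address stored for 42. That is exactly the case where the record itself has to be fetched to know whether the key is really there.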

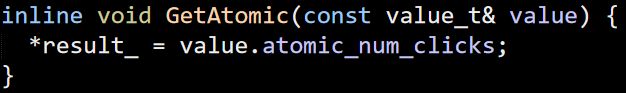

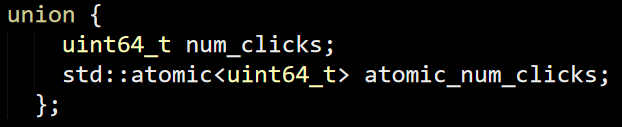

If the value is in memory, your ReadContext.GetAtomic() method will be called. Here is one such implementation:

Where the value was defined as:

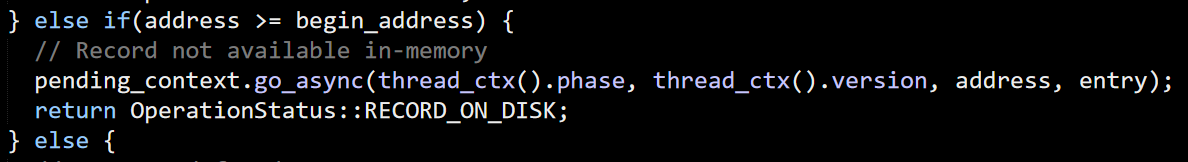

If the value has already been moved to the read-only section, FASTER will use the Get() method instead, as an optimization. If the value is in neither the mutable section nor the read-only section, it is on disk, in which case we have this code:

The go_async() method just records the status of the operation at the point where we started the async process. It doesn’t actually invoke anything async. That is done in the calling code, Read().
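The three cases described so far (mutable, read-only, on disk) boil down to comparing the record's address against a couple of boundaries in the log. Here is a rough sketch of that decision. The `LogBoundaries` struct, the `Region` enum and the `Classify` function are names I made up for this example, not FASTER's own types.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical names, for illustration only.
enum class Region { OnDisk, ReadOnly, Mutable };

struct LogBoundaries {
  uint64_t head_address;       // addresses below this are only on disk
  uint64_t read_only_address;  // [head, read_only) is in memory but immutable
};

// Decide how a record at `address` has to be read.
inline Region Classify(const LogBoundaries& log, uint64_t address) {
  assert(log.head_address <= log.read_only_address);
  if (address < log.head_address) {
    return Region::OnDisk;    // record the state and go async
  }
  if (address < log.read_only_address) {
    return Region::ReadOnly;  // nobody can modify it, a plain Get() is enough
  }
  return Region::Mutable;     // concurrent writers possible, use GetAtomic()
}
```

Only the last case needs the atomic read, since that is the only region where another thread may be updating the value in place.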

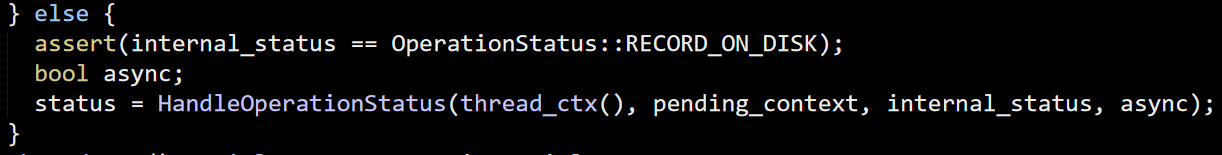

This, in turn, gets us to this piece of code:

Note that the code is full of handling of the current phase of the thread. I’m ignoring all of these for now, they aren’t important.

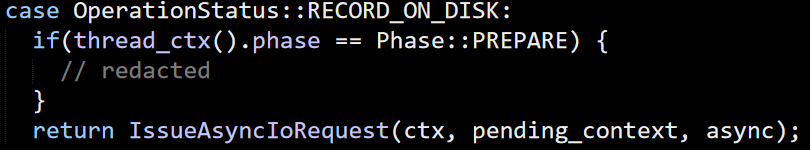

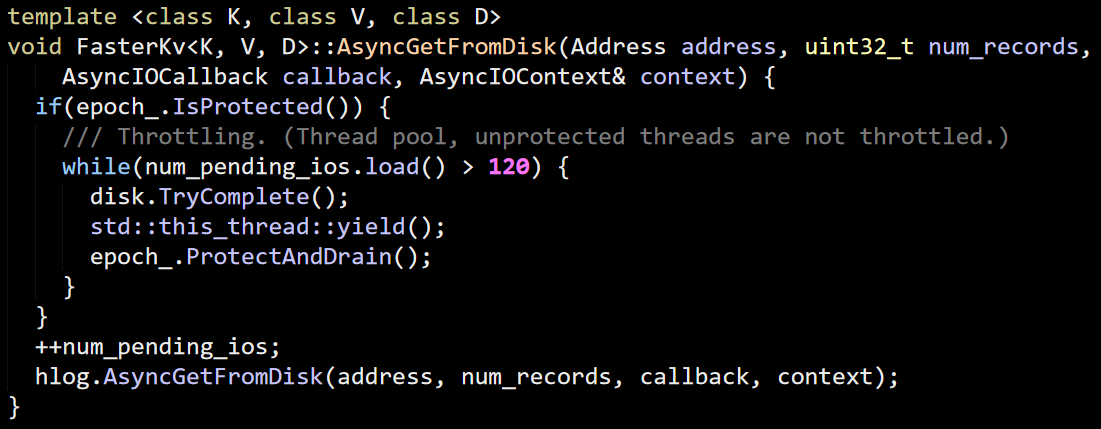

The next thing to look at is the async I/O request, which gets us to:

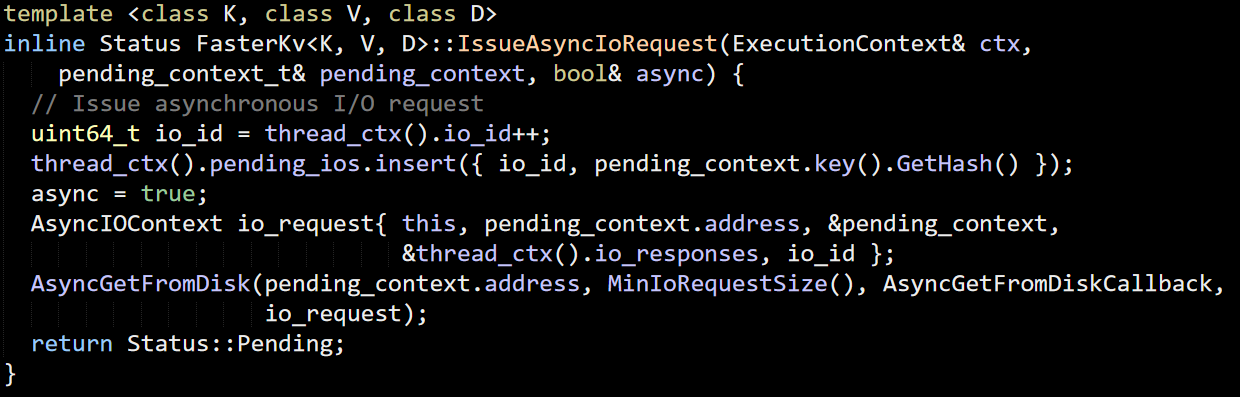

We first register the pending I/O, then actually start to process the async call. Except not really. AsyncGetFromDisk() isn’t actually doing I/O. Instead, it seems to be focused on limiting the number of concurrent I/O operations in flight.

In this case, if there are more than 120 pending I/Os, it will call io_getevents() on Linux or GetQueuedCompletionStatus() on Windows and try to process any completed I/O immediately.
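The throttling idea can be sketched without any real I/O. In the example below, the `IoThrottle` class is hypothetical and its queue of callbacks stands in for the kernel's completion queue, so "completing" a request here just means running its callback. It is only meant to show the shape of the loop: before submitting, drain completions while too many requests are outstanding.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <functional>
#include <utility>

// Hypothetical stand-in for an async I/O layer with a cap on pending requests.
class IoThrottle {
 public:
  explicit IoThrottle(std::size_t max_pending) : max_pending_(max_pending) {}

  // Queue a request. If more than max_pending_ requests are outstanding,
  // process completions first (this is where the caller stalls).
  void Submit(std::function<void()> on_complete) {
    while (pending_.size() > max_pending_) {
      TryComplete();
    }
    pending_.push_back(std::move(on_complete));
  }

  // Run the callback of the oldest pending request, if there is one.
  // Returns true if a callback ran.
  bool TryComplete() {
    if (pending_.empty()) return false;
    std::function<void()> callback = std::move(pending_.front());
    pending_.pop_front();
    callback();
    return true;
  }

  std::size_t pending() const { return pending_.size(); }

 private:
  std::size_t max_pending_;
  std::deque<std::function<void()>> pending_;
};
```

In a real system the drain loop would poll the OS for finished requests rather than complete them itself, which is why the submitting thread can end up waiting there.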

ProtectAndDrain is more interesting. It asks the epoch to complete any pending operations. Such actions are the creation of a new segment file or the moving of a segment from memory to disk.

I find it interesting that FASTER chose to stall the thread until all pending I/Os are completed. Given their model, it would make more sense to push such an operation into the thread ops and resume processing other requests. I guess that they either expect this to be a rare case or are using it to throttle overall system load. You might also have noticed the num_records argument. It is used in the hlog method:

That was confusing. I expected this to be the number of records (as in, how many records are read from disk), but it is actually the number of bytes to read.

The C++ memory model makes async code a bit complex. In particular, if you look at the first code snippet in this post, you’ll see that we pass a stack-allocated struct to the Read() method. Because this method can complete in an async manner, what FASTER will do is perform a deep copy of the data. Combine that with lambdas capturing state, and I’m pretty sure that this code will cause a very subtle memory corruption in rare cases.

What I think will happen is that we capture the stack variable by reference and, in 99% of the cases, run in sync mode, meaning that there will be no issues. Only if the value needs to be read from disk will you get an issue, because the function will have already returned while the callback (with its captured reference now pointing to something completely different) is still active. I think that a C++ developer would recognize this, and the fact that C++ requires you to be explicit about your captures makes this a lot easier to deal with.

It is important to note that there is no good way to actually handle the async nature of certain operations here. Any code that actually handles the result of the operation needs to go in the callback.
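Here is a small sketch of the capture problem, written so that it stays on the safe side. The `StartRead` and `CompletePending` functions and the pending queue are hypothetical and only simulate a read that completes after the caller has returned. The callback copies the stack local into the closure. Capturing it by reference instead would leave the callback holding a dangling reference by the time it runs.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

using Callback = std::function<void()>;

// Simulates starting a read that will only complete later, after this
// function has returned (as happens when the value is on disk).
void StartRead(std::vector<Callback>& pending, std::string* out) {
  std::string key = "users/1";  // stack local, destroyed when we return

  // Safe: `key` is copied into the closure, so it outlives this frame.
  // Writing [&key, out] here would compile, but the callback would then
  // read a destroyed object when it finally runs.
  pending.push_back([key, out] { *out = "read " + key; });
}

// Simulates the later point at which completed I/Os invoke their callbacks.
void CompletePending(std::vector<Callback>& pending) {
  for (Callback& callback : pending) {
    callback();
  }
  pending.clear();
}
```

Note that `out` is still a raw pointer, so whoever owns the result has to keep it alive until the callback has run. The copy only fixes the lifetime of `key`.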

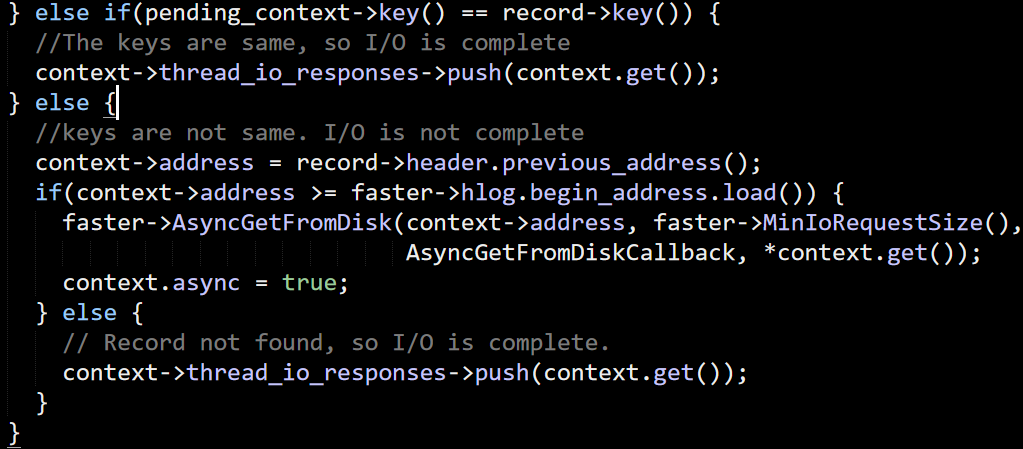

Another aspect to remember is that just reading from the disk doesn’t mean that you got the right value. You might have gotten a hash collision:

In other words, if you have a way to generate hash collisions, then as soon as the values hit the disk, you are going to be faced with making N disk I/O requests to find out whether you have the value or not. Denial of service attacks against hash tables are well known and represent a significant risk to services.

Next on my list is seeing how FASTER actually writes the data to disk, but that will be in a separate post.
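To see why collisions turn into N reads, consider a sketch where every on-disk record points to the previous record in the same hash chain. Everything here is assumed for the sake of the example: the `Record` layout, the `FakeDisk` type (an in-memory map that counts reads) and the convention that address 0 ends the chain.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>

// Hypothetical on-disk record: colliding keys form a linked chain.
struct Record {
  std::string key;
  std::string value;
  uint64_t previous_address;  // next candidate in the chain, 0 = end of chain
};

// Stand-in for the disk: maps address to record and counts every read.
struct FakeDisk {
  std::map<uint64_t, Record> records;
  int reads = 0;

  const Record* Read(uint64_t address) {
    ++reads;  // each call models one disk I/O
    auto it = records.find(address);
    return it == records.end() ? nullptr : &it->second;
  }
};

// Walk the chain starting at `address` until the key matches or the chain ends.
const Record* Lookup(FakeDisk& disk, uint64_t address, const std::string& key) {
  while (address != 0) {
    const Record* record = disk.Read(address);
    if (record == nullptr) return nullptr;
    if (record->key == key) return record;  // real match, not just same hash
    address = record->previous_address;     // collision, keep walking
  }
  return nullptr;
}
```

With three colliding records in the chain, finding the oldest one costs three reads, and so does discovering that a key isn't there at all.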

More posts in "Reviewing FASTER" series:

- (06 Sep 2018) Summary

- (05 Sep 2018) When the data hits the disk

- (04 Sep 2018) Reading data from disk

- (03 Sep 2018) The hash structure

- (31 Aug 2018) Working with the file system

- (30 Aug 2018) Digging into the C++ impl

- (29 Aug 2018) Let’s check these numbers

- (28 Aug 2018) Let’s start with managed code

- (27 Aug 2018) Reading the paper
