Reviewing FASTER: Let’s check these numbers

Before heading to the C++ implementation, I thought I would take the time to walk through the FASTER codebase as it performs a simple operation. The access violation error that I previously ran into has been fixed, so I could run the benchmark. Here is my configuration:

I got the following results, when running on a single thread.

Total 142,603,719 ops done in 30 secs.

This is about 4.7 million operations per second, which is certainly nice. I then decided to compare this to ConcurrentDictionary to see what kind of performance that would give me. I made the following changes, which I’ll admit are a pretty brute-force way to go about it, and the result is probably significantly less efficient than what I could write with more care. Nevertheless, using ConcurrentDictionary with a single thread in the same benchmark gives me:

Total 84,729,062 ops done in 30 secs.

There isn’t much call for using this on a single thread, though. My machine has 20 cores, so let’s see what happens when I give FASTER its head, shall we?

2,330,054,219 ops done in 30.021 secs.

That is impressive, with about 77.6 million operations per second. On the other hand, this is what happened when I ran with 20 threads and ConcurrentDictionary:

671,071,685 ops done in 30.024 secs.

This gives us “only” about 22.4 million operations per second.
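The per-second figures and the gap between the two stores can be rechecked with a few lines of arithmetic. This is only a sketch: the totals and durations are the ones reported above, and `ThroughputCheck` and `OpsPerSecond` are names made up for this illustration.

```csharp
using System;

public static class ThroughputCheck
{
    // Operations per second for a run of totalOps operations lasting the given number of seconds.
    public static double OpsPerSecond(long totalOps, double seconds) => totalOps / seconds;

    public static void Main()
    {
        // Single-thread totals and durations, as reported in this post.
        double faster1 = OpsPerSecond(142_603_719, 30);
        double dictionary1 = OpsPerSecond(84_729_062, 30);

        // 20-thread totals and durations, as reported in this post.
        double faster20 = OpsPerSecond(2_330_054_219, 30.021);
        double dictionary20 = OpsPerSecond(671_071_685, 30.024);

        Console.WriteLine($"1 thread:   FASTER {faster1:N0}/s, ConcurrentDictionary {dictionary1:N0}/s, ratio {faster1 / dictionary1:F2}x");
        Console.WriteLine($"20 threads: FASTER {faster20:N0}/s, ConcurrentDictionary {dictionary20:N0}/s, ratio {faster20 / dictionary20:F2}x");
    }
}
```

With these inputs, FASTER comes out roughly 1.7x ahead on a single thread and roughly 3.5x ahead on 20 threads.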

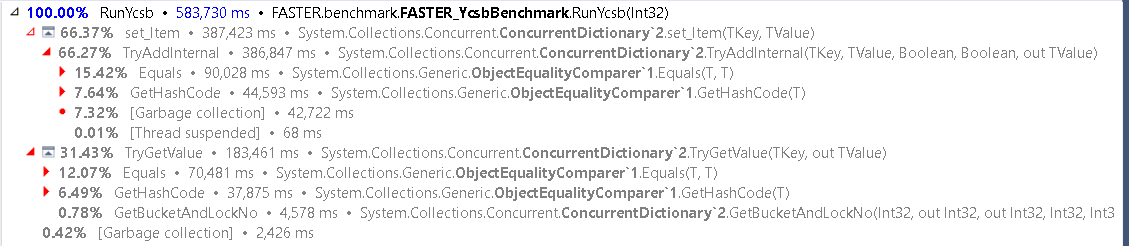

It is clear that FASTER is much better, right? The problem is that it isn’t faster enough. What do I mean by this? I used idiomatic C# for the ConcurrentDictionary usage and got a bit more than 1/4 of FASTER’s perf. The FASTER codebase is doing native calls and unsafe manipulation, dedicated allocation, etc. I expect to get better perf at that point, but “merely” a roughly 3.5x improvement isn’t enough for the kind of effort that was put into this. I ran the concurrent dictionary in a sampling profiler, with 20 threads, and I got the following results.

On the other hand, using FASTER for the same scenario gives:

This is really interesting. You can see that the FASTER option spends all its time either in InternalUpsert or inside the RunYcsb method (which is actually the store.Read() method, which was inlined).

What is more interesting is that there are no additional calls there. The InternalUpsert call is 219 lines of code, and the code uses [MethodImpl(MethodImplOptions.AggressiveInlining)] quite aggressively (pun intended). On the other hand, the ConcurrentDictionary implementation has to make virtual method calls on each call.

There are several ways to handle this, including using a generic struct that can eliminate most of the virtual calls. This is effectively what FASTER is doing, without the generics. FASTER also benefits from pre-allocating everything upfront. If you look at the profiler results, you can see that these are the major sources of “slowness” in the benchmark.
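The generic struct approach mentioned above can be sketched roughly like this. Nothing here is taken from FASTER or from ConcurrentDictionary: `IHasher`, `XorFoldHasher` and `Buckets` are hypothetical names, and whether the JIT actually inlines the call depends on the runtime. The part that does hold is that .NET compiles a separate copy of a generic method for each value type argument, so the call target is known statically instead of going through interface dispatch.

```csharp
using System;

// Hypothetical interface, for illustration only.
public interface IHasher
{
    int Hash(long key);
}

// A struct implementation; as a value type it can be used as a generic type argument
// without any boxing.
public struct XorFoldHasher : IHasher
{
    // Folds the upper 32 bits of the key into the lower 32 bits.
    public int Hash(long key) => (int)(key ^ (key >> 32));
}

public static class Buckets
{
    // Interface-typed parameter: Hash is dispatched through the interface,
    // and passing a struct here boxes it first.
    public static int ViaInterface(IHasher hasher, long key, int bucketCount)
        => (hasher.Hash(key) & int.MaxValue) % bucketCount;

    // Struct-constrained generic parameter: the JIT generates code specialized for
    // THasher, so the Hash call is a direct call that is a candidate for inlining.
    public static int ViaGenericStruct<THasher>(THasher hasher, long key, int bucketCount)
        where THasher : struct, IHasher
        => (hasher.Hash(key) & int.MaxValue) % bucketCount;
}

public static class Program
{
    public static void Main()
    {
        var hasher = new XorFoldHasher();
        // Both calls compute the same bucket; only the dispatch mechanism differs.
        Console.WriteLine(Buckets.ViaInterface(hasher, 12345, 16));
        Console.WriteLine(Buckets.ViaGenericStruct(hasher, 12345, 16));
    }
}
```

The trade-off is that every distinct struct type produces its own compiled copy of the method, which is usually a fine price on a hot path like a hash table lookup.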

Given the nature of the benchmark, I feel that it is unfair to compare FASTER to persistent data stores; it should be compared more closely to a concurrent hash map. Given that this is effectively what FASTER is doing in this showcase benchmark, that seems a lot more fair. I checked the literature and we have this paper talking about concurrent hash maps where we see (Figure 2.a) numbers near 300 million ops/sec for pure writes and 600 million ops/sec for reads.

More posts in "Reviewing FASTER" series:

- (06 Sep 2018) Summary

- (05 Sep 2018) When the data hits the disk

- (04 Sep 2018) Reading data from disk

- (03 Sep 2018) The hash structure

- (31 Aug 2018) Working with the file system

- (30 Aug 2018) Digging into the C++ impl

- (29 Aug 2018) Let’s check these numbers

- (28 Aug 2018) Let’s start with managed code

- (27 Aug 2018) Reading the paper

Comments

Thanks for these reviews. I'd be interested to compare with this non blocking .NET ConcurrentDictionary-compatible dictionary https://github.com/VSadov/NonBlocking (from a Microsoft dev).

Simon, I tested that, and it was about 20% more costly than the BCL version. I didn't check exactly why, though.
