In the previous post, I looked into the Bleve search engine library. Now, I want to go into the codebase and answer a simple question: how does Bleve handle the sorting of queries? Here is my code:

During the search process, we have a visitor defined:

This is called on every field (and term value) that is found in the query (it looks like only the relevant ones are touched, but that is still a lot). Eventually, this gets here:

At this point, we can see that we basically gather a list of all the terms into the values field inside the UpdateVisitor. This is important, because we are later going to rely on the same order of iteration, as you can see in the Value call. Even though there is a DocumentMatch being passed there, it isn’t actually being used. Instead, it always takes the first element in the values.

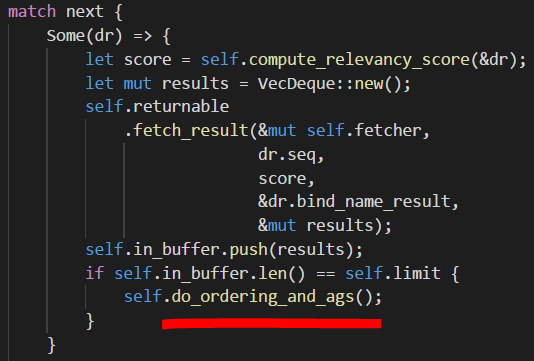
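To make the gather-then-consume pattern concrete, here is a minimal Go sketch of it. This is not Bleve's code: `sortField`, `fieldTerm` and `buildSortKey` are names I made up for illustration, and the real implementation has more going on. The sketch only shows a visitor that collects terms for one field, a `Value` call that takes the first collected term and resets the state for the next document, and a helper that merges one value per sort field into a single slice.

```go
package main

import "fmt"

// sortField is a hypothetical, simplified stand-in for a sort-by-field
// implementation. It is not Bleve's actual type.
type sortField struct {
	field  string
	values []string
}

// UpdateVisitor records a term only if it belongs to the field we sort by.
func (s *sortField) UpdateVisitor(field string, term []byte) {
	if field == s.field {
		s.values = append(s.values, string(term))
	}
}

// Value returns the first gathered term for the current document and
// clears the gathered terms so the next document starts from scratch.
func (s *sortField) Value() string {
	if len(s.values) == 0 {
		return ""
	}
	v := s.values[0]
	s.values = s.values[:0]
	return v
}

// fieldTerm is a hypothetical (field, term) pair as seen by the visitor.
type fieldTerm struct {
	field string
	term  string
}

// buildSortKey feeds every term of one document to every sort field,
// then merges one value per sort field into a single slice.
func buildSortKey(order []*sortField, terms []fieldTerm) []string {
	for _, ft := range terms {
		for _, sf := range order {
			sf.UpdateVisitor(ft.field, []byte(ft.term))
		}
	}
	key := make([]string, 0, len(order))
	for _, sf := range order {
		key = append(key, sf.Value())
	}
	return key
}

func main() {
	order := []*sortField{{field: "name"}, {field: "age"}}
	terms := []fieldTerm{{"name", "oren"}, {"age", "35"}, {"name", "ayende"}}
	fmt.Println(buildSortKey(order, terms))
}
```

Note that even in this toy version, every document pays for a byte-to-string conversion per term and a fresh slice for the merged key.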

This is called at the per-document level, so there is an expectation that the values will be smaller. On the other hand, during the sorting process, we’ll merge it all into a single location per document, as you can see:

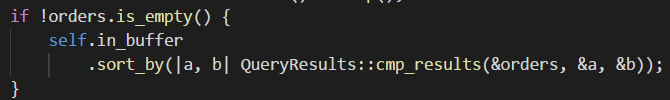

In other words, the doc.Sort is going to end up with an array of the values that we want to sort by. At this point, sorting is done by maintaining a heap and pushing values to it until we get the top N elements. Pretty simple overall.
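The heap-based top N selection can be sketched in Go with the standard `container/heap` package. Again, this is my own illustration under simplifying assumptions, not Bleve's collector: the `doc` type, the `less` comparison (plain ascending string comparison, field by field) and `topN` are all hypothetical. The idea is to keep at most N documents in a heap whose root is the worst one kept so far, and to replace that root whenever a better document shows up.

```go
package main

import (
	"container/heap"
	"fmt"
	"sort"
)

// doc is a hypothetical match carrying its merged sort values.
type doc struct {
	ID   string
	Sort []string
}

// less compares two docs by their sort values, field by field (ascending).
func less(a, b doc) bool {
	for i := range a.Sort {
		if i >= len(b.Sort) {
			return false
		}
		if a.Sort[i] != b.Sort[i] {
			return a.Sort[i] < b.Sort[i]
		}
	}
	return len(a.Sort) < len(b.Sort)
}

// worstFirst is a heap whose root is the worst-ranked doc currently kept.
type worstFirst []doc

func (h worstFirst) Len() int            { return len(h) }
func (h worstFirst) Less(i, j int) bool  { return less(h[j], h[i]) }
func (h worstFirst) Swap(i, j int)       { h[i], h[j] = h[j], h[i] }
func (h *worstFirst) Push(x interface{}) { *h = append(*h, x.(doc)) }
func (h *worstFirst) Pop() interface{} {
	old := *h
	last := old[len(old)-1]
	*h = old[:len(old)-1]
	return last
}

// topN returns the n best docs in sorted order, holding at most n in memory.
func topN(docs []doc, n int) []doc {
	if n <= 0 {
		return nil
	}
	h := &worstFirst{}
	for _, d := range docs {
		if h.Len() < n {
			heap.Push(h, d)
		} else if less(d, (*h)[0]) {
			// d beats the worst doc we hold, so it takes its place.
			(*h)[0] = d
			heap.Fix(h, 0)
		}
	}
	out := []doc(*h)
	sort.Slice(out, func(i, j int) bool { return less(out[i], out[j]) })
	return out
}

func main() {
	docs := []doc{
		{"d1", []string{"c"}}, {"d2", []string{"a"}},
		{"d3", []string{"d"}}, {"d4", []string{"b"}},
	}
	for _, d := range topN(docs, 2) {
		fmt.Println(d.ID, d.Sort)
	}
}
```

With this approach the heap never grows beyond N entries, so the cost of a query with many matches is dominated by building the sort values, not by the sort itself.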

It also allocates quite heavily, with arrays, slices and strings. I don’t have a good feeling for whether this will actually be a problem in Go, but it is something to consider. In C#, I would be very worried about the eventual costs of all of these allocations.