Searching through textPart I, full text search in under 200 lines of code

Full text search is a really interesting topic, which I have been dipping my toes into again and again over the years. It is a rich area of research, and there has been quite a few papers, books and articles about the topic. I read a bunch of projects for doing full text search, and I have been using Lucene for a while.

I thought that I would write some code to play with full text search and see where that takes me. This is a side project, and I hope it will be an interesting one. The first thing that I need to do is to define the scope of work:

- Be able to (eventually) do full text search queries

- Compare and contrast different persistence strategies for this

- Be able to work with multiple fields

What I don’t care about: Analysis process, actually implementing complex queries (I do want to have the foundation for them), etc.

Given that I want to work with real data, I went and got the Enron dataset. That is over 517,000 emails from Enron totaling more than 2.2 GB. This is one of the more commonly used test datasets for full text search, so that is helpful. The first thing that we need to do is to get the data into a shape that we can do something about it.

Enron is basically a set of MIME encoded files, so I’ve used MimeKit to speed the parsing process. Here is the code of the algorithm I’m using for getting the relevant data for the system. Here is the relevant bits:

As you can see, this is hardly a sophisticated approach. We are spawning a bunch of threads, processing all half million emails in parallel, select a few key fields and do some very basic text processing. The idea is that we want to get to the point where we have enough information to do full text search, but without going through the real pipeline that this would take.

Here is an example of the output of one of those dictionaries:

As you can see, this is bare bones (I forgot to index the Subject, for example), but on my laptop (8 cores Intel(R) Core(TM) i7-6820HQ CPU @ 2.70GHz) with 16 GB of RAM, we can index this amount of data in under a minute and a half.

So far, so good, but this doesn’t actually gets us anywhere, we need to construct an inverted index, so we can ask questions about the data and be able to find stuff out. We are already about half way there, which is encouraging. Let’s see how far we can stretch the “simplest thing that could possibly work”… shall we?

Here is the key data structures:

Basically, we have an array of fields, each of which holds a dictionary from each of the terms and a list of documents for the terms.

For the full code for this stage, look at the following link, it’s less than 150 lines of code.

Indexing the full Enron data set now takes 1 minute, 17 seconds, and takes 2.5 GB in managed memory.

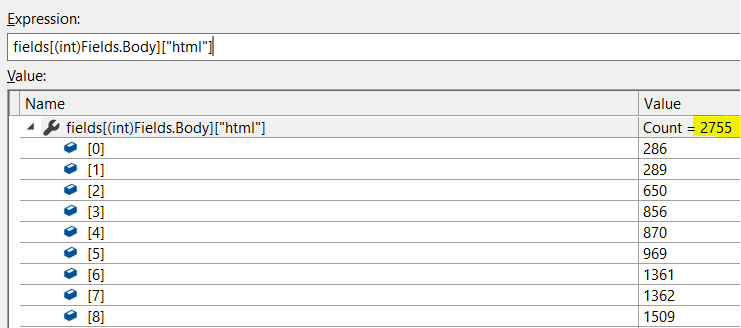

The key is that with this in place, if I want to search for documents that contains the term: “XML”, for example, I can do this quite cheaply. Here is how I can “search” over half a million documents to get all those that have the term HTML in them:

As you can imagine, this is actually quite fast.

That is enough for now, I want to start actually exploring persistence options now.

The final code bits are here, I ended up implementing stop words as well, so this is a really cool way to show off how you can do full text search in under 200 lines of code..

More posts in "Searching through text" series:

- (17 Oct 2019) Part III, Managing posting lists

- (16 Oct 2019) Part II, Exploring posting lists persistence

- (14 Oct 2019) Part I, full text search in under 200 lines of code

Comments

I smell a Lucene.NET replacement for Raven 5 in the future :-)

Bernhard, I don't think its realistic task, Lucene development took years, maybe its possible to adopt the new beta version and optimize it.

@Uri, including a more current Lucene.NET version like 4.8.0 would certainly be nice, the LevenshteinAutomata look beautiful; other than that, a few more extension points besides custom Analyzers would also be very helpful. I'm currently fighting the default scorer (DefaultSimilarity); where the idf(t) factor messes up my results. When your documents are addresses, a common street name will weight down the exact documents one is interested in. ;)

Just curious, is there a reason why you're managing

Tasks manually, instead of usingParallel.ForEachwithMaxDegreeOfParallelismset?Indexing and query is one part of story for full text search. Tokenize the given document is the other important piece of full text search. This might be a bit off topic. @Ayande. Will RavenDB be able to look into tokenize algorithm in the future? Such as including or instruction of using tokenize library. e.g. SentencePiece.

The result accuracy of full text search, I feel is more tight to tokenize algorithm. In Raven 3.5, there was capability of implement customized tokenize algorithm, I'm not sure about RavenDB 4 cloud. In which way we can achieve multi-lingual tokenize and search capability with RavenDB?

Felxi, Uri and Bernhard,

I started answering these, but it got too big: https://ayende.com/blog/188705-C/on-replacing-lucene?key=7900612ae91b440db4f24aaec22d05b6

Svick,

I want each task to handle a whole range of values, while

ForEachfeeds them a single one at a time.Jason, I don't actually care that much about tokenization. That is a completely separate piece of the pipeline and has very little impact on the design / work requirements for the whole thing. Note that you can customize the tokenization process in RavenDB right now. You use custom analyzers for this.In RavenDB 4.0, you can do the same and it is supported in RavenDB Cloud.

It does not create a separate

Taskfor each value, if that's what you meant.(E.g. the code

Parallel.ForEach(files, file => Console.Write($"{Task.CurrentId} "));prints on my machine something like1 1 1 3 3 5 3 6 2 1 1 1 1 4 5 3 3 2 6 1.)It does perform a delegate invocation for each value, but that should have negligible overhead compared with the file operations you're doing for each value.

@Ayande, cool I will look into it.

Svick, If you want per task state, (such as a bulk insert operation that would last over multiple invocations), it is hard to deal with it.This isn't the case in the current code, but I needed that for some other details, and that mattered there.

Comment preview