Fastest code is the one not runPart I – The cost of escaping strings

This series of posts is continuting the work I have outlined in this series. While in the previous series, I focused on the overall picture, here I want to talk about the small things we did to speedup pretty much every aspect of the system. In some cases, those are order of magnitude differences.

In this post, I want to talk about the following:

“Oren\r\nEini”

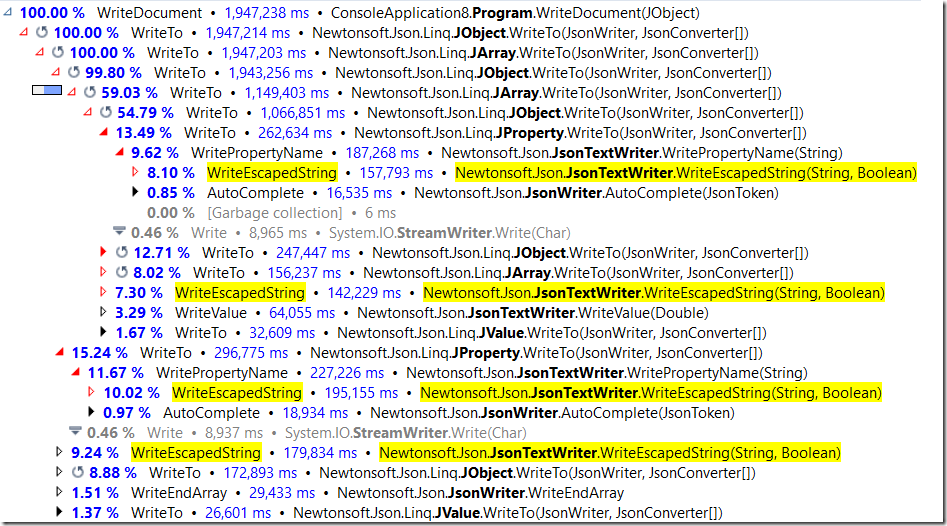

Such a simple thing, if you just look at the surface, and yet if you’ll profile the Json.NET code, you’ll see an interesting issue. I took an existing JSON file (about 67MB in size) and continiously wrote it to /dev/null under a profiler. The results are actually quite surprising.

In fact, this is a bit worse.

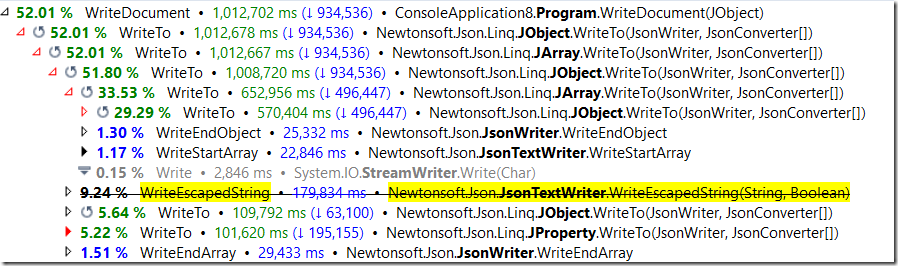

dotTrace has a great feature that allows you to remove “noise” from a trace. Here is what happens if we eliminate this call:

So, pretty obivously, this is an really expensive call. But how expensive is it, really? Note that this profiling run used a Null Stream, so there isn’t any I/O cost to consider. This is the pure computational cost of calling this method.

Let us look at a deeper profiling profiler of this method:

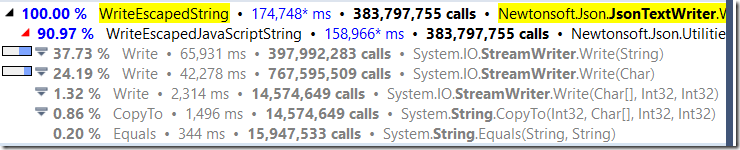

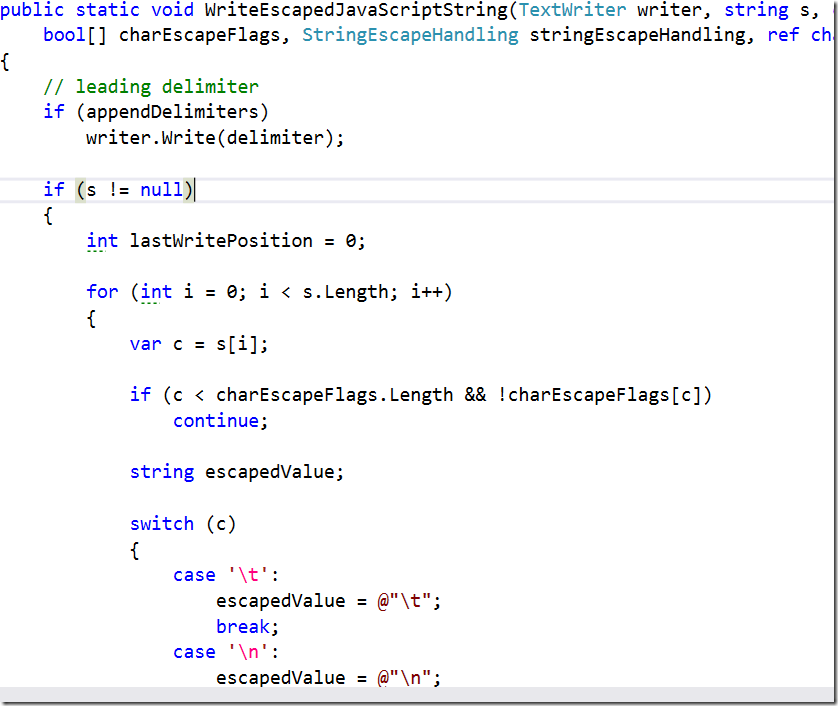

As you can see, WriteEscapedString does about 9% of work, then call WriteEscapedJavaScriptString, which make some calls to the StreamWriter. But about 33% of the time is spend doing its own thing, looking at the code, it is clear what is going on:

Basically, before it can write anything, this code needs to go over the string and find out if it has any escape characters. If it does, it needs write the escape sequence, then resume writing the string. This means that this code has to scan the string that it is writing several times, and much worse, it means that you can’t really do justice with those operations. Consider the string above, writing it out is actually several different calls to the writer. In fact, we have:

- “

- Oren

- \r

- \n

- Eini

- “

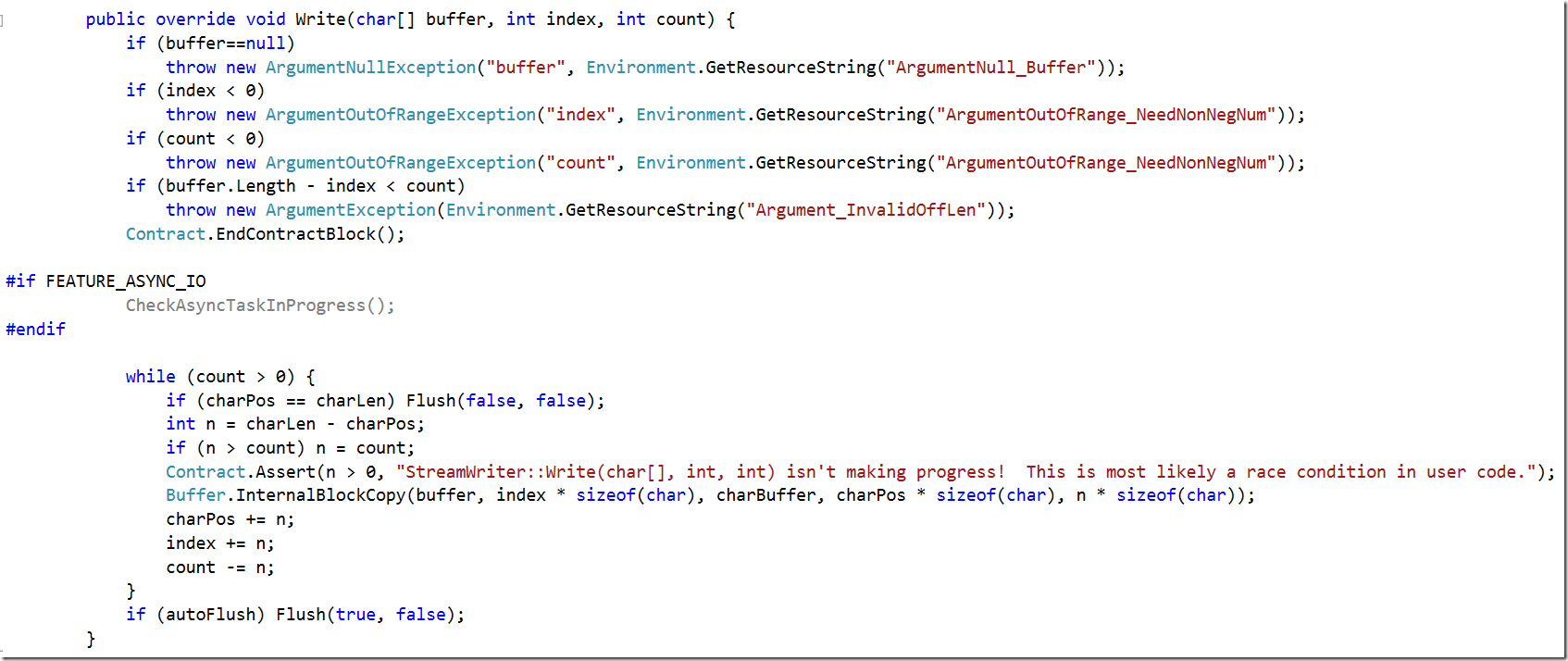

The problem here is that we lose a very powerful feature, the ability to batch multiple operations into a single I/O call. Sure, the TextWriter is going to be doing a bit of buffering for us, but that doesn’t help us really. Let us consider one of the most important calls we have in this code:

For the string above, we call this method twice, once for each portion of the string that isn’t escaped. That means that argument validation needs to run each and every time. And we also have to copy the memory in itsy bitsy pieces. Memory copy routines can get far greater speed if they can copy a large bunch of memory all at once, rather than being called on small sections of it.

In short, I hope that this convinced you that escaping strings is expensive. Stupidly so, when you come right down to it.

But what can you do, that is the cost of doing business, right?

Indeed, JSON.Net code is heavily optimized, and there isn’t much you can do about improving things at this point. You are stuck with the JSON format and the implications of it.

This is already a long post, but bear with me while I explain the reasoning.

The blittable format isn’t JSON. We read JSON and output JSON, but we aren’t limited to the same constraints as someone who is just consuming JSON. There are two separate use cases for the document using the blittable format. The first is when we need to work with them on the server side. This include such things as indexing, transformers, patching, etc. For those cases, if there is an escaped value, we need to get the unescaped version. In other words, I need the string with the line break in it, not the \r\n escape sequences. The other common use case, unfortunately, is when we need to write the document to the user, in which case we do need to write it out with all of the escape sequences.

What is left for us, apparently, is to choose our poision. We can optimize for either scenario, but not for both.

Except, that maybe we can. And that is what we actually did.

When we write a string value into the blittable format, we read escape sequences, and translate them into their unescaped values. In other words, we take a “\n” and store just the byte 13. But we also remember its position. The string we keep talking about? It would be stored as:

[10][O][r][e][n][10][13][E][i][n][i][2][4][0]

The first byte (10), is the length of the string. Then we have the actual unescaped version. If the server side code wants to read this string, we can just serve it directly from this memory, without any additional work. But what about when we need to write it down, and maintain the escape sequences? That is where the numbers in the end come into play. We need to skip 10 bytes past the length, where we’ll encounter 3 bytes: 2, 4, 0.

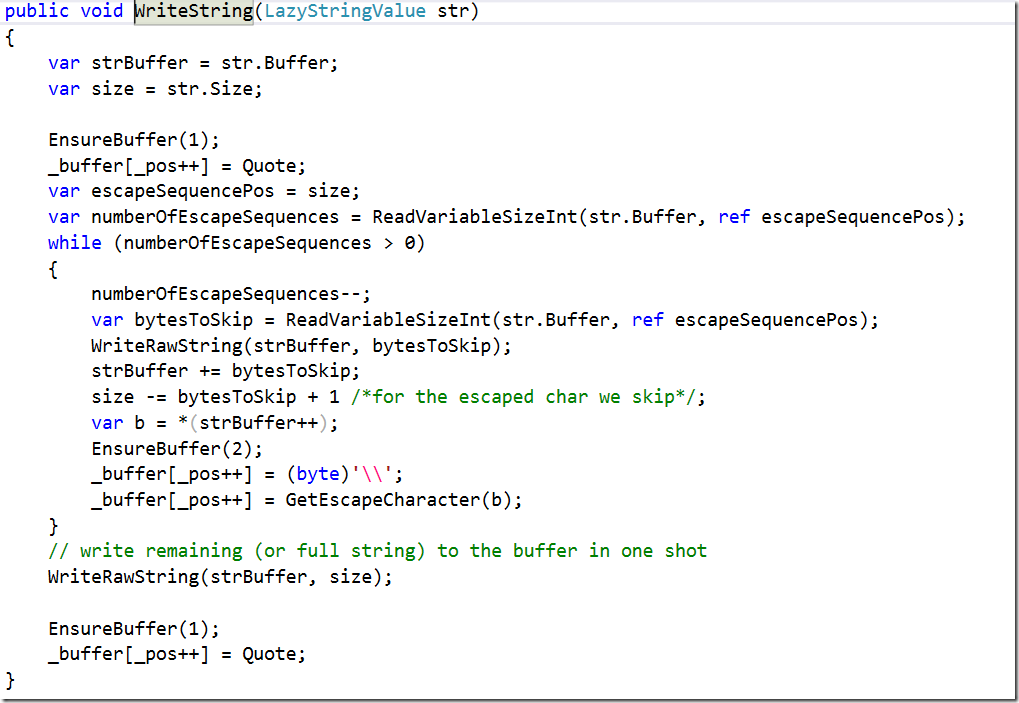

The first byte is the number of escape sequences in the string. The second is the number of bytes that we can copy until we get to the next escape sequence. Which means that our code for writing string has narrowed down to:

And that, in turn, means that if you don’t have any escape sequences, we can just call memcpy directly to write to the output buffer. And if you do have escape sequences, we just need to scan the escaped characters, we don’t need to scan the entire string.

There is so much saving in the air, it feels like Black Friday.

More posts in "Fastest code is the one not run" series:

- (29 Jan 2016) Part II – Memory management

- (28 Jan 2016) Part I – The cost of escaping strings

Comments

Maybe just track a single bit whether the string has any escapes or not? Most strings don't have any.

Mark, Since a single bit has to be stored in at least a byte (unless you want to move to complete bit craziness, it is easier to use a full byte, and in this case, this take a single byte.

True.

If every other character needs to be escaped (worst case), that's a lot of extra memory on the end of the string.

What about

string.IndexOfAnyto find the next character that needs to be escaped? That's what I use because the scan is implemented in native code so it's fast, and it also allows me to memcpy large chunks at a time like your solution. Did you explore that?Actually, I guess worst case would be if every character needs to be escaped.

Joseph, Sure, that is the worst case, but this tend to be: a) Rare b) No worse off than how we would store the data if we were storing it as direct JSON.

IndexOfAny requires you to do computation during the write process. That means that you need to scan the string at least twice (IndexOfAny, the memcpy). This way, we only need to go through it once

You have to two do scans anyway; one to create the information at the end of the string, and the memcpys and escaping. If you delay the first scan until you need it, you get the added benefit of using slightly less memory.

Are you assuming you will be writing a significant number of times from the same string, therefore caching the info at the end is a measurable optimization?

How do you handle Unicode chars, since the blittable format seems work with bytes instead of (multi byte) chars?

// Ryan

Joseph, You are missing something crucial here. The first scan (to create the escape positions) happens once. Then typically a document will be read many times. That means that when writing the document out, I can just skip ahead on every single write.

Ryan, We work with UTF8 data. Multiple byte characters are just more bytes. We don't care. Note that JSON allows to escape characters using \u788, but doesn't require it, so we are good

That was my question. Thanks! 👍

Thanks for the article! Just spotted a small thing, you could optimise the call to:

_buffer[pos++] = (byte)'\';

with a constant as it's always the same.

Comment preview