Fastest code is the one not runPart II – Memory management

One of the most important things that you need to do for high performance is to control your allocations. Indeed, the blittable format is almost entirely implemented in unmanaged memory. And we get a great deal of performance from not having the GC poke its annoying little nose into our data structures. That said, it means that we take the onus of managing the memory ourselves.

This post is about a couple of changes that we made to the memory management system in the blittable format, and some future directions we intend to explore.

The first thing that we did was to implement an unmanaged memory pool based on ConcurrentDictionary<int, ConcurrentStack<IntPtr>>. The int is the size (in power of two) of the allocated blocks. And we would allocate from the heap, and then store the buffers internally for reuse. That worked, but profiling showed that we had quite a bit of work in just leasing and returning the buffers to the thread safe pool.

Note that this was when we only tested using a single thread, we expect that the cost of working with it would only increase when using multiple threads.

That sucked.

Luckily, we use the context pattern for pretty much everything in blittable format (and more generally in RavenDB), so we can take advantage of that. We have a three stages process.

First, we allocate the memory when we first request it. Then, when we are done using it, we return it to the context, not the global pool. This allows us to avoid cross thread coordination costs entirely. And it is quite common for threads to need to run the same buffers multiple times, so we save the “check in the buffer” just to “lease me this buffer again, please” style of work.

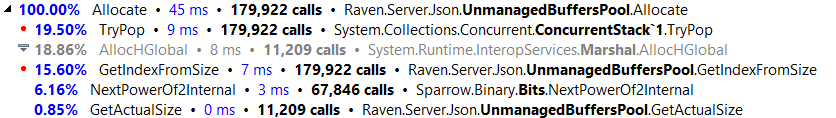

Here are the costs when using a single shared, thread safe pool:

As you can see, we spent quite a bit of time just managing the thread safe collections. You don’t see it in this profile, but other profiling sessions show the concurrent dictionary under load as well. And this is in a single threaded, no contention benchmark.

We moved the memory allocations to the context. Because a context is single treaded, we can rely on much simpler (and cheaper) collections, and we can also reuse contexts. A request checks out a context, which has its own cached buffers. It runs through the request, then return the context as a whole to the buffer. Each of those contexts can then manage their own memory and only rarely need to go to the global pool. There is some complexity about making sure that overly fat contexts hang around, but that is basically it.

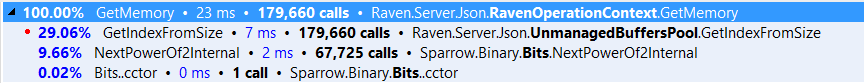

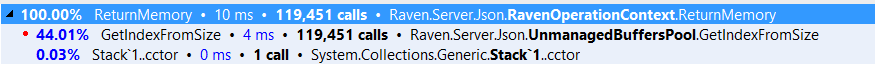

And when we need to release the data from the context, we can do all of that in a single bulk operation. Here are the profiler results after:

I admit, it is a bit disappointing to see only a 100% improvement, but I can comfort myself with knowing that the saving in multi threaded scenarios are much higher.

I mentioned that we also have some ideas on improving this furhter. This idea (which we deferred right now because there are other more important things to do) include just allocating a big buffer (128MB in size) per context. We'll not commit all of it, merely reserve the address space, and start allocating from it directly. Basically, each allocation would simply be a pointer increment (with occational calls to commit the rest of the reserved address space).

Now, in order to free the memory, all we need would be to reset the position to zero, and we are set to reuse the context again at effectively zero cost. If this sounds familiar to you, this is because this is primarily how allocations actually work in .NET, except that we explicitly control the size and the lifetime of all the objects in there.

It also has no cost in GC, which is quite nice. As I said, we are thinking about this, but at this point, I think that we are fast enough that we don't need to go there yet. We'll have to see under concurrent usage what this will be.

More posts in "Fastest code is the one not run" series:

- (29 Jan 2016) Part II – Memory management

- (28 Jan 2016) Part I – The cost of escaping strings

Comments

We do this in OpenLDAP slapd as well, a per-thread memory context that just increments a pointer on alloc calls. It originally also had mark/release functions to free large chunks of memory at once (without just resetting the entire context) but we switched from that to a regular free() approach. Some times I think we still should use mark/release style though.

Howard, Are your thread doing request processing? The nice thing about such systems is that they generally don't have memory that leak into the global state. That is, all the memory that they need to allocate during the processing of the request is going to be freed. There is nothing that will live beyond the scope of the request. You can handle that by allocating a big chunk of memory (on Linux, that just reserve the memory, not even allocating the unused portions), and allocate from that by bumping the pointer. On the end of the request, you just reset the pointer to the initial size, and voila, you "free'ed" it all. No memory management overhead, and typically great utilization of the memory you actually use.

Comment preview