Production postmortemThe case of the infected cluster

RavenDB is a critical system for many of our clients, that means that we get our share of “the sky is falling” stories, and we have a team of people we train to be able to diagnose and troubleshoot issues in production. This series of post is about the kind of issues that we run into, how they were resolved, and what the underlying issue was.

The scenario, a customer had a total system meltdown because RavenDB would stop responding to requests. Restarting the server would result in everything working, but in about 10 minutes, they would run into the same issue.

Complicating matters was the fact that the customer was running in a high availability cluster, with two quite powerful nodes that had failover setup. However, the problem would typically impact a single server, eventually leading to it stopping processing requests, while the second server would be humming along beautifully. When the first server would stop, the second server would continue for a few more minutes, then die in the same manner.

The issue was clearly related to the load on those server. The website is pretty busy, with millions of queries to RavenDB per hour, but we had no reason to think that the system was over capacity. Why is that?

- The system has been running around for over a month on the same configuration, with moderate memory consumption and low CPU usage throughout that time.

- The last upgrade to the RavenDB servers happened about a week ago, and no new traffic patterns should cause that load.

- The last RavenDB client upgrade happened that morning, but it was a minor change and should reduce load on the server. But the problem would show up within 10 – 20 minutes, and the client upgrade happened several hours before it started happening.

- The operations team swore that there were no changes made to either server recently.

The behavior was puzzling because we didn’t have high CPU, high memory or paging. Typical indications that the system is under too much of a load. Furthermore, I/O rates were quite good, and testing the disk speed and throughput showed that everything is all right.

We tried to revert all recent changes, to both clients and servers. We monitored the servers to see what happens when the problem recurred, and eventually we figured out what is the probable issue. The affected server would slowly show a growth pattern in the number of threads being used. Slowly going to upward of 700 threads. The non impacted server would sit on around 100 threads or so, until the first server would fail, in which case all load would hit the okay server, and it would be fine, for a while, then the thread count would start climbing up, the request timing would increase, and eventually things would stop.

One thing that was very interesting was that the problem was that RavenDB would still be responding (albeit a bit badly) under those conditions, and there was no indication that there are any issues with it as far as it was concerned. But under this failure condition we did see that we start getting a rather strange error.

The server is too busy, could not acquire transactional access

This rather poorly worded message is an indication that we hit an internal limit inside RavenDB. By default, we have a limit of 512 concurrent requests inside the server. That is done to make sure that we aren’t swamping the server with too many requests that would take it down. The good news was that this is a configurable value, and we were able to set Raven/MaxConcurrentServerRequests to a higher value to get a little bit more breathing space. Once we have done that, the system would still become slower, but it would stabilize at some point, even though it would still be much slower than what we come to expect of it.

Intermediary conclusions were:

- Something is causing requests to take a lot longer than they should.

- Because they take longer than they should, we see a convoy of requests.

- We can’t handle requests as fast as they come, so they start to pile up.

- The thread pool (which dispatches requests) is handling this by slowly increasing the number of threads.

- But we still aren’t able to catch up with the number of requests coming in.

- At some point, the number of concurrent requests hits the limit, and we start rejecting some requests

- We would see major slow downs because the thread pool would increase the number of threads in the pool only on a slow and measured pace.

- We don’t have any idea what is causing us to slow down.

Remember, this is a system that has been in production for a while now. In fact, the current traffic numbers are actually only about one third of the peek that was hit shortly after the system was released, and we handled that load with no issue.

We came back to the drawing board, and started look at pretty much anything suspicious. Memory was reasonable, and we have few dozens GB still free. CPU utilization was moderate, with over half of the system capacity unused. I/O rates from the disk according to performance monitor was excellent. Everything was good. So we took a dump of the process and proceeded to try to figure out what is going on from that.

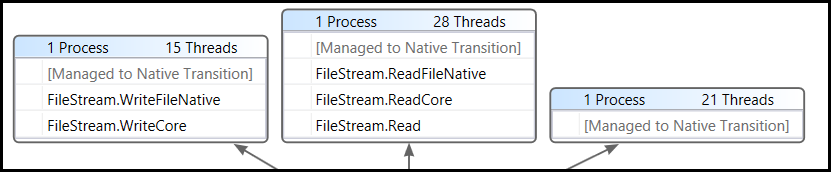

We saw something like this (spread over 150+ threads and in various modes, but this gets the point):

95% of the threads were stuck in various I/O related methods. Like NtCreateFile, NtCloseFile, etc. Other threads were hanging in .NET related code that was also doing file I/O. So we can pretty conclusively state that the problem is I/O related.

But what? We tested the disk I/O, it was good. We run I/O tests and they were fine. The measurements we got from the monitoring tools all said that the I/O is fine.

And yet we could see that I/O was the culprit. What the hell?!

At this point, we start suspecting that the issue was with something in the system. Now, each of the servers was capable to handling the entire load of the server (and then some) on its own. But both machine exhibit the same signs. That led us to believe that this isn’t some sort of hardware issue. It is something that both machine had.

A common trouble maker in this area is any kind of tool that hooks into sys calls. For example, anti virus software. In fact, that was one of the very first questions that we asked. Does this machine have anti virus on it? We got a negative answer early on. But after seeing those results, we went in and double checked. The machine did have an anti virus installed, and that is a huge red flag in those circumstances.

Anti virus software like to hook into all kind of system calls, and it is commonly associated with problems because of that. We asked the operations team to disable the anti virus on those servers to see if it would have an impact on the issue. Indeed, they stopped the anti virus and restarted the RavenDB servers, and then we waited. 10 minutes later, we saw no increase in the number of threads, and 25 minutes later, we were holding steady on the same performance that we came to expect.

Today, the customer sent us their monitoring system reports and there is a huge drop from 30% – 50% CPU usage to single digits on the day in question, and then it is flat graph for all the rest of the week.

Our hypothesis is that the anti virus either auto updated itself, or was actually updated by ops as part of routine maintenance task, and for some reason it has taken a liking to the kind of I/O RavenDB was generating. So it inspected us. And as it did so, it added overhead for each and every I/O call we made. And considering that we are a database, I/O is kinda of important for us. That additional overhead would cause us to respond much more slowly, which would mean that we would have additional request queue up, all the way until we start rejecting them. And we didn’t see those at the I/O metrics for the system because the overhead happen after the I/O is already completed.

I also suspect that this runs for all I/O operations, including things that should be buffered (therefor fast), so it has a big impact on our assumptions (this I/O call should be buffered, so it is fast, but it is checked by the AV, so it is not, leading to the convoy).

Because the Anti Virus was installed on both servers, they were both impacted, and the more load we put on them, the more the AV had to do, the more it slowed us down, the more requests we had queued, the bigger the trouble.

In other words, be very aware what is actually going on your system. As part of the post mortem analysis, we realized that we should have probably run fltmc.exe tool to check what are the file system filters that are installed and probably could have figured out that this is an Anti Virus issue much faster.

More posts in "Production postmortem" series:

- (07 Apr 2025) The race condition in the interlock

- (12 Dec 2023) The Spawn of Denial of Service

- (24 Jul 2023) The dog ate my request

- (03 Jul 2023) ENOMEM when trying to free memory

- (27 Jan 2023) The server ate all my memory

- (23 Jan 2023) The big server that couldn’t handle the load

- (16 Jan 2023) The heisenbug server

- (03 Oct 2022) Do you trust this server?

- (15 Sep 2022) The missed indexing reference

- (05 Aug 2022) The allocating query

- (22 Jul 2022) Efficiency all the way to Out of Memory error

- (18 Jul 2022) Broken networks and compressed streams

- (13 Jul 2022) Your math is wrong, recursion doesn’t work this way

- (12 Jul 2022) The data corruption in the node.js stack

- (11 Jul 2022) Out of memory on a clear sky

- (29 Apr 2022) Deduplicating replication speed

- (25 Apr 2022) The network latency and the I/O spikes

- (22 Apr 2022) The encrypted database that was too big to replicate

- (20 Apr 2022) Misleading security and other production snafus

- (03 Jan 2022) An error on the first act will lead to data corruption on the second act…

- (13 Dec 2021) The memory leak that only happened on Linux

- (17 Sep 2021) The Guinness record for page faults & high CPU

- (07 Jan 2021) The file system limitation

- (23 Mar 2020) high CPU when there is little work to be done

- (21 Feb 2020) The self signed certificate that couldn’t

- (31 Jan 2020) The slow slowdown of large systems

- (07 Jun 2019) Printer out of paper and the RavenDB hang

- (18 Feb 2019) This data corruption bug requires 3 simultaneous race conditions

- (25 Dec 2018) Handled errors and the curse of recursive error handling

- (23 Nov 2018) The ARM is killing me

- (22 Feb 2018) The unavailable Linux server

- (06 Dec 2017) data corruption, a view from INSIDE the sausage

- (01 Dec 2017) The random high CPU

- (07 Aug 2017) 30% boost with a single line change

- (04 Aug 2017) The case of 99.99% percentile

- (02 Aug 2017) The lightly loaded trashing server

- (23 Aug 2016) The insidious cost of managed memory

- (05 Feb 2016) A null reference in our abstraction

- (27 Jan 2016) The Razor Suicide

- (13 Nov 2015) The case of the “it is slow on that machine (only)”

- (21 Oct 2015) The case of the slow index rebuild

- (22 Sep 2015) The case of the Unicode Poo

- (03 Sep 2015) The industry at large

- (01 Sep 2015) The case of the lying configuration file

- (31 Aug 2015) The case of the memory eater and high load

- (14 Aug 2015) The case of the man in the middle

- (05 Aug 2015) Reading the errors

- (29 Jul 2015) The evil licensing code

- (23 Jul 2015) The case of the native memory leak

- (16 Jul 2015) The case of the intransigent new database

- (13 Jul 2015) The case of the hung over server

- (09 Jul 2015) The case of the infected cluster

Comments

What's your opinion about AV on servers in general? I'm asking because you must have collected quite some experience with that.

AV is for end users. End users doesn't work on servers.

For servers, do run AV! Ensure that there are known exclusions in place for the applications and services under operation.

For web servers, exclude any CDN resource directories, temporary caches & pagefile locations. If you site accepts uploads, this folder should be scanned on-demand. Then run the full scans over all folders and files out-of-hours to keep it in check.

For application servers, the binaries / static resources served for any web services are good place to exclude, pagefile locations. Again, run the full scans over all folders and files (included exclusions) out-of-hours to keep it in check.

For database servers, exclude the data directory of the Transaction Logs and Data Files including Temp DBs - or even the exact files themselves if at all possible. Again, run the full scans over all folders and files (included exclusions) out-of-hours to keep it in check.

Raise these exclusions and mitigations with your SecOps teams and I believe it would make a good balance of both worlds.

Was it NOD32 ? I've had similar incidents with my applications and had to prove to the client each time that it was their AV was killing the performance and not my app.

Tobi, In general, I hate AV on servers. Dominic, below, seems to make a lot of sense with regards to how to setup and configure AV on servers, but just about any time that I have run into AV on servers it was part of some production issue that was really hard to narrow down.

Such things are pretty easy and fast to find if you use ETW since you can see all call stacks including the AV Software in the kernel and not just in your process. I know you are no fan of ETW because it is too complex to use. The price for this is that you will need more guessing what apart from yourself in your own process could be slowing you down.

Alois, Given a production system, how do you use ETW to get stack traces? And can you use it for timings as well?

The biggest advantage of ETW are stack traces and exact timings. If the virus scanner will put your thread to a halt you can see it via the context switch events where you can see for every single WaitForSingleObject call where you block for anything how long it did block AND which thread did unblock you. With ReadyThread events you can even get the call stack who did release your thread (e.g. call ReleaseMutex, PulseEvent, ...). For starters you can use WPRUI which is part of the Windows Performance Toolkit will give you all of these Details with an easy to use GUI. Normally you want to fine tune the data collection depending on what you are after (anything, GC related issues, long term profiling, ...) which is complex but once you have it you can track down virtually anything. E.g. you can see network hickups (TCP Retransmission) by enabling the TCP ETW Diagnostics Provider where each retransmission event relates to a delay of ca. 400ms. Since that stuff is in place I can track down the hardest distributed problems at customer sites. I can tell you if it is the network, the client PC or the server which has an issue. By profiling both machines at once (https://etwcontroler.codeplex.com/) where you can combine it with WireShark to see all network stuff too you can record so much Information that you can tell for every hickup what the root cause is.

Alois, Are there any good guides to get into this?

Wouldnt it a good idea to use the Antivirus integration in Windows API to detect that and give a fat warning when an Antivirus Software is installed?

Steve, Sure, because ops love it when you tell them that you don't want to run with an AV installed. And just having an AV isn't bad, in fact, they had the AV all along, but for some mysterious reason it decided to do extra checks on us all of a sudden

That was not what I had in mind. I would raise a warning when an AV gets online that it can interrupt performance when not properly configured. Users can dismiss it, and everything is fine.

Steve, That doesn't actually do anything for us. It is just a way for us to CYA.

What we'll do (and thanks for the suggestion) is use this to detect that in the debug information we send, so we can narrow down on this more easily

Sure here are good links: https://randomascii.wordpress.com/2012/05/11/the-lost-xperf-documentationcpu-scheduling/ Here is a .NET sample which is largely obsolete unless you want to Register the ETW Provider manually but it Shows a rudimentary script to collect the data by yourself. http://geekswithblogs.net/akraus1/archive/2013/06/02/153041.aspx And here a typical case study where an database did become very slow and how I could track it down to the root cause. http://geekswithblogs.net/akraus1/archive/2013/08/03/153594.aspx

Besides this there are plent y of tutorials on Defrag Tools about WPA and xperf. MS Virtual Academy has also a very good set of tutorials: http://www.microsoftvirtualacademy.com/training-courses/windows-performance-jump-start

One thing to note is that if you are running on Win7 (Server 2008 R2) x64 you should consider to use NGen to get correct call stacks. Dynamically generated (JITed) code will not give you good call stacks due to limitations of the Windows Kernel Stackwalker. If you do not have an excessive amount of JITed code in your process you should get pretty far with NGenned binaries. On Win8 all of these issues are fixed.

Comment preview