Production postmortem: Efficiency all the way to Out of Memory error

RavenDB is written in C#, and as such, uses managed memory. As a database, however, we need granular control of our memory, so we also do manual memory management.

One of the key optimizations that we utilize to reduce the amount of overhead we have on managing our memory is using an arena allocator. That is a piece of memory that we allocate in one shot from the operating system and operate on. Once a particular task is done, we can discard that whole segment in one shot, rather than try to work out exactly what is going on there. That gives us a proper scope for operations, which means that missing a free in some cases isn’t the end of the world.

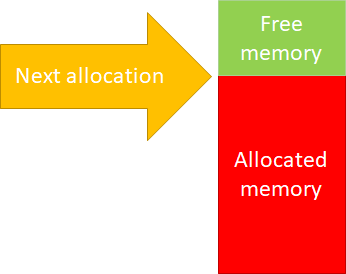

It also makes the code for RavenDB memory allocation super simple. Here is what this looks like:

Whenever we need to allocate more memory, we’ll just bump the allocator up. Initially, we didn’t even implement freeing memory, but it turns out that there are a lot of long-running processes inside of RavenDB, so we needed to reuse the memory inside the same operation, not just between operations.
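The bump-and-discard idea can be sketched in a few lines. This is a Python model rather than RavenDB's C# code: it hands out offsets into a single buffer instead of raw pointers, and the `Arena` class, its `allocate` and `reset` methods, and the 64KB capacity are all hypothetical names and values chosen for illustration.

```python
class Arena:
    """Toy bump allocator: hands out offsets into one pre-allocated buffer."""

    def __init__(self, capacity: int) -> None:
        self.buffer = bytearray(capacity)  # the whole segment, allocated in one shot
        self.top = 0                       # next allocation position

    def allocate(self, size: int) -> int:
        if self.top + size > len(self.buffer):
            raise MemoryError("arena exhausted")
        offset = self.top
        self.top += size                   # "bump" the allocator up
        return offset

    def reset(self) -> None:
        # The task is done: discard every allocation at once.
        self.top = 0


arena = Arena(64 * 1024)
a = arena.allocate(1024)
b = arena.allocate(256)
used_before_reset = arena.top
arena.reset()
```

Note that `reset` does not care whether individual allocations were ever returned, which is why a missed free inside the scope is harmless.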

The implementation of freeing memory is pretty simple, as well. If we return the last item that we allocated, we can just drop the next allocation position by how many bytes were allocated. For that matter, it also allows us to do incremental allocations. We can ask for some memory, then increase the allocation amount on the fly very easily.

Here is a (highly simplified) example of how this works:
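The sketch below is a Python model of the idea, not RavenDB's actual C# implementation. It tracks offsets instead of pointers, and the names (`Arena`, `allocate`, `try_grow`, `free`) are hypothetical. It assumes that only the most recent allocation can be freed or grown in place.

```python
class Arena:
    """Toy arena that supports freeing and growing the last allocation."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.top = 0  # next allocation position

    def allocate(self, size: int) -> int:
        if self.top + size > self.capacity:
            raise MemoryError("arena exhausted")
        offset = self.top
        self.top += size
        return offset

    def try_grow(self, offset: int, old_size: int, new_size: int) -> bool:
        # Only the block that ends exactly at `top` can be extended in place.
        if offset + old_size != self.top:
            return False
        if offset + new_size > self.capacity:
            return False
        self.top = offset + new_size
        return True

    def free(self, offset: int, size: int) -> bool:
        # Returning the last allocation just drops `top` back by its size.
        if offset + size == self.top:
            self.top = offset
            return True
        return False  # not the last allocation: nothing to reclaim here


arena = Arena(64 * 1024)
a = arena.allocate(1024)
grew = arena.try_grow(a, 1024, 4096)   # `a` is on top, so it grows in place
b = arena.allocate(256)
freed_b = arena.free(b, 256)           # reverse order: b first...
freed_a = arena.free(a, 4096)          # ...then a
```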

As you can see, there isn’t much there. A key requirement here is that you need to return the memory back in the reverse order of how you allocated it. That is usually how it goes, but what if it doesn’t happen?

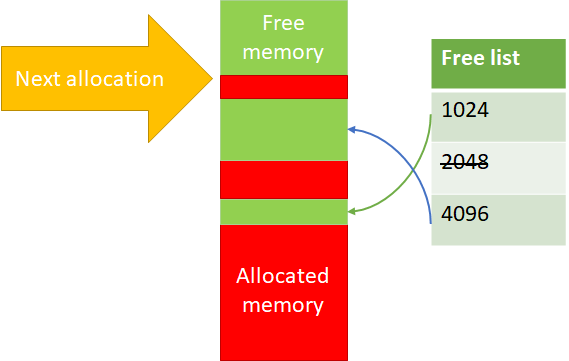

Well, then we can’t reuse the memory directly. Instead, we’ll place it in a free list. The actual allocations are done on powers of two, so that makes things easier. Here is what this actually looks like:

So if we free, but not from the top, we remember the location and can use it again. Note that for 2048 in the image above, we don’t have any free items.
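A minimal Python sketch of such a free list follows, assuming one list of offsets per power-of-two size. It is a model for illustration, not RavenDB's C# code; `round_up_pow2`, `Arena`, and `free_lists` are hypothetical names.

```python
def round_up_pow2(n: int) -> int:
    """Smallest power of two that is >= n (for n >= 1)."""
    return 1 << (n - 1).bit_length()


class Arena:
    """Toy arena with per-size free lists for out-of-order frees."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.top = 0
        self.free_lists = {}  # size (power of two) -> list of free offsets

    def allocate(self, size: int) -> int:
        size = round_up_pow2(size)
        bucket = self.free_lists.get(size)
        if bucket:
            return bucket.pop()            # reuse a previously freed block
        if self.top + size > self.capacity:
            raise MemoryError("arena exhausted")
        offset = self.top
        self.top += size
        return offset

    def free(self, offset: int, size: int) -> None:
        size = round_up_pow2(size)
        if offset + size == self.top:
            self.top = offset              # freed from the top: just drop back
        else:
            # Not on top: remember the location for a later allocation.
            self.free_lists.setdefault(size, []).append(offset)


arena = Arena(64 * 1024)
a = arena.allocate(1000)   # rounded up to 1024
b = arena.allocate(200)    # rounded up to 256
arena.free(a, 1000)        # `a` is not on top, so it goes to the 1024 list
c = arena.allocate(900)    # served from the 1024 free list
free_2048 = arena.free_lists.get(2048, [])
```

Because every block in a given list has the same size, reuse is a simple pop with no searching or splitting.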

I’m quite fond of this approach, since this is simple, easy to understand and has a great performance profile. But I wouldn’t be writing this blog post if we didn’t run into issues, now would I?

A customer reported high memory usage (to the point of memory exhaustion) when doing a certain set of operations. That… didn’t make any sense, to be honest. That was a well-traveled code path; any issue there should have been found long ago.

They were able to send us a reproduction and the support team was able to figure out what was going on. The problem was that the code in question did a couple of things, which together led to an interesting issue.

- It allocated and deallocated memory, but not always in the same order – this is fine, that is why we have the free list, after all.

- It extended the memory allocation it used on the fly – perfectly fine and an important optimization for us.

Take a moment to consider how these two operations together could result in a problem…

Here is the sequence of events:

- Loop:

  - Allocate(1024) -> $1

  - Allocate(256) -> $2

  - Grow($1, 4096) -> Success

  - Allocate(128) -> $3

  - Free($1) (4096)

  - Free($3) (128)

  - Free($2) (256)

What is going on here?

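Well, the issue is that we are allocating a 1KB buffer, but returning a 4KB buffer. That means that we add the returned buffer to the 4KB free list, but we never pull from that free list on allocation, because the loop only ever asks for 1KB (or smaller) buffers. Each iteration takes fresh memory from the arena, while the 4KB free list just keeps growing.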
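To see the effect, here is a small Python simulation. It is a toy model, not RavenDB's code, and all names are hypothetical. It also makes one loud assumption: only the block on top of the arena can grow in place, so the sketch grows `$1` before allocating `$2`, which differs from the order in the list above. The arena is left unbounded so that the growth is visible.

```python
from collections import defaultdict


class Arena:
    """Toy arena: bump allocation, in-place grow on top, per-size free lists."""

    def __init__(self) -> None:
        self.top = 0
        self.free_lists = defaultdict(list)  # size -> free offsets

    def allocate(self, size: int) -> int:
        if self.free_lists[size]:
            return self.free_lists[size].pop()
        offset = self.top
        self.top += size
        return offset

    def grow(self, offset: int, old_size: int, new_size: int) -> None:
        # Assumption: growing is only valid for the block on top.
        assert offset + old_size == self.top
        self.top = offset + new_size

    def free(self, offset: int, size: int) -> None:
        if offset + size == self.top:
            self.top = offset
        else:
            self.free_lists[size].append(offset)


arena = Arena()
tops = []
for _ in range(3):
    p1 = arena.allocate(1024)    # looks only at the 1024 free list
    arena.grow(p1, 1024, 4096)   # p1 is now a 4096-byte block
    p2 = arena.allocate(256)
    p3 = arena.allocate(128)
    arena.free(p1, 4096)         # not on top: lands in the 4096 free list
    arena.free(p3, 128)          # on top: reclaimed
    arena.free(p2, 256)          # on top: reclaimed
    tops.append(arena.top)

stranded = len(arena.free_lists[4096])
```

In this model, `top` advances by 4096 bytes on every iteration and one more block is stranded in the 4096 free list each time, since nothing in the loop ever requests that size.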

Once found, it was an easy thing to fix (detect this state and handle it), but until we figured it out, it was quite a mystery.
