RavenDB 3.5 whirl wind tour: Deeper insights to indexing

The indexing process in RavenDB is not trivial. It is composed of many small steps that need to happen and coordinate with one another to reach the end goal.

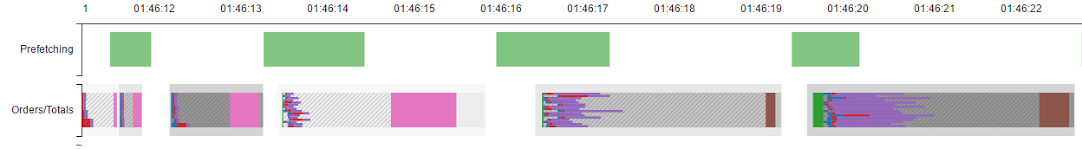

A lot of the complexity involved is related to concurrent usage, parallelization of I/O and computation and a whole bunch of other stuff like that. In RavenDB 3.0 we added support for visualizing all of that, so you can see what is going on, and can tell what is taking so long.

With RavenDB 3.5, we extended that from just looking at the indexing of documents to looking into the other parts of the indexing process.

In this image, you can see the green rectangles representing prefetching cycles of documents from the disk, happening alongside indexing cycles (represented as the colored rectangles, with visible concurrent work and separate stages).

The good thing about this is that it makes it easy to see whether there is any waste in the indexing process. If there is a gap, we can see it and investigate why that happens.
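To make the idea of a "gap" concrete, here is a minimal sketch of how idle periods could be found in a timeline of work cycles. It is not RavenDB code: the `(start, end)` interval shape, the `find_gaps` name and the `min_gap` threshold are all assumptions made for illustration.

```python
from typing import List, Tuple

# Hypothetical shape: each cycle (prefetching or indexing) is a
# (start, end) pair in seconds. This is not RavenDB's actual data model.
Interval = Tuple[float, float]


def find_gaps(intervals: List[Interval], min_gap: float = 0.0) -> List[Interval]:
    """Return the periods in which no cycle was running.

    Overlapping cycles (concurrent work) count as one busy period.
    Gaps no longer than min_gap are ignored.
    """
    gaps: List[Interval] = []
    busy_until = None  # latest end time seen so far
    for start, end in sorted(intervals):
        if busy_until is not None and start - busy_until > min_gap:
            gaps.append((busy_until, start))
        busy_until = end if busy_until is None else max(busy_until, end)
    return gaps


if __name__ == "__main__":
    cycles = [(0.0, 2.0), (1.0, 3.0), (5.0, 6.0)]
    for gap_start, gap_end in find_gaps(cycles):
        print(f"idle from {gap_start} to {gap_end}")
```

Sorting the cycles and tracking the latest end time means that concurrent cycles do not produce false gaps, which matters when prefetching and indexing overlap.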

Another change we made was automatic detection of corrupted Lucene indexes. The typical case is running out of disk space: the Lucene index can be corrupted, and even after disk space is restored, it is often not recoverable by Lucene. We can now automatically detect that scenario and apply the right action (trying to recover from a previous instance, or resetting the index).
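The order of actions described here (detect, try a previous instance, reset as a last resort) can be sketched roughly as follows. This is only an illustration of the decision flow, not how RavenDB implements it: `IndexCorrupted`, `recover_index` and the three callables passed in are hypothetical names.

```python
from typing import Callable, Optional, Tuple


class IndexCorrupted(Exception):
    """Hypothetical error raised when an index fails to open cleanly."""


def recover_index(
    open_index: Callable[[], object],
    restore_previous: Callable[[], Optional[object]],
    reset_index: Callable[[], object],
) -> Tuple[str, object]:
    """Return (action_taken, index), preferring the cheapest action.

    All three callables are placeholders supplied by the caller.
    """
    # 1. Detection: if the index opens cleanly, nothing needs to be done.
    try:
        return "ok", open_index()
    except IndexCorrupted:
        pass

    # 2. Try to recover from a previous instance of the index.
    try:
        restored = restore_previous()
    except IndexCorrupted:
        restored = None
    if restored is not None:
        return "restored", restored

    # 3. Last resort: reset the index so it is rebuilt from the documents.
    return "reset", reset_index()
```

Resetting comes last because it throws away all indexing work done so far, while restoring a previous instance only requires catching up on what changed since.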

As a reminder, we have the RavenDB Conference in Texas in a few months, which would be an excellent opportunity to see RavenDB 3.5 in all its glory.

More posts in "RavenDB 3.5 whirl wind tour" series:

- (25 May 2016) Got anything to declare, ya smuggler?

- (23 May 2016) I'm no longer conflicted about this

- (19 May 2016) What did you subscribe to again?

- (17 May 2016) See here, I got a contract, I say!

- (13 May 2016) Deeper insights to indexing

- (11 May 2016) Digging deep into the internals

- (09 May 2016) I'll have the 3+1 goodies to go, please

- (04 May 2016) I’ll find who is taking my I/O bandwidth and they SHALL pay

- (02 May 2016) You want all the data, you can’t handle all the data

- (29 Apr 2016) A large cluster goes into a bar and order N^2 drinks

- (27 Apr 2016) I’m the admin, and I got the POWER

- (25 Apr 2016) Can you spare me a server?

- (21 Apr 2016) Configuring once is best done after testing twice

- (19 Apr 2016) Is this a cluster in your pocket AND you are happy to see me?

Comments

Nice! One question: corrupted indexes in case of full disks would still be possible in v4 with Voron as index storage?

njy, No, that isn't possible. Voron makes Lucene ACID, so a full disk will just mean that we can't index. It should auto fix itself on the fly when there is enough space.

@Oren: "Voron makes Lucene ACID" such a short sentence, such a powerful one. Re-reading this phrase and considering how important Lucene is and how brittle sometimes is, more and more it feels like such a huge accomplishment. Congrats to you all.
