I spoke a lot about relatively large features, such as Clustering, Global Configuration and the Admin Console. But RavenDB 3.5 represent a lot of time and effort from a pretty large team. Some of those changes you'll probably not notice. Additional endpoints, better heuristics, stuff like that, and some of them are small tiny features that get lost in the crowd. This post is about them.

Sharding

RavenDB always had a customizable sharding strategy, while the default sharding strategy is pretty smart, you can customize it based on your own knowledge of your system. Unfortunately, we had one trouble about that. While you could customize it, when you did so, it was pretty much an all or nothing approach. Instead of RavenDB analyzing the query, extracting the relevant servers to use for this query and then only hitting them, you got the query and had to do all the hard work yourself.

In RavenDB 3.5, we changed things so you have multiple levels of customizing this behavior. You can still take over everything, if you want to, or you can let RavenDB do all the work, give you the list of servers that it things should be included in the query, and then you can apply your own logic. This is especially important in scenarios where you split a server. So the data that used to be "RVN-A" is now located on "RVN-A" and on "RVN-G". So RavenDB analyze the query, does it things and end up saying, I think that I need to go to "RVN-A", and then you can detect that and simply say: "Whenever you want to go to RVN-A, also go to RVN-G". So the complexity threshold is much lowered.

Nicer delete by index

The next feature is another tiny thing. RavenDB supports the ability to delete all record matching a query. But in RavenDB 3.0, you have to construct the query yourself (typically you would call ToString() on an existing query) and the API was a bit awkward. In RavenDB 3.5, you can now do the following:

await session.Advanced.DeleteByIndexAsync<Person, Person_ByAge>(x => x.Age < 35);

And this will delete all those youngster from the database, easy, simple, and pretty obvious.

I did mention that this was the post about the stuff that typically goes under the radar.

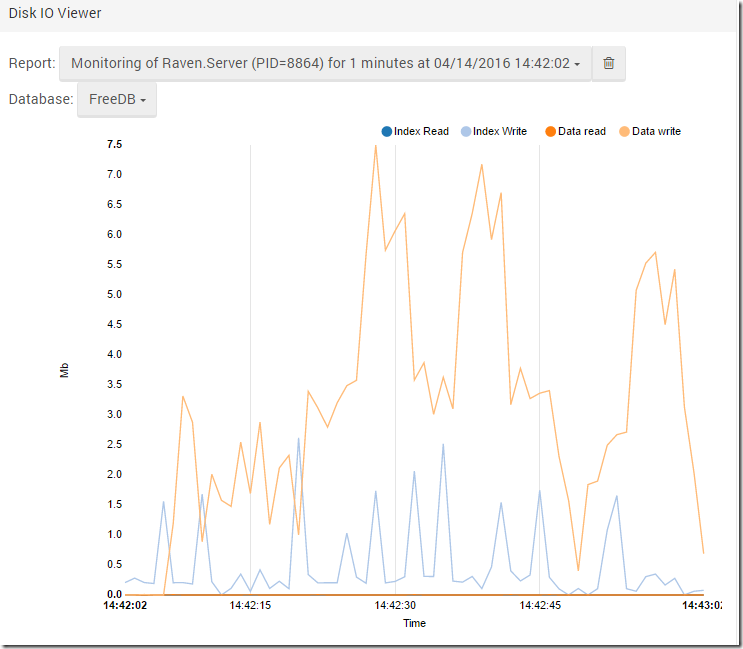

Query timings and costs

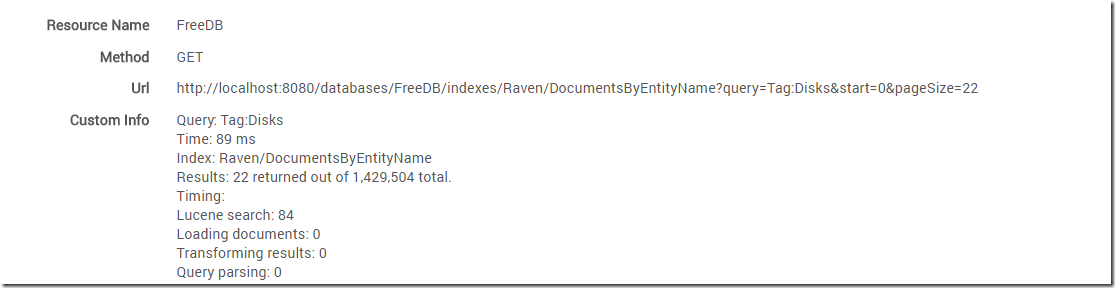

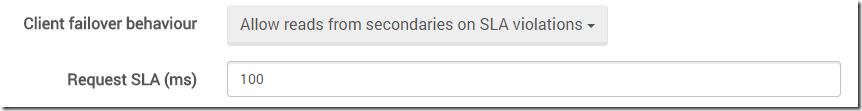

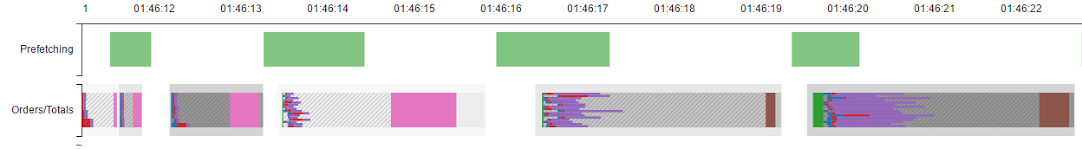

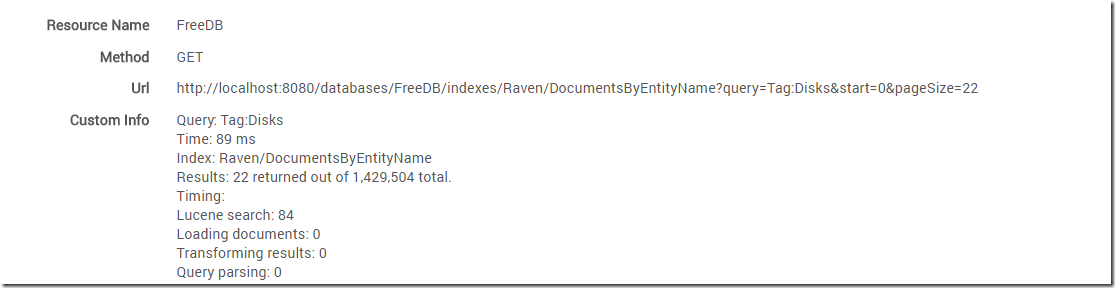

This nice feature appears in the Traffic Watch window. When you are looking into queries, you'll now not only get all the relevant details about this query, but will also be able to see the actual costs in serving it.

In this case, we are seeing a very expensive query, even though it is a pretty simple one. Just looking at this information, I can tell you why that is.

Look into the number of results, we are getting 22 out of 1.4 millions. The problem is that Lucene doesn't know which ones to return, it need to rank them by how well they match the query. In this case, they all match the query equally well, so we waste some time sorting the results only to get the same end result.

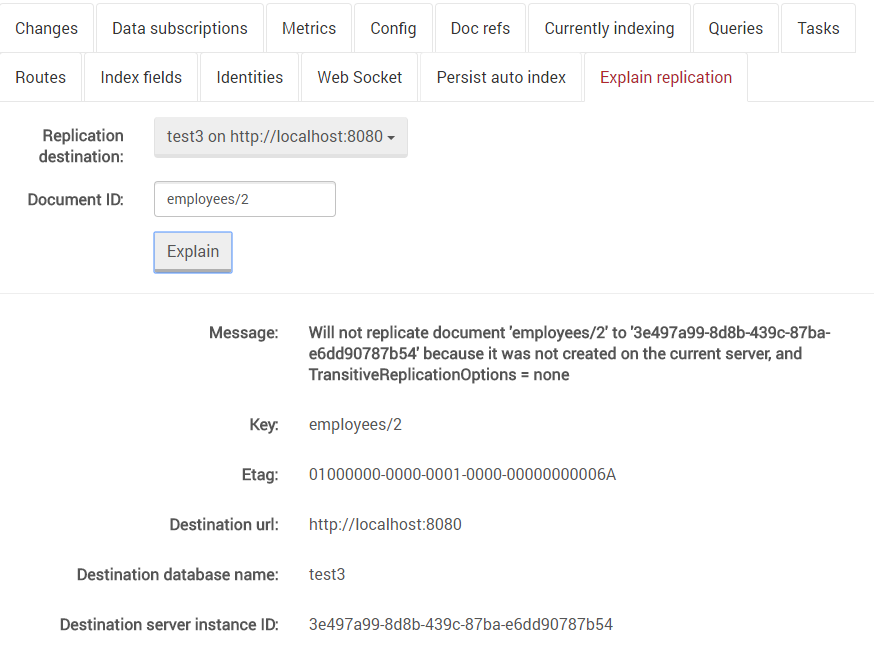

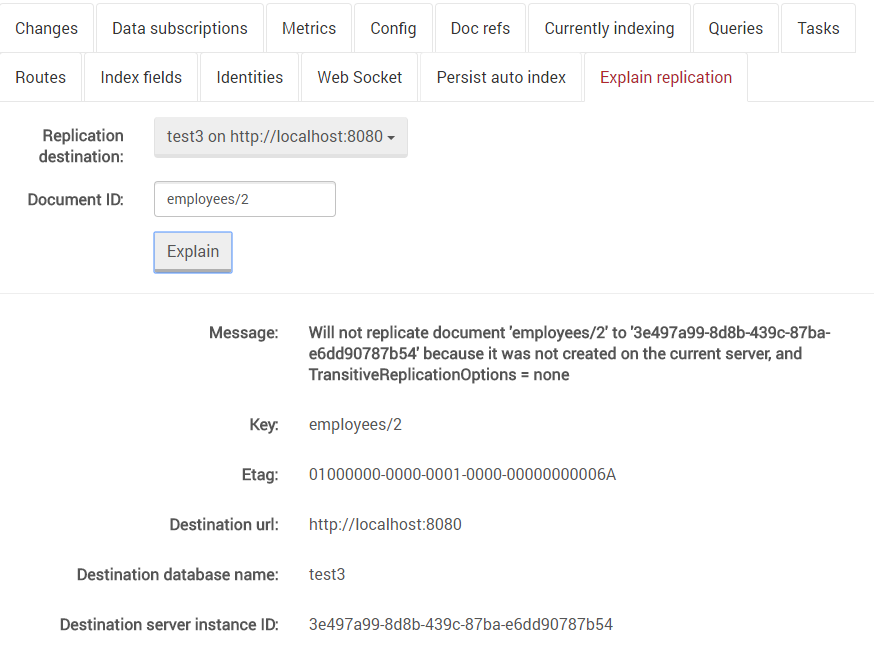

Explain replication behavior

Occasionally we get a user that complains that after setting up replication, some documents aren't being replicated. This is usually by design, because of some configuration or behavior, but getting to the root of it can take a non trivial amount of time.

In order to help that, we added a debug endpoint and a UI screen to handle just that:

Now you can explicitly ask, why did you skip replication over this document, and RavenDB will run through its logic tree and tell you exactly what the reason is. No more needing to read the logs and find exactly the right message, you can just ask, and get an immediate answer back.

Patch that tells you how much work was done

This wasn't meant to be in this post, as you can see from the title, this is a tiny stupid little feature, but I utterly forgot that we had it, and when I saw it I just loved it.

The premise is very simple, you are running a patch operation against a database, which can take a while. RavenDB will now report to you what the current state of the process is, so you can see that it is progressing easily, and not just wait until you get the "good / no good" message in the end.

_thumb.png)

As a reminder, we have the RavenDB Conference in Texas in shortly, which would be an excellent opportunity to see RavenDB 3.5 in all its glory.

_thumb.png)