RavenDB 3.5 whirl wind tour: Is this a cluster in your pocket AND you are happy to see me?

The first thing you’ll see when you run RavenDB 3.5 is the new empty screen:

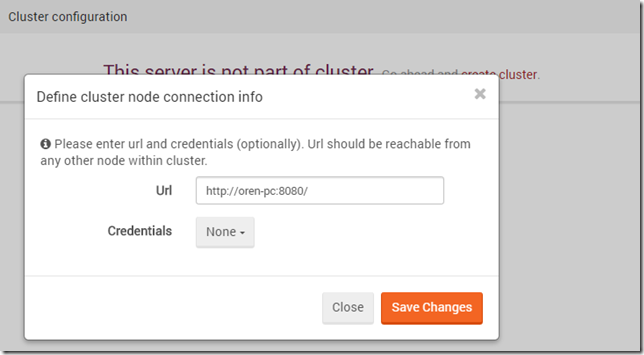

You get the usual “Create a database” and the more interesting “Create Cluster”. In RavenDB 3.0, clusters were ad hoc things; you created them by bridging together multiple nodes using replication. In RavenDB 3.5, we have made that into a first-class concept. Clicking on the “Create Cluster” link will give you the following:

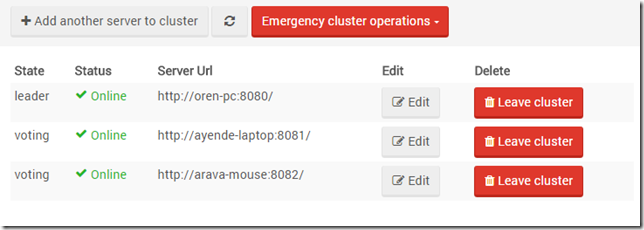

And then you can start adding additional nodes:

A RavenDB cluster uses Raft (more specifically, Rachis, our Raft implementation) to bring multiple servers into a single distributed consensus. This allows you to manage the entire cluster in one go.

Once you have created the cluster, you can always reconfigure it (add or remove nodes) by clicking the cluster icon at the bottom.

Raft is a distributed consensus algorithm, which allows a group of servers to agree on the order of a set of commands to execute (not strictly true, but for more details, see my previous posts on the topic).

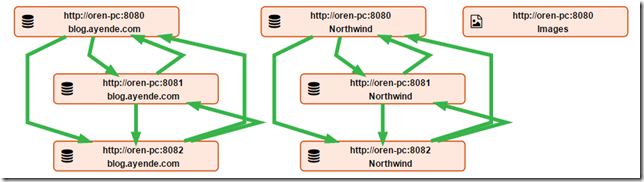

We use Raft to connect servers together, but what does this mean? RavenDB replication is still running in multi-master mode, which means that each server can still accept requests. We use Raft for two distinct purposes.

The first is to distribute operational changes across the cluster, such as adding a new database or changing a configuration setting. This allows us to be sure that such changes are accepted by the cluster before they are actually performed.
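To make the "accepted before performed" idea concrete, here is a minimal sketch in Python. It is not Rachis and not RavenDB code; the `Node` class and the `propose` function are hypothetical names I made up for illustration, and the real Raft protocol (terms, log matching, retries) is far more involved. The only point is that a change is applied anywhere only once a strict majority of the cluster can accept it.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    """Hypothetical cluster member; not a RavenDB type."""
    name: str
    online: bool = True
    applied: List[str] = field(default_factory=list)


def propose(nodes: List[Node], command: str) -> bool:
    """Apply `command` only if a strict majority of nodes can accept it.

    Simplification: a node "accepts" simply by being reachable. Real Raft
    appends to a log, tracks terms, and lets lagging nodes catch up later.
    """
    reachable = [node for node in nodes if node.online]
    if len(reachable) <= len(nodes) // 2:
        # No majority: the change is rejected and nothing is performed.
        return False
    for node in reachable:
        node.applied.append(command)
    return True


cluster = [Node("A"), Node("B"), Node("C")]
print(propose(cluster, "create-database:Northwind"))  # 3 of 3 reachable
cluster[1].online = False
cluster[2].online = False
print(propose(cluster, "change-setting:foo"))  # only 1 of 3 reachable
```

With two of the three nodes down, the second proposal is rejected and no node applies it, which is the behavior you want for cluster-wide configuration.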

But the one that you’ll probably notice most of all is the one shown in the cluster image. Raft is a strong leader system, and we use it to make sure that all the writes in the cluster are done on the leader. If the leader is taken down, we’ll transparently select a new leader, clients will be informed about it, and all new writes will go to the new leader. When the previous leader recovers, it will join the cluster as a follower, and there will be no disruption of service. This is done for all the databases in the cluster, as you can see from the following topology view:

Note that while the leader selection is handled via Raft, the leader database replicates to the other nodes using the multi-master system, so if you need to wait until a document is present in a majority of the cluster, you need to wait for write assurance.
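As a rough illustration of what "waiting for write assurance" means, here is a small Python sketch. It does not use the RavenDB client API; `majority`, `wait_for_write_assurance`, and the `poll_replicas` callback are hypothetical names, and I am assuming the node that took the write counts toward the majority. The idea is simply to keep checking which nodes hold the document until a majority is reached or a timeout expires.

```python
import time
from typing import Callable, Set


def majority(cluster_size: int) -> int:
    """Smallest number of nodes that forms a strict majority."""
    return cluster_size // 2 + 1


def wait_for_write_assurance(
    poll_replicas: Callable[[], Set[str]],
    cluster_size: int,
    timeout_seconds: float = 5.0,
    poll_interval_seconds: float = 0.05,
) -> bool:
    """Poll until a majority of nodes hold the document, or time out.

    `poll_replicas` is a hypothetical callback returning the names of the
    nodes that currently have the document (including the leader).
    """
    deadline = time.monotonic() + timeout_seconds
    while True:
        if len(poll_replicas()) >= majority(cluster_size):
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(poll_interval_seconds)
```

In a three node cluster, this returns as soon as the leader and one other node have the document; if replication is stuck, it gives up after the timeout and the caller decides what to do next.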

A stable leader in the presence of failure means that we won’t have clients switching back and forth between nodes; they will be informed of the leader and stick to it. This generates a much more stable network environment. Being able to configure the cluster details once and have them propagate everywhere is also a great reduction in operational work. I’ll talk more about this in my next post in this series.
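The "informed of the leader, and stick to it" behavior can be sketched from the client side like this. Again, this is a toy in Python with hypothetical names (`StickyLeaderClient`, `on_topology_update`), not the actual RavenDB client; it only shows that the client changes its write target when the cluster tells it about a new leader, and not otherwise.

```python
class StickyLeaderClient:
    """Hypothetical client that sends all writes to the known leader."""

    def __init__(self, leader: str) -> None:
        self.leader = leader
        self.switches = 0  # how many times the write target changed

    def on_topology_update(self, new_leader: str) -> None:
        # Switch only when the cluster reports a different leader.
        if new_leader != self.leader:
            self.leader = new_leader
            self.switches += 1

    def write_target(self) -> str:
        return self.leader


client = StickyLeaderClient(leader="A")
client.on_topology_update("A")  # same leader, nothing changes
client.on_topology_update("B")  # A went down, B was elected
client.on_topology_update("B")  # A rejoins as a follower, still B
print(client.write_target(), client.switches)
```

Note that when the old leader comes back as a follower, the client does not move back to it, which is what keeps the traffic pattern stable.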

As a reminder, we have the RavenDB Conference in Texas in a few months, where we’ll present RavenDB 3.5 in all its glory.

More posts in "RavenDB 3.5 whirl wind tour" series:

- (25 May 2016) Got anything to declare, ya smuggler?

- (23 May 2016) I'm no longer conflicted about this

- (19 May 2016) What did you subscribe to again?

- (17 May 2016) See here, I got a contract, I say!

- (13 May 2016) Deeper insights to indexing

- (11 May 2016) Digging deep into the internals

- (09 May 2016) I'll have the 3+1 goodies to go, please

- (04 May 2016) I’ll find who is taking my I/O bandwidth and they SHALL pay

- (02 May 2016) You want all the data, you can’t handle all the data

- (29 Apr 2016) A large cluster goes into a bar and order N^2 drinks

- (27 Apr 2016) I’m the admin, and I got the POWER

- (25 Apr 2016) Can you spare me a server?

- (21 Apr 2016) Configuring once is best done after testing twice

- (19 Apr 2016) Is this a cluster in your pocket AND you are happy to see me?

Comments

What is the expected release date for 3.5?

Dejan, we want to release it at the same time as the RavenDB Conference.

Cool!!
