Excerpts from the RavenDB Performance team report: JSON & Structs in Voron

What seems to be a pretty set in stone rule of thumb is that when building a foolproof system, you will also find a better fool. In this case, the fool was us.

In particular, during profiling of our systems, we realized that we did something heinous, something so bad that it deserves a true face palm:

In essence, we spent all this time to create a really fast key/value store with Voron. We spent man years like crazy to get everything working just so… and then came the time to actually move RavenDB to use Voron. That was… a big task, easily as big as writing Voron in the first place. The requirements RavenDB makes of its storage engine are anything but trivial. But we got it working. And it worked well. Initial performance testing showed that we were quite close to the performance of Esent, within 10% – 15%, which was good enough for us to go ahead with.

Get things working, get them working right and only then get them working fast.

So we spent a lot of time making sure that things worked, and worked right.

And then the time came to figure out if we can make things faster. Remember, Voron is heavily based around the idea of memory mapping and directly accessing values at very little cost. This is important, because we want to use Voron for a whole host of stuff. That said, in RavenDB we use it to store JSON documents, so we obviously store the JSON in it. There is also quite a lot of bookkeeping information that we keep track of.

In particular, let us focus on the indexing stats. We need to keep track of the last indexed etag per index, when it was changed, number of successful indexing attempts, number of failed indexing attempts, etc.

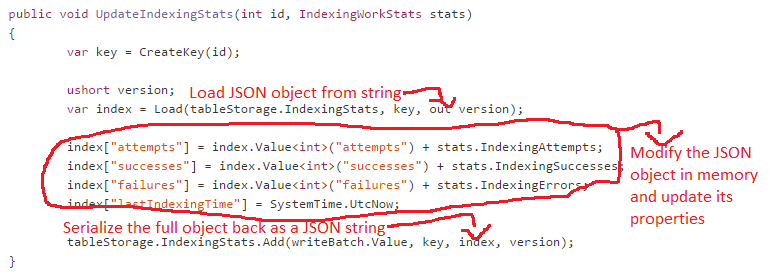

Here is how we updated those stats:

As you can imagine, a lot of the time was actually spent just serializing and deserializing the data back and forth from JSON. In fact, most of the time spent on these updates went to doing just that.
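To make the cost concrete, here is a rough sketch of that read-modify-write pattern. It is written in Python rather than the C# that RavenDB uses, and the `store` dictionary, the key, and the field names are all hypothetical stand-ins, not RavenDB's actual code or schema:

```python
import json

# Hypothetical stand-in for a key/value store that holds raw bytes.
store = {}

def init_stats(key):
    # Field names are assumptions made up for this illustration.
    stats = {"last_indexed_etag": 0, "attempts": 0, "successes": 0, "errors": 0}
    store[key] = json.dumps(stats).encode("utf-8")

def record_success_json(key, etag):
    # Every update pays for a full parse and a full re-serialization,
    # even though only three numbers change.
    stats = json.loads(store[key].decode("utf-8"))
    stats["last_indexed_etag"] = etag
    stats["attempts"] += 1
    stats["successes"] += 1
    store[key] = json.dumps(stats).encode("utf-8")

init_stats("indexes/1")
record_success_json("indexes/1", 42)
```

The work done per update grows with the size of the whole document, not with the size of the change.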

Now, indexing status needs to be queried on every single query, so that was a high priority for us to fix. We did this by not storing the data as JSON, but storing the data in its raw form directly into Voron.

Voron gives us a block of memory of size X, and we put all the relevant data into that memory, and pull the data directly from the raw memory. There is no need for a serialization/deserialization step.
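The idea can be sketched with Python's `struct` module, with a `bytearray` standing in for the memory that the storage engine hands back. The layout (four little-endian 64-bit integers) and the field order are assumptions for illustration only, not the real RavenDB structure:

```python
import struct

# Hypothetical fixed layout: four little-endian signed 64-bit integers
# (last indexed etag, attempts, successes, errors).
STATS = struct.Struct("<qqqq")

# Stand-in for the fixed-size block of memory the storage engine gives us.
memory = bytearray(STATS.size)

def write_stats(buf, etag, attempts, successes, errors):
    # Values are written at fixed offsets, with no intermediate text format.
    STATS.pack_into(buf, 0, etag, attempts, successes, errors)

def read_stats(buf):
    # Values are read straight back from the same fixed offsets.
    return STATS.unpack_from(buf, 0)

def record_success(buf, etag):
    _, attempts, successes, errors = read_stats(buf)
    write_stats(buf, etag, attempts + 1, successes + 1, errors)

write_stats(memory, 0, 0, 0, 0)
record_success(memory, 42)
```

Because every field lives at a known offset, the size of the record never changes and an update touches only those 32 bytes.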

There is a minor issue with strings. Using a fixed size structure (like the indexing stats) is really easy, we just read/write to that memory. When dealing with variable length data (such as strings), you have a harder time of it, but we dealt with that as well by defining a proper way to encode string lengths and read them back.
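The post does not spell out the exact encoding, so the following is only one plausible scheme: a 4-byte little-endian length prefix followed by the UTF-8 bytes. The function names and the sample index name are made up for this sketch:

```python
import struct

# Assumed encoding: 4-byte little-endian length prefix, then UTF-8 bytes.
LENGTH = struct.Struct("<I")

def write_string(buf, offset, text):
    """Write a length-prefixed string at offset; return the offset just past it."""
    data = text.encode("utf-8")
    LENGTH.pack_into(buf, offset, len(data))
    start = offset + LENGTH.size
    buf[start:start + len(data)] = data
    return start + len(data)

def read_string(buf, offset):
    """Read a length-prefixed string at offset; return (text, next offset)."""
    (size,) = LENGTH.unpack_from(buf, offset)
    start = offset + LENGTH.size
    text = bytes(buf[start:start + size]).decode("utf-8")
    return text, start + size

memory = bytearray(64)
end = write_string(memory, 0, "Orders/ByCompany")
end = write_string(memory, end, "café")

first, pos = read_string(memory, 0)
second, pos = read_string(memory, pos)
```

The prefix stores the length in bytes rather than characters, which is what lets the reader skip to the next field without decoding the string first.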

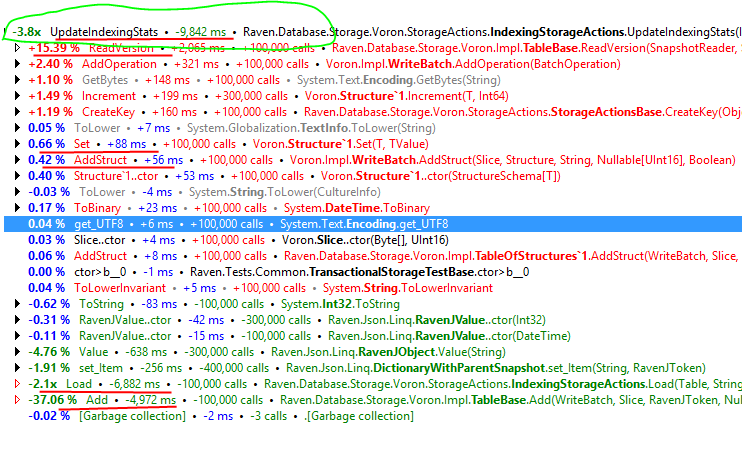

The results, by the way:

That means that we got close to 4 times faster! That is certainly going to help us down the road.

More posts in "Excerpts from the RavenDB Performance team report" series:

- (20 Feb 2015) Optimizing Compare – The circle of life (a post-mortem)

- (18 Feb 2015) JSON & Structs in Voron

- (13 Feb 2015) Facets of information, Part II

- (12 Feb 2015) Facets of information, Part I

- (06 Feb 2015) Do you copy that?

- (05 Feb 2015) Optimizing Compare – Conclusions

- (04 Feb 2015) Comparing Branch Tables

- (03 Feb 2015) Optimizers, Assemble!

- (30 Jan 2015) Optimizing Compare, Don’t you shake that branch at me!

- (29 Jan 2015) Optimizing Memory Comparisons, size does matter

- (28 Jan 2015) Optimizing Memory Comparisons, Digging into the IL

- (27 Jan 2015) Optimizing Memory Comparisons

- (26 Jan 2015) Optimizing Memory Compare/Copy Costs

- (23 Jan 2015) Expensive headers, and cache effects

- (22 Jan 2015) The long tale of a lambda

- (21 Jan 2015) Dates take a lot of time

- (20 Jan 2015) Etags and evil code, part II

- (19 Jan 2015) Etags and evil code, Part I

- (16 Jan 2015) Voron vs. Esent

- (15 Jan 2015) Routing

Comments

SQL Server sometimes keeps such information in memory data structures. When that data changes it writes a log record to record the change. It does not update a statistics row each time some data structure changes.

I understand that for fixed size structures you could directly map to a packed struct. For those with variable length string fields, would it be possible to make them fixed size by including fixed size char array fields, or do you need to size encode them to avoid wasting space?

Alex, we could certainly do that, yes. The problem with a fixed char array is that you then have a hard limit. We ended up encoding them, and it was very cheap.

That's basically why databases use binary serialization. That's why rows in SQL Server have null maps etc. JSON is not the most performant way of storing data :-)

Microsoft's Bond serialization library (https://github.com/Microsoft/bond), released recently, has a very nice concept of transforms that convert data on the fly between formats (JSON, XML, binary): https://microsoft.github.io/bond/manual/bond_cs.html#transcoder. It's a hell of a lot faster than .NET serialization/deserialization.

The only thing is that Bond is schematized, therefore it cannot replace a generic serialization library in every situation.

@Catalin, we are looking into several alternatives regarding binary serialization. Having a generic solution would be very important not only at the database level, but also in the bulk insertion scenarios.

@Federico, what about msgpack? It seems like a very fast concept: http://msgpack.org/
