Note, this post was written by Federico.

First, I want to give special thanks to Thomas, Tobi, Alex and everyone else who has made so many interesting comments and coded alternative approaches. It is great to have so many people looking over your work and pointing out interesting facts that, in the heat of the battle with the code, we could easily overlook.

It has been just three weeks since I wrote this series of posts, and suddenly three weeks seems like a long time. There have been very interesting discussions about SSE, optimization, different approaches to specific issues, and lowering measurement errors, all of which I intended to write up in a follow-up post. However, an earth-shattering event this week turned that post into a moot exercise.

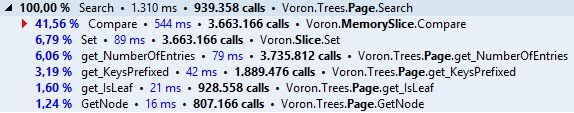

Let’s first recap how we ended up optimizing compares.

At first we were using memcmp via P/Invoke. Everything was great for a while, until we eventually hit the wall and needed all the extra juice we could get. Back in mid-2014 we performed the first optimization rounds, where we introduced a fully managed, pointer-based solution for the first time. That solution served us well until we started to optimize other paths and really stress RavenDB 3.0.

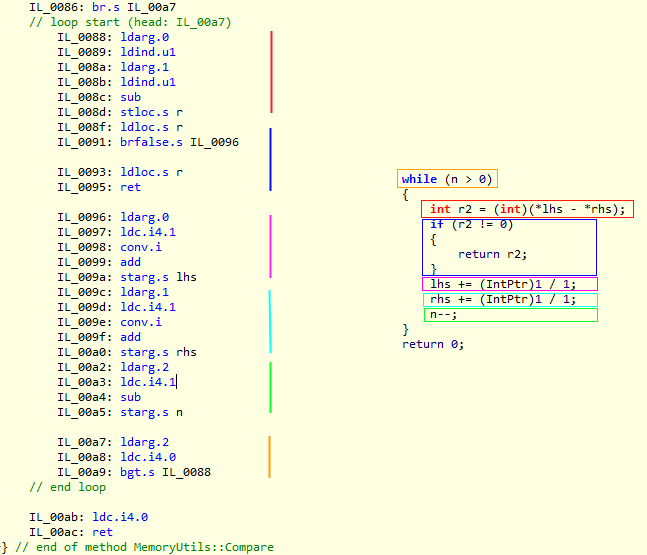

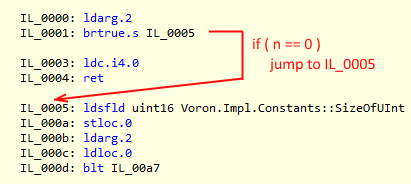

In the second round, which eventually spawned this series, we introduced branch tables and bandwidth optimizations, and we had to resort to every trick in the book to convince the JIT to emit pretty specific assembler code.

And here we are. Three days ago, on the 3rd of February, Microsoft released CoreCLR for everyone to dig into. For anyone interested in performance it is a one-in-a-million opportunity. Being able to see how the JIT works and how high-performance, battle-tested code is written (without having to read it in assembler) is quite an experience.

In there, specifically in the guts of the mscorlib source, we noticed a couple of interesting comments, in particular one that said:

    // The attributes on this method are chosen for best JIT performance.
    // Please do not edit unless intentional.

Needless to say, that caught our attention. So we did what we usually do: we just went and tried it.

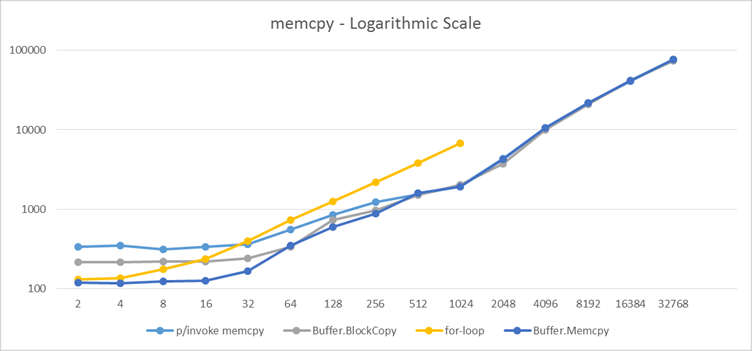

The initial results were “interesting” to say the least. Right away we achieved bigger speed-ups for our copy routines (more on that in a future series; now I feel lucky I didn’t write more than the introduction). In fact, the results were “so good” that we decided to give it a try for compare: if we could at least use the SSE-optimized native routine for big memory compares, we would be in a great spot.

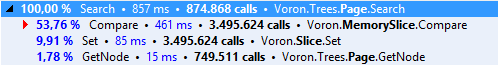

The result of our new tests? Well, we were in for a big surprise.

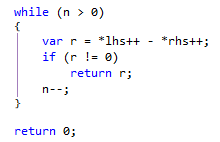

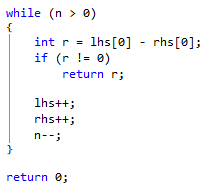

We had the most optimized routine all along. The code in question:

    [DllImport("msvcrt.dll", CallingConvention = CallingConvention.Cdecl, SetLastError = false)]
    [SuppressUnmanagedCodeSecurity]
    [SecurityCritical]
    [ReliabilityContract(Consistency.WillNotCorruptState, Cer.Success)]
    public static extern int memcmp(byte* b1, byte* b2, int count);

We just needed to be a bit more permissive, aka disable P/Invoke security checks.

From the MSDN documentation for SuppressUnmanagedCodeSecurity:

This attribute can be applied to methods that want to call into native code without incurring the performance loss of a run-time security check when doing so. The stack walk performed when calling unmanaged code is omitted at run time, resulting in substantial performance savings.

Well, I must say substantial is an understatement. We are already running in full trust and memcmp is a safe function by default, so no harm is done. The same cannot be said of memcpy, where we have to be extra careful. But that is another story.

- Size: 2, Native (with checks): 353, Managed: 207, Native (no checks): 217, Gain: -4.608297%
- Size: 4, Native (with checks): 364, Managed: 201, Native (no checks): 225, Gain: -10.66667%
- Size: 8, Native (with checks): 354, Managed: 251, Native (no checks): 234, Gain: 7.26496%
- Size: 16, Native (with checks): 368, Managed: 275, Native (no checks): 240, Gain: 14.58334%
- Size: 32, Native (with checks): 426, Managed: 366, Native (no checks): 276, Gain: 32.6087%
- Size: 64, Native (with checks): 569, Managed: 447, Native (no checks): 384, Gain: 16.40625%
- Size: 128, Native (with checks): 748, Managed: 681, Native (no checks): 554, Gain: 22.92418%
- Size: 256, Native (with checks): 1331, Managed: 1232, Native (no checks): 1013, Gain: 21.61895%
- Size: 512, Native (with checks): 2430, Managed: 2552, Native (no checks): 1956, Gain: 30.47035%
- Size: 1024, Native (with checks): 4813, Managed: 4407, Native (no checks): 4196, Gain: 5.028594%
- Size: 2048, Native (with checks): 8202, Managed: 7088, Native (no checks): 6910, Gain: 2.575982%
- Size: 8192, Native (with checks): 30238, Managed: 23645, Native (no checks): 23490, Gain: 0.6598592%
- Size: 16384, Native (with checks): 59099, Managed: 44292, Native (no checks): 44041, Gain: 0.5699277%

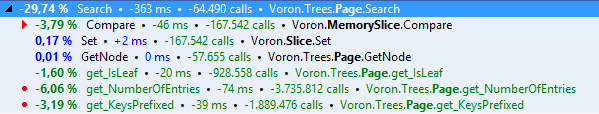

The gains are undeniable, especially where it matters the most for us (16 to 64 bytes). As you can see, the managed optimizations are really good: at the 2048 level and up we are able to compete with the native version (accounting for the P/Invoke cost, that is).
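For reference, the Gain figures listed above are consistent with the relative difference between the managed timing and the unchecked native timing. Here is a minimal sketch of that calculation; the `Gain` helper and the `GainExample` class are names made up for illustration and are not part of the benchmark code:

```csharp
using System;

public static class GainExample
{
    // Relative difference, in percent, of the managed timing against the
    // native (no checks) timing. Positive means the native call was faster.
    // Hypothetical helper: inferred from the published numbers, not taken
    // from the actual benchmark harness.
    public static double Gain(double managed, double nativeNoChecks)
    {
        return (managed - nativeNoChecks) / nativeNoChecks * 100.0;
    }

    public static void Main()
    {
        // Size 8 row: Managed = 251, Native (no checks) = 234.
        // Prints roughly 7.26, in line with the gain reported for that row.
        Console.WriteLine(Gain(251, 234));

        // Size 2 row: Managed = 207, Native (no checks) = 217.
        // Prints a negative value: at this size the managed code still wins.
        Console.WriteLine(Gain(207, 217));
    }
}
```

A negative gain therefore marks the sizes where the managed routine is still the better choice, which is why the smallest sizes are worth handling without a native call.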

The .Compare() code ended up looking like this:

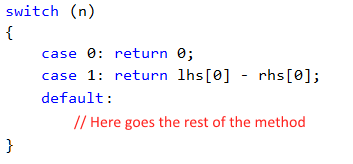

    [MethodImpl(MethodImplOptions.AggressiveInlining)]
    public static int Compare(byte* bpx, byte* bpy, int n)
    {
        switch (n)
        {
            case 0: return 0;
            case 1: return *bpx - *bpy;
            case 2:
            {
                int v = *bpx - *bpy;
                if (v != 0)
                    return v;

                bpx++;
                bpy++;

                return *bpx - *bpy;
            }
            default: return StdLib.memcmp(bpx, bpy, n);
        }
    }

With these results:

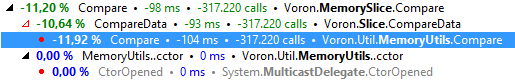

- Size: 2, Managed: 184, Native (no checks): 238
- Size: 4, Managed: 284, Native (no checks): 280
- Size: 8, Managed: 264, Native (no checks): 257
- Size: 16, Managed: 302, Native (no checks): 295
- Size: 32, Managed: 324, Native (no checks): 313
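To show how a pointer-based routine like `Compare` is called from ordinary managed code, here is a small usage sketch. It assumes the project is compiled with unsafe code enabled. `ManagedMemcmp` is a hypothetical stand-in for `StdLib.memcmp` so the sample runs without msvcrt.dll, and `ComparePrefix` is an illustrative helper that is not part of our code base:

```csharp
using System;

public static unsafe class CompareUsage
{
    // Hypothetical stand-in for StdLib.memcmp, so the sample does not
    // depend on msvcrt.dll. The real routine P/Invokes the native memcmp.
    private static int ManagedMemcmp(byte* b1, byte* b2, int count)
    {
        for (int i = 0; i < count; i++)
        {
            int diff = b1[i] - b2[i];
            if (diff != 0)
                return diff;
        }
        return 0;
    }

    // Same shape as the Compare routine above: sizes 0 to 2 are handled
    // inline, everything else is delegated to the memcmp implementation.
    public static int Compare(byte* bpx, byte* bpy, int n)
    {
        switch (n)
        {
            case 0: return 0;
            case 1: return *bpx - *bpy;
            case 2:
            {
                int v = *bpx - *bpy;
                if (v != 0)
                    return v;
                return bpx[1] - bpy[1];
            }
            default: return ManagedMemcmp(bpx, bpy, n);
        }
    }

    // Illustrative helper: pins both arrays and compares their first n bytes.
    // The caller must ensure n does not exceed either array's length.
    public static int ComparePrefix(byte[] x, byte[] y, int n)
    {
        fixed (byte* px = x)
        fixed (byte* py = y)
        {
            return Compare(px, py, n);
        }
    }

    public static void Main()
    {
        // Prints -1: the second byte differs (2 < 3).
        Console.WriteLine(Math.Sign(ComparePrefix(new byte[] { 1, 2 }, new byte[] { 1, 3 }, 2)));

        // Prints 0: three identical bytes go through the delegated path.
        Console.WriteLine(Math.Sign(ComparePrefix(new byte[] { 5, 6, 7 }, new byte[] { 5, 6, 7 }, 3)));
    }
}
```

Only the sign of the result is meaningful, which matches the contract of the native memcmp.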

The bottom line here is that until we are allowed to generate SSE2/AVX instructions in .NET, I doubt we will be able to do a better job, but we are eagerly looking forward to trying it out when RyuJIT is released. *

* We could try to write our own optimized routine in C++/assembler (if that is even possible), but that is out of scope at the moment (we have bigger fish to fry performance-wise).