Excerpts from the RavenDB Performance team reportOptimizing Compare, Don’t you shake that branch at me!

Note, this post was written by Federico. In the previous post after inspecting the decompiled source using ILSpy we were able to uncover potential things we could do.

By now we already squeeze almost all the obvious inefficiencies that we had uncovered through static analysis of the decompiled code, so now we will need another strategy. For that we need to analyze the behavior in runtime in the average case. We did something like that when in this post when we made an example using a 16 bytes compare with equal arrays.

To achieve that analysis live we will need to somehow know the size of the typical memory block while running the test under a line-by-line profiler run. We built a modified version of the code that stored the size of the memory chunk to compare and then we built an histogram with that (that’s why exact replicability matters). From our workload the histogram showed that there were a couple of clusters for the length of the memory to be compared. The first cluster was near 0 bytes but not exactly 0. The other cluster was centered around 12 bytes, which makes sense as the keys of the documents were around 11 bytes. This gave us a very interesting insight. Armed with that knowledge we made our first statistical based optimization.

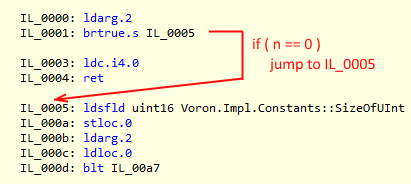

You can notice the if statement at the start of the method, which is a pretty standard bounding condition. If the memory blocks are empty, therefore they are equal. In a normal environment such check is so simple that nobody would bother, but in our case when we are measuring the runtime in the nanoseconds, 3 extra instructions and a potential branch-miss do count.

That code looks like this:

That means that not only I am making the check, we are also forcing a short-jump every single time it happens. But our histogram also tells us that memory blocks of 0 size almost never happen. So we are paying with 3 instructions and a branch for something that almost never happen. But we also knew that there was a cluster near the 0 that we could exploit. The problem is that we would be paying 3 cycles (1 nanosecond in our idealized processor) per branch. As our average is 97.5 nanoseconds, we have 1% improvement in almost any call (except the statistically unlikely case) if we are able to get rid of it.

Resistance is futile, that branch needs to go. ![]()

In C and Assembler and almost any low level architecture like GPUs, there are 2 common approaches to optimize this kind of scenarios.

- The ostrich method. (Hide your head in the sand and pray it just work).

- Use a lookup table.

The first is simple, if you don’t check and the algorithm can deal with the condition in the body, zero instructions always beats more than zero instruction (this case is a corner case anyways, no damage is dealt). This one is usually used in massive parallel computing where the cost of instructions is negligible while memory access is not. But it has its uses in more traditional superscalar and branch-predicting architectures (you just don’t have so much instructions budget to burn).

The second is more involved. You need to be able to “index” somehow the input and pay with less instructions than do the actual branches (at a minimum of 1 nanosecond each aka 3 instructions of our idealized processor). Then create a branch table and jump to the appropriate index which itself will jump to the proper code block using just 2 instructions.

Note: Branch tables are very well explained at http://en.wikipedia.org/wiki/Branch_table. If you made it that far you should read it, don’t worry I will wait.

As the key take away if your algorithm have a sequence of 0..n, you are in the best world, you already have your index. Which we did ![]() .

.

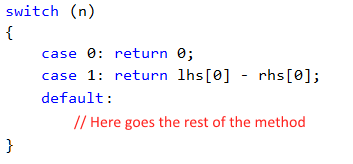

I know what you are thinking: Will the C# JIT compiler be smart enough to convert such a pattern into a branch table?

The short answer is yes, if we give it a bit of help. The if-then-elseif pattern won’t cut it, but what about switch-case?

The compiler will create a switch opcode, in short our branch table, if our values are small and contiguous.

Therefore that is what we did. The impact? Big, but this is just starting. Here is what this looks like in our code:

I’ll talk about the details of branch tables in C# more in the next post, but I didn’t want to leave you hanging too much.

More posts in "Excerpts from the RavenDB Performance team report" series:

- (20 Feb 2015) Optimizing Compare – The circle of life (a post-mortem)

- (18 Feb 2015) JSON & Structs in Voron

- (13 Feb 2015) Facets of information, Part II

- (12 Feb 2015) Facets of information, Part I

- (06 Feb 2015) Do you copy that?

- (05 Feb 2015) Optimizing Compare – Conclusions

- (04 Feb 2015) Comparing Branch Tables

- (03 Feb 2015) Optimizers, Assemble!

- (30 Jan 2015) Optimizing Compare, Don’t you shake that branch at me!

- (29 Jan 2015) Optimizing Memory Comparisons, size does matter

- (28 Jan 2015) Optimizing Memory Comparisons, Digging into the IL

- (27 Jan 2015) Optimizing Memory Comparisons

- (26 Jan 2015) Optimizing Memory Compare/Copy Costs

- (23 Jan 2015) Expensive headers, and cache effects

- (22 Jan 2015) The long tale of a lambda

- (21 Jan 2015) Dates take a lot of time

- (20 Jan 2015) Etags and evil code, part II

- (19 Jan 2015) Etags and evil code, Part I

- (16 Jan 2015) Voron vs. Esent

- (15 Jan 2015) Routing

Comments

So what's the point of the these optimizations of 1%, 3% ... etc, do they have a sizable impact on the Raven?

Are you preparing for some benchmarking competition against other products, or is this just a push squeeze the most out of Raven?

Catalin, A 3% here, and a 2% there adds up to quite a lot. Especially when we are talking about making optimizations to methods that are calls tens of millions of times. In the case of compare, that is called all the time in our code (for every get request, for every put, for every scan, pretty much all the time). Even small improvements will yield good return.

The difference between 200 req/sec and 206 req/sec isn't that big, but it means another half a million more requests a day per node, for example. And the total result isn't just a 3% improvement overall.

@Catalin just to put it in context. A bulk insert of 50000 small documents will cause (if I don't recall wrongly, I am being conservative here) more or less 3M calls to this function. Or about half a second every 50000 elements. And it is not even a much used function when bulk inserting because it is a very optimized path which avoid searching the trees as much as possible.

Are you sure the switch provided a speedup? Last time I looked small switches were compiled to if-else by the JIT. The threshold was 2 or 3 so I'm not sure what you have been getting here.

Also, a table-based switch has quite a few instructions in the header. At the very least there is a branch for the default case. It has an indirect branch (to a dynamic location). Those are not good for performance. In all cases it is more expensive than a single predicted branch.

A 100% predicted branch (like the n == 0) branch should cost 1-2 cycles that maybe even overlap with other work so they are nearly free.

The methodology of looking at IL to determine what to do is really unsound. Look at the generated x64.

As an idea you could have a switch with, say, 20 cases for [0, 19] and for each of those you hard-code the comparison in a loop-free way. That might hurt because of code size though.

Tobi, No, we are pretty sure that this was responsible for a significant speed up :-) And the JIT doesn't turn switch to if/else. See: http://www.dotnetperls.com/if-switch-performance

And code size is an issue that we need to take into account. In particular, we want to make sure that the entire method can fit inside L1 easily, otherwise we will need to move it back and forth when it is called.

Tobi, Note that the difference is whatever you have a jump table that is full or sparse. A sparse jump table would likely go into if/else mode. But a full jump table is more efficent and will be the one used.

OK, the switch is only being removed if there are at most two cases (tested it just now).

Still, the switch header is 8 instructions including one direct and one indirect branch. Wherever the speedup came from it is unexplained why the switch would cause it. The switch is inferior by every measure. Any idea why it would be faster in this particular case?

Tobi, You might be counting instructions, but you are ignoring the fact that we are reducing the number of branches that the CPU has to deal with

On my count the branch count increased from one to two including one indirect branch.

Tobi, Where do you see that? The compiler is probably doing something like this:

jumpTable[ n ] ();

No branching at all.

Tobi, Note that what we are counting is the conditional branching. That is what we care about, because it means that the CPU need to go into speculative mode, and maybe throw stuff out if the prediction turned out to be wrong

In order to answer such question I advise looking at thex86 code. There you see:

[code]

if ((uint)n >= 2) goto default; //branch

var switchOffset = table[n];

var switchAddr = switchOffset + 0x123456;

goto switchAddr; //branch

[/code]

A jump to a dynamic address required prediction. That's why it counts as a dynamic branch and not as a jump. It stalls the speculation pipeline.

@tobi the stalls do exists as you noted, however there is a very important distinction. We are optimizing for RavenDB not for a general purpose memcmp routine. So you have to assess the behavior based on your typical workload.

The compare is usually used to decide in what direction we will be moving on the trees, therefore it has 2 important characteristics:

While this may change in the (near) future due to polyglot persistence. Today we can exploit that, and that is why the optimized method is so counterintuitive (assembler wise).

On the other hand, even Thomas' method (http://ayende.com/blog/169828/excerpts-from-the-ravendb-performance-team-report-optimizing-memory-comparisons-size-does-matter) which was the inspiration for a new iteration cannot exploit the clusters near 0. I will probably write a follow up about that too.

About the switch, in assembler it is pretty tight. While we use the assembler output for optimization purposes, I wasn't showing the assembler output because the serie was long enough even without looking at the different assembler alternatives, but for reference this is the switch: http://imgur.com/j2xqlfs

@tobi One thing I didnt mention but makes sense to, is the following; as long as you dont touch memory (L1, L2, etc) you have a lot of register instructions to burn. Not that many as in GPUs but the budget is not negligible and you can exploit that.

For example, while the switch itself may be composed of 8 instructions and also a potential branch misprediction, note that all of them are register based. That means that for each L1-hit memory read, you still have 4 cycles to burn (non-math register ops typically are in the 1-cycle cost range).

Both memcmp and memcpy are memory read/write intensive, those reads will hide many branch mispredictions and/or extra operations when performed on registers. Finding ways to exploiting that imbalance is the trick we used here.

Needless to say, I am hating not having access to an intrisec to do prefetching enough to actually suggest that as a future enhancement of the CLR. https://github.com/dotnet/roslyn/issues/166#issuecomment-72403213

It should have read: "That means that for each L1-miss memory read"

Comment preview