Excerpts from the RavenDB Performance team reportDo you copy that?

Note, this post was written by Federico. This series relies heavily on the optimization work we did on the optimizing memory comparisons, if you haven’t read it, I suggest you start there instead.

If memory compare is the bread, memory copy is the butter in an storage engine. Sooner or later we will need to send the data to disk, in the case of Voron we use memory mapped files so we are doing the copy ourselves. Voron also uses a copy-on-write methodology to deal with tree changes, so there are plenty of copy commands around. ![]()

Differently from the memory compare case where an optimized version already existed. In the case of memory copy we relied on a p/invoke call to memcpy because we usually move lots of memory around and it is hard to compete in the general case with an assembler coded version. Scrap that, it is not hard, it is extremely hard!!! Don’t underestimate the impact that SSE extensions and access to the prefetching operation can have on memcpy. [1]

However, usually not all memory copies are created equally and there is plenty opportunity to do some smart copy; our code wasn’t exactly an exception to the rule. The first work involved isolating the places where the actual “big copies” happen, especially where the cost of actually doing a p/invoke call gets diluted by the sheer amount of data copied [2] in the statistically representative case. You guessed right, for that we used our FreeDB example and the results were very encouraging, there were a couple of instances of “big copies”. In those cases using the P/Invoke memcpy was not an option, but for the rest we had plenty of different alternatives to try.

The usual suspects to take over our P/Invoke implementation for small copies would be Buffer.BlockCopy, the MSIL cpblk operation and Buffer.Memcpy which is internal, but who cares, we can still clone it ![]() .

.

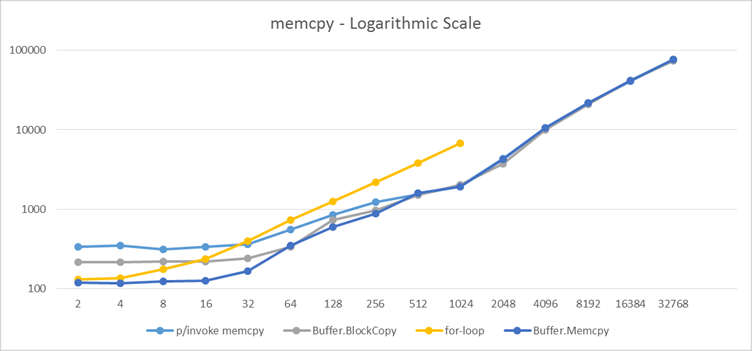

The general performance landscape for all our alternatives is:

What we can get from this is: Buffer.Memcpy should be the base for any optimization effort until we hit the 2048 bytes where all behave more or less in the same way. If we have to choose between Buffer.BlockCopy and memcpy though, we will select the latter because when running in 32 bits the former is pretty bad. [3]

Having said that, the real eye opener here is the for-loop which is always a bad bet against Buffer.Memcpy. Specially because that’s usual strategy followed when copying less than 32 bytes.

There is also another interesting tidbit here, Buffer.Memcpy has some pretty nasty discontinuities around 16 bytes and 48 bytes.

Size: 14 Memcpy: 128 Stdlib: 362 BlockCopy: 223 ForLoop: 213 Size: 16 Memcpy: 126 Stdlib: 336 BlockCopy: 220 ForLoop: 235 Size: 18 Memcpy: 170 Stdlib: 369 BlockCopy: 262 ForLoop: 303 Size: 20 Memcpy: 160 Stdlib: 368 BlockCopy: 247 ForLoop: 304 Size: 22 Memcpy: 165 Stdlib: 399 BlockCopy: 245 ForLoop: 312 Size: 44 Memcpy: 183 Stdlib: 499 BlockCopy: 257 ForLoop: 626 Size: 46 Memcpy: 181 Stdlib: 563 BlockCopy: 264 ForLoop: 565 Size: 48 Memcpy: 272 Stdlib: 391 BlockCopy: 257 ForLoop: 587 Size: 50 Memcpy: 342 Stdlib: 447 BlockCopy: 290 ForLoop: 674 Size: 52 Memcpy: 294 Stdlib: 561 BlockCopy: 269 ForLoop: 619

What would you do here?

[1] With RyuJIT there are new vectorised operations (SIMD). We will certainly look for opportunities to implement a fully managed version of memcpy if possible.

[2] For a detailed analysis of the cost of P/Invoke and the Managed C++/CLI Interface you can go the this article: http://www.codeproject.com/Articles/253444/PInvoke-Performance

[3] For detailed metrics check: http://code4k.blogspot.com.ar/2010/10/high-performance-memcpy-gotchas-in-c.html

More posts in "Excerpts from the RavenDB Performance team report" series:

- (20 Feb 2015) Optimizing Compare – The circle of life (a post-mortem)

- (18 Feb 2015) JSON & Structs in Voron

- (13 Feb 2015) Facets of information, Part II

- (12 Feb 2015) Facets of information, Part I

- (06 Feb 2015) Do you copy that?

- (05 Feb 2015) Optimizing Compare – Conclusions

- (04 Feb 2015) Comparing Branch Tables

- (03 Feb 2015) Optimizers, Assemble!

- (30 Jan 2015) Optimizing Compare, Don’t you shake that branch at me!

- (29 Jan 2015) Optimizing Memory Comparisons, size does matter

- (28 Jan 2015) Optimizing Memory Comparisons, Digging into the IL

- (27 Jan 2015) Optimizing Memory Comparisons

- (26 Jan 2015) Optimizing Memory Compare/Copy Costs

- (23 Jan 2015) Expensive headers, and cache effects

- (22 Jan 2015) The long tale of a lambda

- (21 Jan 2015) Dates take a lot of time

- (20 Jan 2015) Etags and evil code, part II

- (19 Jan 2015) Etags and evil code, Part I

- (16 Jan 2015) Voron vs. Esent

- (15 Jan 2015) Routing

Comments

@Federico: it appears that a similar strategy to the memcmp problem is a winner here. This obviously very closely mirrors what Buffer.Memcpy is doing, without caring about alignment. However instead of the P/Invoke to memcpy for larger sizes, at least on my system a delegate call to a generated CPBLK IL instruction is substantially faster (up to at least average buffer sizes of 8K).

Again, I just grabbed the latest copy from Voron's MemUtil source and added that to a little bench: https://gist.github.com/anonymous/ae90b03efd9082d781f1

From the results you can see that <disclaimer> on my system in this microbench,</disclaimer> for small sizes the switch over the first 16 bytes, accessing bytes in memory sequential order and not doing any jumps, a tight loop for sizes < 1024 and CPBLK for larger buffers is a winner on x64.

Given the bad press for CPBLK on x86, I found the results there rather surprising. It starts to be faster than anything else at around an average buffer size of 24 bytes.

Also, interestingly, vectorised implementations are not necessarily superior, because it appears new intel processors are heavily optimized to do fast 'rep movsb' as a preferred copy implementation. Refer to a discussion (with some comparisons) here: https://forums.handmadehero.org/index.php/forum?view=topic&catid=4&id=142

@Alex, I wrote this 3 weeks ago. Lots of things happened since then. I like the CPBLK alternative because until 512 in my tests here (I will have to confirm that in fourth generation i7) is faster than the alternatives. And if that continue to be true in i7 we will surely use that for lower than 512 bytes.

However, the current measurements with the very latest version (committed in my branch yesterday) shows this:

1024 MemUtils.Copy : 841 ms, 8,50 GB/s, 1,50 times fastest MemUtils.Current : 658 ms, 10,87 GB/s, 1,18 times fastest CPBLK Raw : 774 ms, 9,24 GB/s, 1,38 times fastest CPBLK : 639 ms, 11,19 GB/s, 1,14 times fastest memcpy : 559 ms, 12,79 GB/s, 1,00 times fastest

4096 MemUtils.Copy : 857 ms, 17,81 GB/s, 1,35 times fastest MemUtils.Current : 691 ms, 22,09 GB/s, 1,08 times fastest CPBLK Raw : 884 ms, 17,26 GB/s, 1,39 times fastest CPBLK : 860 ms, 17,75 GB/s, 1,35 times fastest memcpy : 637 ms, 23,96 GB/s, 1,00 times fastest

16384 MemUtils.Copy : 1078 ms, 28,32 GB/s, 1,06 times fastest MemUtils.Current : 1054 ms, 28,96 GB/s, 1,03 times fastest CPBLK Raw : 1416 ms, 21,56 GB/s, 1,39 times fastest CPBLK : 1404 ms, 21,74 GB/s, 1,38 times fastest memcpy : 1021 ms, 29,90 GB/s, 1,00 times fastest

You have to make a very small change to achieve this and I have already wrote a blog post about this yesterday night, but we will have to ask Oren when it is scheduled to be published ;)

Alex, The post Federico is talking about is here: http://ayende.com/blog/170114/excerpts-from-the-ravendb-performance-team-report-optimizing-compare-the-circle-of-life-a-post-mortem?key=deee125618ca474d84ba5711d1751c75

It is set to be published in two weeks, but you can get a sneak pick to it now.

@Alex I have been testing your benchmark a bit and there is something fishy it in. Somehow even if I copy the whole CPBLK code I cannot achieve the same time for 4 to 24 bytes. Therefore the must be some cache effects there that we are missing, I believe the problem is that for less than 4096 bytes you are using fixed buffers, therefore all is loaded into L1 cache.

But even if I get rid of the cache effects, the code is consistently better in my own test harness (which accounts for L1/L2 eviction). I am investigating the generated assembly as we speak.

This code is what we want to test when RyuJIT is released: apparently the fastest version of aligned memcpy known to man. :) http://www.wenda.io/questions/115387/very-fast-memcpy-for-image-processing.html

Thanks for the sneak preview. Using this change (i.e. adding these attributes to memcpy) and modifying "MemUtilsCopy" to mirror the "MemUtils.Compare" from the sneak preview, I see memcpy and "MemUtilsCopy" perform roughly the same as the "CPBLK Raw" variant on all sizes. The optimization for smaller buffer sizes (<1024) in the "CPBLK" variant is still the fastest up to around 1K sizes though on my system (Core i7-3615 QM). See https://gist.github.com/anonymous/b9c983d144776cd67ca8

So clearly results will vary a bit with different architectures.

btw, MS releasing CoreCLR into the open is pretty awesome. This for sure will give me some hours of fun while browsing through some interesting stuff.

@federico. regarding cache effects: if I increase the minimum size of the buffers to 8 * 4096, I still get the same results.

or more extreme, increasing to 128 * 4096, there is still the same relative speeds, although overall performance drops significantly

Found out the difference. It seems that the direct call to the unmanaged memcpy causes the call to incur in using 3 registers more. That believe it or not causes the JIT to generate a sub-par switch statement (in order to avoid using more registers) and causes the difference. I fixed it calling BulkCopy which is managed instead.

http://i.imgur.com/KorbNMq.png

These are the results on our test harness that ensures the CPU cannot guess the memory location or use the L1/L2 cache (that's why megabytes per second is smaller) and also pays the cost of fixing the array to be "consistent" with BlockCopy which doesn't require it but has to do it internally.

I am still waiting for the benchmarks on the i7 to know where to do the cut-over... As you can see the 1024 threshold is not always as efficient, so we are going to choose something in the middle. ;)

@Federico, that image looks decidedly odd. Why would throughput drop that dramatically after around 1K and again after 64K?

Check out this beautiful sigmoid, which does what I would expect (increase with buffer size and start to plateau). http://i.imgur.com/Fl9wcB5.png.

Cache boundaries can explain that. We dont have the ability to tell the processor that we are not going to touch the written memory, therefore it will evict the L1/L2/L3 lines and polute the cache. The read/write cycle of big chunks of memory will in the end can cause that difference.

Our benchmark may be wrong (nobody is perfect) but I would certainly distrust a benchmark that does not shows those boundaries. Not having those discontinuiting means that you cannot compare 4K with 16K and so on, because the general scenario is different for each one of those. Ensuring that the CPU must read to cache (cannot use cached data) is a good thing.

BTW I didnt consider it odd, because I have seen other benchmarks of memcpy that has exactly the same behavior.

Comment preview