Excerpts from the RavenDB Performance team report: Optimizing Compare – Conclusions

Note: this post was written by Federico. We are done with the optimizations, but the question remains: was all this trouble worthwhile?

If you remember the first post in this series, I said that, based on our bulk insert scenario, each comparison takes 97.5 nanoseconds on average. First, let's define what that means.

The most important thing is to understand the typical characteristics of this scenario:

- All the inserts are in the same collection.

- Inserts happen in batches, with sequential ids.

- Document keys share their first 10 bytes (which is very bad for us, but also explains why we have a cluster around 13 bytes).

- No map-reduce keys (which are scattered all over the place).

- No indexing happens.
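The list above ties the shared 10-byte prefix to a cluster around 13 bytes. Here is one plausible reading, sketched in Python under our own assumptions. We take a hypothetical key format: the 10-byte prefix `customers/` followed by zero-padded sequential ids. Under that format, a memcmp-style compare between neighbouring keys only finds its first difference at offset 12 or 13, so every comparison has to walk past the entire shared prefix first. The key format and the helper names `first_difference` and `compare` are illustrative, not RavenDB's.

```python
def first_difference(a: bytes, b: bytes) -> int:
    """Offset of the first differing byte, or the shorter length if one key is a prefix of the other."""
    n = min(len(a), len(b))
    for i in range(n):
        if a[i] != b[i]:
            return i
    return n


def compare(a: bytes, b: bytes) -> int:
    """memcmp-style lexicographic compare: negative, zero or positive."""
    i = first_difference(a, b)
    if i == min(len(a), len(b)):
        # One key is a prefix of the other (or they are equal): shorter sorts first.
        return len(a) - len(b)
    return a[i] - b[i]  # indexing bytes yields ints


# Hypothetical keys: a shared 10-byte prefix plus sequential, zero-padded ids.
keys = [b"customers/%04d" % n for n in range(1000, 1020)]

# Offset at which each pair of neighbouring keys first differs.
offsets = [first_difference(keys[i], keys[i + 1]) for i in range(len(keys) - 1)]
print(offsets)
```

Every offset lands past the 10-byte prefix. Most pairs differ only in the last digit (offset 13), and the occasional carry, such as 1009 to 1010, differs one byte earlier.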

In short, we gave ourselves a pretty bad deal.

We are dealing with a live database here: we can control the scenario, but it is insanely difficult to control the inner tasks the way we can with micro-benchmarks. Therefore, all percentage gains use call normalization, which allows us to make an educated guess of what the gain would have been under the same scenario. The smaller the difference in call counts, the less noise or jitter in the measurement.
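The post does not spell out the normalization formula. A minimal sketch, assuming it means rescaling the "after" run to the "before" run's call count (which amounts to comparing per-call costs), could look like this. The function names and all figures are hypothetical.

```python
def per_call_ns(total_ns: float, calls: int) -> float:
    """Average cost of one call, in nanoseconds."""
    return total_ns / calls


def normalized_gain(before_ns: float, before_calls: int,
                    after_ns: float, after_calls: int) -> float:
    """Fractional improvement once both runs are scaled to the same call count.

    The 'after' total is rescaled as if it had made exactly `before_calls`
    calls, which amounts to comparing the per-call costs of the two runs.
    """
    scaled_after_ns = per_call_ns(after_ns, after_calls) * before_calls
    return 1.0 - scaled_after_ns / before_ns


# Hypothetical profiler totals for two runs of the same bulk insert.
before_ns, before_calls = 100_000_000, 1_000_000   # 100 ns per call
after_ns, after_calls = 99_000_000, 1_100_000      # 90 ns per call, but more calls

raw_gain = 1.0 - after_ns / before_ns               # about 1%: misleading
gain = normalized_gain(before_ns, before_calls, after_ns, after_calls)  # about 10%
print(f"raw {raw_gain:.1%}, normalized {gain:.1%}")
```

Comparing raw totals would credit the change with only about 1%, because the second run happened to make 10% more calls. Normalizing by call count recovers the roughly 10% per-call improvement.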

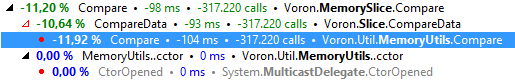

So, without further ado, this is the before-and-after comparison with call normalization.

In our nightmare scenario we get a 10% improvement: we went from 97.5 nanoseconds per compare to 81.5 nanoseconds. That is AWESOME when you realize we were optimizing an already very tight method.

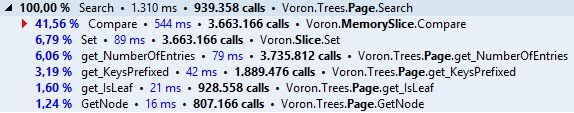

What does this mean in context? Let's take a look at the top caller in this workload: searching a page.

Before:

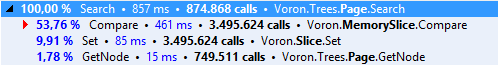

After:

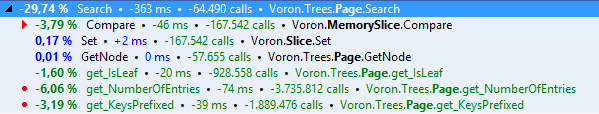

It seems that we got better, but something else is different too. Why are those getters missing? In the first batch of optimizations we asked the compiler to aggressively inline those properties, so they are now part of the call site's optimized code. A bit off-topic, but when optimizing, look for these first before going into an optimization frenzy.

This is the search method after optimization.

Our 10% improvement on the memory compare gave us almost an extra 4% in the search when bulk inserting. For a method that is called billions of times in real-life workloads, that is pretty damn good.
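The post does not say how much of the search time is spent inside the compare. A back-of-the-envelope check can still be made under a simple Amdahl's-law model, which is our assumption. The model holds everything else in the search constant and ignores effects such as the earlier inlining. Under it, the two percentages imply compare accounts for roughly 40% of search time. The helper names are hypothetical.

```python
def overall_reduction(share: float, part_reduction: float) -> float:
    """Amdahl-style estimate of the fractional drop in total time.

    share          -- fraction of total time spent in the part being optimized
    part_reduction -- fractional drop in that part's own time
    Everything outside the part is assumed to cost exactly the same.
    """
    return share * part_reduction


def implied_share(overall: float, part_reduction: float) -> float:
    """Invert overall_reduction: which share of the total would produce `overall`?"""
    return overall / part_reduction


# Figures quoted in the post: about 10% faster compare, almost 4% faster search.
compare_gain = 0.10
search_gain = 0.04
share = implied_share(search_gain, compare_gain)
print(f"compare would be roughly {share:.0%} of search time")
```

Since the search gain was "almost" 4%, the implied share is an upper-bound-ish rough figure, not a measurement.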

And this concludes the series about memory compare; I hope you have enjoyed it.

PS: With all the information provided, where do you think I will look next for extra gains?

More posts in "Excerpts from the RavenDB Performance team report" series:

- (20 Feb 2015) Optimizing Compare – The circle of life (a post-mortem)

- (18 Feb 2015) JSON & Structs in Voron

- (13 Feb 2015) Facets of information, Part II

- (12 Feb 2015) Facets of information, Part I

- (06 Feb 2015) Do you copy that?

- (05 Feb 2015) Optimizing Compare – Conclusions

- (04 Feb 2015) Comparing Branch Tables

- (03 Feb 2015) Optimizers, Assemble!

- (30 Jan 2015) Optimizing Compare, Don’t you shake that branch at me!

- (29 Jan 2015) Optimizing Memory Comparisons, size does matter

- (28 Jan 2015) Optimizing Memory Comparisons, Digging into the IL

- (27 Jan 2015) Optimizing Memory Comparisons

- (26 Jan 2015) Optimizing Memory Compare/Copy Costs

- (23 Jan 2015) Expensive headers, and cache effects

- (22 Jan 2015) The long tale of a lambda

- (21 Jan 2015) Dates take a lot of time

- (20 Jan 2015) Etags and evil code, part II

- (19 Jan 2015) Etags and evil code, Part I

- (16 Jan 2015) Voron vs. Esent

- (15 Jan 2015) Routing
