Properly getting into jail: Services with data sharing instead of RPC or messaging

The design of Macto (that is, the prison management application I’m discussing) so far seems pretty strange. Some of the constraints are common: the desire for resiliency and for each independent portion of the system to be able to work in isolation. However, the way we go about handling this is strange.

I looked up the definition of a microservices architecture and found this one from Martin Fowler:

> ..the microservice architectural style [1] is an approach to developing a single application as a suite of small services, each running in its own process and communicating with lightweight mechanisms, often an HTTP resource API.

This wouldn’t work for our prison system, because we are required to continue normal operations when we can’t communicate with the other parts of the system. A prison block may not be able to talk to the Registration Office, but that doesn’t mean it can fail. Operations must continue normally.

So RPC over HTTPS is out, but what about messaging? We can use a queue to dispatch commands / events to the other parties in the system, no?

The answer is that we can, but let’s consider how that would work. For events, it would work quite nicely and follow roughly the same model that we have now. In fact, with the RavenDB ETL process and the dissemination protocol in place, that is pretty much what we have. Changes to my local state are pushed via ETL to a shared, known location and then distributed to the rest of the system.
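To make the flow concrete, here is a minimal sketch in Python of the shape described above, assuming a purely in-memory model: each node writes only to its own local store, an ETL step pushes changed documents to a shared location, and a dissemination step copies them to everyone else. The names `Node`, `run_etl` and `disseminate` are hypothetical. This is not RavenDB's API; in RavenDB itself, ETL is configured as a server-side task rather than written as application code.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """One party in the prison (a block, the Registration Office, ...). Hypothetical model."""
    name: str
    local: dict = field(default_factory=dict)       # documents this node owns
    replicated: dict = field(default_factory=dict)  # documents received from other nodes
    _dirty: set = field(default_factory=set)        # local changes not yet pushed out

    def store(self, doc_id: str, doc: dict) -> None:
        # All writes are local; no other node needs to be reachable.
        self.local[doc_id] = doc
        self._dirty.add(doc_id)

    def run_etl(self, shared: dict) -> None:
        # Push changed local documents to the shared, well-known location,
        # tagging each copy with the node it came from.
        for doc_id in sorted(self._dirty):
            shared[doc_id] = dict(self.local[doc_id], origin=self.name)
        self._dirty.clear()


def disseminate(shared: dict, nodes: list) -> None:
    # Copy every document in the shared location to every node except its origin.
    for doc_id, doc in shared.items():
        for node in nodes:
            if doc["origin"] != node.name:
                node.replicated[doc_id] = doc
```

If `disseminate` cannot run because the network is down, each node keeps working against its own store; the other parties simply catch up once the shared location is reachable again.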

But what about commands? A command in the system can be something like “we got a warrant for releasing an inmate”. That is something that must proceed successfully. If there is a communication breakdown, generating an error message is not acceptable; in this case, someone will hand-deliver the warrant to the block and get the inmate out of the prison.

In other words, we have the notion of commands flowing in the system, but the same command can also come from the Registration Office or from a phone call or from a lawyer physically showing up at the block and shoving a bunch of papers at the sergeant on duty demanding an immediate release.
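One way to model a warrant that can arrive through any of these channels, sketched under an assumption the text does not state: that every warrant carries a unique number. The block records the warrant in its own data, and a copy arriving later through another channel is merged with it instead of being treated as a new command. `Warrant` and `BlockReleaseLog` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Warrant:
    warrant_id: str  # hypothetical: assumes each warrant has a unique number
    inmate_id: str
    channel: str     # e.g. "replication", "phone", "hand-delivered"


class BlockReleaseLog:
    """A block's own record of release warrants, whatever channel they came from."""

    def __init__(self) -> None:
        self.warrants: dict[str, Warrant] = {}
        self.channels: dict[str, set[str]] = {}

    def record(self, warrant: Warrant) -> bool:
        """Store the warrant locally; return True only the first time it is seen."""
        first_time = warrant.warrant_id not in self.warrants
        if first_time:
            self.warrants[warrant.warrant_id] = warrant
        # Remember every channel the warrant arrived through, for later verification.
        self.channels.setdefault(warrant.warrant_id, set()).add(warrant.channel)
        return first_time
```

Whichever channel delivers the warrant first starts the release; the replicated copy from the Registration Office then acts as confirmation rather than as a second release.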

All of this leads me to create a data architecture that is based on local data interaction with a backbone to share it, while relying on external channels to route around issues between parts of the system. Let’s consider the case of releasing an inmate. The normal way it works is that the Registration Office prepares a list of inmates that need to be released today.

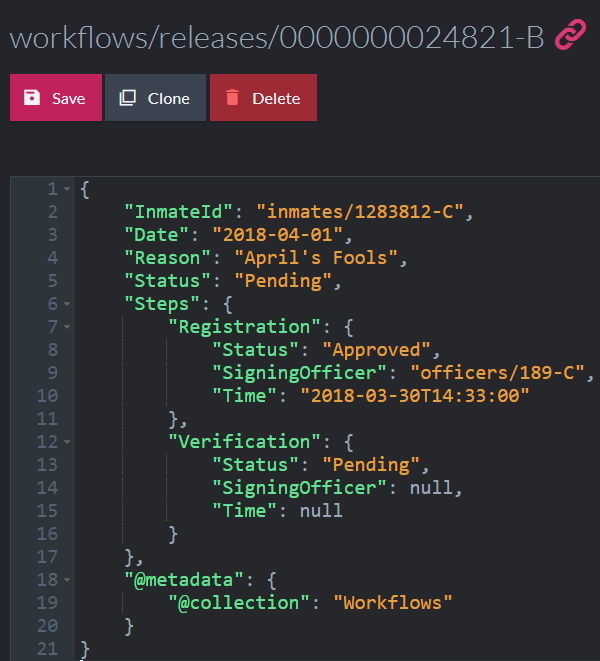

Here is one such document, which is created on the Registration Office server.

This then flows via ETL to the rest of the prison. Each block will get the list of inmates that it needs to process for release, and the Command & Control Center is in charge of making sure that they are processed and released properly. I’ll have a separate post to talk about such workflows, because they are interesting, but the key here is that we don’t actually have a command being sent.
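A rough illustration of the release list and of splitting it so that each block gets only its own inmates. The document shape, the `block` field and `split_by_block` are assumptions made for illustration. RavenDB ETL tasks use their own transformation scripts, so this shows only the logic, not how it would be configured.

```python
from datetime import date

# Hypothetical shape of the daily release list created on the Registration Office server.
release_list = {
    "id": "release-lists/2018-03-08",
    "date": date(2018, 3, 8).isoformat(),
    "inmates": [
        {"inmate_id": "inmates/1", "block": "A"},
        {"inmate_id": "inmates/2", "block": "B"},
        {"inmate_id": "inmates/3", "block": "A"},
    ],
}


def split_by_block(doc: dict) -> dict:
    """ETL-style transform: derive one release document per block."""
    per_block: dict = {}
    for entry in doc["inmates"]:
        block_doc = per_block.setdefault(entry["block"], {
            "id": f'{doc["id"]}/block-{entry["block"]}',
            "date": doc["date"],
            "inmates": [],
        })
        block_doc["inmates"].append(entry["inmate_id"])
    return per_block
```

Note that nothing here asks a block to do anything; each block simply ends up with a document describing work that is now in its own data.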

Instead, we create a document starting the release workflow. This is exactly the same as publishing an event, and it will be received by the other parties in the prison. At this point, each party is going to do its own thing. For example, the Command & Control Center will verify that the bus to the nearest town is ordered, and each block’s sergeant is in charge of getting the inmates processed out of the block and into the hands of the Registration Office, which will handle things such as returning personal effects, a second verification that the right inmate is released, etc.
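The same replicated document can drive every party’s work without any reply flowing back to its sender. A minimal sketch, assuming hypothetical handler functions for the three parties mentioned above; each handler reads only the document it received and each party appends the resulting tasks to its own local list.

```python
from typing import Callable

Handler = Callable[[dict], list]


def command_center(doc: dict) -> list:
    # Makes sure transport is arranged for the release day.
    return [f"order bus to nearest town for {doc['date']}"]


def make_block_sergeant(block: str) -> Handler:
    def handler(doc: dict) -> list:
        # Only inmates housed in this block concern this sergeant.
        return [f"process out {e['inmate_id']}" for e in doc["inmates"] if e["block"] == block]
    return handler


def registration_office(doc: dict) -> list:
    tasks = []
    for e in doc["inmates"]:
        tasks.append(f"return personal effects to {e['inmate_id']}")
        tasks.append(f"second identity check for {e['inmate_id']}")
    return tasks


class Party:
    def __init__(self, name: str, handler: Handler) -> None:
        self.name = name
        self.handler = handler
        self.tasks: list = []

    def on_replicated(self, doc: dict) -> None:
        # Called when replication delivers a document; nothing is sent back.
        self.tasks.extend(self.handler(doc))
```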

Unlike a command, which typically has a success / error code, we rely on this notion along with timed alerts to verify that things happen. In other words, the Command & Control Center will be alerted if the inmate isn’t out of the prison by 10:00 AM and will take steps to check why that has happened. The Registration Office will also do its own checks at the close of the day, to ensure that no inmates are still in the prison when they shouldn’t be.
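Both timed checks can be expressed purely against local data. This sketch assumes the Command & Control Center holds a replicated set of inmates due for release today and a set of those already released; the function names and the set-based model are hypothetical, while the 10:00 AM deadline is the one from the text.

```python
from datetime import datetime, time

RELEASE_DEADLINE = time(10, 0)  # the 10:00 AM deadline from the text


def overdue_releases(due_today: set, released: set, now: datetime,
                     deadline: time = RELEASE_DEADLINE) -> list:
    """Command & Control check: who should be out by now but isn't?"""
    if now.time() < deadline:
        return []  # too early to raise an alert
    return sorted(due_today - released)


def end_of_day_check(due_today: set, still_inside: set) -> list:
    """Registration Office check at close of day: an independent second look."""
    return sorted(due_today & still_inside)
```

Neither function waits on a response from anyone; an alert is simply the difference between what the local data says should have happened and what it says did happen.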

Note that this involves multiple parties cooperating with each other, but none of them explicitly relies on a request / response style of communication.

This is message passing, and also event publication, but I find it a much more passive way of working. Instead of explicitly interacting with the outside world, you are always operating on your own data, and additional work and tasks show up as they are replicated from the other parts of the system.

Another benefit of this approach is that it also ensures that there are multiple and independent verification steps for most processes, which is a good way to avoid making mistakes with people’s freedom.

More posts in "Properly getting into jail" series:

- (19 Mar 2018) The almighty document

- (16 Mar 2018) Data processing

- (15 Mar 2018) My service name in Janet

- (14 Mar 2018) This ain’t what you’re used to

- (12 Mar 2018) Didn’t we already see this warrant?

- (09 Mar 2018) The workflow of getting an inmate released

- (08 Mar 2018) Services with data sharing instead of RPC or messaging

- (07 Mar 2018) The topology of sharing

- (06 Mar 2018) Data flow

- (02 Mar 2018) it’s not a crime to be in an invalid state

- (01 Mar 2018) Physical architecture

- (28 Feb 2018) Counting Inmates and other hard problems

- (27 Feb 2018) Introduction & architecture

Comments

There's a general agreement among software creators and their customers that software replaces paper and going paperless is A Good Thing ™. And then, after an IT solution is introduced, everyone starts complaining that the papers were so much better to work with and allowed for much greater flexibility. Especially for order handling workflows, where you could print copies of the order, hand them out to the proper people and be sure they have everything they need to do the job. And you could always put some additional info on the papers when there was a need for special handling. Plus, papers are so much easier to handle for production or service staff when they don't have to fumble with some computer/terminal to find the information and can just put a piece of paper on the table and concentrate on doing actual work instead.

And your approach is the same idea applied to software design - make a digital piece of paper that almost physically follows the process, is always there and has everything necessary to do the work, then pass it around and just make sure it's not lost somewhere in between. No central registry, no central decision about where the papers go, just do your task and pass the message to the next station.

Rafal, Yes, that is very much the model that I have. I think that you put this beautifully. The whole idea is to ensure that we have the same flexibility as we used to have, but also the advantages of not having to actually deal with paper, having things searchable, archivable, globally visible, etc.

One of my users worked with paper for 35 years (financial sector), and when the department went digital, which I implemented, he continued to print out every single record and work off the paper, then update the digital version, basically ending up duplicating all his work. He didn't feel that the work existed if it wasn't in his filing cabinet. Every year Iron Mountain had to store (or perhaps shred) boxes of all his superfluous printouts.

Peter, There is a sense of trust that is very hard to replicate in a computer system. I can absolutely understand the desire to have a physical copy of such records.
