In our prison system, we have a lot of independent parts, by design. Each of them is expected to work independently of the rest of the system, but also to cooperate with it. That typically requires a particular mindset when designing the application.

Let’s lay out the different aspects of integration between the various pieces, shall we?

- Each part of the system should be able to run completely independently from the other pieces.

- There are offline methods of communication (literally a guy walking down to the block with a piece of paper) that can serve as a backup communication plane but can also bypass the system entirely (a warrant being served directly to the block’s officers).

- There are two distinct options for communication: commands (release this inmate, ensure this inmate is ready to go to court on a given date) and notifications (inmate count, status, etc.).

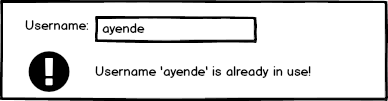

- We trust, but verify, each piece of information that we receive.

The first point seems like a pretty standard requirement, but in combination with the second point, we get into a particularly interesting issue. We may have the same information entered into the system by multiple parties at different times.

For example, a warrant to release an inmate might be served directly to the block’s officer. The release is processed, and then the warrant arrives at the Registration Office, which will also enter it. At some later time, this data is merged and we need to ensure that it makes sense to the people reading it.
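To make the merge problem concrete, here is a minimal sketch of collapsing two entries of the same warrant into one record. It assumes that the paper warrant carries a stable identifier that both parties type in (`warrant_id` here); that identifier, the party names and all field names are hypothetical and only serve the illustration.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class WarrantEntry:
    warrant_id: str       # assumed: a stable number printed on the paper warrant
    inmate_id: str
    action: str           # e.g. "release"
    entered_by: str       # hypothetical party names, e.g. "block-c"
    entered_at: datetime


def merge_entries(entries):
    """Collapse entries that describe the same warrant into one record.

    The earliest entry supplies the details; every party that entered
    the warrant is kept in `sources` so readers can see where it came from.
    """
    merged = {}
    for entry in sorted(entries, key=lambda e: e.entered_at):
        record = merged.setdefault(entry.warrant_id, {
            "warrant_id": entry.warrant_id,
            "inmate_id": entry.inmate_id,
            "action": entry.action,
            "first_entered_at": entry.entered_at,
            "sources": [],
        })
        if entry.entered_by not in record["sources"]:
            record["sources"].append(entry.entered_by)
    return list(merged.values())


# The block processed the release in the morning; the Registration
# Office entered the same warrant a few hours later.
entries = [
    WarrantEntry("W-1001", "inmates/17", "release", "registration-office",
                 datetime(2018, 3, 2, 14, 30)),
    WarrantEntry("W-1001", "inmates/17", "release", "block-c",
                 datetime(2018, 3, 2, 9, 15)),
]
records = merge_entries(entries)
```

The person reading the merged data sees a single release, first recorded by the block and later confirmed by the Registration Office, instead of two releases of the same inmate.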

The offline communication plane is also a very important design consideration for a system that reflects the real world. It means that we don’t have to provide too complex an infrastructure for surviving in production. In fact, given that a prison is hardly going to have a top-notch technical operations team (they might have a top-notch operations team, but that refers to something quite different), we don’t want to build something that relies on good communications.

To make sense of such a system, we need to define data ownership and data flow between the various systems. Because this is a really complex system, we’ll take a few examples and analyze them properly.

- The legal status of an inmate.

- The location of an inmate.

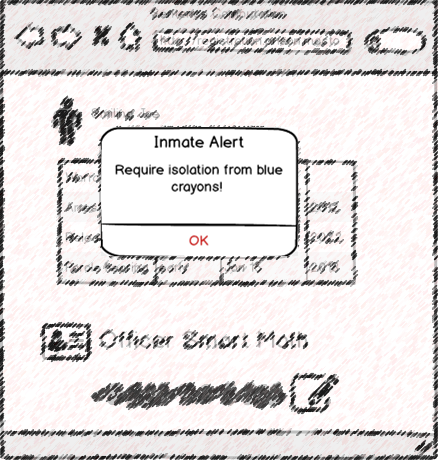

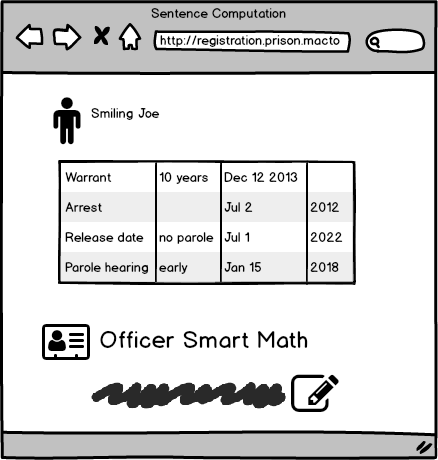

What is the meaning of legal status? It means under what warrant the inmate is held in the prison (held until trial, a 48-hour hold, a final judgement). At its simplest, it is the date on which this person should be released. But in practical terms, this can be much more complex and may include conditions on where this inmate can be held, what they can do, etc.

Everything about the legal status of an inmate is the responsibility of the Registration Office. Any movements of inmates into or out of the prison must go through the Registration Office. Actually, this isn’t quite true. Any movement of an inmate from the responsibility of the prison must go through the Registration Office. But the physical location of the inmate is the responsibility of the block to which the inmate was assigned.

A good example of this would be an inmate who has been hospitalized. They are not physically inside the prison, but the prison is still responsible for them. The Registration Office doesn’t usually care about such details, because there isn’t a change in who is in charge of the inmate. Sometimes they do care, though: for example, if the inmate has a court date that they’ll miss, the Registration Office needs to notify the court.

This is complex, but this is also the real world, and we need to manage this complexity. So, let’s define the ownership rules and data flow behavior:

- Legal status is owned by the Registration Office and is disseminated from there to all interested parties.

- The location of an inmate and their current physical status are owned by the block they are assigned to and are disseminated from there to all interested parties.

- The assignment of an inmate to a particular block is also interesting. This piece of information is owned by the Registration Office, but it is not their (sole) decision. This may take a bit of explaining.

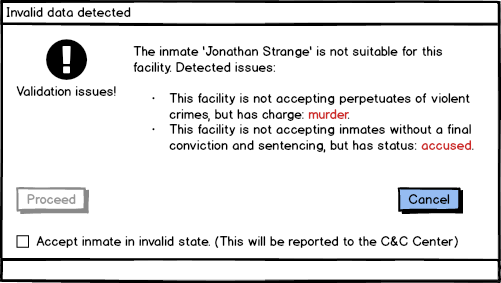

The block an inmate is assigned to is determined by many factors: the legal status of the inmate, the previous / expected behavior of this inmate, whether the inmate needs to be isolated from / kept together with certain people, information / recommendations from the intelligence office, court decisions, the free space available in each block, the inmate’s medical status and many other details that are not important here.

The Registration Office will usually make the initial placement of an inmate, but this is not their decision; there is a workflow involved that has input from way too many parties. The official decision is in the hands of the prison commander, but recording this decision (and the data ownership of it) is in the hands of the Registration Office.
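The ownership rules described so far can be summarized as a small lookup that decides whether an incoming update should be accepted as authoritative. This is only a sketch: the field names and party names are hypothetical, and a real system would also need to deal with the offline paths mentioned earlier.

```python
# Hypothetical field and party names; the ownership rules themselves
# follow the text: the Registration Office owns the legal status and the
# record of the block assignment, the assigned block owns the location
# and physical status.
OWNERS = {
    "legal_status": "registration-office",
    "block_assignment": "registration-office",
    "location": "assigned-block",
    "physical_status": "assigned-block",
}


def owner_of(field, assigned_block):
    """Resolve the owning party for a field of a particular inmate."""
    owner = OWNERS[field]
    return assigned_block if owner == "assigned-block" else owner


def accept_update(field, sender, assigned_block):
    """Only the owner of a piece of data may publish changes to it."""
    return sender == owner_of(field, assigned_block)


# An inmate assigned to block C: the block reports the location,
# the Registration Office reports the legal status.
block_reports_location = accept_update("location", "block-c", "block-c")
office_reports_location = accept_update("location", "registration-office", "block-c")
office_reports_status = accept_update("legal_status", "registration-office", "block-c")
```

Note that the prison commander does not appear here. The commander makes the assignment decision, but the data about that decision is owned by the Registration Office, which records it.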

Okay, enough domain knowledge, let’s talk about the technical details, shall we? I’m sorry that I have to do such an info dump, and I’m trying to contain it to relevant pieces, but if I don’t include the semantics of what we are doing, it will make very little sense or be extremely artificial.

The legal status of inmates in the Registration Office needs to be sent to other parties in the prison. In particular, all the blocks and the Command & Control Center.

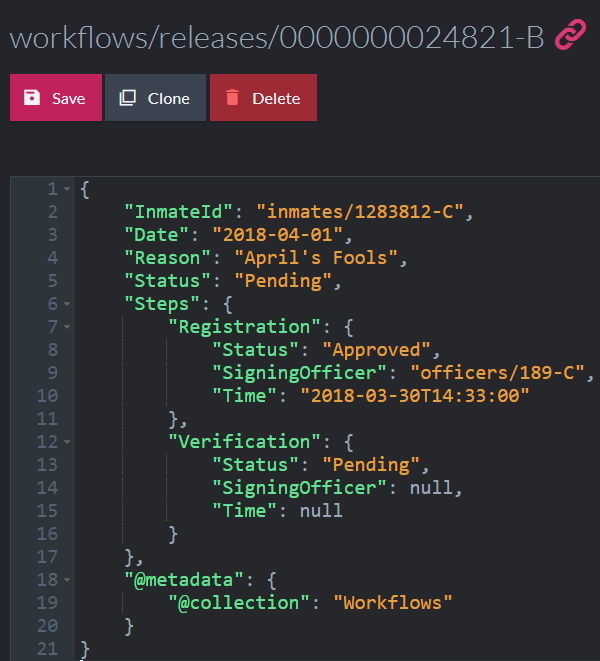

We can deal with this by defining the following RavenDB ETL process from the Registration Office:

What this does is simply define the data that we’ll share with the outside world. If I were building this for real, it would probably be a lot bigger, because an inmate is a really complex topic. What is important here is that we define this as an explicit process. In other words, this is part of the service contract that we have with the outside world. The shape of the data and the way we publish it may only be changed if we contact all parties involved. Practically speaking, this usually means that we can only add data, but never remove any fields from the system.

Note that in this case, we simplify the model as we send it out. The warrants for this inmate aren’t going out, and we just pull the latest status and release dates from the most up to date warrant. This is a good example of how we avoid exposing our internal state to the outside world, giving us the flexibility to change things later.
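RavenDB ETL transformations are written as JavaScript scripts, and the original definition is not reproduced here. The Python below is only a sketch of the shape of the transformation described above: drop the warrants and publish the status and release date taken from the most recent one. All field names are hypothetical, and it assumes that "most up to date" means the warrant with the latest issue date and that every inmate has at least one warrant.

```python
from datetime import date


def to_public_inmate(inmate):
    """Build the simplified document that is shared with other parties.

    The internal list of warrants is deliberately left out; only the
    status and release date of the most recent warrant are exposed.
    """
    latest = max(inmate["warrants"], key=lambda w: w["issued_on"])
    return {
        "id": inmate["id"],
        "name": inmate["name"],
        "status": latest["status"],
        "release_date": latest["release_date"],
    }


# Hypothetical internal document, as the Registration Office might hold it.
inmate = {
    "id": "inmates/17",
    "name": "John Doe",
    "warrants": [
        {"issued_on": date(2018, 1, 5), "status": "held until trial",
         "release_date": None},
        {"issued_on": date(2018, 2, 20), "status": "final judgement",
         "release_date": date(2019, 2, 20)},
    ],
}
public = to_public_inmate(inmate)
```

Because consumers only ever see the flattened document, the Registration Office remains free to change how it stores warrants internally, as long as the published fields stay the same.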

The question now is where does this data go? RavenDB ETL will write the data to an external database, and here we have a few options. First, we can define an ETL target for each of the known parties that want this data (each of the blocks and the Command & Control Center, at this time). But while that would work, it isn’t such a great idea. We’ll have to duplicate the ETL definition for each of those.

A better option is to send the (transformed) data to a dedicated database that will be our integration source. Consider the following example:

In this case, we can have this dedicated public database that exposes all the data that the Registration Office shares with the rest of the world. Any party that wants this information can set up external replication from this database to their own. In this manner, when the Intelligence Office decides to make it known that they also need to access the inmate registration data, we can just add them as a replication destination for this database.
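The benefit of this topology can be illustrated with a toy in-memory model. This is not RavenDB’s API; the class and its methods are made up for the sketch. The point is that there is a single writer into the public database (the ETL process) and that adding a new consumer does not touch that writer at all.

```python
class PublicDatabase:
    """Toy stand-in for the dedicated integration database (not RavenDB's API)."""

    def __init__(self):
        self.docs = {}
        self.destinations = {}

    def put(self, doc_id, doc):
        """Called by the single ETL process; fans out to every destination."""
        self.docs[doc_id] = dict(doc)
        for store in self.destinations.values():
            store[doc_id] = dict(doc)

    def add_destination(self, name):
        """Register a new replication destination and let it catch up."""
        store = {doc_id: dict(doc) for doc_id, doc in self.docs.items()}
        self.destinations[name] = store
        return store


public_db = PublicDatabase()
block_c = public_db.add_destination("block-c")
public_db.put("inmates/17", {"status": "held until trial"})

# Later on, the Intelligence Office asks for the same data. Nothing
# about the ETL side changes; we only add one more destination.
intelligence = public_db.add_destination("intelligence-office")
caught_up = intelligence["inmates/17"]["status"]
public_db.put("inmates/17", {"status": "final judgement"})
```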

Another option is not to have each individual party in the prison share its own status, but to have a single shared database that each of them writes to. This can look like this:

In this case, any party that wants to share data will be writing it to the shared database, and anyone who reads it will have access to it through replication from there. This way, we define a data pipeline of all the shared data in the prison that anyone can hook up to.

This post is getting long enough that I’ll split the discussion of the actual topology of the data and the handling of incoming data into separate posts. Stay tuned.

This post is the conclusion for this series (unless I get some interesting questions). So far, I outlined how to break apart the system, the data flow and data processing inside it, a lot about the internal constraints and the business logic as it is applied. There hasn’t been a lot of code, because I wanted to keep things at the architecture level rather than do a low-level dive.
