The Guts n’ Glory of Database Internals: What the disk can do for you

I’m currently in the process of getting benchmark numbers for one of our processes, and I was watching some metrics along the way. I have mentioned before that a disk’s speed can be affected by quite a lot of things. Here are two snapshots of the metrics, taken about one minute apart during the same benchmark.

This is running on a laptop with a Samsung PM871 512GB SSD. It is not the best drive in the world, but it is certainly a respectable one.

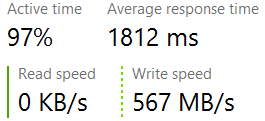

Here is the steady-state operation while we are doing a lot of write work. Note that the response time is very high; in computer terms, it is forever and a half:

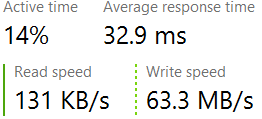

And here is the same operation, but now we need to do some cleanup and push more data to the disk. In this case, we get great performance.

But oh dear god, just look at the latency numbers we are seeing here.

Same machine, same local disk (an SSD, no less), and yet we are seeing latency numbers that aren’t even funny.
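A rough way to observe this kind of latency on your own machine is to time individual writes, each followed by an fsync, and look at the tail rather than the average. The probe below is a minimal sketch under stated assumptions: the function name `measure_fsync_latency`, the 4KB block size and the 100 samples are all hypothetical and are not the benchmark used in this post. Running it once on an idle disk and once while the disk is busy flushing should make the difference in the p99 and max numbers visible.

```python
import os
import statistics
import tempfile
import time


def measure_fsync_latency(path: str, writes: int = 100, block_size: int = 4096) -> list:
    """Append `writes` blocks of `block_size` bytes to `path`, calling fsync
    after each one, and return the latency of each write+fsync in milliseconds."""
    block = b"\0" * block_size
    latencies_ms = []
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_APPEND, 0o644)
    try:
        for _ in range(writes):
            start = time.perf_counter()
            os.write(fd, block)
            os.fsync(fd)  # block until the kernel has pushed the data to the device
            latencies_ms.append((time.perf_counter() - start) * 1000.0)
    finally:
        os.close(fd)
    return latencies_ms


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        samples = measure_fsync_latency(os.path.join(tmp, "probe.dat"))
        p99 = statistics.quantiles(samples, n=100)[98]  # 99th percentile cut point
        print(f"median {statistics.median(samples):.2f} ms, "
              f"p99 {p99:.2f} ms, max {max(samples):.2f} ms")
```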

In this case, the reason is that we are flushing the data file alongside the journal file. To let us proceed as fast as possible, we parallelize the work, so even though the data file flush is currently hogging most of the I/O, we are still able to proceed with minimal hiccups and stalls as far as the client is concerned.
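Flushing the data file on one thread while the journal keeps accepting commits on another can be sketched roughly as follows. This is a hypothetical Python illustration: the file names, the sizes and the helpers `flush_data_file_in_background` and `append_to_journal` are assumptions, and plain `os.write` calls stand in for whatever write path a real engine uses. Both fsyncs still compete for the same disk, which is exactly why journal latency spikes while the data file flush is running.

```python
import os
import tempfile
import threading


def flush_data_file_in_background(data_fd: int) -> threading.Thread:
    """Start fsync() of the data file on a worker thread and return the thread.
    CPython releases the GIL while os.fsync blocks, so commits can continue."""
    worker = threading.Thread(target=os.fsync, args=(data_fd,), daemon=True)
    worker.start()
    return worker


def append_to_journal(journal_fd: int, record: bytes) -> None:
    """Commit path: a transaction is durable once its journal record is fsynced."""
    os.write(journal_fd, record)
    os.fsync(journal_fd)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        flags = os.O_WRONLY | os.O_CREAT | os.O_APPEND
        data_fd = os.open(os.path.join(tmp, "data.db"), flags, 0o644)
        journal_fd = os.open(os.path.join(tmp, "journal.log"), flags, 0o644)
        try:
            # Simulate a large batch of dirty data waiting to be flushed.
            os.write(data_fd, b"\0" * (8 * 1024 * 1024))
            flusher = flush_data_file_in_background(data_fd)
            # Meanwhile, new transactions keep committing to the journal.
            for i in range(10):
                append_to_journal(journal_fd, f"tx-{i}\n".encode())
            flusher.join()  # wait for the data file flush to complete
        finally:
            os.close(data_fd)
            os.close(journal_fd)
```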

But this really brings home the fact that we are playing with a very limited pipe. There is little we can do to control how that pipe is used at certain points (a single fsync can flush a lot of unrelated data), and there is no way to throttle things and tell the OS: “this particular flush operation should not take more than 100MB/s; I’m fine with it taking a bit longer, as long as I have enough I/O bandwidth left for other stuff.”
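Since the OS offers no such knob, one common workaround is to throttle in user space: write the data in bounded chunks, fsync after each chunk so that no single flush is huge, and sleep whenever you get ahead of a target rate. The sketch below uses a hypothetical helper, `throttled_write_and_flush`, with an arbitrary default chunk size. It cannot stop the kernel from writing back dirty pages on its own schedule, and an fsync may still drag unrelated dirty data along with it.

```python
import os
import time


def _write_all(fd: int, chunk: bytes) -> None:
    """os.write may write fewer bytes than requested; loop until done."""
    view = memoryview(chunk)
    while view:
        written = os.write(fd, view)
        view = view[written:]


def throttled_write_and_flush(fd: int, data: bytes, max_bytes_per_sec: float,
                              chunk_size: int = 1024 * 1024) -> float:
    """Write `data` to `fd` in chunks of `chunk_size` bytes, fsync after each
    chunk, and sleep as needed so the average rate stays at or below
    `max_bytes_per_sec`. Returns the elapsed time in seconds."""
    start = time.monotonic()
    done = 0
    for offset in range(0, len(data), chunk_size):
        chunk = data[offset:offset + chunk_size]
        _write_all(fd, chunk)
        # Flush now, so each fsync has roughly one chunk of new data to push
        # instead of one giant flush at the very end.
        os.fsync(fd)
        done += len(chunk)
        # Earliest moment at which `done` bytes are allowed at the target rate.
        delay = start + done / max_bytes_per_sec - time.monotonic()
        if delay > 0:
            time.sleep(delay)
    return time.monotonic() - start
```

For example, `throttled_write_and_flush(fd, data, max_bytes_per_sec=100 * 1024 * 1024)` would cap that particular write-and-flush at roughly 100MB/s, at the cost of taking longer to finish.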

More posts in "The Guts n’ Glory of Database Internals" series:

- (08 Aug 2016) Early lock release

- (05 Aug 2016) Merging transactions

- (03 Aug 2016) Log shipping and point in time recovery

- (02 Aug 2016) What goes inside the transaction journal

- (18 Jul 2016) What the disk can do for you

- (15 Jul 2016) The curse of old age…

- (14 Jul 2016) Backup, restore and the environment…

- (11 Jul 2016) The communication protocol

- (08 Jul 2016) The enemy of thy database is…

- (07 Jul 2016) Writing to a data file

- (06 Jul 2016) Getting durable, faster

- (01 Jul 2016) Durability in the real world

- (30 Jun 2016) Understanding durability with hard disks

- (29 Jun 2016) Managing concurrency

- (28 Jun 2016) Managing records

- (16 Jun 2016) Seeing the forest for the trees

- (14 Jun 2016) B+Tree

- (09 Jun 2016) The LSM option

- (08 Jun 2016) Searching information and file format

- (07 Jun 2016) Persisting information

Comments

Have you thought about creating priority queues in front of the disks?

OmariO, to do what? That is the job of the OS. Note that it is typically GOOD to have a lot of load on the disk; many systems perform better when they have many outstanding I/O requests, because they are shallow (each request takes a long time) but deep (many concurrent requests are fine).

I mean that the OS doesn't know that you want your journal writes to complete faster; it treats all requests equally. If both the journal and the data files are located on the same disk, it may make sense to create two queues, monitor the number and kinds of requests queued to the disk, and schedule them appropriately.
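The two-queue idea in this comment could be modeled roughly as below. This is a hypothetical, in-process sketch only: the class name `TwoQueueScheduler`, the `max_journal_burst` knob and the anti-starvation rule are assumptions. It decides ordering but does not talk to a real disk, and the OS still does its own I/O scheduling underneath.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class IoRequest:
    kind: str        # "journal" or "data"
    payload: bytes


class TwoQueueScheduler:
    """Journal requests go first, but after `max_journal_burst` journal
    requests in a row, one pending data request is let through so that
    data writes are not starved."""

    def __init__(self, max_journal_burst: int = 4):
        self.journal = deque()
        self.data = deque()
        self.max_journal_burst = max_journal_burst
        self._burst = 0  # journal requests issued since the last data request

    def submit(self, req: IoRequest) -> None:
        (self.journal if req.kind == "journal" else self.data).append(req)

    def next_request(self) -> Optional[IoRequest]:
        """Return the next request to issue to the disk, or None if idle."""
        if self.journal and (self._burst < self.max_journal_burst or not self.data):
            self._burst += 1
            return self.journal.popleft()
        if self.data:
            self._burst = 0
            return self.data.popleft()
        return None
```

With `max_journal_burst=2`, three queued data writes followed by three journal writes are issued as j0, j1, d0, j2, d1, d2: the journal goes first, but data writes still make progress.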

OmariO, the problem is that we don't have enough information to do so. The disk may actually be able to handle concurrent writes effectively; in fact, most of them can. It may be a RAID 0 array or otherwise striped, so concurrent I/O will be great. There is also the fact that what you are describing is already done by the OS itself. What I'd like to do is provide some sort of priority hints, but that turns out to be very hard.

Windows actually supports file I/O priorities (although its API is somewhat arcane). You might want to try that.

Ziv, yes, Windows (and Linux) have ways to reduce the I/O priority of certain tasks, which would be great. Unfortunately, exactly how that works is a mystery, especially when you combine it with memory mapped I/O and explicit flushes to disk. That makes it very hard to reason about.
