The Guts n’ Glory of Database InternalsSearching information and file format

In the previous post, I showed how we can use a simple text based format to store records, and what it means for reading / writing those records. Searching in such a system means that you have quite a bit of work to do, since you need to scan the whole file to do so.

That isn’t going to really work for us. So we need to introduce indexes. An index is actually really simple idea. Given that we have the original file, we’ll have the following index for username:

Basically, the first column in the index is the value of the username, and those values are sorted, so searching the index has a O(logN) complexity associated with it. Once we have done that, we have the record number, and we can use that to find the position in the file and read the whole record.

All in all, this is pretty basic, right? So why am I dedicating a whole post to this topic?

Well, the issue with indexes would be so simple if we were planning on only doing them once. But we want to update them, and that lead to certain issues. While the index above shows only a few records, the actual data size we are talking about here is a million records. That gives us a total index size of about 16MB. What happens if we need to add a new username? In this case, a die hard fan whose username is ‘baseball’ ?

Well, in order to maintain the order, we’ll have to put it between the first two entries. Which will require us to move the rest of the data by that same amount. Effectively, in order to add a single entry to the index, we’ll have to write 16MB.

In other words, because files don’t allow us to dynamically add / remove data from the middle of the file without a lot of expensive I/O, we can’t really use flat files for this. It isn’t a surprise, but I wanted to start from the bare minimum and walk us up in the complexity hierarchy as we discover why we need those things.

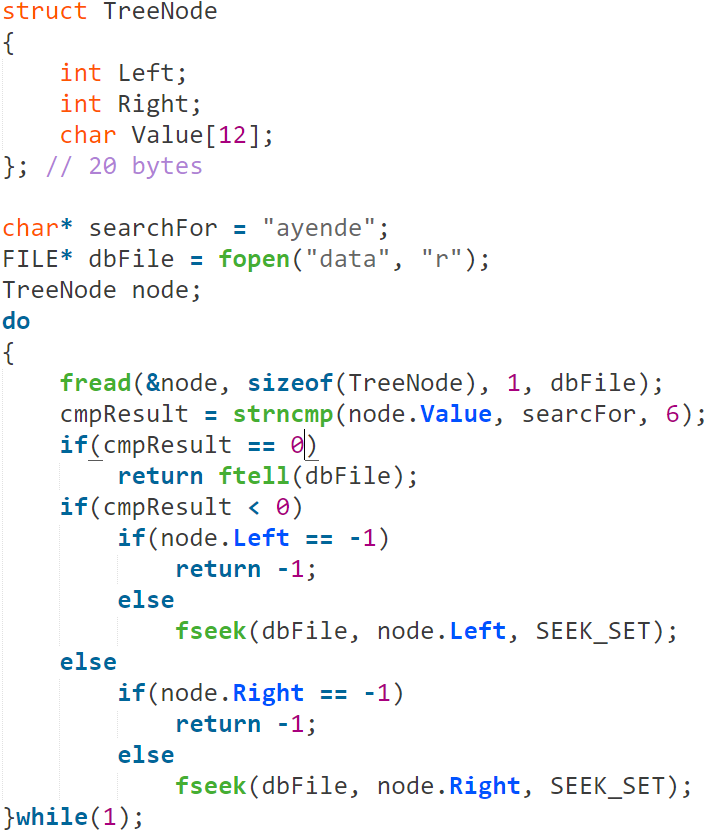

So we need to find a file format that will allow us to update things without having to shuffle the entirety of the file every time we do that. Looking at the literature, I can compare the flat file approach to having a sorted array, and it has the same problems. The typical solution for that is to use a binary search tree. In this way, we always have the root of the tree at beginning of the file, and use offsets to jump around in the file according to where we need to go.

The code for searching in this kind of file looks like this:

Note that this is with all error handling removed, and while it gives us what we wants, this solution has several issues.

To start with, if we want to have good performance, we’ll need to balance the tree on inserts / deletes, and that can be complex. Not only that, but it also means that the number of file operations that we’ll actually perform is pretty high here. This isn’t good, because files reside on (very slow) hardware, so we probably don’t want to use that.

Instead, we need to find something that will allow us to do a lot less seeks in the file (which takes about 10 – 20 ms on a standard HDD), and can handle concurrent work better (won’t be fighting over the disk head position so much). We also need to make sure that the amount of work that we have to do when we modify something is minimal. In the case of a balanced tree, the amount of work to balance it can be extremely high, and the number of places you’ll have to jump around (thus, seek) is incredible.

In the next post, we’ll discuss persistent algorithm options here, and what we should use to store the data.

More posts in "The Guts n’ Glory of Database Internals" series:

- (08 Aug 2016) Early lock release

- (05 Aug 2016) Merging transactions

- (03 Aug 2016) Log shipping and point in time recovery

- (02 Aug 2016) What goes inside the transaction journal

- (18 Jul 2016) What the disk can do for you

- (15 Jul 2016) The curse of old age…

- (14 Jul 2016) Backup, restore and the environment…

- (11 Jul 2016) The communication protocol

- (08 Jul 2016) The enemy of thy database is…

- (07 Jul 2016) Writing to a data file

- (06 Jul 2016) Getting durable, faster

- (01 Jul 2016) Durability in the real world

- (30 Jun 2016) Understanding durability with hard disks

- (29 Jun 2016) Managing concurrency

- (28 Jun 2016) Managing records

- (16 Jun 2016) Seeing the forest for the trees

- (14 Jun 2016) B+Tree

- (09 Jun 2016) The LSM option

- (08 Jun 2016) Searching information and file format

- (07 Jun 2016) Persisting information

Comments

In your code, you probably meant "if (cmpResult == 0) return ftell(dbFile);", i.e. return the position if the string was found.

Daniel, Correct, thanks, fixed

Excellent posts. Please keep going.

In your code, you probably meant "cmpResult = strncmp(node.Value, searchFor, 6);" i.e. typo in searchFor

Comment preview