The Guts n’ Glory of Database InternalsUnderstanding durability with hard disks

One of the most important aspect of a database engine is that it needs to support durability. Now, that is a personal opinion as a database author, but I consider this to be a pretty important consideration when selecting a database. Other database engines disagree, from pure in memory databases that lose all the data on restart to databases that makes “best effort” and will work as long as they don’t crash in the wrong place.

Because there are several different metrics for what durable means, I’ll provide several levels of possible durability requirements.

- None – if the application / server restarts, all the data is lost. Typically is used for in memory databases, explicitly giving up durability for performance.

- Try – the data is written to disk, but no attempt is made to make sure that it is coherent / up to date. Typically is used to startup an in memory / near in memory database from cold state.

- Crash – if the database has confirmed a write, and then immediately crashed, the data is still there. Most databases try to get to this level.

- Power loss – if the database has confirmed a write, even complete power loss of the entire machine will still keep the written data. This is where you wanna be.

Note that in all those cases, I’m talking about single node databases, distributed stuff is a lot more complex, so I’ll not touch it here.

This is when talking about durability, but there is also the notion of atomicity, in other words, a transaction that is composed of multiple operations should either be there complete (and certain if confirmed to the client) or not be there at all (rollback), there should never be a situation where some of the records went in, and some didn’t.

Finally, there is the paranoid mode for durability, in which you don’t trust the hardware, so you write to multiple locations, hash it and validate. Personally, at that level, I think that this is the purpose of the file system to verify that, and this is where the responsibility of the database ends, but if you are stuck with a poor file system choice (like FAT a decade or two ago), that was certainly something that you’ll have to consider.

At any rate, one of the major problems with gaining durability is that you have to pay so much for it. In order to actually be durable, you have to write your changes to the actual disk platter, and that is something that might actually require physical parts to move, so that is something that is very costly. How costly? A high end (15,000 RPM) hard disk can do a theoretical maximum of 250 such writes per second, and that is an extremely theoretical number. In most cases, even on high end hard disks, you’ll see a maximum of a 100 – 150 per second. You can probably double or triple that for high end SSD drive, but those are still very poor numbers, compared to the number of operations you can do in memory and in the CPU in that time frame.

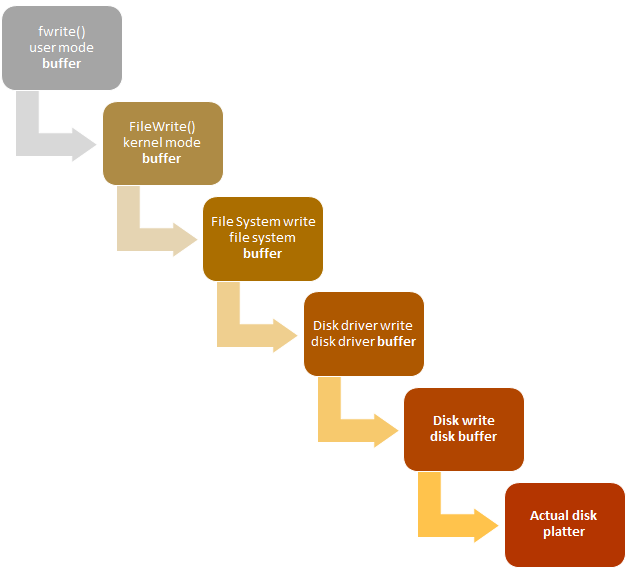

That puts a pretty hard limit on the number of times you can hit the disk, but that is not something that we can tolerate, so the process of making a write in most operation systems, looks like this:

Notice the number of buffers in the middle? Each of them is going to gather the I/O as it can before issuing a (large) write to the layer below them. With the idea that this way, we can amortize the cost of going to the physical disk among many different writes. It works, it works quite well, in fact, to the point where most of the time, you don’t really think how slow the hardware is really is.

But for databases, this is a horrendous problem. To start with, if I’m issuing a write to the disk, I have no idea when this actually hit the platter. For fun, all through this chain (which is actually simplified), each component is free to re-order the writes in any way it feels like it, so we can’t even rely on the order of the writes to ensure consistency. No, in order to protect itself from data loss / corruption in the presence of power loss, we have to tell the operating system to actually skip all those layers and flush directly to disk.

As I said, this is expensive to do. In particular, the normal way to do to make your writes, and then to to call fsync(fd) in order to flush those changes down the chain, all the way to the disk. This has a few issues, however. In particular, note that in the gap between the file system and the disk driver, we’ll lose the correlation between writes made to a particular file and any other writes made to that device. That end result is that the fsync command forces us to flush the entire disk driver buffer (and the disk buffers, etc). In production systems, that can be hundreds of MB that were innocently sitting there, slowly being written to disk, and suddenly you have this disruptive fsync that need to be written, so everything is flushed to disk, and the fsync (which you expect to be short, because you wrote on only a few dozen KB) now takes a minute, because it is actually flushing 100 MB writes from totally different process.

This post is getting long enough, so I’ll defer the actual discussion on how databases actually achieve durability to the next one, in the meantime, just consider the complexities involved in making sure that the data is on the disk, and how much of the design of modern databases is spent in optimizing just this part.

More posts in "The Guts n’ Glory of Database Internals" series:

- (08 Aug 2016) Early lock release

- (05 Aug 2016) Merging transactions

- (03 Aug 2016) Log shipping and point in time recovery

- (02 Aug 2016) What goes inside the transaction journal

- (18 Jul 2016) What the disk can do for you

- (15 Jul 2016) The curse of old age…

- (14 Jul 2016) Backup, restore and the environment…

- (11 Jul 2016) The communication protocol

- (08 Jul 2016) The enemy of thy database is…

- (07 Jul 2016) Writing to a data file

- (06 Jul 2016) Getting durable, faster

- (01 Jul 2016) Durability in the real world

- (30 Jun 2016) Understanding durability with hard disks

- (29 Jun 2016) Managing concurrency

- (28 Jun 2016) Managing records

- (16 Jun 2016) Seeing the forest for the trees

- (14 Jun 2016) B+Tree

- (09 Jun 2016) The LSM option

- (08 Jun 2016) Searching information and file format

- (07 Jun 2016) Persisting information

Comments

What do you think about the approach of always writing using WRITE_THROUGH? That way you can control durability at the individual IO level.

When you tell the OS to write a buffer to disk is the write atomic? Ordered?

Mark, Just using WRITE_THROUGH doesn't actually give you the benefit of no buffering. You need both. See here: https://msdn.microsoft.com/en-us/library/windows/desktop/aa363858(v=vs.85).aspx

This particular item is of interest:

This is mentioned only when both are valid. There is also a mention of performance benefit when using both because we skip metadata updates.

There is also another issue, we don't actually want to read from the journal (unless we crashed, which is different), so there is no real point in filling the system cache with stuff that should never be read.

OmariO,

Disk writes are atomic to a single sector (in other words, a 512 bytes write should be atomic), but in practice, you don't rely on that. You force flushes (either via the flags or via fsync) to make sure that there is proper ordering between those operations.

Note that the file system is free to reorder and break apart I/O requests as it wishes, and only fsync / flags can cause it to do this directly in a way that an application can take advantage of it.

Right, but what do you think about doing that (using both) as an IO strategy?

Mark, That is what we are using, yes. Wait for tomorrow's post with the full details :-)

So when disk writes your buffer that spans a bunch of sectors it does so in the order - one sector after another? Can it happen that it writes atomically the last sector first and then loses power in its capacitors?

OmariO, There is absolutely no guarantee with regards to what order the disk is handling the write. In particular, while you can probably safely assume that a write that cover an entire sector is sort of atomic, that isn't always the case (consider remote file systems), and you can't assume that the writes will go in any particular direction.

When you have multi sector write, for example, the drive is actually encouraged to write it in a different order then the one you specified (maybe the disk head is in the proper position to start from a particular location, so it will start writing there), so you can have a write to sectors 1,2,3 and 5, but not 4 (in the same multi sector write). That would be strange, but depending on the actual layout of the data on disk, that is possible.

Fragmentation plays into that a lot, too. So even though you think you issues a three sector writes that are right next to each other, they may have been allocated very far away, so they can be written in any order.

Basically, the short answer is that until you get a fsync() result back, you can't really assume anything about the data on disk. And you need to be suspicious of fsync under certain systems (sometimes admin / kernel / driver just turn it off for whatever reason).

Thanks for the detailed answer. Looking forward for the next post!

Argh! This is worse than Game of Thrones. You set up a fascinating discussing, and then say "more on this in the next post". I want details!

Ross, There are currently 15 posts in this series, talking about everything in detail. Trying to get it all in one post means that people skip a lot of the details.

Hi Oren, this might be slightly off topic, but there is a really interesting paper by the late Jim Grey examining disk failure rates. If you haven't seen it before, it's a great read http://arxiv.org/abs/cs/0701166

Craig, Yeah, this is what happens when you can't trust the disk. It is a bit high than the numbers I'm familiar with, but he is talking about decades old low end hardware. Note that at this point, the entity responsible for figuring out is the file system, not the database itself, there are some things that a database can do to recover from them, but they are quite expensive, and a good file system should already be doing them.

And you are supposed to run your db on something a bit better than a single low end hard disk.

agreed, what surprised me though was the failure rates in the SAN firmware, which I didn't expect. it's admittedly a rather old article. it would be interesting to see a follow up study...

I have two questions related to the use of fsync: 1. How much performance does it cost to wait for the writes to complete (i.e. moving from the "Try" to the "Crash" or "Power loss" level)? 2. How do you deal with admins disabling fsync or hard drives ignoring fsync?

Daniel, With fsync & friends, we are seeing on one machine steady state of 65MB/sec writes to disk, with peeks of about 550MB/sec.

Without it, we are seeing steady state of about 20MB /sec with peeks of about 550MB/sec (but usually the peeks are around 330MB/sec).

To compare, writing 2 million 2KB items with fsync costs gives us a rate of about 7.4 items per ms. Without fsync, we are talking about 7.8 items per ms.

Now, to be fair, this is just by removing the fsync all, the rest of the code still make sure that the preparation for actually writing with fsync. If we didn't have it at all, we would see a major difference in the architecture of the solution. Because we would be able to rely on different guarantees from the OS.

In other words, while I have some numbers here, they are very suspect.

Another project of ours, writing a log file, can do roughly 400 MB / second sustained without putting too much pressure on the disk, by explicitly utilizing the buffered nature of such calls.

In general, I would say that the cost of fsync and friends is an order of magnitude. If you are calling fsync directly, this is really expensive. If you are doing it in the optimized fashion we do, it is still expensive, but much less so.

As for admins that turn this off. They sometimes have valid reason (battery backup exists), and there is no way the database itself can discover whatever this is the case or not. Both that and lying drives are stuff that you just have to live with, document carefully and trust the administrator to make the right decisions.

Comment preview