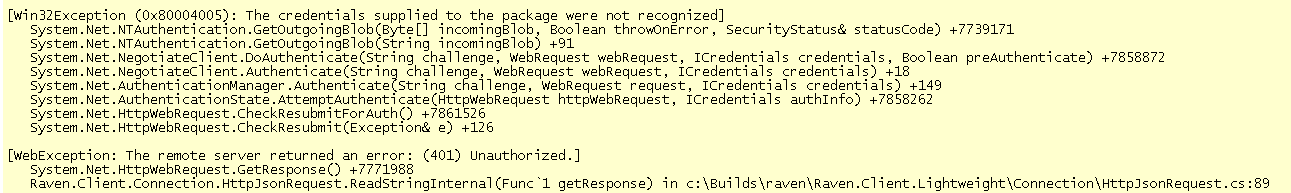

This is a story from the time (all those week or two ago) when we were building RaccoonBlog. The issue of managing CSS came up. We use the same code base for multiple sites, and mostly just switch around the CSS values for colors, etc.

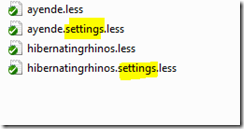

It looks something like this:

We are using the less syntax to generate a more reasonable development experience with CSS. We started with the Chirpy extension, which provides build time CSS generation from less files inside Visual Studio.

Well, I say started because when I heard that we were using an extension I sort of freaked out a bit. I don’t like extensions, I especially don’t like extensions that I have to install now on every machine that I use to work on RaccoonBlog. The answer to that was that it takes 2 minutes or less, and is completely managed within the VS AddIn UI, so it is really painless.

More than that, I was upset that it ruined my F5 scenario. That is, I could no longer make a change to the CSS file, hit F5 in the browser and see the changes. The answer to that is that I could still hit F5, I would just have to do that inside of Visual Studio, which is where I usually edit CSS anyway.

I didn’t like the answers very much, and we went with a code base solution that preserved all of the usual niceties of plain old CSS files (more on that in my next post).

The problem with tooling over code is that you need to have the tooling with you. And that is often an unacceptable burden. Consider the case of me wanting to make a modification on production to the way the site looks. Leave aside for a bit the concept of making modifications on productions, taking away the ability to do so is a Bad Thing, because if you need to do so, you need to do so Very Much.

Tooling are there to support and help you, but if tooling impact the way you work, it has better not have any negative implications. For example, if one member of the team is using the NHibernate Profiler, no one else on the team is impacted, they can go on and develop the application without any issue. But when using a tool like Chirpy, if you don’t have Chirpy installed, you can’t really work with the application CSS, and that isn’t something that I was willing to tolerate. Other options were raised as well, “we can do this in the build, you don’t need to do this via Chirpy”, but that just raised the same problem of breaking the Modify-CSS-Hit-F5-In-Browser cycle of work.

The code to solve this problem was short, to the point and really interesting, and it preserved all the usual CSS properties without creating dependencies on tools which might not be there when we need them.