re: How memory mapped files, filesystems and cloud storage works

Kelly has an interesting post about memory mapped files and the cloud. This is in response to a comment on my post where I stated that we don’t reserve space up front in Voron because we support cloud providers that charge per storage.

From Kelly’s post, I assume she is thinking about running it herself on her own cloud instances, and that is what her pricing indicates. Indeed, if you want to get a 100GB cloud disk from pretty much anywhere, you’ll pay for the full 100GB disk from day 1. But that isn’t the scenario that I actually had in mind.

I was thinking about the cloud providers. Imagine that you want to go to RavenHQ and get a db there. You sign up for a 2 GB plan, and all is great. Except that on the very first write, we allocate a fixed 10 GB, and you start paying overage charges. This isn’t what you pay when you run on your own hardware. This is what you would have to deal with as a cloud DBaaS provider, and as a consumer of such a service.

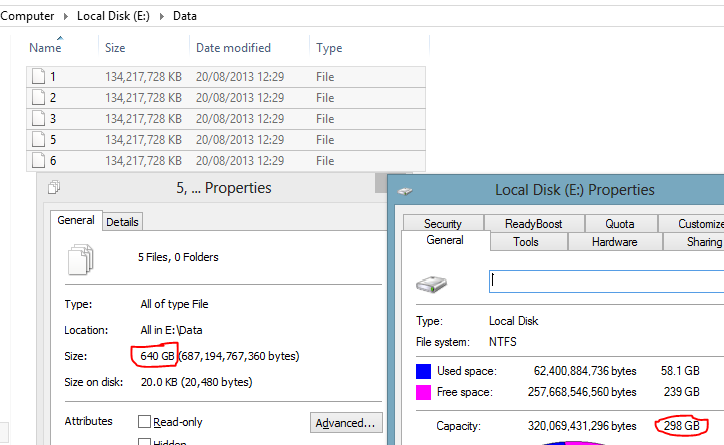

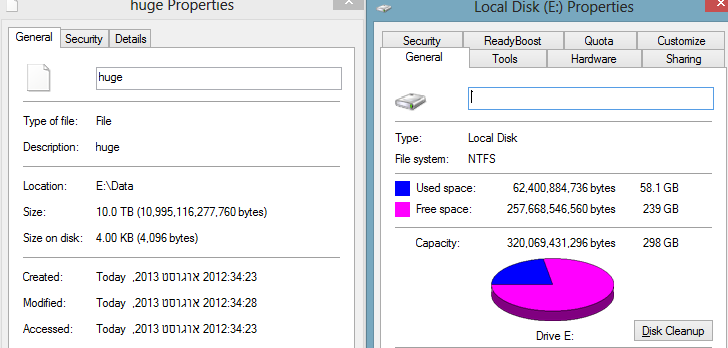

That aside, let me deal a bit with the issues of memory mapped files & sparse files. I created 6 sparse files, each of them 128GB in size, on my E drive.

As you can see, this is a 300GB disk, but I just “allocated” 640GB of space in it.

This also shows that there has been no reservation of space on the disk. In fact, it is entirely possible to create files that are entirely too big for the volume they are located on.
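The gap between a file's size and its size on disk can be reproduced in a few lines. This is a minimal sketch, assuming a Linux or other POSIX system whose filesystem supports sparse files (so not the Windows/NTFS setup used for the screenshots above); `sparse_file_sizes` is a hypothetical helper name chosen for illustration.

```python
import os
import tempfile


def sparse_file_sizes(apparent_size: int) -> tuple[int, int]:
    """Create a sparse temp file; return (apparent bytes, allocated bytes)."""
    fd, path = tempfile.mkstemp()
    try:
        # Extending a file with ftruncate sets its logical size without
        # writing data, so a sparse-capable filesystem leaves a hole.
        os.ftruncate(fd, apparent_size)
        st = os.fstat(fd)
        # On Linux, st_blocks is counted in 512-byte units.
        return st.st_size, st.st_blocks * 512
    finally:
        os.close(fd)
        os.unlink(path)


if __name__ == "__main__":
    apparent, allocated = sparse_file_sizes(64 * 1024 * 1024)
    print(f"size: {apparent} bytes, size on disk: {allocated} bytes")
```

The first number is what the file entry reports, the second is what the volume has actually handed out. Nothing in this call checks that the promised size fits in the free space.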

I did a lot of testing with mmap files & sparseness, and I came to the conclusion that you can’t trust it. You especially can’t trust it in a cloud scenario.

But why? Well, imagine the scenario where you need to use a new page, and the FS needs to allocate one for you. At this point, it needs to find an available page. That might fail; let us imagine that it fails because there is no free space, because that is the easiest case.

What happens then? Well, you aren’t accessing things via an API, so there isn’t an error code it can return, or an exception to be thrown.

On Windows, the failure is raised through Structured Exception Handling (SEH). On Linux, it will probably be delivered as a SIGxxx signal (SIGBUS, most likely). Now, to make things interesting, this may not actually happen when you are writing to the newly reserved page; the OS may defer it to a later point in time (or to when you call msync / FlushViewOfFile). At any rate, that means that at some point the OS is going to wake up and realize that it promised something it can’t deliver, and at that point (which, again, may be later than the point you actually wrote to that page) you are going to find yourself in a very interesting situation. I’ve actually tested that scenario, and it isn’t a good one from the point of view of reliability. You really don’t want to get there, because then all bets are off with regards to what happens to the data you wrote. And you can’t even do graceful error handling at that point, because the failure may show up long after the write that caused it.
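To make the "no API, no error code" point concrete, here is a minimal sketch of a write through a mapping of a sparse file. It assumes a POSIX system and uses Python's standard `mmap` module; `write_through_mapping` is a hypothetical name. It only shows the successful path: on a full disk the store itself may kill the process with a signal, which this code cannot catch as an exception.

```python
import mmap
import os
import tempfile


def write_through_mapping(file_size: int, offset: int, payload: bytes) -> bytes:
    """Store payload into a mapped sparse file, flush, and read it back."""
    fd, path = tempfile.mkstemp()
    try:
        os.ftruncate(fd, file_size)  # sparse: no blocks reserved yet
        with mmap.mmap(fd, file_size) as view:
            # A plain memory copy into the mapping. There is no call here
            # whose return value could report "disk full".
            view[offset:offset + len(payload)] = payload
            # flush() wraps msync; on Unix a write-back failure reported
            # by the OS is raised here as OSError, after the store.
            view.flush()
        os.lseek(fd, offset, os.SEEK_SET)
        return os.read(fd, len(payload))
    finally:
        os.close(fd)
        os.unlink(path)


if __name__ == "__main__":
    data = write_through_mapping(16 * mmap.PAGESIZE, 3 * mmap.PAGESIZE, b"voron")
    print(data)
```

The only place in this sketch where an error can be observed in-band is the `flush()` call, and that can run arbitrarily later than the store it covers.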

Considering the fact that disk full is one of those things that you really need to be aware of, you can’t really trust this intersection of features.
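For contrast, when file growth goes through an explicit call, disk full comes back as an ordinary error that can be handled. The following is a sketch under assumptions, not Voron's actual implementation: it assumes a Unix system where Python exposes `os.posix_fallocate`, and `grow_file` is a hypothetical helper.

```python
import errno
import os
import tempfile


def grow_file(fd: int, new_size: int) -> bool:
    """Reserve real disk space up to new_size; return False if the disk is full."""
    current = os.fstat(fd).st_size
    if new_size <= current:
        return True  # nothing to do, the file is already large enough
    try:
        # Ask the filesystem to allocate the new range now, so that
        # running out of space is reported by this call.
        os.posix_fallocate(fd, current, new_size - current)
        return True
    except OSError as exc:
        if exc.errno == errno.ENOSPC:
            return False  # explicit, recoverable "out of disk space"
        raise


if __name__ == "__main__":
    fd, path = tempfile.mkstemp()
    try:
        print("grown:", grow_file(fd, 1024 * 1024))
    finally:
        os.close(fd)
        os.unlink(path)
```

Because the space is allocated before any page in that range is mapped and written, later stores through the mapping do not depend on the filesystem finding free blocks at page-fault time.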


Comments

Why does being a cloud provider create additional costs to your users? As I pointed out in my post, Windows Azure uses a better storage model where you will not get charged.

Where memory mapped files can cause problems is shared hosting environments where you are charged for the inside-the-filesystem usage instead of physical media but these services are dying very quickly because this model doesn't make sense anymore for several reasons (competitive pricing, isolation, etc).

If you run a cloud provider yourself and you are charging for file system based consumption I would say you have built a flawed system. You need to be selling virtual disks. Windows Azure itself does not have this flaw, so neither should someone else's cloud.

Kelly, Think about the scenario for a RavenDB on the cloud provider. The space needs to go somewhere, and each db has its own files. Now, how do you charge for this? Usually you charge per size and per transaction. Let us go with per size, because that is obvious, visible and tends to be a good indicator for the cost of having a tenant. If you gave a user a 1 GB plan, you need to reserve that 1 GB in advance, to make sure that they can have at least that much space. Now, you can't allocate more than what the volume holds, because that would be bad if they all started wanting all the disk space they were promised. Then there is the question of what to do about overage. Let us say that I give you a 1 GB plan, then charge you overage for every 100MB you use. It would be pretty bad if I allocated another GB from the first byte of the second GB, just because I needed it.

You are focused on the cloud infrastructure, but you need to look at it from the db as a service provider POV.

Ayende,

Why would I use a service from a service provider that is designed in a flawed way like this? Windows Azure does not charge me in this flawed way you describe. Their storage system is designed to handle this scenario. So if your service doesn't, it should be designed better.

As I pointed out, they do not charge based on file system statistics and you do not need to either. This is a design decision because you are simply just storing files on an NTFS partition and selling space.

What you're telling me is if theoretically RavenDB was memory mapping all the disk, that you would have the problems you describe with RavenHQ. So that means if I hosted Raven myself on Windows Azure I could get cheaper rates because I would only be billed for consumption? That means RavenHQ's storage system is inferior to Windows Azure.

Your choice is to fix it in the database design rather than at the system level, which can work for you, but for Windows Azure because they are servicing all purposes, a better design covers all the bases.

Kelly, For example, you are running the service on AWS? For example, IO rates on Azure are abysmal and then some?

And I think that you are missing the point, or confusing two issues. 1) We do file size growth to allow size based cost models. 2) We don't do sparse files because of the issues outlined in this post. It is very hard to do proper error detection with mmap files in error conditions with sparse files.

Kelly, Also, I would be very cautious of designing my system to be used only on a single cloud provider, because of a current feature that they have. If you want to do SaaS for users that are running in other clouds (and you'll want that), being able to run there is crucial, if only to avoid the cost of cross DC network transfers.

Ayende,

Whether you design your system on top of Azure or AWS doesn't matter. What you're doing is sharing an NTFS partition and that's where the design flaws begin.

On AWS you are charged for provisioned size so you can't reduce your cost there. You can be really wasteful with it though and that can increase your rates.

Nothing stops you from creating virtual disk images inside the AWS disk and creating a more flexible system that uses the space more efficiently.

Now granted this won't reduce your cost (but there was nothing you could do about that anyway) but it makes better use of what you provisioned rather than letting the file system be your Achilles heel.

As I said, this is a design issue that you can either solve at the system level or inside the database. You chose in the database. Both are in your control.

Kelly, I am not following you here. There are two issues:

- mmap + sparse files: unstable and not good in my experiments.
- dynamically growing non-sparse files

Which one are you talking about?

I'm trying to wrap my head around how the OS sees the file size, as I'm intrigued by those screenshots. How does the OS determine file size in that file property dialog?

Igor, The file entry in the FS contains the file size. But in addition to that, you have the space on disk, that is the number of allocated clusters.

@Kelly I think you are missing the point entirely. The issue raised pertains to DBaaS providers such as RavenHQ, MongoHQ, Oracle Database Cloud Service and SQL Azure (a better comparison in your case), to name a few.

If you look at the pricing model for all these providers, you realise that they are different. Oracle, for example, limits the number of schemas, Salesforce restricts the number of records, while SQL Azure uses db size. Ayende is pointing out that he needs to allow for such flexibility without charging people unfairly.

Now while a VPS provider might not have a need to look into a client's file system to calculate cost, how would Salesforce Db.com count the number of records for billing, or Oracle limit the number of schemas created?

Better yet, how does SQL Azure charge "based on the actual volume of the database" and not the volume occupied by all the other components necessary to support that DB?

Your arguments are valid for VPS but I don't think they are for DBaaS.

Ayende, certainly true, if you oversubscribe your filesystem you're going to be in trouble. The obvious solution is Don't Do That. You know in advance how much space you're buying from your provider, so why would you ever be in a position of trying to use more than is available?

Howard, Consider this from the POV of the cloud provider. You promise to give people 1 GB db, but they only use 300MB. It is quite a bit of saving if I can avoid allocating all that space up front. And from operational POV, it is much better to get an explicit (out of disk space) than a SIG

Sure, but you don't need to allocate all the space up front, it's just easiest if you do. You could instead do a write() to grow the file whenever page_alloc() has to use new pages. Best of both worlds.

Howard, What do you gain from using the sparse file, then? Just the ability to map the entire thing all at once?

Joram,

I've built and worked with several companies who build these kinds of solutions and the good designs never shared a single file system entry for all customers. We created a more extensive and flexible architecture that allowed us to lower prices.

It does not make any sense to make a service like this in such a way that something like a $BitMap index hurts your service and customer flexibility.

Techniques used by Windows Azure or other cloud providers are applicable to designs of SaaS or DBaaS as well.

I've been on multiple projects like this that use ZFS compression to host virtual disks (like VHDs) but on the surface the customer doesn't know the underlying architecture.

Build a sound system or choose not to and suffer from some limitations that the file system imposes that some competitors may not be suffering from.

To further expand on my comment above.

Yes, it takes time to build a sound system, and if all you're doing is running RavenDB in the cloud it's simpler to write some code in Raven to work around some limitations. This makes sense for a very focused service.

I'm just pointing out that memory mapped files don't cost more money when you build systems properly and this is why other services don't have such limitations.

You can solve this problem either in the database (as Oren has decided) or you can solve it at the system level. If you are providing multiple services then it might make sense to solve it at the system level.

Most of the time I've seen people solve it at the system level and not the database level because of a whole suite of other issues that require it such as data isolation demand from customers, and various other things.

Kelly, I think you missed the point about mmap files. It isn't that they cost more money. It is that mmap files + sparse files + full disk == nasty stuff happens. That is why we chose not to include that.

Oren,

The quote I was mostly responding to was this:

"Remember, we support cloud providers that offer plans based on storage used. Wouldn't be nice if we started out by "oh, you want to store a 3Kb document, I'll just go ahead and reserve that 10GB for you anyway.""

I proved that, on a cloud provider that charges for bytes used, allocating a memory mapped file and reserving the space did not cost me any money, so this is false.

The case that this can impact is shared hosting where everyone shares a single file system.

Kelly, Doing that requires me to use sparse files, and run into the problems I just outlined. Also, your model forces you to use only Azure.

Backing up a step - we missed something somewhere along the way. I just tried to create a 1TB file on an XFS filesystem on my 512GB drive, and it (reasonably) refused. So the behavior that you're accustomed to from Windows/NTFS is different from what I expect. It really makes no sense for a filesystem to allow you to create a file whose size is larger than the underlying media; if I were working frequently on Windows I'd consider this a Windows bug.

The advantage of a single mmap on a sparse file is that you don't spend excess time incrementally growing the map. Also on a heavily loaded system, you may be unable to incrementally grow the map because of fragmentation in the address space, so there are strong disadvantages to not grabbing the space all at once, up front. There's no real penalty to declaring a huge map and leaving the majority of it unused. The kernel knows what's live and what's not.

re: my suggestion about growing the file using write() calls - you wind up paying for these write() calls but you're still only paying the cost during actual file growth. Most of the time, with page reuse, you can just dirty pages in memory without any system call overhead.

Howard, I would say that it is a bug that you can't. The point of sparse files is to create huge (mostly empty) files. If I wanted a 1 TB file, but the actual data I am storing is 100MB, why can't I do that?

Have you actually seen the memory fragmented to the point where it failed on mmap for 64 bits? I think that addressable space is something like 256 terabytes on most CPUs today, even if you go crazy, I think that should be enough, no?

re: write calls - you are paying for this in more than just that place. You are also causing fragmentation, whereas if you take more space at once you are more likely to get contiguous fs space.
