B+Trees and why I love them, Part III–in page additions, deletions and updates

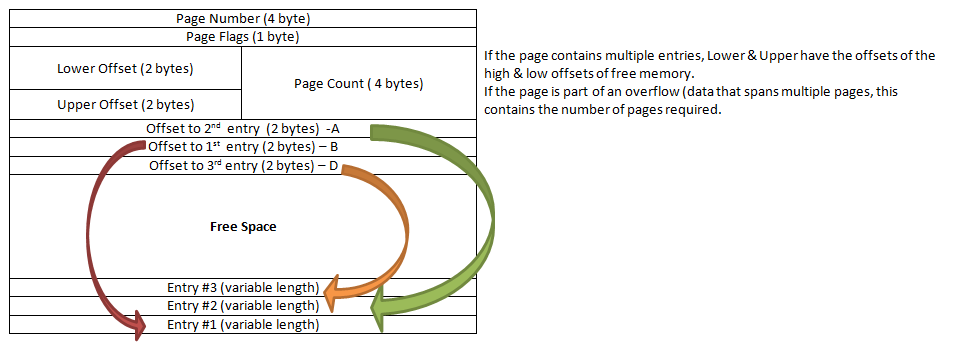

This is more of less how a page is represented in memory.

There are a few things to note. The entries immediately following the page header points to the actual data at the end of the page. Why is that? Well, remember that we are working with a fixed size page. And we need to add data to the page in a sorted fashion, and do this cheaply. In order to do that, we always add the actual data at the end of the file, before any other data. When the page is empty, we add the value in the end. And the next value is immediately before the previous value, etc.

However, as you can see in the image, the fact that we add values in a particular order doesn’t mean that they are sorted in that order. In order to get good sorting, we keep a list of the entries offsets in the beginning of the page, just after the header. This is a sorted list, which gives us the ability to easy add / move an item in the page without actually moving the page, just by changing the offset position it is located on. If we would add another entry to this page, with the key C, the actual data would go on top of entry# 3. But the offset recording this entry would go between B & A at the head of the page.

In other words, the actual data of the page grows upward, and the offsets pointing to the locations of entries in the page grows downward. A page is full when you can’t fit the next data item and its offset in the space between the data & the offsets.

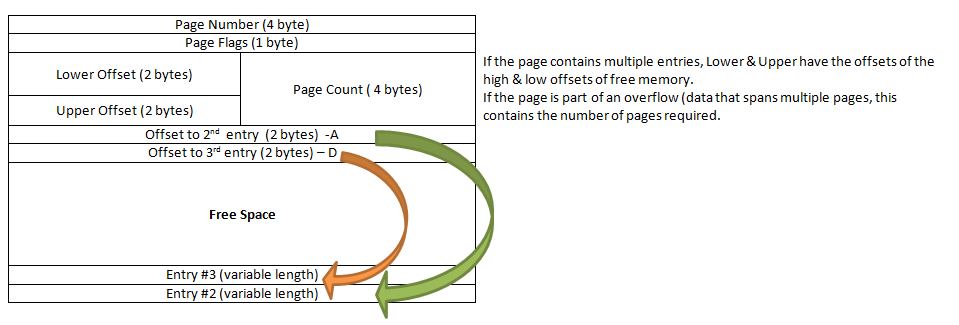

So that is addition. How about deletion. When we delete, we need to recover the space, but that is actually very easy to handle. Let us say that we want to delete the A entry. That means that we need to do the following. Move all the higher entries data downward to cover the space taken by the entry, and then update the offsets to reflect that. In the end, we are left with:

Updates work as a pair of delete/add operations, with some important edge cases. What happen if we have a page that is nearly full, and we want to update an entry that is bigger than it can currently hold? We have to check if the value is also too big if the previous value is removed. If not, we can fit it into the currently page. Otherwise, we would have to do a page split to accommodate it.

Surprisingly enough, the code that is required to handle that is quite pretty, although the actual mechanism probably require a whiteboard to explain. And a lot of hand waving.

Comments

I should note, this is not the only way to handle deletes, it's just the way I chose in LMDB. For example in BerkeleyDB and SQLite3, deletes don't actually delete anything, they just set a Deleted flag on the record. An explicit garbage collection pass must then be performed in the future, to reclaim the space. Personally I dislike anything that requires periodic maintenance, thus LMDB does all housekeeping inline.

This is also a pretty clear time/space tradeoff; you probably get faster deletes this way but you consume more disk space.

re: requiring a whiteboard - I frequently find that writing the code that does what I want takes mere moments, while writing the documentation that explains it can take hours.

Howard, snort lol Yeah, that is probably because you have the mental model for this in your head, and that is nearly impossible to get out in a coherent fashion all at once. You have to explain things from scratch, and a lot of time A depends on B depends on C depends on A, so you've to jump between parts in the explanation.

You cannot read from a page when updateing that page, until the transaction is done? iow a writer blocks the readers?

// Ryan

Ryan, No, that is not how it works. LMDB works using Copy on Write, so instead of modifying the page in place, a copy is made, and that is modified. That copy is only made visible when the transaction commits.

Thus you are creating the whole page at a new location in memory(mapped file) and then 'rebuild' it according to the insert/update/delete operations?

// Ryan

@Ryan, see from slide 22 onwards in this deck http://symas.com/mdb/20121107-LinuxCon-MDB.pdf, it shows the whole process.

@Matt Yup, thanks, I had read that document as soon as this series had started ;)

But this blog gives the impression the page is modified in place, while it is not.

// Ryan

re: "LMDB works using Copy on Write, so instead of modifying the page in place, a copy is made, and that is modified. That copy is only made visible when the transaction commits."

How do you do MVCC? i.e. if there are two transactions open for rows in the same block. So the first takes a copy of the committed block and modifies one row, the second takes a copy of the committed block and modifies a different row, the first transaction commits (replaces block or pointer to block), and then when the second transaction commits, it overwrites the changes done by the first transaction. When the first transaction commits, how do you apply its changes to the block used by the second transaction, before the second transaction commits? Do you notice that this happened and re-do the second transaction?

@Z.T.

lmdb only allow a single-writer, i.e. writes are serialized, see http://symas.com/mdb/:

Reader/writer transactions - readers don't block writers and writers don't block readers. Writers are fully serialized, so writes are always deadlock-free.

Ryan, No, I allocate a new page, copy the current page to it, and then modify the copy.

Wouldn't there be a small advantage in switching the place of data and pointers? So that you store the offset pointers at the end of the page and the data at the start of the page?

The data entries are variable length, while the pointers are all fixed size. When having a single entry in the page that is updated frequently you have to do a lot of data shuffling so that the data always ends where the page ends and also adjust the offset. When having the data stored in the beginning you don't need to change the offset at all and only the length of the entry might change.

Granted, this is a bit of an edge case and the benefit is minimal, but I also don't see any disadvantage in switching those two arround.

Thomas, We aren't doing data shuffling for adds. We add the data at the top of the section already done (so we write toward the start of the page).

Yes, I understand this. I was talking about updating an entry (especially updating the last entry). Assuming you update the last entry with a longer value you have to move the entry up (since the end is fixed) and update the offset. Whereas when switching it arround, you can keep the offset and just append to the end.

Thomas, I think it would be pretty rare to do that, and anyway, we wouldn't have to move anything, it would just work, we would just overwrite from the next point.

You should consider not moving the entries on delete. In particular this works poorly when records are inserted in ascending order and then deleted in ascending order. All the memory moves gives some O(n^2) characteristics.

A typical solution is to free the space, which creates fragmentation, but not compact the page until the space is actually required for another record. That way if the other records are deleted later you don't have to do anything.

Comment preview