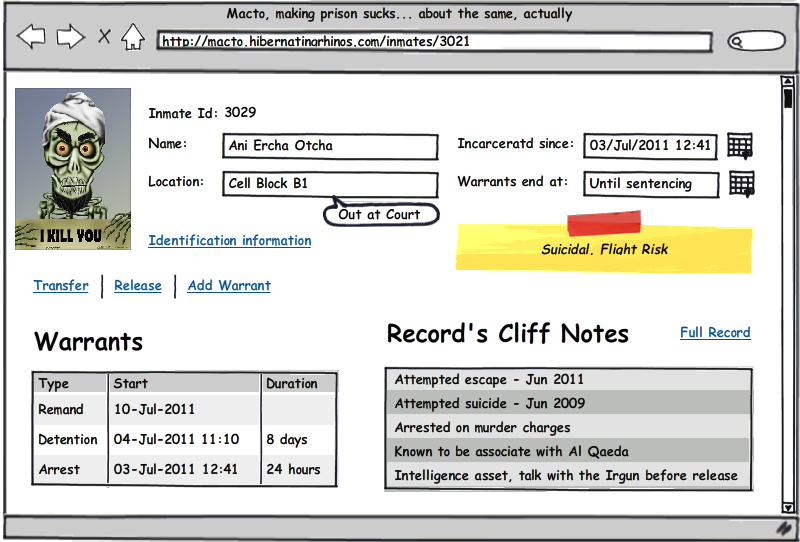

People always love to start with CRUD, but it is almost never that simple. In this post, we will review the process required to accept an Inmate into the prison.

That process is composed of the following parts:

- Identification
  - Who is the guy?
    - Id number
    - Names
    - Photo
  - If we can’t identify him, or he refuses to identify himself, we still need to be able to accept him.
- Lawful chain of incarceration
  - Go over all the documents for his arrest
    - Ensure that they are all in order
    - Ensure that they are continuous and valid
  - Check if there are any urgent things to do with him. For example, he may need to be at court today or the next day.
- Medical exam
  - Is it okay to hold the guy in prison?
  - If he is not healthy, can we take care of him in prison?
  - Does he require hospitalization?
  - Does he require medicine / treatment?
  - Are there any medical considerations that affect where to put him?
- Intelligence
  - Interviewing the guy
  - Report interesting details that are known about him
- Acceptability
  - Does he fit the level of Inmates we can accept? We usually don’t put murderers in minimum security prisons, for example.
  - Is there any medical reason to reject him?
  - Are there any problems with the incarceration documents?
  - Is there any intelligence warning about the guy?
- Placement
  - Decide where to put the Inmate
    - What type of Inmate is he? (Just arrested, sentenced, sentenced for a long period, etc.)
    - What is he in prison for?
    - What kind is he? (You want to avoid Inmate infighting, it creates paperwork, so you avoid putting them in conflict if possible)
    - Where is there room available?

Another important aspect to remember is that while we are allowed to reject invalid input (for example, we are allowed to say that the id number has to consist of only numeric characters), we are not allowed to reject input that is wrong.

What do I mean by that? Let us say that we have an Inmate at the door, and he doesn’t have his incarceration paperwork in order (well, not he, whoever brought him in, but you get the point). That means that legally, we can’t hold him. But Macto isn’t where things are actually happening; it is merely a support system that tracks what is going on in the real world. The prison commander can decide to accept that guy anyway (say, because the paperwork is en route), and we have to allow for that. If we try to stop people from doing this, they will work around the system, and we don’t want that. The system is allowed, even encouraged, to warn the users when they are doing something wrong, but it cannot block them.
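To make the difference between invalid input and wrong input concrete, here is a minimal Python sketch. It is not Macto's actual design; `Dossier`, `InvalidInput`, `parse_id_number` and `record_paperwork_status` are all hypothetical names made up for illustration. Malformed input raises an error, while a real-world problem is only recorded as a flag.

```python
from dataclasses import dataclass, field


class InvalidInput(ValueError):
    """Raised for malformed input, which the system is allowed to reject."""


@dataclass
class Dossier:
    id_number: str
    flags: list[str] = field(default_factory=list)

    def flag(self, reason: str) -> None:
        # A flag is a warning for the users; it never blocks the operation.
        self.flags.append(reason)


def parse_id_number(raw: str) -> str:
    # Invalid input: an id number with non-numeric characters is rejected.
    cleaned = raw.strip()
    if not (cleaned.isascii() and cleaned.isdigit()):
        raise InvalidInput(f"id number must be numeric: {raw!r}")
    return cleaned


def record_paperwork_status(dossier: Dossier, paperwork_in_order: bool) -> None:
    # Wrong input: missing paperwork is recorded and flagged, never rejected.
    if not paperwork_in_order:
        dossier.flag("incarceration paperwork is not in order")
```

The point of the split is that only `parse_id_number` can stop the user. Everything that describes the state of the real world goes through a path that always succeeds and leaves a trail.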

The first part, Identification, is actually pretty easy, all told. This is a fairly simple data entry process. We’ll want to do some checks on the data, such as verifying that the id number is valid or checking the id against the name. But we basically have to have some level of trust in the documents that we have. You usually don’t have an arrest warrant for “tall guy in brown shirt”. If we find any problems there, we can Flag the Dossier as having a potentially fraudulent name. This is also the stage where we want to check if the Inmate is a returning visitor, and bring the old Dossier back to life.
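As a rough illustration of the identification step, the sketch below looks up an old Dossier by id number and flags a name mismatch instead of refusing the entry. Everything here is an assumption made for the example: the in-memory `archive` dictionary, the `identify` function, the `visits` counter, and the case-insensitive name comparison are hypothetical, not something the real system is known to do.

```python
from dataclasses import dataclass, field


@dataclass
class Dossier:
    id_number: str
    name: str
    flags: list[str] = field(default_factory=list)
    visits: int = 1


def identify(archive: dict[str, Dossier], id_number: str, name: str) -> Dossier:
    """Return the Dossier for this id number, reviving an old one if it exists."""
    existing = archive.get(id_number)
    if existing is None:
        # First time we see this id number: open a new Dossier.
        dossier = Dossier(id_number, name)
        archive[id_number] = dossier
        return dossier

    # Returning visitor: bring the old Dossier back to life.
    existing.visits += 1
    if existing.name.casefold() != name.casefold():
        # A mismatch is flagged for a human to review; it does not block entry.
        existing.flags.append(
            f"name mismatch: archive has {existing.name!r}, documents say {name!r}"
        )
    return existing
```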

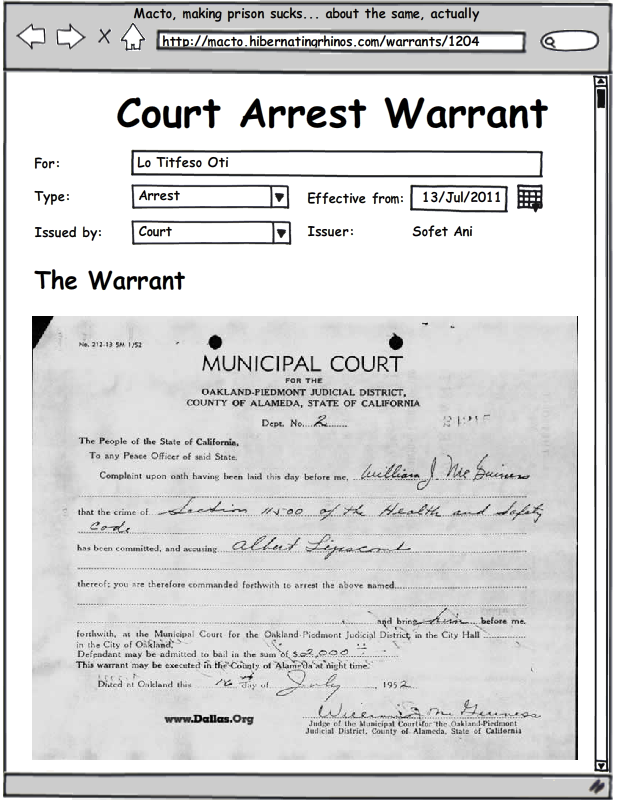

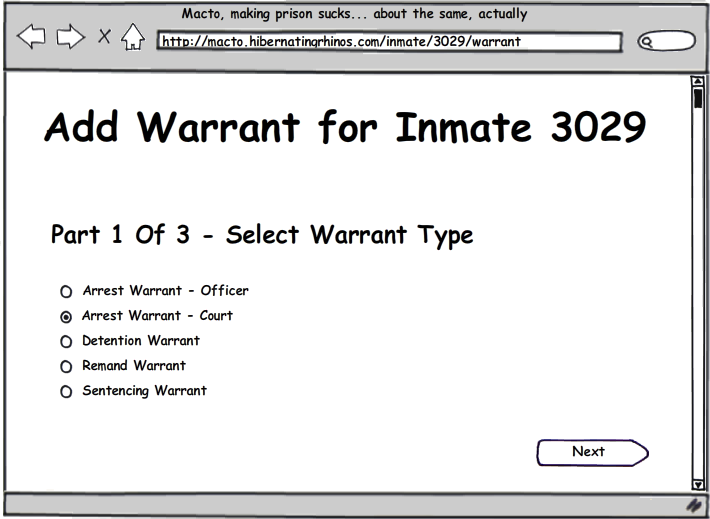

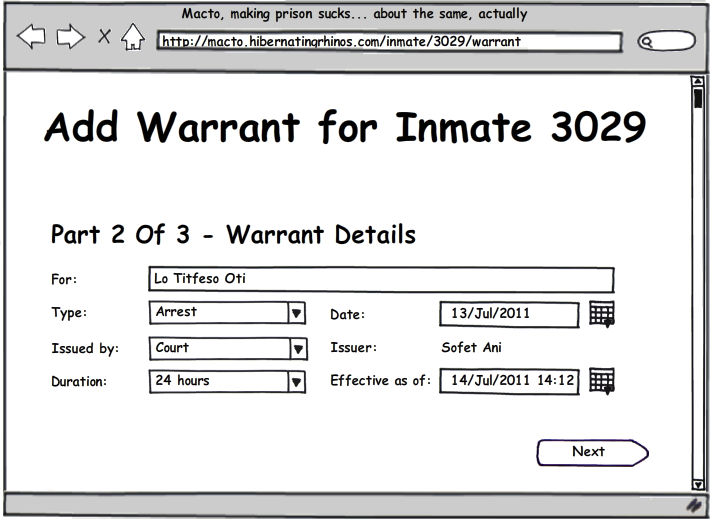

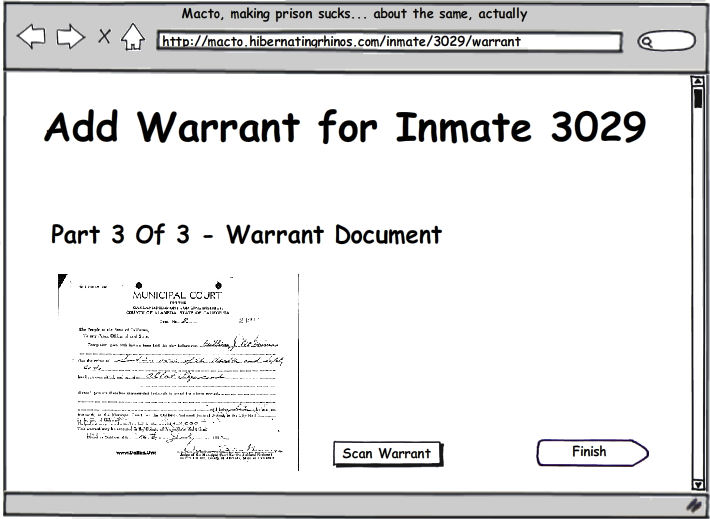

The second part is more complex, because there are many different types of Warrants, each with its own implications. An Arrest Warrant is valid for 24 hours, a Remand Warrant is good until sentencing, etc. We need to input all of those Warrants and ensure that they are consistent, valid and continuous. If there is a problem with that, we need to Flag the Dossier, but we can’t reject it. We will discuss this in more detail in the next post.
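A continuity check over a chain of Warrants might look something like the sketch below. This is only a guess at the shape of the problem: the `Warrant` class, the `kind` labels, and the `chain_problems` function are hypothetical, and the real rules are certainly more involved. The only fact taken from the text is the 24 hour validity of an Arrest Warrant. An open-ended Warrant (such as one that is good until sentencing) is modeled with `valid_until=None`.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Warrant:
    kind: str                        # hypothetical labels, e.g. "arrest", "remand"
    valid_from: datetime
    valid_until: Optional[datetime]  # None means open-ended


def arrest_warrant(issued: datetime) -> Warrant:
    # An Arrest Warrant is valid for 24 hours from the time it is issued.
    return Warrant("arrest", issued, issued + timedelta(hours=24))


def chain_problems(warrants: list[Warrant]) -> list[str]:
    """Return a list of problems; an empty list means no gaps were found."""
    if not warrants:
        return ["no warrants on file"]

    problems: list[str] = []
    ordered = sorted(warrants, key=lambda w: w.valid_from)
    covered_until = ordered[0].valid_until
    for warrant in ordered[1:]:
        if covered_until is None:
            break  # an open-ended warrant covers everything after it
        if warrant.valid_from > covered_until:
            problems.append(
                f"gap before {warrant.kind} warrant "
                f"starting {warrant.valid_from.isoformat()}"
            )
        # Extend the covered period if this warrant reaches further.
        if warrant.valid_until is None or warrant.valid_until > covered_until:
            covered_until = warrant.valid_until
    return problems
```

Note that the function returns problems instead of raising. Whatever it finds would end up as Flags on the Dossier, not as a reason to refuse the data.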

The third part is basically external to Macto. We may need to provide the Inmate ID for correlation purposes, but nothing beyond that. We do need to get approval from the doctor that the Inmate is in good enough condition to be held in the prison. That does get recorded in Macto.

The fourth part is, again, external to us. Usually any information is classified and wouldn’t appear in Macto. We may get some intelligence brief about the guy, but usually we won’t.

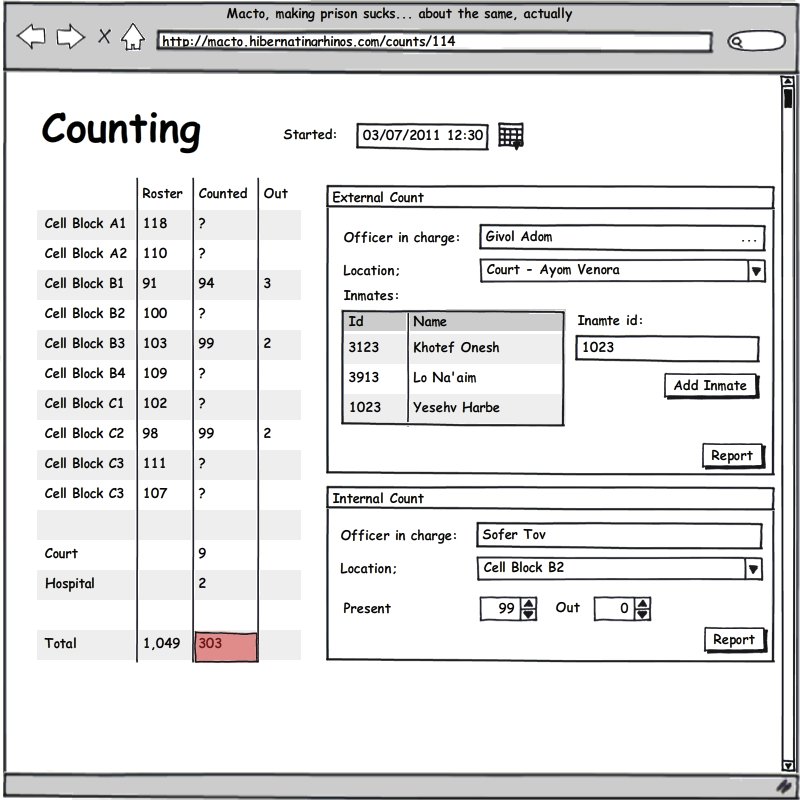

The fifth part is important: this is where we actually take legal and physical ownership of the Inmate. Up until that point, we had him in our hands, but we weren’t responsible for him. Accepting the Inmate is a simple matter if everything is good, but if the Dossier was Flagged, we might need approval from the officer in charge. Accepting an Inmate means that he is added to the prison’s Roster.
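Here is one way the acceptance step could be sketched, assuming that a Flagged Dossier always requires a named approver (the text only says we might need approval, so this is a stricter rule picked for the example). The `Prison.accept` method, the `ApprovalRequired` exception and the `approved_by` parameter are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Optional


class ApprovalRequired(Exception):
    """Raised when a Flagged Dossier is accepted without a named approver."""


@dataclass
class Dossier:
    inmate_id: str
    flags: list[str] = field(default_factory=list)


@dataclass
class Prison:
    roster: set[str] = field(default_factory=set)

    def accept(self, dossier: Dossier, approved_by: Optional[str] = None) -> list[str]:
        """Add the Inmate to the Roster and return the warnings to show the user."""
        if dossier.flags and approved_by is None:
            # Assumption: the officer in charge has to sign off on Flagged Dossiers.
            raise ApprovalRequired(
                f"dossier {dossier.inmate_id} is flagged: {', '.join(dossier.flags)}"
            )
        # From this point on, the prison is responsible for the Inmate.
        self.roster.add(dossier.inmate_id)
        return list(dossier.flags)
```

Asking for an approver is not the same as blocking. The officer can still accept the Inmate with every Flag in place, and the system only makes sure that someone made that decision knowingly.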

The sixth part is pretty much “where do I have a spare bed”, after which he is added to the Roster of the cell block that now has him in its care.

It is important to note that Placement always happens. Even if immediately after Accepting an Inmate you rushed him to the hospital, that Inmate still has to be assigned to a cell block, because that assignment means that the cell block commander is in charge of him. That way we avoid potential mishaps where an Inmate is assigned to no one and doesn’t get Counted.
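The rule that every accepted Inmate must belong to some cell block can be expressed as a simple check. The sketch below is an illustration under assumed names (`CellBlock`, `Prison.place`, `Prison.unassigned`, `Prison.blocks_with_room`), with Rosters modeled as plain sets of Inmate ids.

```python
from dataclasses import dataclass, field


@dataclass
class CellBlock:
    name: str
    capacity: int
    roster: set[str] = field(default_factory=set)

    def has_room(self) -> bool:
        return len(self.roster) < self.capacity


@dataclass
class Prison:
    blocks: list[CellBlock]
    roster: set[str] = field(default_factory=set)

    def blocks_with_room(self) -> list[CellBlock]:
        # "Where do I have a spare bed?"
        return [block for block in self.blocks if block.has_room()]

    def place(self, inmate_id: str, block: CellBlock) -> None:
        # Placement records who is in charge of the Inmate, even if he is
        # physically somewhere else (for example, in the hospital).
        block.roster.add(inmate_id)

    def unassigned(self) -> set[str]:
        # Inmates on the prison Roster that no cell block is responsible for.
        assigned = set().union(*(block.roster for block in self.blocks))
        return self.roster - assigned
```

A non-empty result from `unassigned()` is exactly the mishap we want to avoid: an Inmate that the prison has accepted, but that no one would Count.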

Okay, I think that this is enough for now. In the next post, we will discuss what exactly goes on in the second part. It is a pretty complex piece, and it deserves its own post.