The title for this post is taken from this post.

I released NH Prof to the wild in two stages. First, I had a closed beta, with people that I personally know and trust. After resolving all the issues for the closed beta group, we went into a public beta.

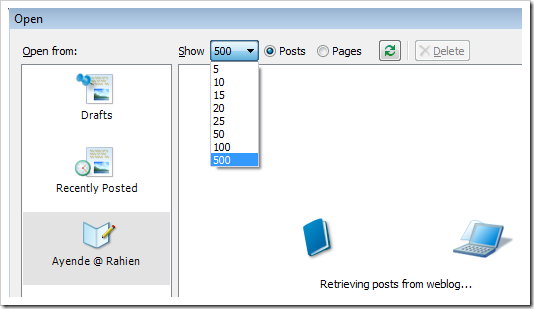

Something that may not be obvious from the NH Prof site is that when you download NH Prof from the site, you are actually downloading the latest build. The actual download site is here.

NH Prof has a CI process that pushes a new build to the public whenever anyone makes a commit. My models here were both OSS projects and JetBrains' daily builds.
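To make the idea concrete, here is a minimal sketch of what a "publish on every commit" step could look like. It is not the actual NH Prof build script. The function name `publish_build`, the file name `NHProf.zip`, and the directory layout are all hypothetical, chosen only for illustration. The point is that each build is kept under its own number while a "latest" copy is overwritten, so the download link always serves whatever was just committed.

```python
import shutil
import tempfile
from pathlib import Path


def publish_build(artifact: Path, download_dir: Path, build_number: int) -> Path:
    """Copy a freshly built artifact into the public download directory.

    The file is stored under a build-numbered name, and a 'latest' copy is
    overwritten so that the download link always serves the newest build.
    (Hypothetical layout, assumed for this sketch.)
    """
    download_dir.mkdir(parents=True, exist_ok=True)

    # Keep every build around under its own number, e.g. NHProf-build-227.zip
    numbered = download_dir / f"{artifact.stem}-build-{build_number}{artifact.suffix}"
    shutil.copy2(artifact, numbered)

    # Overwrite the copy that the public download link points at
    latest = download_dir / f"{artifact.stem}-latest{artifact.suffix}"
    shutil.copy2(numbered, latest)
    return latest


if __name__ == "__main__":
    # Simulate the CI server calling the publish step after a commit
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        artifact = root / "NHProf.zip"  # hypothetical artifact name
        artifact.write_bytes(b"build output")
        latest = publish_build(artifact, root / "downloads", 227)
        print(f"published {latest.name}")
```

A CI server would call something like this as the last step of a successful build, which is what keeps the cost of a release close to zero.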

What this means for me is that the cost of actually releasing a new version is just about zero. This is going to change soon, when 1.0 is released, of course, but even then, you'll be able to access the daily builds (and I'll probably have 1.1, 1.2, etc).

What is interesting is that it never occurred to me not to work that way. Perhaps it is my long association with open source software. I lost my fear of being shown as the stupidest guy in class long ago. (As an aside, one of the things that I tend to mutter while coding is: If stupidity were money, I would be rich.)

The first release of NH Prof for the private beta group showed that the software would not even run on some machines!

The whole idea is to get the software out there and get feedback from people. And overall, the feedback was positive. I got some invaluable ideas from people, including some surprises that I am keeping for after v1.0. I also got some crashes in the field. That can't really help the reputation of the tool, but I consider that an acceptable compromise, especially when the product is in beta, and especially since you are basically getting whatever I just committed.

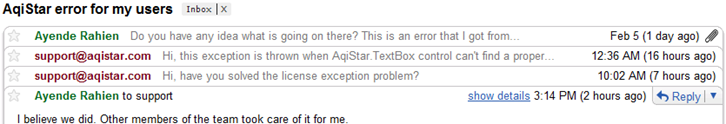

Being able to get an email from a user, figure out the problem, fix it, commit and then reply: "try downloading now, it is fixed" is a very powerful motivator. For myself, because now fixing a bug is so much easier. For the users, because response times are short. And for myself again, because I am a lazy guy, basically, and I am not willing to do things that are annoying, and deployment is annoying.

One interesting anecdote: we ran into a problem with a component that we were using (now completely resolved, and totally our fault). We were able to commit a reduced-functionality version, which was immediately available to users (build #227, if you care), then fix the actual issue (build #230, 14 hours later) and have a full version out that the users could use.

What about private features? If I want to expose a feature only when it is completed, this is an issue.

Well, what about them? This is why we have branches, and we did some work there, but I don't really believe in private features. What we did in branches was mostly of an exploratory nature, or things that were broken (a lot of attempts to reduce UI synchronization, for example).

So far, it seems to be working :-)