PR ReviewEncapsulation stops at the assembly boundary

The following set of issues all fall into code that is used within the scope of a single assembly, and that is important. I’m writing this blog post before I got the chance to talk to the dev in question, so I’m guessing about intent.

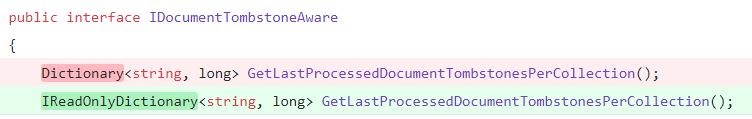

This change is likely motivated by the fact that callers are not expected to make a modification to the resulting dictionary.

That said, this is used between different components in the same assembly, and is never exposed outside. That means that we have a much higher trust between the components, and reading IReadOnlyDictionary means that we need to spend more cycles trying to figure out who you are trying to protect from.

Equally important, in this case, the Dictionary methods can be called without any virtual call overhead, while the IReadOnlyDictionary needs interface dispatch to work.

This is a case that is a bit more subtle. The existingData is a variable that is passed to a method. The problem is that in this case, no one is ever going to send null, and sending a null is actually an error.

In this case, if we did get a null, I would rather that the code would immediately crash with “what just happened?” rather than limp along with bad data.

More posts in "PR Review" series:

- (19 Dec 2017) The simple stuff will trip you

- (08 Nov 2017) Encapsulation stops at the assembly boundary

- (25 Oct 2017) It’s the error handling, again

- (23 Oct 2017) Beware the things you can’t see

- (20 Oct 2017) Code has cost, justify it

- (10 Aug 2017) Errors, errors and more errors

- (21 Jul 2017) Is your error handling required?

- (23 Jun 2017) avoid too many parameters

- (21 Jun 2017) the errors should be nurtured

Comments

The second case looks like a classic "On Error Resume Next" style of code that is trying to be helpful but I think is generally far more harmful. It can be hard to explain to less-experienced developers why hard failure is preferable to trying to helpfully handle error cases , at least until they learn the pain of debugging a system that isn't giving you a clear signal of an error and instead just does the wrong thing.

The first case is interesting to me: I often overlook the cost of virtual dispatch for the sake of making the contracts between components clearer. I wonder if a happier middle-ground would be to use ImmutableDictionary? I much prefer the strong behaviour (you can never change this dictionary) versus the weaker contract (you can't modify this dictionary, but we don't promise nobody else will), and you'd eliminate the virtual dispatch too.

Paul, One thing about

ImmutableDictionaryis that I run into a lot of perf problems with them. See: https://ayende.com/blog/164739/immutable-collections-performanceIn the first case, if the code is used within a single (trusted) assembly, shouldn't the interface be

internalinstead ofpublic? That way, you can safely expose aDictionaryand know that it (probably) won't be modified.Jorge, Internal means that we have more complications when we need to deal with internal tools. Tests, other stuff that plug into it. In general I don't like the usage of

public/internalat stuff to place such boundaries.GetLastProcessedDocumentTombstonesPerCollection... well if you're going to add documentation in method names I suggest to make it more detailed ... something like 'GetLastProcessedDocumentTombstonesPerCollectionSinceThisMethodWasCalledAndTheDocumentsWereMakedAToucheOrTheLastSinceStartOf ... TheEnd' ;)Joking aside I think a shorter name like

GetLatestThombsoneswith doc comments are actually more readable.Pop Catalin, Why? To start with, we usually don't use doc comments in the internals. Second, this is something that needs to be read at the call site, not the function itself. It is much better to be clear about things.

RavenDB used to have a property named:

EnableBasicAuthenticationOverUnsecureHttpEvenThoughPasswordsWouldBeSentOverTheWireInClearTextToBeStolenByHackers@Ayende, I think well-placed doc comments in internal code can greatly reduce source code churn for developer onboarding. Source code churn can be a steep artificial barrier to entry for new developers. You don't need to read the code and know how a settings cache is implemented (until you actually have a need to do it), a description is enough if captures the purpose. IE: "stores the settings in memory and automatically reloads them whenever an update operation is intercepted from ...." This can save minutes to hours of code reading in a phase the developer should skip it anyway.

Pop Catalin, In 95% of the cases, you can express the same thing in a docs comment as the name.

Cache.Getis a good example, what can you really say in a docs comment that isnt' already expressed. https://github.com/dotnet/corefx/blob/master/src/System.Runtime.Caching/src/System/Runtime/Caching/MemoryCache.cs#L634And things like putting the "reload them automatically?" That is not supposed to be on a

Getmethod. There is a reason why comments rot is an insidious thing, and it is easy to get things completely out of wack.That just lead to more confusion. I would rather have clear code.

And note that I'm talking about internal stuff, no things that are externally exposed.

It looks like I was part of that conversation on the BCL immutable collections four years ago. I had forgotten how badly they under-perform for your use cases. I see that you ended up with your own ImmutableAppendOnlyList for Voron. I don't suppose you have another magnificent type that fits this case?

Paul, Afraid not. We do a lot of evil stuff around buffering and reusing things to get this working.

It's the same reason source code rots, people who don't take that extra 5 min at the end of a change to refactor and clean their changes.

Given the choice me too. If however, I can have both, clear code and doc comments, I would gladly take both.

Pop Catalin, Actually, the problem is that we don't look at comments when we edit the code one we know what is going on, so we never think of fixing this. And if you repeat information in many places, that is going to deteriorate quickly.

And given that you can't have the cake and eat it...

IMHO, in the first case, it is always better to have more “readonly” and “immutable” stuff, always, always. Even for internal works. And change it only if you have to. It’s a pity that containers you mention has performance issues, but if you do not implement super-highly-performant services that these concerns look like optimizing too early.

For the second case I prefer to do more checking. Is it contrary to fail early? Probably. But if you have many components interacting with each other IMHO it is better to they be more forgiving to each other then expecting very strictly.

Having explicit READONLY is great, but definitely not at the cost of performance.

In general, in C# any collection interface is a code smell (except

IEnumerableof course). People dragging their Java/C++ wisdom into C# create undue trouble, so keep bashing them on small points and they'll learn quicker.If CLR did have a decent ReadonlyDictionary, that's what would be sensible to use. Of course, ImmutableDictionary isn't a trivial replacement perf-wise, especially in edge cases. Whether it fits here — hard to say. It may well fit, memory-wise it could win over flatter sparser hashtable implementation.

Of course, if you don't use ImmutableDictionary widely anyway, picking it for one specific use case hurts maintainability. Every time developer stumbles upon it, she has to STOP AND THINK. Wow, is this important? Why not normal? Thinking leads to errors.

Checking for null should be done using

ifstatements. Apply ruler on the fingers of those who disobeys.Note how

??needs a comment? That's why. A dumbif (existingData==null)wouldn't, BECAUSE IT'S EASIER TO UNDERSTAND WITHOUT COMMENTS.If

existingDatacannot be null, fine, remove it. Otherwise ask them to share the single empty instance, save on memory. Obvious plus for performance-sensitive code!Andrzej, We are implementing super highly performant services. I'm literally counting the number of instructions and laying out my memory for efficient processing. Having something that is 10 times more expensive for the purity sake is no go.

As for being forgiving, that is a great case to end up with a system that has an error, limp along for a while and errors in mysterious ways.

Oleg, Actually, the

??needed a comment because it wasn't something that we usually do. Not to explain what it does, but the reasoning behind it. We use it quite extensively, and it is great for such scenarios.For example

Oren, so you are right if performance is top priority. Respect! Oleg, how do you know it!? Respect to you too! :) I was C++ developer for years. After switching to C# I’m constantly miss two things. “const” for the first case which highly promotes and spread immutability in the system. References for the second case when you do not have to check for nulls. BTW. C++ is associated with pointer but in C# are all pointers everywhere because you have to check for nulls.

End in end maybe some functional languages are rescue here? (both for immutability and performance)

Oren, not saying

??is a bad thing — but not for this use case (hence comments). Having an explicitifhere would be better.Not least because semantically it's different: assignment will only happen in edge case. The original example has

existingDataredundantly assigned to itself in the much-used hot path.Andrzej, the non-nullability is being added to C# in the next iteration.

Frankly, I don't like it: the checks are being bolted on the side, with no guarantees and whole infrastructure reliant on good intentions rather than fact. It may have been better integrated in C++, although the mental cost of unpicking const-related compiler errors/warnings is a burden.

Comment preview