The importance of a data format: Part VII – Final benchmarks

After quite a bit of work, we have finished (at least for now) the Blittable format. As you can probably guess from the previous posts, we have decided to also implement small string compression in the Blittable format, based on the Smaz algorithm.

We also noticed that there are two distinct use cases for the Blittable format. The first is when we actually want to end up with the document on disk. In this case, we would like to trade CPU time for I/O time (especially since we usually write once but read a lot, so we'll keep paying that I/O cost). But there are also many cases in which we read JSON from the network just to do something with it. For example, if we are going to read an index definition (which is sent as a JSON document), there is no need to compress it, since we would just have to immediately decompress it again.

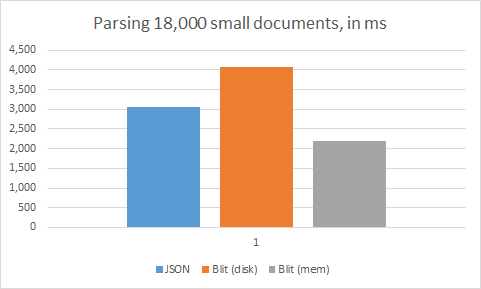

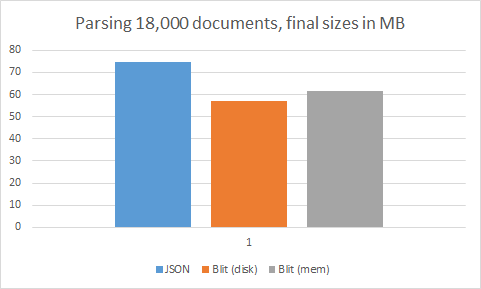

So we added the option to choose the mode in which you'll use the data. Here are the benchmarks for speed and size for Json, Blittable (for disk) and Blittable (for memory).

As expected, we see a major difference in performance between Blit to disk and Blit to mem. Blit to mem is comparable to or better than Json in nearly all scenarios, while Blit to disk is significantly smaller than Json and still has comparable or better parsing performance, even though it is compressing a lot of the data.

For large documents, this is even more impressive:

Blit to mem takes 40% – 75% of the time Json takes to parse, while Blit to disk can be much more expensive (up to 2.5 times more than Json), depending on how compressible the data is. On the other hand, Blit to mem is already 20% – 33% smaller than Json for most datasets, and Blit to disk improves on that significantly, often getting down to about 60% of the original JSON size. In the companies.json case, the original file size is 67.25 MB, Blit to mem gives us a 45.29 MB file, and Blit to disk gives us a 40.97 MB file.
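
The companies.json figures above can be checked with a few lines of arithmetic. This is only a sketch: the helper name `percent_smaller` and the constant names are made up for illustration, and the only inputs are the three sizes quoted in the text.

```python
def percent_smaller(original_mb: float, encoded_mb: float) -> float:
    """Return how much smaller the encoded file is, as a percentage of the original."""
    return round((1 - encoded_mb / original_mb) * 100, 1)


# Sizes quoted in the post for companies.json, in MB.
JSON_MB = 67.25
BLIT_TO_MEM_MB = 45.29
BLIT_TO_DISK_MB = 40.97

mem_saving = percent_smaller(JSON_MB, BLIT_TO_MEM_MB)    # roughly 32.7
disk_saving = percent_smaller(JSON_MB, BLIT_TO_DISK_MB)  # roughly 39.1

print(f"Blit to mem:  {mem_saving}% smaller than the JSON file")
print(f"Blit to disk: {disk_saving}% smaller than the JSON file")
```

So for this file Blit to mem lands at about two thirds of the JSON size, and Blit to disk at about 61% of it.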

This is a huge reduction in size (equivalent to an appreciable fraction of a second on most I/O systems).

So far, we have looked at the big boys, but what happens if we try this on a large number of small documents, as would commonly happen during indexing?

In this case, we see the following:

Note that Blit to disk is very expensive in this mode. This is because we actually do a lot of work. See the next chart:

Blit to disk is actually compressing the data by close to 20 MB, and it is 5 MB smaller than Blit to memory.

The question of what exactly the difference is between Blit to memory and Blit to disk came up on our internal mailing list. The actual format is identical. There are currently only two differences:

- Blit to disk will try to compress all strings as much as possible, to reduce I/O. This often comes at the cost of parsing time. Blit to mem just stores the strings as is, which is obviously much faster. It takes more memory, but we generally have a lot of that available, and when using Blit to memory we typically work with small documents anyway.

- Blit to disk also does additional validation (for example, that a double value is a valid double), while Blit to memory skips it. The assumption is that in memory mode an invalid double will fail as soon as you actually access its value. With Blit to disk the data will be read back later, so we want such issues to surface when we persist the data rather than when we come back to it.
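
The two differences can be sketched roughly as follows. This is not the Blittable code, only an illustration under stated assumptions: `UsageMode`, `encode_string` and `encode_double` are hypothetical names, `zlib` stands in for the Smaz-based compression, keeping the compressed form only when it is smaller is an assumption, and so is treating the double as text that is parsed on access.

```python
import zlib
from enum import Enum
from typing import Tuple


class UsageMode(Enum):
    TO_DISK = "disk"      # persisted: spend CPU now to save I/O later
    TO_MEMORY = "memory"  # transient: parse fast, skip the extra work


def encode_string(value: str, mode: UsageMode) -> Tuple[bool, bytes]:
    """Return (is_compressed, payload) for a string value."""
    raw = value.encode("utf-8")
    if mode is UsageMode.TO_MEMORY:
        return False, raw  # store the string as is
    # zlib is only a stand-in here; the post describes a Smaz-based scheme.
    packed = zlib.compress(raw)
    # Assumption: keep the compressed form only when it actually saves space.
    if len(packed) < len(raw):
        return True, packed
    return False, raw


def encode_double(text: str, mode: UsageMode) -> str:
    """Return the double's text, validating it up front only in disk mode."""
    if mode is UsageMode.TO_DISK:
        float(text)  # raises ValueError now, at persist time
    # In memory mode a bad value is only noticed when someone parses it later.
    return text
```

With this shape, a malformed double such as `"1.2.3"` passes through `encode_double` untouched in `TO_MEMORY` mode but raises immediately in `TO_DISK` mode, which mirrors the trade-off described in the second bullet.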

Overall, a pretty good job all around.

More posts in "The importance of a data format" series:

- (25 Jan 2016) Part VII–Final benchmarks

- (15 Jan 2016) Part VI – When two orders of magnitude aren't enough

- (13 Jan 2016) Part V – The end result

- (12 Jan 2016) Part IV – Benchmarking the solution

- (11 Jan 2016) Part III – The solution

- (08 Jan 2016) Part II–The environment matters

- (07 Jan 2016) Part I – Current state problems
