The importance of a data format: Part IV – Benchmarking the solution

Based on my previous posts, we wrote an implementation of this blittable format. After making sure that it was correct, it was time to test it out on real-world data and see what we came up with.

We took a bunch of relatively large real-world data sets and ran them through a comparison of JSON.Net vs. the Blittable format. Note that for reading the JSON we still use JSON.Net, but instead of generating a JObject instance, we generate our own format.

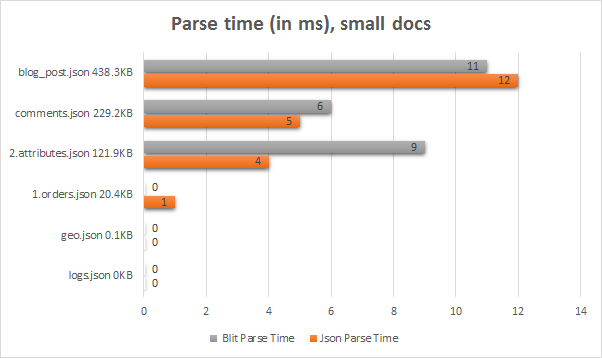

Here are the results of running this on a set of JSON files.

As you can see, there doesn't appear to be any major benefit according to these numbers. Across multiple runs over those files, the numbers are roughly the same, with a difference of a few ms either way. So it is a wash, right?

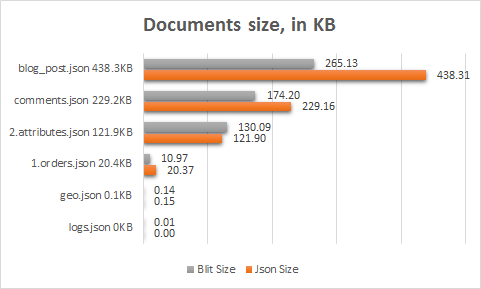

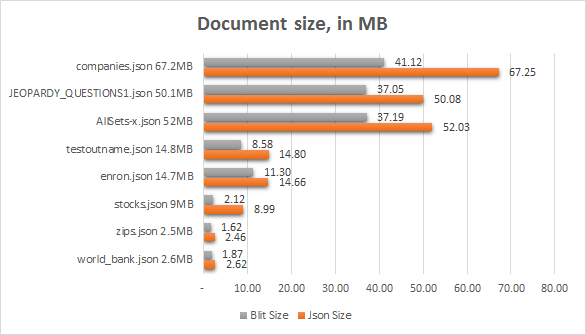

Except… those numbers aren't the whole truth. Let us look at some other aspects of the problem. How about the persisted size?

Here we see a major difference. The Blit format shows a rather significant reduction in space in most cases. In fact, in some cases the result is 50% – 60% of the original JSON size. And remember, these figures are in kilobytes.

In other words, the orders document was cut in half, which is 10KB that we won't have to write to or read from the disk. If we need to read the last 50 orders, that is 0.5 MB that won't have to travel anywhere, because it just isn't there. And what about the blog post? That one was reduced by almost half, and we are looking at almost 200KB that isn't there. When we start accounting for all of this saved I/O, the savings more than justify the computation costs above.

A lot of that space saving is done by compressing long values, but a significant amount of that comes from not having to repeat property names (in particular, that is where most of the space saving in the comments document came from).
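To see why not repeating property names matters so much for a document like the comments one, here is a rough size estimate. It is a minimal sketch, not the actual Blit layout: it assumes names are stored once in a shared table, that each property refers to its name through a hypothetical 1-byte id, and that values are kept as their JSON text. The function names are made up for illustration.

```python
import json


def repeated_names_size(items):
    """Bytes needed when every object spells out its property names (plain JSON)."""
    return sum(len(json.dumps(item, separators=(",", ":")).encode("utf-8"))
               for item in items)


def shared_names_size(items):
    """Rough bytes needed when each property name is stored once and referenced by id.

    Hypothetical layout: name table + (1-byte id + JSON-encoded value) per property.
    """
    names = {}
    total = 0
    for item in items:
        for key, value in item.items():
            if key not in names:
                names[key] = len(names)               # assign the next id
                total += len(key.encode("utf-8"))     # the name itself is written once
            total += 1                                # assumed 1-byte property id
            total += len(json.dumps(value).encode("utf-8"))  # value bytes unchanged
    return total


comments = [{"author": "x", "body": "hi", "date": "2016"} for _ in range(100)]
print(repeated_names_size(comments), shared_names_size(comments))
```

With many small objects that share the same property names, the names dominate the JSON size, so storing them once removes most of the bytes even before any compression is applied.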

However, there are some cases where the reverse is true. The logs.json file is an empty document, which can be represented in just 2 bytes in JSON but requires 11 bytes in the Blit format. That isn't an interesting case for us. The other problem child is the attributes document, which actually grew by about 7% after converting from JSON to the Blit format.

The reason is its internal structure. It is a flat list of about 2,000 properties with short string values. The fact that it is a single big object means that we have to use 32-bit offsets, so about 8KB is taken up just by the offsets. But we consider this a pathological and non-representative case. This type of document is typically accessed for specific properties, and that is where we shine, because we don't need to parse 120KB of text to get to a specific configuration value.
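The 8KB figure follows directly from the offset width. The sketch below assumes, as the paragraph implies, that the format picks the smallest offset width able to address the object (1, 2 or 4 bytes here; the exact widths the real format uses are an assumption), and that there is one offset per property. The helper names are hypothetical.

```python
def offset_width(max_offset: int) -> int:
    """Smallest width (bytes) that can hold any offset up to max_offset.

    Assumed choices of 1, 2 or 4 bytes; the real format may differ.
    """
    if max_offset < 1 << 8:
        return 1
    if max_offset < 1 << 16:
        return 2
    return 4


def offset_table_bytes(property_count: int, object_size: int) -> int:
    """Overhead of storing one offset per property inside an object."""
    return property_count * offset_width(object_size)


# The attributes document: ~2,000 properties in a single ~120KB object.
print(offset_table_bytes(2_000, 120 * 1024))
```

A 120KB object no longer fits in 16-bit offsets, so every one of the 2,000 properties pays 4 bytes: 2,000 × 4 = 8,000 bytes, or about 8KB of pure bookkeeping. Split the same data across smaller objects and each offset shrinks to 1 or 2 bytes.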

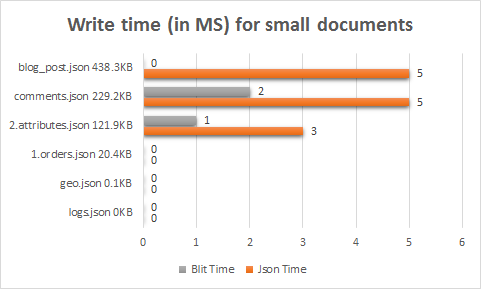

Now, let us see what it costs to write the documents back in JSON format. Remember that the Blit format (and someone please find me a good alternative for this name, I am really getting tired of it) is internal only. Externally, we consume and output JSON strings.

Well, there is a real problem here: because we are dealing with small documents, the timings are too short to really tell.

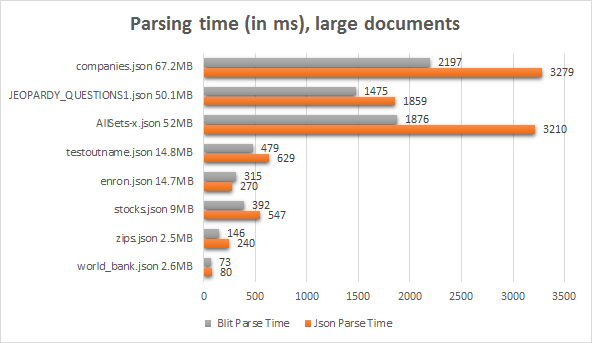

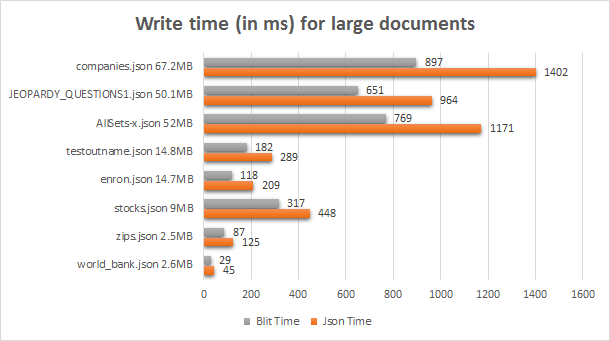

So let us see what happens with larger ones… I selected documents in the 2MB to 70MB range. And here are the results:

There is one outlier, and that is the Enron dataset. This dataset is composed of a relatively large number of large fields (those are emails, effectively). Because the Blit format also compresses large fields, we spend more time parsing and building the Blit format than we do when parsing JSON. Even in this case, however, we are only seeing a 17% increase in the time.
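The extra cost on Enron comes from the compression step for large fields. The post doesn't say which algorithm or size cut-off the Blit format uses, so this sketch swaps in `zlib` from the Python standard library and a hypothetical 128-byte threshold. It only illustrates the shape of the trade-off: short values are copied as-is, while long ones pay a compression call in exchange for fewer bytes on disk.

```python
import zlib
from typing import Tuple

COMPRESSION_THRESHOLD = 128  # hypothetical cut-off in bytes, not the real value


def encode_string(value: str) -> Tuple[bool, bytes]:
    """Return (is_compressed, payload) for a string value."""
    raw = value.encode("utf-8")
    if len(raw) < COMPRESSION_THRESHOLD:
        return False, raw                 # short values: no CPU spent compressing
    compressed = zlib.compress(raw)       # stand-in algorithm (assumption)
    if len(compressed) >= len(raw):
        return False, raw                 # didn't help, keep the original bytes
    return True, compressed


def decode_string(is_compressed: bool, payload: bytes) -> str:
    """Inverse of encode_string."""
    data = zlib.decompress(payload) if is_compressed else payload
    return data.decode("utf-8")


email_body = "Subject: quarterly report\n" * 100
flag, payload = encode_string(email_body)
print(flag, len(email_body), len(payload))
```

A dataset made mostly of email bodies hits the compression branch for nearly every value, which is why it is the one case where building the Blit format is slower than building a JObject.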

Remember that both the JSON and the Blit paths actually read the data using the same JsonTextReader; the only difference is what they do with it. The JSON path creates a JObject instance, which represents the entire document that was read.

Blit will create the same thing, but it will generate it in unmanaged memory. That turns out to have a pretty big impact on performance. We don't do any managed allocations, so the GC doesn't have to do any major amount of work during the process. It also turns out that with large documents, the cost of inserting so many items into so many dictionaries is decidedly non-trivial. In contrast, storing the data in the Blit format requires writing the data and incrementing a pointer, along with some bookkeeping data.
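The "write the data and increment a pointer" pattern can be sketched as below. Python can't show unmanaged memory or the absence of GC pressure, so this only illustrates the access pattern: a single growable buffer, a write position that moves forward, and returned offsets that the caller keeps as bookkeeping. `BumpWriter` and its methods are hypothetical names, not the RavenDB API.

```python
import struct


class BumpWriter:
    """Append-only writer over one buffer: copy bytes, advance the position."""

    def __init__(self, capacity: int):
        self.buffer = bytearray(capacity)
        self.pos = 0

    def _ensure(self, n: int) -> None:
        # Grow by at least doubling so appends stay amortized O(1).
        if self.pos + n > len(self.buffer):
            self.buffer.extend(bytearray(max(n, len(self.buffer))))

    def write_bytes(self, data: bytes) -> int:
        """Copy data at the current position and return where it starts."""
        self._ensure(len(data))
        start = self.pos
        self.buffer[start:start + len(data)] = data
        self.pos += len(data)
        return start

    def write_int32(self, value: int) -> int:
        """Write a little-endian 32-bit integer and return where it starts."""
        self._ensure(4)
        start = self.pos
        struct.pack_into("<i", self.buffer, start, value)
        self.pos += 4
        return start


writer = BumpWriter(4)
name_offset = writer.write_bytes(b"abc")
count_offset = writer.write_int32(7)
```

Compare this with building a JObject, where every property becomes its own object and its own dictionary entry. Here, each value costs one copy and one addition to `pos`, which is the difference the benchmark is picking up.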

As it turns out, that has some major ramifications. But that is only one side of it; what about the final sizes?

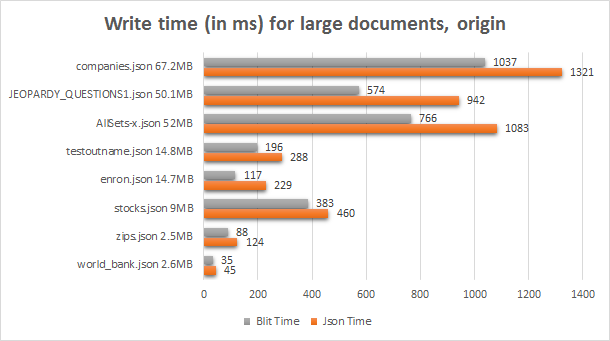

Here the results are pretty unambiguous: the moment you get to real sizes (typically starting from a few dozen KB), you start to see a major difference in the final document size. And now for the cost of writing those documents out again as JSON:

Here, too, we are much faster, for several reasons. Profiling has shown us that quite a bit of time is spent making sure that the values we write are properly escaped. With the Blit format, our source data was already valid JSON, so we can skip that step. In the graph above, we also don't return the exact same JSON. To be more precise, we return the same document, but the order of the fields is different (they are returned in lexical order, instead of the order in which they were defined).

We also have a mode that keeps the same order of properties, but it comes with a cost. You can see that in some of those cases, the time to write the document out went up significantly.
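Why would lexical order be the cheap path? A plausible reading, and it is an assumption here rather than something the post spells out, is that each object keeps its property table sorted by name. That makes single-property lookups a binary search and makes lexical output a straight walk over the table, while preserving the original order means an extra lookup per property. `SortedObject` is a hypothetical class, and it uses `json.dumps` for output, so unlike the real format it does re-escape the values.

```python
import bisect
import json


class SortedObject:
    """Hypothetical read-only object with a name-sorted property table."""

    def __init__(self, pairs):
        self.original_order = [name for name, _ in pairs]
        ordered = sorted(pairs, key=lambda p: p[0])
        self.names = [name for name, _ in ordered]
        self.values = [value for _, value in ordered]

    def get(self, name):
        """Binary search over the sorted names: O(log n), no text parsing."""
        i = bisect.bisect_left(self.names, name)
        if i < len(self.names) and self.names[i] == name:
            return self.values[i]
        raise KeyError(name)

    def to_json(self, preserve_order=False):
        if preserve_order:
            # Follow the original order: one binary search per property.
            items = ((n, self.get(n)) for n in self.original_order)
        else:
            # Lexical order: walk the sorted table directly.
            items = zip(self.names, self.values)
        return json.dumps(dict(items), separators=(",", ":"))


doc = SortedObject([("zip", "10001"), ("city", "NY"), ("age", 3)])
print(doc.to_json())
print(doc.to_json(preserve_order=True))
```

The same sorted table is what lets a large configuration document answer "give me this one setting" without scanning everything.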

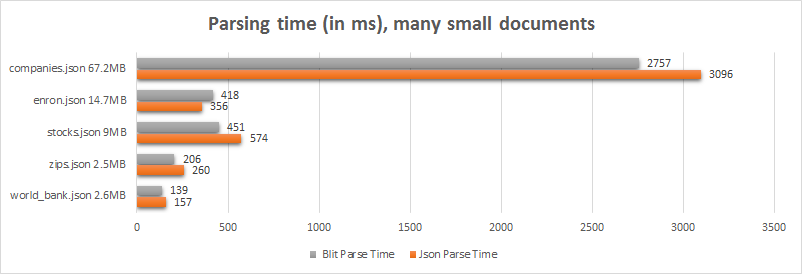

So far, I have only dealt with the cost of parsing a single document. What about multiple small documents? The large datasets from before were effectively a lot of small documents grouped into a single large document. For example, companies.json contains just over 18,000 small documents, zips has 25,000, etc. Here are the perf results:

As you can see, here, too, we are doing much better.

This is because the Blit code is actually smart enough to extract the structure of the documents from the incoming stream, and is able to use that to do a whole bunch of optimizations.
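The post doesn't list which optimizations the shared structure enables, so the following is only one hypothetical example of the idea: when thousands of documents share the same property names, work that depends only on the shape (here, sorting the names) can be done once and reused. `ShapeCache` is an illustrative name, and keying the cache on the exact property sequence is an assumption.

```python
class ShapeCache:
    """Hypothetical cache of per-shape layout work for documents with identical keys."""

    def __init__(self):
        self.layouts = {}
        self.hits = 0
        self.misses = 0

    def layout_for(self, doc: dict) -> tuple:
        shape = tuple(doc.keys())        # the shape is the property-name sequence
        layout = self.layouts.get(shape)
        if layout is None:
            self.misses += 1
            layout = tuple(sorted(shape))  # compute once: names in sorted order
            self.layouts[shape] = layout
        else:
            self.hits += 1                 # same shape seen before: reuse the layout
        return layout


cache = ShapeCache()
companies = [{"name": f"c{i}", "zip": str(i)} for i in range(1000)]
for company in companies:
    cache.layout_for(company)
```

For a stream like companies.json, almost every document is a cache hit, so the shape-dependent work is paid once instead of 18,000 times.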

Overall, I'm pretty happy with how this turned out to be. Next challenge, plugging this into the heart of RavenDB…

More posts in "The importance of a data format" series:

- (25 Jan 2016) Part VII – Final benchmarks

- (15 Jan 2016) Part VI – When two orders of magnitude aren't enough

- (13 Jan 2016) Part V – The end result

- (12 Jan 2016) Part IV – Benchmarking the solution

- (11 Jan 2016) Part III – The solution

- (08 Jan 2016) Part II – The environment matters

- (07 Jan 2016) Part I – Current state problems

Comments

How about Indexed format, or compact format, or binary indexed json => bixon, or bison, I think you guys like animal names

You appear to have two copies of your "Enron" comment. One is a small paragraph by itself immediately below the graph (uses the phrase "There is one outlier"), the second is embedded in the following paragraph, in the middle of discussing how similar JSON and Blit are (uses the phrase "There is one difference"). Guessing some kind of Copy & Paste issue.

David, Thanks, fixed

two questions: 1) are the documents in a given collection for the benchmark the same schema? 2) how does it perform on a bigger data set? Something like 30+ million items or 90GB collection.

If you used the bison (blit) format as the wire format you would get a great performance improvement not only at the server side but also at the client side. Although obviously, there are tons of work to do, for example at the client side you would need to implement serialization and deserialization to and from the bison format.

Jesus, We intentionally designed the format to be server side only. The reason for that is that this allows us to make assumptions. The blit format isn't streamable, for example, you need to read all of it before you can do something.

However, regarding Jesus' comment, is it not the case that when a user agent is using the Raven Client assembly, many of the methods it uses will always be reading whole documents and converting them to .Net objects? In that case, if the blit format is available as a choice, the Raven Client assembly could request it where appropriate, and possibly save on both bandwidth and the time required to deserialize into .Net objects. Any other assumptions you have built in could be built into the Raven Client assembly. Granted, it may restrict how/when you can rev the server if it causes a breaking change with the clients.

Piers, There are actually several issues with this. First, it would require us to have permissions to run unsafe code on the client side. Second, it would require a much higher level of security validation. If the client is consuming the Blit format, it could possibly also generate it, which means that we need to guard against malicious inputs. Third, it would make it utterly impossible to understand what is going on in the network just by looking at a network trace with a tool like Fiddler. And fourth, it would make it much harder to develop clients for any other platform.

It's pretty trivial to write custom packet decoders for e.g. Wireshark. I would not use network trace tool issues as a reason not to use a binary packet format.

But again, if you had gone with ASN.1, you wouldn't have this problem in the first place, the packet decoders already exist.

Howard, Going with a binary format means that we have to use Wireshark, sure. And we can trace things, sure. But that is a very small (yet important) aspect of things.

1) Wireshark requires admin / root privileges to run. Good luck trying to get it working on most production systems. 2) You lose the ability to hit an endpoint in the browser and look at the results. 3) There is a wealth of existing tools to monitor, benchmark, profile, and otherwise work with HTTP. 4) The cost of accessing the system from a new platform is drastically lower, as is the cost of writing scripts with curl / wget, etc.
