The importance of a data formatPart VI – When two orders of magnitude aren't enough

So our current version can index 18,000 documents in 22 ms vs. the JSON approach which takes 1.8 seconds. That is an amazing improvement, but I got to tell you, I got a good feeling about it.

Without running a profiler, I'm guessing that a major part of the 22 ms cost is going to be comparisons during a binary search process. We store the property names as a sorted array, and when you need to get a property by name, we need to do a binary search in the properties name to match it.

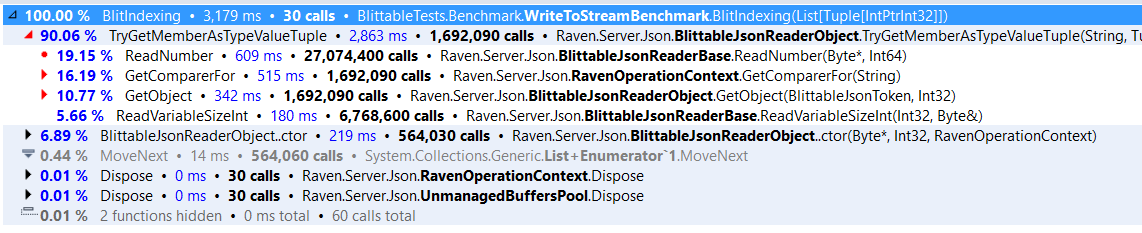

Our test case get 3 properties from a total of 18,801 documents. We do this 30 times, to reduce the chance of jitter getting in the way. Here are the profiler results:

As you can see, the cost is indeed where I expected it to be. Basically, the ReadNumber and GetComparerFor are the expressions of the number of binary searches.

But here we can take advantage on the fact that we know that most of those documents are going to have the same structure. In other words, once we have found the position of a particular property, there is a strong chance that the next time we'll look a property with the same name, we'll find it in the same position. Caching that and using this as the initial position for doing the binary search means that most of the time, we hit the right property right on the nose, and in the worst case, we have a single extra comparison to make.

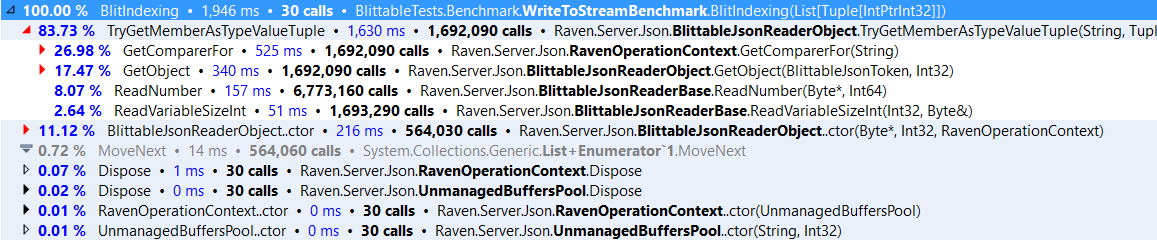

This single change gives us a really nice impact:

That is about 40% improvement in speed, from this single change.

We have been following similar patterns throughout the development of this feature. Write the feature, make sure it passes all its tests, then profiler it. Usually that indicate a problem that we need to solve. Either by optimizing code, or by changing the way we do things in the code. Sometimes radically. But that is a topic for the next blog post.

More posts in "The importance of a data format" series:

- (25 Jan 2016) Part VII–Final benchmarks

- (15 Jan 2016) Part VI – When two orders of magnitude aren't enough

- (13 Jan 2016) Part V – The end result

- (12 Jan 2016) Part IV – Benchmarking the solution

- (11 Jan 2016) Part III – The solution

- (08 Jan 2016) Part II–The environment matters

- (07 Jan 2016) Part I – Current state problems

Comments

That's a really nice trick.

Is the property heuristic adaptive? Otherwise if the first property access is done on a document that is very different than the rest it might work as an slowdown instead of an optimization.

@Pop there are 2 factors here that play a role. <br/><br/>

1st usually indexes request the same property multiple times. <br/>2nd the waste if you fail is theoretically bounded. We are doing binary search there so in the worst case (you select the first or last) you actually check, and sort of "restart" with the rest of the array which has size n - 1 :) ... That means in an O(log(n)) algorithm the runtime cost of failing is O(1). But more, the running time is also bounded to T(1) which is a single operations. <br/><br/> Having said that, if we ever need to make improvements to this, we have already looked into perfect hash functions that will trade-off a very small footprint (really small) to get an O(1) property access. But as always, no need to perform an optimization if there are bigger fishes to fry out there.

Pop Catalin, The result of this optimization is that at best, we get O(1) operational cost. At worst, we get O(logN) operational cost (which is the original cost, without the optimization). In practice, the worst case scenario with the optimization is that we might need to do a single additional comparison if we didn't get this, but this is very cheap

This is a radical change. Congratulations!

I think Pop Catalin has a valid point. It is a shame if we lose 40% performance only because the first object was structured differently. We can add hit rate on a property of it drops below 50% we can evict the old position and enter the latest miss.

Tal, That isn't actually how it works. On every successful match, we update the position. So if the first object is different, the next one will have a slightly higher cost, then all the rest would have an exact match

Indexing is too slow at the moment so I eagerly await these improvements!

Comment preview