I was taking part in a session at the MVP Summit today, and I came out of it absolutely shocked and bitterly disappointed with the product that was under discussion. I am not sure if I can talk about that or not, so we will skip the name and the purpose. I have several issues with the product itself and its vision, but that is beside the point that I am trying to make now.

What really bothered me is the utter ignorance of a critical requirement on the part of Microsoft, a company that is supposed to know what it is doing with software development. That requirement is source control.

- Source control is not a feature

- Source control is a mandatory requirement

The main issue is that the product uses XML files as its serialization format. Those files are not meant for human consumption and should be used only through a tool. The major problem here is that no one took source control into consideration when designing those XML files, so they are unmergeable.

Let me give you a simple scenario:

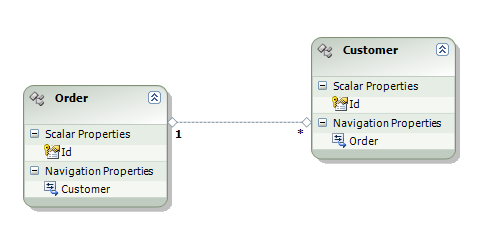

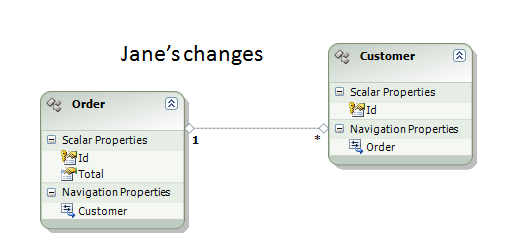

- Developer A makes a change using the tool, let us say that he is modifying an attribute on an object.

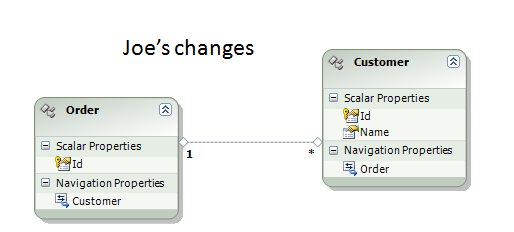

- Developer B makes a change using the tool, let us say that he is modifying a different attribute on a different object.

The result?

Whoever tries to commit last will get an error, because the file was already updated by the other developer. Usually in such situations you simply merge the two versions together and don't worry about it.
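
To make the "simply merge" case concrete, here is a minimal sketch of a line-by-line three-way merge. It is an illustration only: the `merge3` helper and the XML content are hypothetical, not the product's actual format, and the sketch assumes each developer edited lines in place without adding or removing any.

```python
def merge3(base, ours, theirs):
    """Naive three-way merge for files whose line count did not change.

    Returns the merged lines and the indexes of conflicting lines.
    """
    if not (len(base) == len(ours) == len(theirs)):
        raise ValueError("this sketch only handles in-place line edits")
    merged, conflicts = [], []
    for i, (b, o, t) in enumerate(zip(base, ours, theirs)):
        if o == t:        # both sides agree (changed identically or not at all)
            merged.append(o)
        elif o == b:      # only "theirs" touched this line
            merged.append(t)
        elif t == b:      # only "ours" touched this line
            merged.append(o)
        else:             # both sides changed the same line differently
            merged.append(b)
            conflicts.append(i)
    return merged, conflicts


# Hypothetical file contents, for illustration only.
base = [
    '<model>',
    '  <object id="customer" table="Customers" />',
    '  <object id="order" table="Orders" />',
    '</model>',
]

# Developer A modifies an attribute on one object.
dev_a = list(base)
dev_a[1] = '  <object id="customer" table="Clients" />'

# Developer B modifies a different attribute on a different object.
dev_b = list(base)
dev_b[2] = '  <object id="order" table="PurchaseOrders" />'

merged, conflicts = merge3(base, dev_a, dev_b)
print("\n".join(merged))
print("conflicts:", conflicts)
```

Because the two edits touch different lines, both changes survive and no conflict is reported. Real merge tools are far more capable than this, but they rely on the same property: unrelated changes must land on unrelated lines.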

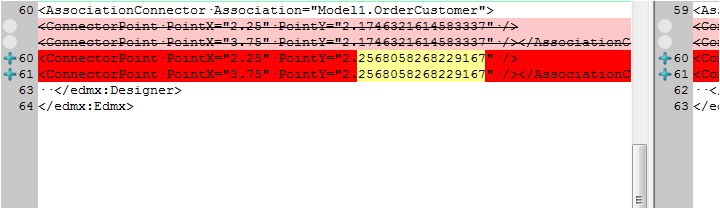

The problem is that this XML file is implemented in such a way that each time you save it, a whole bunch of stuff gets moved around, all sorts of unrelated things change, etc. In short, even a very minor change causes a significant change in the underlying XML.
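
The effect is easy to reproduce. The sketch below is an assumption-laden illustration, not the product's real serializer: `build_model`, `save`, and `changed_lines` are hypothetical helpers, and the `rotate` parameter merely simulates a tool that writes elements in a different order on each save. It compares how many lines a diff reports for the same one-attribute edit under a stable ordering and under an unstable one.

```python
import difflib
import xml.etree.ElementTree as ET


def build_model(order_table):
    """Build a tiny hypothetical model; only the 'order' table name varies."""
    root = ET.Element("model")
    for name, table in [("customer", "Customers"), ("order", order_table),
                        ("product", "Products"), ("invoice", "Invoices")]:
        ET.SubElement(root, "object", {"id": name, "table": table})
    return root


def save(root, rotate=None):
    """Serialize one element per line.

    rotate=None sorts elements by id (stable output).
    rotate=k shifts the element order by k, simulating a tool that
    moves things around on every save.
    """
    children = list(root)
    if rotate is None:
        children.sort(key=lambda e: e.get("id"))
    else:
        children = children[rotate:] + children[:rotate]
    lines = ["<model>"]
    lines += ["  " + ET.tostring(c, encoding="unicode") for c in children]
    lines.append("</model>")
    return lines


def changed_lines(before, after):
    """Count added plus removed lines in a unified diff (headers excluded)."""
    diff = difflib.unified_diff(before, after, lineterm="", n=0)
    return sum(
        1 for line in diff
        if line.startswith(("+", "-")) and not line.startswith(("+++", "---"))
    )


# The same logical edit in both cases: one attribute on one object.
stable = changed_lines(save(build_model("Orders")),
                       save(build_model("PurchaseOrders")))
noisy = changed_lines(save(build_model("Orders"), rotate=0),
                      save(build_model("PurchaseOrders"), rotate=2))

print("stable ordering, changed lines:", stable)
print("unstable ordering, changed lines:", noisy)
```

With a stable ordering, the diff contains only the line that actually changed. With an unstable ordering, the same edit drags unrelated lines into the diff, and on a real file with hundreds of elements that noise is what a merge tool has to fight through.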

You can see this in products that are shipping today, like SSIS, WF, DSL Toolkit, etc.

The problem is that when you try to merge, you have too many unrelated changes, which completely defeats the purpose of merging.

This, in turn, means that you lose the ability to work in a team environment. This product is supposed to be aimed at big companies. But it can't support a team of more than one! To make things worse, when I brought up this issue, the answer was something along the lines of: "Yes, we know about this issue, but you can avoid this using exclusive checkouts."

At that point, I am not really sure what to say. Merges happen not just when two developers modify the same file; they also happen when you have branches. As a simple scenario, I have a development branch and a production branch. Fixing a bug in the production branch requires touching this XML file. But if I made any change to it on the development branch, I can't merge the fix. What happens if I use a feature branch? Or version branches?

Not considering the implications of something as basic as source control is amateurish in the extreme. Repeating the same mistake, over and over again, across multiple products, despite customer feedback on how awful this is and how much it hurts the developers who are going to use it, shows contempt for the end developers. It is also a sign of an even more serious issue: the team isn't dogfooding the product. Not in any real capacity. Otherwise, they would have noticed the issue much sooner in the lifetime of the product, with enough time to actually fix it.

As it was, I was told that there is nothing to be done for the v1 release, which puts the fix (at best) two years or so away. This, for something that is a complete deal breaker for any serious development.

I have run into merge problems with SSIS that forced us to drop days of work and recreate everything from scratch, costing us something on the order of two weeks. I know of people who had the same issue with WF, and from my experiments, the DSL Toolkit has the exact same issue. The SSIS issues were initially reported in 2005, but are not going to be fixed for the 2008 release (or so I heard from public sources), which puts the nearest fix for something as basic as getting source control right two to three years away.

The same goes for the product that I am talking about here. I really don't get it. Does Microsoft think that source control isn't an important issue? They keep repeating this critically serious mistake!

For me, this is unprofessional behavior of the first degree.

Deal breaker, buh-bye.