A recurring choice that we have to make in databases is trading performance for scale. In other words, in order to process more requests per unit of time, we accept that a single request will take longer to process. Let’s see how this works in the case of transaction commits.

In the simplest model, a transaction does its work, prepares itself to be committed, writes itself to the journal, and then notifies the client that it has successfully committed. Note that the most important part of the process is writing to the journal, which is how the transaction maintains durability. It also tends to be, by far, the most expensive part of the operation.

This leads to a lot of attempts to change the equation. I covered one such option when discussing the details of the transaction journal: have a journal actor that is responsible for writing the transaction changes as they happen, amortizing the cost of writing them over time and across many transactions. Quite a few databases do this, but it rests on certain assumptions.

To start with, it assumes that transactions are relatively long and spend a lot of their time waiting for network I/O. This is the common model in the SQL world, where you open a connection, make a query, then make another query based on the results of that, and so on. The idea is that you overlap the cost of writing the changes to the journal with the time it takes to read from and write to the network.

But other databases do not use this model. Most NoSQL databases use the concept of a single command (which may aggregate several commands), but they don’t have the notion of a long conversation with the client. So there isn’t much of a chance to hide the cost of writing to the journal behind the time spent on the network.

Instead, a common trick is transaction merging. Transaction merging relies on the observation that I/O costs are not actually linear in the amount of I/O that you do. Oh, sure, writing 1 KB is going to be faster than writing 10 MB. But it isn’t going to be two orders of magnitude faster. In fact, it is actually more efficient to make a single 10 MB write than 10,240 writes of 1 KB each. If this makes no sense, consider buying groceries and driving back to your house. The weight of the groceries has an impact on the fuel efficiency of the car, but the length of the drive is of much higher importance in terms of how much fuel is consumed. If you buy 200 kg of groceries (you are probably planning a hell of a party) and drive 10 km home, you are going to waste a lot less fuel than if you make 4 trips with 50 kg in the trunk.
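
To put rough numbers on that, here is a toy cost model in Python. It is only a sketch: the fixed per-write cost and the bandwidth figure are assumptions picked for illustration, not measurements from any real disk.

```python
# Toy cost model: every write pays a fixed per-operation cost (think fsync)
# plus a transfer cost proportional to its size.
# Both constants are illustrative assumptions, not measurements.
FIXED_COST_MS = 5.0           # assumed per-write overhead (about 200 writes/sec)
BANDWIDTH_KB_PER_MS = 100.0   # assumed sequential throughput (about 100 MB/sec)


def write_cost_ms(size_kb: float, writes: int = 1) -> float:
    """Estimated time to issue `writes` separate writes of `size_kb` each."""
    return writes * (FIXED_COST_MS + size_kb / BANDWIDTH_KB_PER_MS)


total_kb = 10 * 1024                             # 10 MB of journal data
one_big = write_cost_ms(total_kb)                # a single 10 MB write
many_small = write_cost_ms(1, writes=total_kb)   # the same data, 1 KB at a time

print(f"single 10 MB write:   {one_big:10.1f} ms")
print(f"10,240 x 1 KB writes: {many_small:10.1f} ms")
```

Under these assumptions the single large write takes a little over 100 ms, while the small writes add up to tens of seconds, because the fixed cost is paid 10,240 times. That fixed cost is the "length of the drive" in the grocery analogy.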

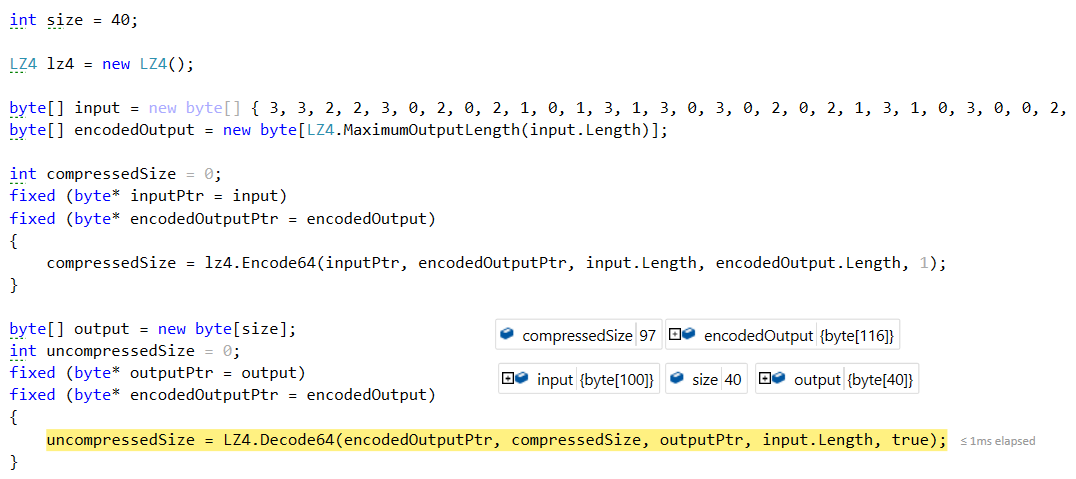

So what is transaction merging? Put simply, instead of calling the journal directly, we queue the operation we want to make, and let a separate thread run through all the concurrent operations. Here is the code:
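
As a hedged illustration, a minimal Python sketch of this queue-and-flush loop might look like the following. The names `MergingJournal`, `submit`, and `_flush` are hypothetical, a plain list stands in for the journal file, and a `time.sleep` call stands in for the real write and `fsync`.

```python
import queue
import threading
import time


class MergingJournal:
    """Hypothetical sketch: one writer thread merges queued operations."""

    def __init__(self, max_wait=0.005):
        self._pending = queue.Queue()
        self._max_wait = max_wait   # time limit (seconds) for filling a batch
        self.flushes = []           # stand-in for the journal file
        threading.Thread(target=self._run, daemon=True).start()

    def submit(self, operation):
        """Queue an operation and block until its batch has been flushed."""
        done = threading.Event()
        self._pending.put((operation, done))
        done.wait()

    def _run(self):
        while True:
            batch = [self._pending.get()]   # block until there is work
            deadline = time.monotonic() + self._max_wait
            while True:                     # keep collecting until time is up
                remaining = deadline - time.monotonic()
                if remaining <= 0:
                    break
                try:
                    batch.append(self._pending.get(timeout=remaining))
                except queue.Empty:
                    break
            self._flush([op for op, _ in batch])   # one write for the batch
            for _, done in batch:
                done.set()                  # only now wake the waiting callers

    def _flush(self, operations):
        time.sleep(0.005)                   # simulated cost of a single fsync
        self.flushes.append(operations)


# Usage: 100 concurrent callers, far fewer simulated journal writes.
journal = MergingJournal()
workers = [threading.Thread(target=journal.submit, args=(i,)) for i in range(100)]
for w in workers:
    w.start()
for w in workers:
    w.join()
print(len(journal.flushes), "flushes for", len(workers), "operations")
```

Callers are released only after their batch has been flushed, so the durability guarantee is the same as when each operation writes to the journal on its own.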

The secret here is that if we have a load on the system, by the time we read from the queue, there are going to be more items in there. This means that when we write to the journal file, we’ll write not just a single operation (a trip back & forth to the grocery store), but we’ll be able to pack a lot of those operations immediately (one single trip). The idea is that we buffer all of the operations, up to a limit (in the code above, we use time, but typically you would also consider space), and then flush them to the journal as a single unit.

After doing this, we can notify all of the operations that they have been successfully completed, at which point they can go and notify their callers. We traded off the performance of a single operation (the code is now more complex, and each operation waits for a larger write) in favor of being able to scale to a much higher number of operations. If your platform supports async, you can also give up the thread (and let some other request run) while you are waiting for the I/O to complete.
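
The async variant can be sketched with Python's `asyncio`, again under assumptions: `AsyncMergingJournal` and `commit` are hypothetical names, and `asyncio.sleep` stands in for the real journal write. The point to notice is that `await done` hands control back to the event loop, so other requests can run while the batch is being written.

```python
import asyncio


class AsyncMergingJournal:
    """Hypothetical asyncio variant: callers await instead of blocking."""

    def __init__(self):
        self._pending = []     # (operation, future) pairs awaiting a flush
        self._flusher = None   # task currently draining the buffer, if any
        self.flushes = []      # stand-in for the journal file

    async def commit(self, operation):
        done = asyncio.get_running_loop().create_future()
        self._pending.append((operation, done))
        if self._flusher is None or self._flusher.done():
            self._flusher = asyncio.create_task(self._drain())
        await done   # yields to the event loop until the batch is written

    async def _drain(self):
        while self._pending:
            # Take everything that accumulated so far as one batch.
            batch, self._pending = self._pending, []
            await asyncio.sleep(0.005)   # simulated journal write + fsync
            self.flushes.append([op for op, _ in batch])
            for _, done in batch:
                done.set_result(None)    # release every waiting caller


async def main():
    journal = AsyncMergingJournal()
    await asyncio.gather(*(journal.commit(i) for i in range(100)))
    return journal.flushes


flushes = asyncio.run(main())
print(len(flushes), "flushes for 100 commits")
```

There is no timer here: operations that arrive while a write is in flight simply become the next batch, which is one common way to get merging under load without adding latency when the system is idle.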

The number of I/O requests you make is lower, and the overall throughput is higher. Just to give you an example, in one of our tests, this single change moved us from doing 200 requests per second (roughly the maximum number of fsync() calls per second that the machine could support) to supporting 4,000 requests per second (a 20x increase in throughput)!
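
As a back-of-the-envelope check on those numbers, here is a small Python sketch. It assumes the simplification that the machine still manages about 200 flushes per second once each flush carries a batch, which ignores the extra transfer time of larger writes.

```python
FSYNCS_PER_SEC = 200   # roughly what the test machine could sustain


def requests_per_sec(avg_batch_size: float) -> float:
    """Throughput if every fsync commits `avg_batch_size` operations."""
    return FSYNCS_PER_SEC * avg_batch_size


unmerged = requests_per_sec(1)           # one operation per fsync
implied_batch = 4000 / FSYNCS_PER_SEC    # batch size implied by the result
merged = requests_per_sec(implied_batch)

print(f"no merging:   {unmerged:.0f} requests/sec")
print(f"with merging: {merged:.0f} requests/sec "
      f"(about {implied_batch:.0f} operations per fsync)")
```

In other words, under this simplified model the measured result corresponds to an average of about 20 operations sharing each journal write.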
