We run our test suite in a loop to discover any race conditions, timing issues, errors, etc. When doing so, we got a hard crash from dotnet.exe, and investigating the issue produced a stack trace inside the GC.

So I took a dump of the process memory and opened an issue in the CoreCLR repository, while giving it a very high priority internally and having someone look at it very closely. We use unsafe code extensively, so it was either a real GC bug or we had messed up somewhere and corrupted our own state.

Very quickly Jan Kotas was able to point out that it was a heap corruption issue as well as the likely avenues for investigation.

After looking at this, we found that the problem was in our tests, and in one specific test in particular. In order to test for the memory corruption, we changed that test to add markers that would show where it overwrote the buffer, and the test passed.

This caused us additional concern, because the only thing we could think of was that maybe some invariant was being broken. Our suspicion focused on the `fixed` statement in C# not working properly. Yes, I know, “hoof beats, horses, not zebras”.

So I went to the issue again and reported my findings, and Andy Ayers was kind enough to find the problem and point it out to me.

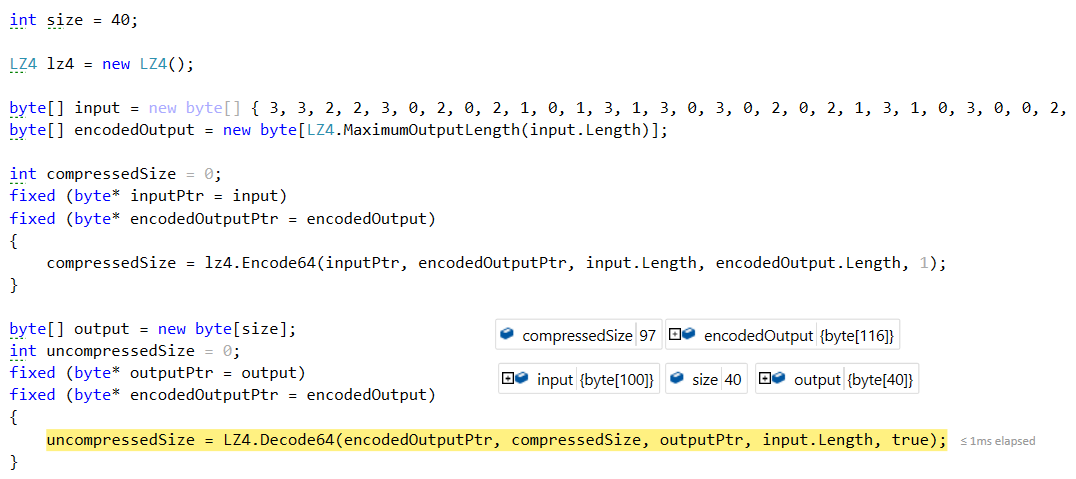

Here is the relevant test code:

This is during debugging, so you can see what the problem is. We defined size to be 40, and we defined an input buffer, whose size is 100.

A little bit below, we created an output buffer based on the size variable (40), and then wrote to it with the expected size of input.Length, which is 100. Everything behaved as it should, and we had a buffer overrun in the test, the heap was corrupted, and sometimes the GC died.
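The size mismatch described above can be sketched in a few lines. This is not the original test: `BufferSizeSketch` and `CopyChecked` are hypothetical names, and the sketch deliberately uses bounds-checked spans instead of raw pointers, assuming a runtime where `Span<T>` is available. It only shows the same arithmetic (an output buffer of 40 bytes, a write of 100) and how an explicit length check turns the overrun into an exception rather than silent heap corruption.

```csharp
using System;

public static class BufferSizeSketch
{
    // Hypothetical stand-in for the code under test: copies input into output
    // and returns the number of bytes written. Unlike a raw pointer write,
    // it refuses to run when the destination is too small.
    public static int CopyChecked(ReadOnlySpan<byte> input, Span<byte> output)
    {
        if (output.Length < input.Length)
        {
            throw new ArgumentException(
                $"Output buffer holds {output.Length} bytes but {input.Length} are required.",
                nameof(output));
        }

        input.CopyTo(output);
        return input.Length;
    }

    public static void Main()
    {
        const int size = 40;
        var input = new byte[100];

        // The mistake from the post: the output buffer is sized from `size` (40),
        // but the write uses input.Length (100).
        var output = new byte[size];

        try
        {
            CopyChecked(input, output);
        }
        catch (ArgumentException e)
        {
            // With unsafe pointer code there is no exception here;
            // the extra 60 bytes land on whatever follows the buffer.
            Console.WriteLine(e.Message);
        }
    }
}
```

With pointers obtained through `fixed`, nothing performs this check for you, which is why the same mistake in unsafe code corrupted the heap and only sometimes brought down the GC.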

Also, I feel very stupid about spouting all sorts of nonsense about bugs in the CLR when our code is unable to do simple arithmetic.

The good news: the bug was only in the tests, and the kind of support that you get from Microsoft on the CoreCLR is absolutely phenomenal. Thank you very much, guys.