Adaptive Domain Models with Rhino Commons

Udi Dahan has been talking about this for a while now. As usual, he makes sense, but I am working in a different enough context that it takes time to assimilate it.

At any rate, we have been talking about this for a few days, and I finally sat down and decided that I really needed to look at it with code. The result of that experiment is that I like this approach, but I am still not 100% sold.

The first idea is that we need to decouple the service layer from our domain implementation. But why? The domain layer is under the service layer, after all. Surely the service layer should be able to reference the domain. The reasoning here is that the domain model plays several different roles in most applications. It is the preferred way to access our persistent information (though it should not be aware of persistence), it is the central place for business logic, it is the representation of our notions about the domain, and it does much more that I am probably leaving aside.

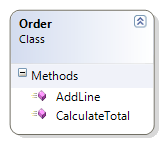

The problem is that these roles place conflicting requirements on the model. Let us take a simple example of an Order entity.

Order can do several things: it can accept a new line, and it can calculate the total cost of the order.

But those are two distinct responsibilities that live on the same entity. What is more, they have completely different persistence-related requirements.

I talked about this issue here, over a year ago.

So, we need to split the responsibilities so we can take care of each of them independently. But it doesn't make sense to split the Order entity itself, so instead we will introduce purpose-driven interfaces. Now, when we want to talk about the domain, we can view certain aspects of the Order entity in isolation.
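A minimal sketch of what purpose-driven interfaces on a single entity could look like. IOrderCostCalculator is the name used later in this post; IOrderLineAdder, OrderLine, and the decimal-based total are hypothetical names and assumptions for illustration, not the actual sample code.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical line item; a real entity would be mapped by the ORM.
public class OrderLine
{
    public string Product { get; set; }
    public decimal Price { get; set; }
    public int Quantity { get; set; }
}

// Role interface (hypothetical name): the "accept a new line" responsibility.
public interface IOrderLineAdder
{
    void AddLine(OrderLine line);
}

// Role interface: the "calculate the total cost" responsibility.
public interface IOrderCostCalculator
{
    decimal CalculateTotal();
}

// One entity implements both roles; each caller sees only the role it needs.
public class Order : IOrderLineAdder, IOrderCostCalculator
{
    private readonly List<OrderLine> lines = new List<OrderLine>();

    public int Id { get; set; }

    public void AddLine(OrderLine line) => lines.Add(line);

    public decimal CalculateTotal() => lines.Sum(l => l.Price * l.Quantity);
}
```

Code that only adds lines depends on IOrderLineAdder and never sees CalculateTotal(), even though both roles are backed by the same Order instance.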

This leads us to the following design:

And now we can refer to the separate responsibilities independently. Doing this based on the type opens up the non-invasive API approaches that I talked about before. You can read Udi's posts about it to learn more about the concepts. Right now I am more interested in discussing the implementation.

First, the unit of abstraction that we work in is the IRepository<T>, as always.

The major change is the introduction of a ConcreteType to the repository. The repository now uses the ConcreteType instead of the typeof(T) that it was created with. This affects all queries done with the repository (of course, if you don't specify a ConcreteType, nothing changes).
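To make the ConcreteType idea concrete, here is a sketch of a repository that queries for ConcreteType when it is set and falls back to typeof(T) otherwise. It assumes a hypothetical in-memory session that, like an ORM, only knows how to query mapped concrete types; InMemorySession and InMemoryRepository<T> are illustrative names, not Rhino Commons classes.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for a session: entities are stored by concrete type.
public class InMemorySession
{
    private readonly Dictionary<Type, List<object>> store = new Dictionary<Type, List<object>>();

    public void Save(object entity)
    {
        if (!store.TryGetValue(entity.GetType(), out var list))
            store[entity.GetType()] = list = new List<object>();
        list.Add(entity);
    }

    // Like an ORM, it can only query types it has "mapped" (stored).
    public IEnumerable<object> Query(Type mappedType) =>
        store.TryGetValue(mappedType, out var list) ? list : Enumerable.Empty<object>();
}

// Hypothetical repository: queries target ConcreteType when it is set.
public class InMemoryRepository<T> where T : class
{
    private readonly InMemorySession session;

    public InMemoryRepository(InMemorySession session) { this.session = session; }

    public Type ConcreteType { get; set; }

    private Type QueryType => ConcreteType ?? typeof(T);

    public IList<T> FindAll() => session.Query(QueryType).Cast<T>().ToList();
}

public interface IOrderCostCalculator { decimal CalculateTotal(); }

public class Order : IOrderCostCalculator
{
    public decimal Total { get; set; }
    public decimal CalculateTotal() => Total;
}
```

Without a ConcreteType, a query for IOrderCostCalculator finds nothing, because no mapped type has that name; with ConcreteType set to Order, the same repository returns the Order instances typed as the interface.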

The repository got a single new method:

T Create();

This allows you to create new instances of the entity without knowing its concrete type. And that is basically it.
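A sketch of how Create() can work without the caller knowing the concrete type, assuming the repository simply instantiates its ConcreteType through reflection. CreatingRepository<T> is a hypothetical name, and this is not necessarily how Rhino Commons implements it.

```csharp
using System;
using System.Collections.Generic;

public interface IOrderLineAdder
{
    void AddLine(string product);
    int LineCount { get; }
}

public class Order : IOrderLineAdder
{
    private readonly List<string> products = new List<string>();
    public int LineCount => products.Count;
    public void AddLine(string product) => products.Add(product);
}

// Hypothetical repository fragment: only the Create() part is shown.
public class CreatingRepository<T> where T : class
{
    public CreatingRepository(Type concreteType)
    {
        // Fail early if the configured type cannot play the requested role.
        if (!typeof(T).IsAssignableFrom(concreteType))
            throw new ArgumentException($"{concreteType} does not implement {typeof(T)}");
        ConcreteType = concreteType;
    }

    public Type ConcreteType { get; }

    // Callers ask for T; the repository decides which class to instantiate.
    public T Create() => (T)Activator.CreateInstance(ConcreteType);
}
```

Activator.CreateInstance(Type) needs a public parameterless constructor, so this style of Create() cannot pass constructor arguments; that limitation comes up again in the comments.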

Well, not really :-)

I introduced two other concepts as well.

public interface IFetchingStrategy<T> { ICriteria Apply(ICriteria criteria); }

IFetchingStrategy can intervene in the way queries are constructed. As a simple example, you could build a strategy that forces eager loading of the OrderLines collection whenever IOrderCostCalculator is being queried.

There is no complex configuration involved in setting up IFetchingStrategy. All you need to do is register your strategies in the container and let the repository do the rest.
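A sketch of a fetching strategy and of a repository applying every strategy registered for T. To keep it self-contained, ICriteria here is a hypothetical stand-in with an invented SetEagerFetch method; against real NHibernate the strategy would call something like criteria.SetFetchMode("OrderLines", FetchMode.Eager). FetchingRepository<T> is also hypothetical, and the strategies are passed in directly where a container would normally resolve them.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for NHibernate's ICriteria: it only records
// which association paths should be eagerly fetched.
public interface ICriteria
{
    ICriteria SetEagerFetch(string associationPath);
    IReadOnlyCollection<string> EagerPaths { get; }
}

public class FakeCriteria : ICriteria
{
    private readonly List<string> paths = new List<string>();
    public ICriteria SetEagerFetch(string associationPath) { paths.Add(associationPath); return this; }
    public IReadOnlyCollection<string> EagerPaths => paths;
}

// Same shape as the interface in the post.
public interface IFetchingStrategy<T> { ICriteria Apply(ICriteria criteria); }

public interface IOrderCostCalculator { decimal CalculateTotal(); }

// Calculating the cost needs every line, so load the collection up front.
public class EagerLoadLinesForCostCalculation : IFetchingStrategy<IOrderCostCalculator>
{
    public ICriteria Apply(ICriteria criteria) => criteria.SetEagerFetch("OrderLines");
}

// Hypothetical repository fragment: runs every strategy for T, in order,
// over the criteria before the query would be executed.
public class FetchingRepository<T>
{
    private readonly IEnumerable<IFetchingStrategy<T>> strategies;

    public FetchingRepository(IEnumerable<IFetchingStrategy<T>> strategies) { this.strategies = strategies; }

    public ICriteria BuildCriteria() =>
        strategies.Aggregate((ICriteria)new FakeCriteria(), (c, s) => s.Apply(c));
}
```

Because strategies are keyed by the role interface, querying through IOrderLineAdder would pick up none of them and leave OrderLines lazy.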

However, doesn't this mean that we now need to explicitly register repositories for all our entities (and for all their interfaces)?

Well, yes, but no. Technically we need to do that. But we have help in the form of EntitiesToRepositories.Register, so we can just put the following line somewhere in the application startup and we are done.

EntitiesToRepositories.Register(IoC.Container, UnitOfWork.CurrentSession.SessionFactory, typeof(NHRepository<>), typeof(IOrderCostCalculator).Assembly);
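The internals of EntitiesToRepositories.Register are not shown in this post, but the core of the job is a type mapping: each entity gets a repository for itself, and each interface it implements gets a repository whose ConcreteType is that entity. The sketch below computes only that mapping from a list of entity types (which in real code would come from the session factory's metadata). RoleInterfaceMap is hypothetical, and skipping interfaces implemented by several entities is an assumption, not documented Rhino Commons behavior.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public interface IOrderCostCalculator { }
public interface IOrderLineAdder { }
public interface IAuditable { }

public class Order : IOrderCostCalculator, IOrderLineAdder, IAuditable { }
public class Customer : IAuditable { }

public static class RoleInterfaceMap
{
    // Maps every entity type to itself, and every interface implemented by
    // exactly one entity to that entity (i.e. the repository's ConcreteType).
    public static Dictionary<Type, Type> Build(IEnumerable<Type> entityTypes)
    {
        var entities = entityTypes.ToList();
        var map = entities.ToDictionary(e => e, e => e);

        var byInterface = entities
            .SelectMany(e => e.GetInterfaces(), (e, i) => new { Entity = e, Interface = i })
            .GroupBy(x => x.Interface);

        foreach (var group in byInterface)
        {
            var implementers = group.Select(x => x.Entity).Distinct().ToList();
            // Ambiguous roles (several implementers) are left unregistered.
            if (implementers.Count == 1)
                map[group.Key] = implementers[0];
        }
        return map;
    }
}
```

Each resulting pair would then become a container registration of IRepository<key> implemented by NHRepository<key> with ConcreteType set to the value; that wiring is omitted because it depends on the container API.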

And this is it, you can start working with this new paradigm with no extra steps.

As a side benefit, this really paves the way for complex multi-tenant applications.

Comments

So if I resolve both interfaces, will I get 2 different instances of the Order?

Or should it be that you can register both interfaces for the same concrete type? (Remember, we had this discussion on the Unity forums.)

In my book, there is no such thing as CalculateTotal(). There is a derived (calculated) Total property, and it lives on an OrderLineCollection along with many other properties that apply to all the lines as a whole.
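A sketch of the design this comment describes, with the total as a derived property on a custom collection. OrderLineCollection follows the comment; subclassing List<OrderLine>, the TotalQuantity property, and decimal amounts are assumptions made to keep it short.

```csharp
using System.Collections.Generic;
using System.Linq;

public class OrderLine
{
    public decimal Price { get; set; }
    public int Quantity { get; set; }
}

// Hypothetical custom collection: facts about all lines as a whole live here.
public class OrderLineCollection : List<OrderLine>
{
    public decimal Total => this.Sum(l => l.Price * l.Quantity);
    public int TotalQuantity => this.Sum(l => l.Quantity);
}

public class Order
{
    public OrderLineCollection Lines { get; } = new OrderLineCollection();
}
```

Callers then write order.Lines.Total, which is exactly the trade-off debated in the replies.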

You don't resolve an Order, it is an entity.

Two interfaces for a service is a bad thing, for entity, it is a good idea.

I disagree about the way to handle total.

Having to do something like order.Lines.Total is messy from my point of view. You need to write a lot more code, violate the Law of Demeter, and deal with a lot of cases (a custom collection with lazy loading, for instance) that simply don't arise if you don't go there.

I can see your point for order.Total, however, and then it is just a question of what exactly it does.

If it is a simple summation, sure. But it can also be something like:

public Money CalculateTotal(IDiscountService discountService, IProductCostRules[] rules);
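A sketch of why CalculateTotal can be more than a simple summation: the total depends on services passed in at call time. IDiscountService and IProductCostRules are the names from the signature above, but their members, the sample implementations, and using decimal in place of Money are all hypothetical.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical collaborators; names from the comment, members assumed.
public interface IProductCostRules
{
    decimal Adjust(string product, decimal cost);
}

public interface IDiscountService
{
    decimal DiscountFor(decimal subtotal);
}

public class OrderLine
{
    public string Product { get; set; }
    public decimal Price { get; set; }
    public int Quantity { get; set; }
}

public class Order
{
    private readonly List<OrderLine> lines = new List<OrderLine>();

    public void AddLine(OrderLine line) => lines.Add(line);

    // Each line's cost passes through every rule in turn, then an
    // order-level discount is subtracted from the subtotal.
    public decimal CalculateTotal(IDiscountService discountService, IProductCostRules[] rules)
    {
        decimal subtotal = lines.Sum(line =>
            rules.Aggregate(line.Price * line.Quantity,
                            (cost, rule) => rule.Adjust(line.Product, cost)));
        return subtotal - discountService.DiscountFor(subtotal);
    }
}

// Sample implementations for the usage example (hypothetical).
public class BulkRule : IProductCostRules
{
    public decimal Adjust(string product, decimal cost) => product == "bulk" ? cost * 0.5m : cost;
}

public class TenPercentOff : IDiscountService
{
    public decimal DiscountFor(decimal subtotal) => subtotal * 0.1m;
}
```

With a "bulk" line of 2 × 10 (halved to 10) and another line of 2 × 5, the subtotal is 20 and the total after 10% off is 18.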

As for this:

IOrderCalculator a = Repository<IOrderCalculator>.Get(1);

IOrderAdder b = Repository<IOrderAdder>.Get(1);

You get two references to the same object, not two instances.
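A sketch of why both role-typed lookups return the same object: within one unit of work, an identity map hands back the instance already loaded for a given type and id. UnitOfWorkSession and RoleRepository<T> are hypothetical, and RoleRepository<T> hard-codes Order as the concrete type where a real repository would use its ConcreteType.

```csharp
using System;
using System.Collections.Generic;

public interface IOrderCalculator { decimal CalculateTotal(); }
public interface IOrderAdder { void AddLine(decimal amount); }

public class Order : IOrderCalculator, IOrderAdder
{
    private decimal total;
    public int Id { get; set; }
    public void AddLine(decimal amount) => total += amount;
    public decimal CalculateTotal() => total;
}

// Hypothetical unit of work with an identity map: one instance per (type, id).
public class UnitOfWorkSession
{
    private readonly Dictionary<(Type, int), object> identityMap = new Dictionary<(Type, int), object>();
    private readonly Func<Type, int, object> load;

    public UnitOfWorkSession(Func<Type, int, object> load) { this.load = load; }

    public object Get(Type concreteType, int id)
    {
        var key = (concreteType, id);
        if (!identityMap.TryGetValue(key, out var entity))
        {
            entity = load(concreteType, id); // simulated database hit
            identityMap[key] = entity;
        }
        return entity;
    }
}

// Hypothetical role-typed repository; the concrete type is fixed to Order here.
public class RoleRepository<T> where T : class
{
    private readonly UnitOfWorkSession session;
    public RoleRepository(UnitOfWorkSession session) { this.session = session; }
    public T Get(int id) => (T)session.Get(typeof(Order), id);
}
```

A change made through IOrderAdder is immediately visible through IOrderCalculator, because there is only one Order behind both references.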

Yeah I read Udi's posts a while back, interesting stuff.

I must admit I've never really thought role interfaces made sense in our domain model, but I'm always interested to see how others use them. Anyway, it does worry me slightly that the main driver could end up being performance and that in the process we might lose some of the essence of DDD. Not sure though.

It also seems odd now to see repositories returning not aggregates but just single views onto an aggregate. Plus, one of the key things about an aggregate is that when you commit your changes to it, the aggregate should ensure all invariants within the aggregate are satisfied. However, what happens if that means accessing data that you haven't retrieved for the little interface you requested? I know you could change the fetching strategy, but it's just getting more complex, though maybe there are workarounds.

More specifically, in this example, having repositories create objects, seemingly without passing in arguments, seems quite restrictive. Having said that, I haven't seen the code, so maybe I'm missing something.

It is interesting how differently people practice DDD. Some people use AOP a lot, some use heavyweight service layers, some use this approach, some use IoC to inject validation rules, etc.

@Ayende

"Having to do something like order.Lines.Total is messy from my point of view. You need to write a lot more code, violate the law of Demeter, and deal with a lot of cases that are inapplicable (custom collection with lazy loading) if you don't go there."

Originally I thought the same, but I find custom collection classes help clarify things a bit; otherwise your aggregate roots' interfaces can get cluttered (though you'd handle that with interfaces).

Anyway, I think moving some behavior to the collections and forcing you to do order.Lines.Total is OK, not least because the collection itself has domain meaning, so I don't mind talking about it.

"Two interfaces for a service is a bad thing, for entity, it is a good idea."

You could be right, but when do you use them? Is it for cross-module (domain) situations, only for customizing them for use by the upper layers, or maybe for all cross-aggregate associations?

I definitely use them across modules, but so far I've been happy to let the "upper" layers access the entities directly, or for different aggregates to directly reference each other's roots.

Colin,

Interfaces for the entities actually improve the DDDness of the code.

You can think of them as bounded-context views into the entity.

@Ayende

Not sure. Repositories are related to aggregates, so breaking that link seems to me to be moving away from DDD? I know that technically it's still based on aggregates, but surely the link is now less direct.

Also to me bounded contexts are something different again, at a higher level (about tying your model to a particular context). That was my understanding of Evans' use of the term.

Fowler talks about the same thing here: http://martinfowler.com/bliki/RoleInterface.html

... which eventually leads here http://www.objectmentor.com/resources/articles/isp.pdf

One thing that bothers me: does anyone have real-world experience with "role interfaces"? Does it scale? Won't it lead to a kind of "zillion interfaces" antipattern? Coarse-grained interfaces have their place too, you see.

Udi does.

Hadn't noticed the term "header interface" before, nice.

To be fair my main problem with a lot of advice on interfaces is that it doesn't focus enough on role interfaces, instead it just assumes that extracting any old interface will improve your design.

Anyway so far we've tended to use role interfaces for associations between domain modules, and in those situations they work a treat.

Victor,

The number of interfaces you'll have in the system corresponds to the number of use cases.

I've worked on very large systems and have not seen "zillions of interfaces" using this pattern.

Further, the larger the system, the more we find sub-domains so that the relative surface area a developer sees stays capped.

I would call that a faceted interface approach; it makes sense to me for separation of concerns.

The concern is when a given client needs two or more facets to work with...

Here a mixin or some interface composition scheme would be great.

IOrderSummaryPresenter.PresentOrder(mixin::(IOrderCalculator, IOrderInspector) orderRepresentation) // ugly syntax
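C# can already express "a client that needs two facets" without new syntax: a generic method can constrain its type parameter to several interfaces at once. A sketch using the interface names from this comment; the members of IOrderCalculator and IOrderInspector and the OrderSummaryPresenter class are assumptions.

```csharp
public interface IOrderCalculator { decimal CalculateTotal(); }
public interface IOrderInspector { int LineCount { get; } }

public class Order : IOrderCalculator, IOrderInspector
{
    public int LineCount { get; set; }
    public decimal CalculateTotal() => LineCount * 10m; // placeholder pricing
}

public static class OrderSummaryPresenter
{
    // T must implement both facets, so the method can use either one
    // without casting and without the caller passing the object twice.
    public static string PresentOrder<T>(T order)
        where T : IOrderCalculator, IOrderInspector
    {
        return $"{order.LineCount} lines, total {order.CalculateTotal()}";
    }
}
```

The limitation is that the argument's static type must implement both interfaces (Order itself, or a combined interface), so a value typed only as IOrderCalculator will not compile here.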
