Algorithms, joins and performance

I thought about moving from hashtables to Dictionary<T,K>, I got interesting results.

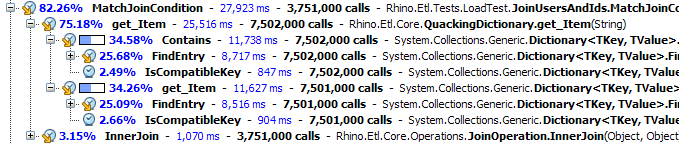

For simple new Dictionary<string,object>(), I expected a significant improvement, but I got this:

This is actually much worse than the result of hashtable + ignore case comparison.

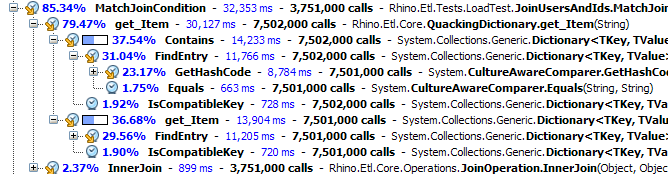

When I used that, I got this horrendous result:

I tried various other tricks, but none of them change the fact that making 7.5 million calls are going to cost a lot of time. And I want to support more than just 2,500 x 1,500.

I changed the implementation to look like this:

rightRowsByJoinKey = {}

for rightRow in right:

key = rightRow.CreateKey( rightJoinParams )

rightRowsByJoinKey[ key ] = [] unless rightRowsByJoinKey[ key ]

rightRowsByJoinKey[ key ].Add(rightRow)

for leftRow in left:

key = leftRow.CreateKey( leftJoinParams )

for matchingRight in rightRowsByJoinKey[ key ] :

yield MergeRows( leftRow, rightRow )

Now I have N + M, instead on N*M.

From performance perspective, it means that doing nested loop join on 2,500 x 1,500 result in 3.5 millions comparisons, which is quite a bit, even for such a small set of rows. It took over 6 seconds to run on my machine.

A hash join, however,will perform measly 5,000 operations to do the same amount of work. On my machine, 2,500 x 1,500 completes in 0.2 seconds, most of which are spend it just initialization of the framework.

I try to take that to a spin on with two orders of magnitude more rows, 250,000 x 150,000 has completed in 5.48 seconds. Which is very encouraging.

Hash join is not applicable if you want to join over anything but equality, which is why we need the nested loops join as well.

Comments

Strange that you are doing this in memory instead of having database handle it.

Bunter,

In this case, I am doing a join of items from file into the results of a web service calls and then joining that to a DB call.

The result goes to a second DB.

The context is an ETL tool, not a DB

Have you considered using a local DB as a temp store? At MP3.com we used unix join/sort/files/cut commands on text file for ETL because it beat in-memory operations. I recently talked to one of the warehouse guys from those days and he told me that he now uses SQL Lite as a local store for doing his transformations and found it to beat in memory operations.

I considered this, sure.

See previous discussion on the blog. Right now I don't need it, but it is the logical next step, I think

Using DSA database was exactly what I refering to. In memory operations quickly become impossible if your size of your data grows. Then you would have to implement a lot of functionality most DB engines offer otb. But that ain't su much fun :)

Have you tried Perfect Hashing? You know the keys in advance, and access them much more times then their count. You can get really fast lookup.

And what if you use a HybridDictionary ?

With large collections, a Hashtable is more performant.

The HybridDictionary uses internally a hashtable when the collection is large, and a ListDictionary when the collection is small.

What are the results if you use a HybridDictionary ?

Comment preview