Performance, Joins and why you should always have a profiler

I did some heavy duty import process yesterday, and we run into severe performance issue with Rhino ETL joins. Five joins with about 250,000 records on the initial left and a few tens of thousands on the rights took about 2 hours to complete.

That was unacceptable, and I decided that I have to fix this issue. I had a fairly good idea about what the issue was. Rhino ETL supports nested loops joins only at the moment, which means that the join is performed as (pseudo code):

for leftRow in left: for rightRow in right: if MatchJoinCondition(leftRow, rightRow): yield MergeRows(leftRow, rightRow)

Obviously the N*M was what causing the problem right? I quickly built a trivial join test, which joined 2,500 rows on the left with 1,500 rows on the right. Trivial stuff, and should result in 1,500 rows returned.

It executed in 6 seconds. That was a shock.

Well 1,500 * 2,500 = 3,750,000, but it should be that bad.

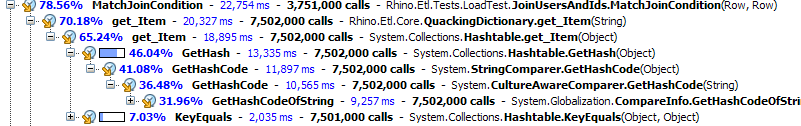

Then I run the code under a profiler, and it completed in 29 seconds, but I also saw this:

It is not the nested loop that cost me all this money, it was the hash table lookups!

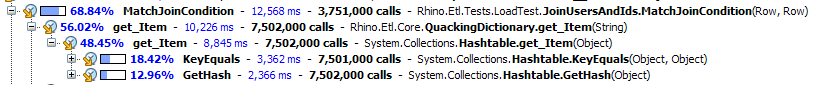

The most expensive call was GetHashCodeOfString, we have some globalization stuff here, because I told the hashtable to be case insensitive, I tried removing that and run it under the profiler again, now it dropped to 18 seconds, and we had this cost structure to deal with:

We still spend almost all of our time just doing hash table lookups, although we dropped by 10 seconds this time.

I don't think that I would have ever consider the cost of simply doing the hashtable lookups as the primary cost of the join operations.

Comments

what profiler do you use?

Yeah, I've been bitten by that type of thing before. Even though I know I need to run the profiler first, I still get surprised when I see the results.

As a side note, Rico Mariani has a pretty cool performance oriented blog that deals with some issues like this. In fact, he's written relatively recently about hash codes.

For a profiler, I have always used AQTime. Although from what I've seen, I'm pretty sure I'd be happy with dotTrace as well.

+1 to the profiler question. We're looking for one where I work as well and I'd love a recommendation.

The very same thing happened to me the other week. We had a loooong running query that we knew took a few minutes. We've been struggling with that query for days and pretty much couldn't get it to execute any faster.

And... The same happened to us, we had a look using dotTrace and... Voila! Almost 30% of the time was spent inside our GetHashCode method. We fixed that and as a bonus - suddenly a lot of our other queries ran a lot faster because it was implemented in a superclass.

Based on the screenshots, it looks like he's using dotTrace from JetBrains.

http://www.jetbrains.com/profiler/index.html

Where else did you expect to spend the time if not in the code you're executing? Of course, the code in the most inner loop should be as fast as possible, BUT you gain significantly more performance boosts from reducing the count of iterations. The results of the profiler are exactly what I expect to see if I'm profiling such code.

If you want to optimize your code, I see two chances:

Memorize the joins (e.g. store which rows satisfy your MatchJoinCondition)

Implement your own IEqualityComparer to compare two strings. The string's GetHashCode always generates a hash code involving the whole string. This produces very good hash values but at a cost (proportional to the characters in the string). You may use special information about the strings to create quite good hash values.

Comment preview