Making code fasterPulling out the profiler

After doing all I can without reaching out to the profiler, and managing to get x45 performance gain, let us see what the profiler actually tells us. We’ll use the single threaded version, since that is easier.

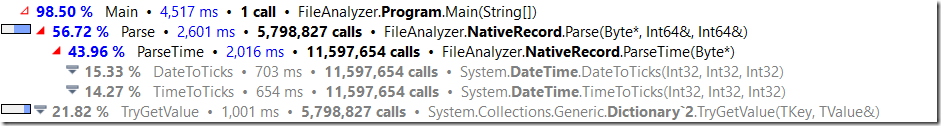

Here it is:

We can see that dictionary operations take a lot of time, which is to be expected. But what is very surprising is that the date time calls are extremely expensive in this case.

The relevant code for those is here. You can see that it is pretty nice, but there are a bunch of things there that are likely costing us. The exception inside the method prevents in lining, there is error handling here that we don’t need, since we can safely assume in this exercise that the data is valid, etc.

So I changed the ParseTime to do this directly, like so:

And that saved us 11%, just this tiny change.

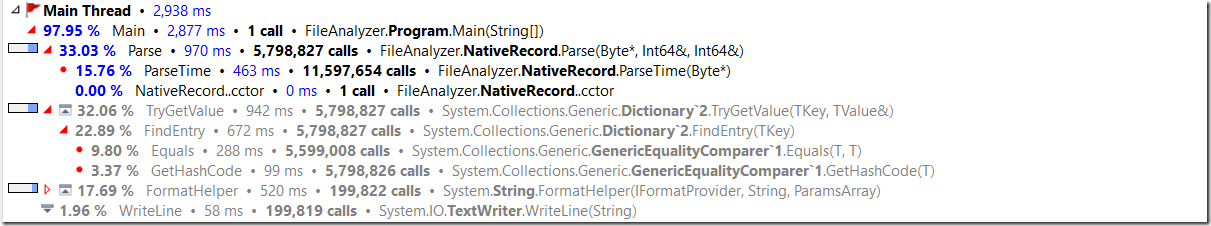

Here are our current costs:

Note that we reduced the cost of parse significantly ( at the cost of error handling, though ), but there are still a lot of work being done here. It turns out that we were actually measuring the time to write to the summary file as well (that is what all those FormatHelpers calls are), so that dirty the results somewhat, but nevermind.

The next place that we need to look at is the Dictionary, it is expensive, even though the usage of FastRecord means that we only need a single call per line, that isn’t so much fun. Note that it is using the GenericEqualityComparer, can we do better?

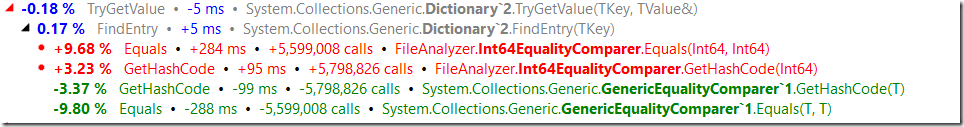

Trying to create my own equality comparer for longs doesn't really help.

So we’ll go back to the parallel version with the ParseTime optimization, and we are now running at 628 ms. And at this rate, I don’t think that there is a lot more room for improvements, so unless someone suggests something, we are done.

More posts in "Making code faster" series:

- (24 Nov 2016) Micro optimizations and parallel work

- (23 Nov 2016) Specialization make it faster still

- (22 Nov 2016) That pesky dictionary

- (21 Nov 2016) Streamlining the output

- (18 Nov 2016) Pulling out the profiler

- (17 Nov 2016) I like my performance unsafely

- (16 Nov 2016) Going down the I/O chute

- (15 Nov 2016) Starting from scratch

- (14 Nov 2016) The obvious costs

- (11 Nov 2016) The interview question

Comments

People have already suggested using a simple array with IDs indexing into it, but that'd be ~400MB for ints and ~800MB for longs. Could we reduce the memory usage of the array, perhaps by sacrificing accuracy? Reducing the resolution from ticks to seconds lets us stuff 18 hours into an unsigned short, at the expense of rounding errors at the seconds level.

@Alex that would depend on what the data is being used for. On some systems dealing with rounding errors of a second is nothing. But if what you are reading is logs, those ticks makes a huge difference. Having said that, you can use a different trick instead. You can still use a trick like that... Just take the lower 16 bits and encode the other 48 bits in a variable size format. There is ample chance that you can encode those remaining bits in far less bits than 48 (16 maybe?)

Don't you need to consider leap seconds also?

@Alex, as already remarked before - refer to the comments on the first post of this topic The interview question - the data to be used consists of an id key that has 8 digits in the alphabet '0' .. '9' and could be encoded as a max 27 bit

uintand a duration value with a seconds resolution that can be encoded in auint.As the solution I posted (refer to comments under the I like my performance unsafely blog article) shows, it is actually possible for the given dataset of 199819 unique id entries to store this in a map that requires less than 199819 x (4 + 4) bytes = 1,598,552 bytes without compromising the seconds resolution. The "3-level trie" manages to store this data with only 864 kb of total allocations, i.e. a bit more than half of that size. It does so while being much faster than either a dictionary or flat 390 MB array indexed by id due to favorable memory cache effects.

@Bruno, theoretically yes. Note however that the reference .NET DateTime class also does not have any provisions for dealing with leap seconds, refer e.g. to this stackoverflow post.

I have coded my own solution, based upon yours, but replacing the dictionary with a home-grown object which bases on a long[][]. My solution takes only about 50 % of the time of yours, and only uses about 25% of the memory.

see here: https://github.com/ursmeili/ConsoleApplication2/blob/master/ConsoleApplication2/Program.cs

The class in question is called "FastRecordCollection"

sorry there are no comments, but I had to finish it quickly before my wife came back from choir rehearsal :-)

do I pass the job interview?

there's much to absorb here. I'd like to apply similar stuff to Powershell, too, but hopefully w/o having to write any C# stuff , which sort of defeats the point. Why? Well, like in Unixland, using things like Awk, Perl, etc. to do this kind of stuff is usually Just Good Enough, some of us want to use Powershell for the same purpose...

Thanks for the good reading, Ayende!

Can't resist trying again. Checked it against the ten-line test this time round and made sure to eliminate the unsafe array access.

Code: https://gist.github.com/anonymous/d959662b4df4efd7ffea801fbec31879

@Urs: Nice one. Initialliy I thought you would produce hash collisions with var i = (id & (SLOTSIZE - 1)); but your slot design via var slot = id >> RAISE_BY; nicely circumvents this. Is this trick somewhere documented?

@Alois, no it is nowhere documented as far as I know, but I use this design in my on programs now and then, because it allows for lightning fast collision detections when you have a vast array of datetime intervals to process.

Hi Ayende,

have you tried to create Int64 Equity Comparer because gethashcode doesn't support longs? whats the motivation? maybe two nested dictionary's can help

just for comparison, I'm curious how long it takes on your machine @Arseny Kapoulkine c++ solution? https://gist.github.com/zeux/90a49b85c8cfdf04ffa5489ec8916271

@Uri The c++ solution by @Arseny Kapoulkine (with some corrections to get a more accurate estimate of actual memory allocations) gives the following numbers on my system, vs. the fastest option of my c# based solution. Notes: "Parallel" on my system means 8 threads, "Validation" means whether or not input validity checking is performed, "Reference" is the initial "linqy" blog post solution provided in the zip archive together with the input data file.

thank you @alex, this is quite impressive, C# fastest option is only 20% less than the most optimized c++. in single thread the difference is even lower. there is always the option to include external c dll to do the job, but generally, c# has good performance.

Writing to disk is now my second most expensive operation (costs me about 100ms). I can't figure out how to make that any quicker. But I did manage to shave off another 100ms in other places:-

Code: https://gist.github.com/anonymous/7d19e14c8f223c08142f4bab808deda7

JustPassingBy, Try writing a dedicated function to it, you are wasting a lot of time there doing allocations, string processing, etc.

"[...] unless someone suggests something, we are done"

Why not use what we know about the file? Entry- and exitlog from a parkinglot should mean the datepart often are equal. And since we only care about the duration, in those majority of cases we could get away by just parsing the timeparts.

With a simple sequence equality check and a simple timeparser the execution time could be cut in half.

Joakin, Yes, that is what we ended up doing. Wait for the next few posts

Comment preview