Timing the time it takes to parse timePart II

There are times when you write clean, easily to understand code, and there are times when you see 50% of your performance goes into DateTime parsing, at which point you’ll need to throw nice code out the window, put on some protective gear and seek out that performance hit that you need so much.

Note to the readers: This isn’t something that I recommend you’ll do unless you have considered it carefully, you have gathered evidence in the form of actual profiler results that show that this is justified, and you covered it with good enough tests. The only reason that I was actually able to do anything is that I know so much about the situation. The dates are strictly formatted, the values are stored as UTF8 and there are no cultures to consider.

With that said, it means that we are back to C’s style number parsing and processing:

Note that the code is pretty strange, we do upfront validation into the string, then parse all those numbers, then plug this in together.

The tests we run are:

Note that I’ve actually realized that I’ve been forcing the standard CLR parsing to go through conversion from byte array to string on each call. This is actually what we need to do in RavenDB to support this scenario, but I decided to test it out without the allocations as well.

All the timings here are in nanoseconds.

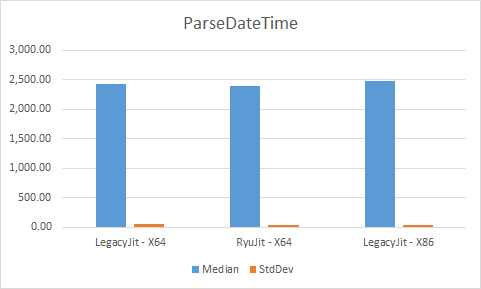

Note that the StdDev for those test is around 70 ns. And this usually takes about 2,400 ns to run.

Without allocations, things are better, but not by much. StdDev goes does to 50 ns, and the performance is around 2,340 ns, so there is a small gain from not doing allocations.

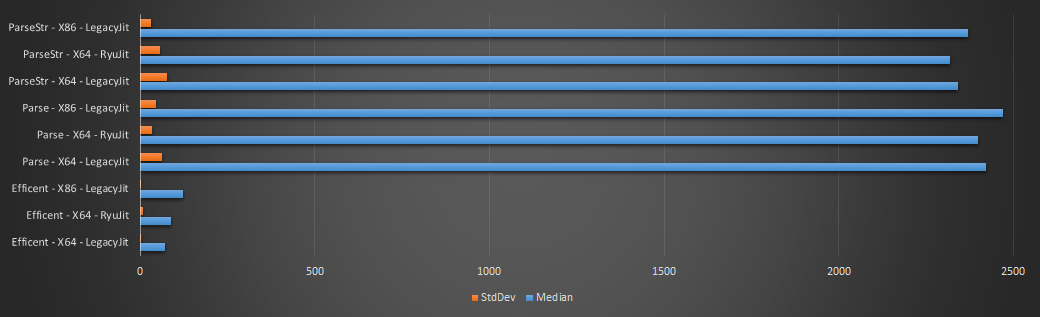

Here are the final results of the three methods:

Note that my method is about as fast as the StdDev on the alternative. With an average of 90 ns or so, and StdDev of 4 ns. Surprisingly, LegacyJit on X64 was the faster of them all, coming in at almost 60% of the LegacyJit on X86, and 20% faster than RyuJit on X64. Not sure why, and dumping the assembly at this point is quibbling, honestly. Our perf cost just went down from 2400 ns to 90 ns. In other words, we are now going to be able to do the same work at 3.66% of the cost. Figuring out how to push it further down to 2.95% seems to insult the 96% perf that we gained.

And anyway, that does leave us with some spare performance on the table if this ever become a hotspot again*.

* Actually, the guys on the performance teams are going to read this post, and I’m sure they wouldn’t be able to resist improving it further ![]() .

.

Comments

@Oren, I assume the big perf hit in the framework implementation is that first it parse the format string, then it create a parser and only then it parse the actual input string. And it will do this for each invocation of DateTime.ParseXYZ. A better approach would be to have a DateTimeParser object or something like that, that should be possible to create only once upfront and then reuse it all the time. In fact the same approach has been taken in Objective-C and their Foundation framework, specifically the NSDateFormatter class (see https://developer.apple.com/reference/foundation/nsdateformatter) which is used to to string->date and date->string conversions.

The typical old-style approach of the BCL of having very quick to use methods is nice to use, but for perf intensive scenarios they should consider this othe approach: they should take into account this parser/formatter based design, and than re-implement the standard methods using this underneath.

I suspect that by just using this approach and instancing only one parser/formatter would speed things up a lot.

What do you think?

njy, That is my thought as well. Actually, the code for this is here: https://github.com/dotnet/coreclr/blob/master/src/mscorlib/src/System/Globalization/DateTimeParse.cs#L3488

And it isn't actually generating a parser, they just have a big switch statement to handle all the possibilities. A dedicated formatter / parser would be much faster, yes. This is the second time that we had to write our own code to get something much faster with dates.

Then again, we are talking about something that can process about 400 K per second. We just need it to be much faster (our impl get about 12 million / sec)

That first paragraph is so nice :) I love it when we need to get dirty like that.

For RyuJIT code quality increase was a non-goal (puzzlingly) so it's not surprising to see it not faster. It's faster in certain special cases, and legacy JIT is faster in other special cases. The .NET JITs are garbage basically. I do not understand the severe lack of investment in them.

Tobi, I would strongly disagree with you here.

We opened several performance issues with the JIT, and we had always gotten enthusiastic support behind "let get this faster". This particular issue is logged here: https://github.com/dotnet/coreclr/issues/7564

And I expect to have a reason why this is happening and a fix at some point.

I think that a large part of RyuJIT is that it needed to allow more platforms and likely better architecture.

I say split DateTime and DateTimeOffset into two separate methods.

Oleg, I would love to see benchmark results that shows a difference here.

My understand of the thought behind RyuJIT was that it wanted to focus more on upfront JITing time rather than code execution time.

Or, more accurately, the legacy 64bit JITer was meant for use on servers not desktops, and so time was spent making sure that the code it generated ran the fastest possible but not much time was spent making sure the JITer ran fast. RyuJIT was created, in part, to deal with that problem.

They do seem to be spending more time, now, on improving the generated code, which is great.

Minor code review point - on line 22 of your first code sample, you are checking buffer[16] twice.

Is

TryParseNumbergetting inlined into the caller? If not, the optimizer cannot really generate good code for this function.The optimizer must consider the possibility that

ptrpoints to the same memory as thevalreference. Thus every change ofvalmust be written to memory, the compiler cannot use a CPU register. You might get slightly better performance by computing the value in a local variable and storing the result in the out-parameter only once at the end of the function.Also, there's a lot of callers that pass

size==2-- you might want to create a separate function that parses these short integers without using a loop.Of course if

TryParseNumberis getting inlined, the compiler is probably already doing these optimizations. But I'm not sure how likely the JIT is to inline this function. I think the classic JIT never inlines functions containing loops.@Daniel, probably we could do far better at the micro-instruction level, however, dont forget we are talking about a more than 1 order of magnitude improvement. Right now TryParseDate doesnt even show-up in the top 10 functions to optimize. Usually optimizing at that level involve writting pretty disgusting code, at this point this ugliness is far more than enough for any practical purpose.

RyuJIT does not have good internal architecture. Andy Ayers said in a public talk that the codebase is not fresh, the architecture is baroque and nobody feels quite comfortable with it. Whenever I read the code or read the commit logs it felt like it. It does not even use SSA which is known for a long time to be the correct internal compiler data structure. That's further evidence the code is not fresh.

RyuJIT not being about perf is explicitly stated on the teams blog.

I don't understand why this JIT was created. They should have created an interpreter plus a strong 2nd tier JIT that works unconstrainedly. RyuJIT feels like the result of a project or management failure. If you write a new JIT and all you achieve is 50% faster JIT times plus open source, is that really the point of the project?!

Line 22 looks mildly suspicious:

Just to underline the point I'll quote Mikedn from the linked issue:

RyuJIT does not (here and in general) extract multiple loads from the same location into a register. s.x + s.x loads x twice. RyuJIT does not perform the most basics optimizations. I do not know how it's possible to argue that it is a capable JIT. The JVM guys are laughing at this.

@tobi are you sure you are not talking about LegacyJIT? Because as far as I know RyuJIT uses (at least in some parts of its data flow) a SSA form. Among the optimization are dead code optimization via liveness analysis, loop hoisting, copy propagation and a few others.

Source: https://github.com/dotnet/coreclr/blob/master/Documentation/botr/ryujit-overview.md#ssa-vn

@tobi it does extract multiple loads into a register.

@Daniel, Tryparse does not get inlined and adding a local variable does indeed improve performance by about 15% on my machine

25 M dates parses: Original: 00:00:03.0097201 Local Var: 00:00:02.5762892

@PopCatlin, this is hardcoded to work in loops. Outside of loops it does not occur. Seems to be hard to make it work in their architecture. For that reason they only addressed the most impactful case which is loops.

@Frederico I did not say the JIT does not perform any optimizations. Clearly it does. It's just quite poor in the level of optimizations that are supported.

@tobi, I'm afraid that's not true, loads are grouped even outside of loops. This basic optimization is done everywhere.

The following code has load optimization without any loops (this is done strictly by the JIT, it is not present in IL):

David, Correct, that was a copy/paste issue

Daniel. Very good suggestions. We started out at 97.4 ns, with local variable that cost goes to 86.9, and with dedicated methods for each size, it is down to 79.5

Interested article. I love exploring aspects of things within themselves. I recently wrote an article about efforts making it faster to tell time. http://www.circonus.com/time-but-faster/

I found the bar charts of time and stddev a bit awkward. Had you considered using a candlestick graph to visualize the trial runs? https://en.wikipedia.org/wiki/Candlestick_chart

That optimization seems to have been added since I last looked. Testing this on Desktop 4.6.2 x64 Release:

This was simplified to cw(2) which is good news! I still have the test cases I sent to Microsoft, so I know for sure this was not optimized. But this does not appear to really fold the loads... Rather, it blasts the struct to its components.

And now it's a desaster:

000007FE95504200 push rdi

000007FE95504201 sub rsp,40h

000007FE95504205 lea rdi,[rsp+20h]

000007FE9550420A mov ecx,8

000007FE9550420F xor eax,eax

000007FE95504211 rep stos dword ptr [rdi]

000007FE95504213 xor ecx,ecx

000007FE95504215 lea rax,[rsp+20h]

000007FE9550421A xorpd xmm0,xmm0

000007FE9550421E movdqu xmmword ptr [rax],xmm0

000007FE95504222 mov qword ptr [rax+10h],rcx

000007FE95504226 mov dword ptr [rax+18h],ecx

s.X = 1; 000007FE95504229 mov dword ptr [rsp+20h],1

Console.WriteLine(s.X + s.X); 000007FE95504231 mov ecx,dword ptr [rsp+20h]

000007FE95504235 add ecx,dword ptr [rsp+20h]

000007FE95504239 call 000007FEF423F6B0

Struct on the stack, it's a constant, most of it is dead. It moves 1 into X, then loads X twice and adds it. Can it possibly be worse and less optimized? This is what I mean.

Just wondering: What's driving the need to parse? Would it be possible for whatever your source is to store in a binary format that can be loaded directly?

TryParseNumber seems broken. It is not multiplying the positional numbers by its correct powers of 10.

Wanderlei, Look at line 92

Rik, We could do that, yes, but it make it easier to interchange. The problem with binary format is that then you need to start supporting all sort of various formats (DateTime, DateTimeOffset, TimeSpan), to name just a few. It is better if we have this as a well defined string, because that also helps us to avoid type detection on the fly, which has its own costs.

I know I'm late to the party (just back from a week of downtime), but does your code need to only work in half of the world? Or am I missing something else, where you only need to care about positive offset values?

I.e. I believe that 2016-10-05T21:07:32.2082285-03:00 would fail since there's only an explicit check for

+.Damien, That is a bug that was corrected, yes.

Comment preview