RavenDB Retrospective: BASE Indexes

RavenDB was designed from the get-go with an ACID document store and BASE indexes. ACID stands for Atomic, Consistent, Isolated, Durable, and BASE stands for Basically Available, Soft state, Eventually consistent.

That design was driven by two competing needs. First, and most obvious, a database should never lose data. Second, we want to ensure that the system remains responsive even under load. It is quite common to have spikes in production traffic, and we wanted to be able to handle them with aplomb.

In particular, the kind of promises that RavenDB queries make allow us to perform quite a few performance optimizations. In databases that require all indexes to be up to date on transaction commit, you’ll find that there is a very high cost to adding indexes to the system, because each additional index means additional work is needed at write time. It also makes things such as aggregating indexes (map/reduce, in RavenDB terms) a lot harder to build.

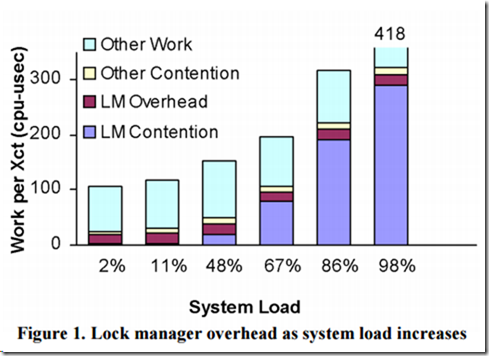

By having BASE indexes, we gain the ability to batch multiple writes into a single index update operation. It also allows us to defer writing the indexes to disk, avoiding costly I/O operations. But most importantly, by changing the kind of promise that we give to users, we are able to avoid a lot of locks, complexity and hardship inside RavenDB. This may seem like a small thing, but it is actually quite important. Take a look at this study:
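To make the batching idea concrete, here is a minimal sketch in Python. It is a toy in-memory model, not how RavenDB is implemented: `ToyStore`, `put`, and `run_indexing_batch` are hypothetical names invented for this illustration. Writes only record the document and queue its id, and a later indexing pass folds every queued write into one index update.

```python
from collections import defaultdict


class ToyStore:
    """Hypothetical in-memory model of a store with a deferred index."""

    def __init__(self):
        self.documents = {}            # the "ACID" side: updated on every write
        self.pending = []              # ids written but not yet indexed
        self.index = defaultdict(set)  # customer id -> set of order ids
        self.index_updates = 0         # how many index update operations ran

    def put(self, doc_id, doc):
        # The write path does no indexing work, it only queues the id.
        self.documents[doc_id] = doc
        self.pending.append(doc_id)

    def run_indexing_batch(self):
        # One index update covers every write queued since the last run.
        # (This toy ignores updates that move an order to another customer.)
        if not self.pending:
            return 0
        batch, self.pending = self.pending, []
        for doc_id in batch:
            self.index[self.documents[doc_id]["customer"]].add(doc_id)
        self.index_updates += 1
        return len(batch)


store = ToyStore()
for i in range(1000):
    store.put(f"orders/{i}", {"customer": f"customers/{i % 10}"})

indexed = store.run_indexing_batch()
print(indexed, store.index_updates)
```

In this model a thousand writes cost a single index update. The larger the backlog, the more work each update amortizes.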

In fact, there are a lot of studies on the overhead of locking in database systems, and that has been a hot research topic for many years. By choosing a different architecture, we can avoid a lot of those costs and complexities.

So far, that was the explanation from the point of view of the database creator. What about the users?

Here the tradeoff is more nuanced. On the one hand, people have to deal with a certain level of complexity: a query issued right after an insert might not include the new data (a stale query). On the other hand, queries are consistently faster, and we can handle spikes in traffic and load much more easily and consistently.

But it is a mental model that can be hard to follow, even when you are familiar with it. Probably the most common issue with RavenDB’s BASE indexes is the case of Post / Redirect / Get. Let us look at how this may play out:

Here we actually have two requests, one that adds a new order to the system, and another that fetches the details. If you redirect to the new order page, everything is going to work as expected, and you won’t notice anything even if the indexes are stale at the time of the request. But a pretty common scenario is to add the new order and then go and look at the list of orders for this customer. If the index didn’t have the chance to update between those two requests (which typically happen in very quick succession), the customer will not see the new order.
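The scenario can be sketched with a toy model in Python. Everything here is hypothetical and invented for illustration (`ToyStore`, `load`, `query_orders`, `run_indexing`); it is not the RavenDB client API. Loading a document by id reads the document store directly, while the list of orders for a customer goes through an index that may lag behind.

```python
class ToyStore:
    """Hypothetical model: documents are immediate, the index catches up later."""

    def __init__(self):
        self.documents = {}
        self.pending = []             # ids written but not yet indexed
        self.orders_by_customer = {}  # customer id -> set of order ids

    def put(self, doc_id, doc):
        self.documents[doc_id] = doc
        self.pending.append(doc_id)

    def load(self, doc_id):
        # Load by id never touches the index, so it is never stale.
        return self.documents.get(doc_id)

    def query_orders(self, customer):
        # Returns (results, is_stale); stale means writes are still unindexed.
        ids = sorted(self.orders_by_customer.get(customer, set()))
        return ids, bool(self.pending)

    def run_indexing(self):
        for doc_id in self.pending:
            customer = self.documents[doc_id]["customer"]
            self.orders_by_customer.setdefault(customer, set()).add(doc_id)
        self.pending = []


store = ToyStore()
store.put("orders/1", {"customer": "customers/1"})
store.run_indexing()

# POST: add a new order.
store.put("orders/2", {"customer": "customers/1"})

# Redirect to the order page: works, because it loads by id.
order_page = store.load("orders/2")

# Redirect to the order list instead: the index has not caught up yet.
listing, stale = store.query_orders("customers/1")

# Once the background indexing has run, the list is complete.
store.run_indexing()
listing_after, stale_after = store.query_orders("customers/1")
```

The first listing is missing `orders/2` and is flagged as stale; the second one contains both orders.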

That particular scenario is responsible for the vast majority of the pain we have seen from our users around BASE indexes.

Now, one of the great things about BASE indexes is that users get to choose whether they want to wait for up-to-date results or whether they want whatever is there right now. And we have had mechanisms to control this at a very granular level (including options for personal consistency control, so different customers will have different waits depending on their own previous behavior). But we have found that this puts a lot of responsibility on developers to control the flow of users through their applications.

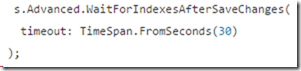

So in RavenDB 3.5 we have changed things a bit. Now, instead of completing the write request as soon as possible, you can ask the server to wait until the relevant indexes have processed it:

In other words, when you call SaveChanges, it will wait until the indexes have been updated, so when you return from the call, you can be certain that the results of any future queries will include all the changes in that transaction. This moves the responsibility to the write side and makes such scenarios much easier to handle.
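A rough sketch of the "wait on save" idea, again as a toy Python model rather than RavenDB's actual implementation or client API. The names (`ToyStore`, `save`, `wait_for_indexes`, `query_orders`) and the etag counters are assumptions made for this example. A background thread does the indexing, and a writer that asks to wait blocks until the index has caught up to its own write.

```python
import threading


class ToyStore:
    """Hypothetical store with a background indexer and an optional wait on save."""

    def __init__(self):
        self._cond = threading.Condition()
        self._documents = {}
        self._orders_by_customer = {}
        self._pending = []       # (etag, doc_id) pairs waiting to be indexed
        self._last_written = 0   # etag of the newest write
        self._last_indexed = 0   # newest etag the index has processed
        self._stopping = False
        self._worker = threading.Thread(target=self._index_loop, daemon=True)
        self._worker.start()

    def save(self, doc_id, doc, wait_for_indexes=False, timeout=5.0):
        with self._cond:
            self._documents[doc_id] = doc
            self._last_written += 1
            etag = self._last_written
            self._pending.append((etag, doc_id))
            self._cond.notify_all()
            if wait_for_indexes:
                # Block until the indexer has processed this write (or time out).
                caught_up = self._cond.wait_for(
                    lambda: self._last_indexed >= etag, timeout
                )
                if not caught_up:
                    raise TimeoutError("index did not catch up in time")
        return etag

    def query_orders(self, customer):
        with self._cond:
            return sorted(self._orders_by_customer.get(customer, ()))

    def _index_loop(self):
        while True:
            with self._cond:
                self._cond.wait_for(lambda: self._pending or self._stopping)
                if self._stopping and not self._pending:
                    return
                # Indexing under the lock keeps the toy simple; a real system
                # would not block writers like this.
                batch, self._pending = self._pending, []
                for etag, doc_id in batch:
                    customer = self._documents[doc_id]["customer"]
                    self._orders_by_customer.setdefault(customer, set()).add(doc_id)
                    self._last_indexed = etag
                self._cond.notify_all()

    def close(self):
        with self._cond:
            self._stopping = True
            self._cond.notify_all()
        self._worker.join()


store = ToyStore()
store.save("orders/1", {"customer": "customers/1"}, wait_for_indexes=True)
listing = store.query_orders("customers/1")  # includes the order just saved
store.close()
```

The writer that cares about read-your-writes pays the wait, while every other writer and every query keeps the usual BASE behavior.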

Given all of that, and our experience with RavenDB for the past 8 years or so, we spiked what it would look like with ACID indexes, at least for certain things. The problem is that this pretty much takes out of the equation a lot of the power and flexibility that we get from Lucene (more on why you can’t do that in Lucene in a bit) and forces us to offer what are essentially B+Tree indexes. Those are so limited that we would have to offer:

- B+Tree indexes – ACID (simple property / range queries). With different indexes needed for different queries and ordering options.

- Lucene indexes – BASE, full text, spatial, facets, etc queries. Much more flexible and easy to use.

- Map/reduce indexes – BASE (because you aren’t going to run the full map/reduce during the original transaction).

The problem is that we would then have the continuous burden of explaining when to use which index type, and how to deal with the different limitations. It would also make things much more complex if you have a query that can use multiple indexes, and there are problems associated with creating new ACID indexes on live systems. So it would generate a lot of confusion and complexity for users, for a fairly small benefit that we can already address with the “wait on save” option.

As for why we can’t do it all via Lucene anyway, the problem is that this wouldn’t be sustainable. Lucene isn’t really meant for individual operations; it shines when you push large amounts of data through it. It also doesn’t really have the facilities to be transactional. We have actually solved that particular problem in RavenDB 4.0, but it was neither pretty nor easy, and it doesn’t alleviate the issue of “we do best in large batches”. RavenDB’s BASE indexes are actually designed to take advantage of that particular aspect, because under load, we’ll process bigger batches and reap the performance benefits that they bring.

BASE indexes also make for much simpler operations. You can define a new index without fearing locking the database, and it enables scenarios such as side by side indexing to update index definitions without impacting the running system.

Finally, a truly massive benefit of BASE indexes is that they allow us to change the following statement: more indexes means faster reads, slower writes. Fewer indexes means slower reads, faster writes. By moving the actual indexing work to a background task, we let the writes go through as fast as they possibly can.

Indexes still have a cost, and the more indexes you have, the higher the cost (we still have to do some work here). But in the vast majority of cases, we can squeeze this kind of work in between writes, at times when the database would otherwise be idle.

What that means is that you can have more indexes at the same cost, and that your queries are going to be using those indexes and are going to be fast.

More posts in "RavenDB Retrospective" series:

- (17 Oct 2016) The governors

- (14 Oct 2016) Explicit indexes & auto indexes

- (12 Oct 2016) BASE Indexes

- (28 Sep 2016) Unbounded result sets

Comments

Yes BASE is a PITA for business software.

I don't mind when searching using keywords from the description of a product returns results that are a few minutes stale, however adding a new order to a customer and not seeing it right away in its order history is really bad. That's why we usually try to cover for it by doing lots of manual workarounds like adding an order and updating the customer order history document, then when you load the document if the last order is not returned by the order query you add a nice "Processing ..." artificial state to it .... basically you are forced to emulate ACID indexes in a way ....

Pop Catalin, And the "let us wait on save" option?

@Ayende, it's very spiky as perf .... and I don't want to call names here , but there are some DocumentDB databases out there which are notoriously slow at updating indexes for large collections ...
