Northwind Starter Kit ReviewIt is all about the services

This is a review of the Northwind Starter Kit project, this review revision 94815 from Dec 18 2011.

Okay, enough about the data access parts. Let us see take a look at a few of the other things that are going on in the application. In particular, this is supposed to be an application with…

Domain logic is implemented by means of a Domain Model, onto a layer of services adds application logic. The model is persisted by a DAL designed around the principles of the "Repository" patterns, which has been implemented in a LINQ-friendly way.

Let us try to figure this out one at a time, okay?

The only method in the domain model that have even a hint of domain logic is the CalculateTotalIncome method. Yes, you got it right, that is a method, as in singular. And that method should be replaced with a query, it has no business being on the domain model.

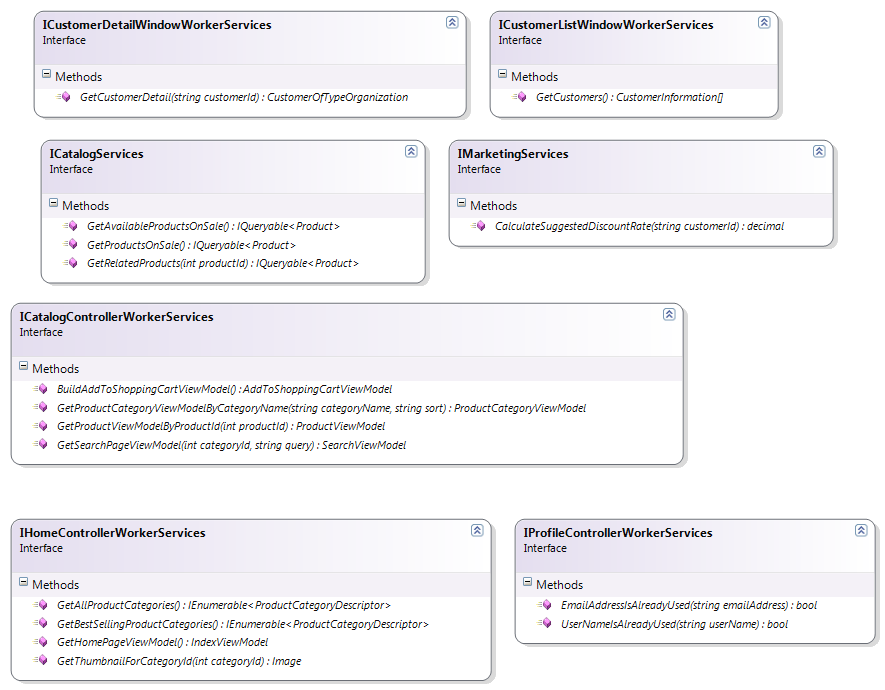

So let us move to the services, okay? Here are the service definitions in the entire project:

Look at the methods carefully. Do you see any action at all? You don’t, the entire thing is just about queries.

And queries should be simple, not abstracted away and made very hard to figure out.

The rule of the thumb is that you try hard to not abstract queries, it is operations that you try to abstract. Operations is where you usually actually find the business logic.

More posts in "Northwind Starter Kit Review" series:

- (26 Jan 2012) Conclusion

- (24 Jan 2012) That CQRS thing

- (23 Jan 2012) It is all about the services

- (20 Jan 2012) From start to finishing–tracing a request

- (18 Jan 2012) If you won’t respect the database, there will be pain

- (16 Jan 2012) Refactoring to an actual read model

- (13 Jan 2012) Data Access review thoughts

- (12 Jan 2012) The parents have eaten sour grapes, and the children’s teeth are set on edge

- (11 Jan 2012) Data Access and the essence of needless work, Part II

- (10 Jan 2012) Data Access and the essence of needless work, Part I

Comments

I suppose this means that there are no operations in this example?

The only method I can see that sounds operational is the "CalculateSuggestedDiscountRate" on the Marketing service.

Frank, Nope, that is another query

I disagree. GetBestSellingProductCategories is a great example why. The logic for this query could be relatively complex. By abstracting that logic into its own method, you get the ability for others who depend on it to use it without worrying about those details.

For example, here's a unit test using what they have up there:

public void Index_should_return_the_best_selling_products(){ var products = new [new Product()]; var fakeService = new Mock<IHomeControllerWorkerServices>() .Setup(x=>x.GetBestSellingProductCategories()) .Returns(products); // regular work... results.ShouldBeSameAs(products); }

instead of:

public void Index_should_return_the_best_selling_products(){ var products = // A BUNCH OF DATABASE SETUP TO REPLICATE THE SITUATION var fakeService = new Mock<IHomeControllerWorkerServices>() .Setup(x=>x.GetBestSellingProductCategories()) .Returns(products); // regular work... results.ShouldContain(expectedProductA????); results.ShouldContain(expectedProductB????); // have fun writing this assert... }

Plus, obviously, what happens when two actions need the results from the same method? You get more test setup, more complex tests, and the type of testing environment where everything is touching and you're afraid to change anything. That's a really bad place to be when the changes come.

Plus, the when the programmer is writing the code that uses this method, maybe the logic for determing the "best selling product" hasn't been fleshed out. Maybe the product object does not contain all of the properties that it's going to need? By abstracting the query, you leave other programmers free to focus on their details (like just getting the best-selling products passed to the view) rather than have to do a ton of development and planning in one big shot.

I've said this before to you and I'll say it again: I think your stances on these queries is due to your development approach, which is not compatible with the outside-in, BDD/TDD approach. If the tests are driving the code, you'll get the abstractions and controllers that obey SRP. If the programmer drives, you'll get queries in the controllers and code soooooo "simple" that SRP doesn't really matter.

Sorry, the unit tests didn't retain their formatting. Here's a gist: https://gist.github.com/1662749

Operation would probably mean some writes and applied logic to model (so, I would expect some 'If/else' statements). All those queries no mater how complex are, even with data aggregation and simple calculations (without Ifs!) are better suited for extension method or raw SQL. That's how I understood Ayendes point on all this.

While I do agree that there are major drawbacks to abstracting queries that being said i still prefer working with some abstractions as its quite typical for me to share queries with other system functions like backend intergrations, ect.

using the following, sharing the query in EF is not so painful..

public interface ISpecification<in T> where T : class, new() { IEnumerable<string> GetIncludes(); Func<T, bool> GetPredicate(); }

public static IEnumerable<T> GetBySpecification<T> (this ObjectContext context, ISpecification<T> specification, params string[] adHocIncludes) where T : class, new() {

Pretty good rule of thumb: If it returns data, it is a query. If it returns void or throws an exception it is a command (or a "domain operation"). One reads without affecting state, the other only affects state.

I've not come across an example which falls in the middle.

I think the big problem I have with this is that by querying in the application layer, it gets direct access to our domain model. This means the domain model is visible to the application layer.

I'm just wondering how much this prevents refactoring of the domain model - knowing each delivery mechanism is coupled to the domain implementations.

As Darren points out - it makes testing harder as well.

But thanks for the post, I'm definitely going to think about this a lot.

Rule: "to not abstract queries..." works for very simple queries. But there is a lot of large and complex quries in real systems. "Top 5 selling products last month", "Recommended books" and other lightveight analytic are quries and may be implemented as single select query. But implement thems with one large linq expression is very hard.

Main advantage of IQueryable<T> interface is capability to compose-decompose queries.

Main component of query decomposition shoud be "combinator". "Combinator" is Func<IQueryable<T>,...,IQueryable<V>>

You can decompose any complex queries to "combinators" and "aspects". "Aspect" is Interface with properties, wich implemented by data model classes.

Like this: IHideable { bool IsHidden { get; set; } }

public static IQueryable<T> Visible(IQueryable<T> query) where T:class, IHideable { return query.Where(e => !e.IsHidden); }

Using combinators and aspect you can compose wery complex quries and hide implementation details in combinator.

To loose couplinng you can create classes and use decorator pattern with IoC (late binding) instead of early-bound function composition. Function composition if more readable, but it has very limited capability to inject dependencies.

Interesting. I think the issue is that the way this code is written is also the way that quite a few resources espouse as the way to do Repositories. I know that until very recently I would write code like this because I saw how it was done in Rob Conery's Storefront video series and this was approximately how that was done - "Repository" classes implementing an interface of the same name, wrapping LINQ queries, with a Service layer that's exposed to the controller and simply provides a wrapper around the Repository.

That's how I was exposed to services/repositories so I could see how this is considered a good practice. Of course reading Ayende's recent articles has shown me otherwise now, but until this series started I pretty much would have said this sample code showcases best practices for repositories and services!

@Wayne M - this is mostly a matter of opinion! You need to work out what works well for you and your team rather than just careering off towards any given person's idea of "best practice" (a term I strongly suspect Ayende is allergic to anyway).

This is the only article in this series that I disagree with, but that's because of a personal taste. I like having my queries abstracted into a business layer query object or method, whose sole function is to execute that one query. It helps keep my MVC controllers smaller and easier to read, and the method name helps convey the purpose of the query, without having to look at the Linq itself to try and figure out how the query is meant to function.

That being said, I still directly access my ORM right in my business layer, as I agree about the data access abstractions and the issues with the repository layer.

@gandjustas If you are practicing DDD then one of the common recommendations is NOT to use your domain model as a query model. When you apply a command to your domain, you can denormalize the results into view models and store those in the DB too. Thus, if you commonly need the top 5 products sold in a month, you could have a denormalization of total sales of products per month. Then your query would be something like: ProductSales.Where(SalesForLast30Days).OrderBy(x => x.TotalSales).Take(5);

This could even be generated as a nightly process. You don't need real time sales recommendations do you? In a nightly process you don't need to worry about performance and working around odd abstractions.

Darren: those tests seem a poor comparison to make. The first one doesn't actually test what its name suggests, it just establishes that Index returns whatever it gets from the service irrespective of whether those results are actually the best selling product categories. It's not really testing anything particularly useful.

The second one, I assume, is going to actually retrieve the best selling product categories from a database populated with test data and then establish if they're the results we would expect, namely that they are the best selling product categories. Yes, it's more set up given that it's more of an integration test but at least it's testing something useful: that the Index method actually returns the best selling categories.

As for the re-use argument, I think that Ayende has addressed that elsewhere by suggesting helpers or extension methods. Having these interstitial service classes isn't the only alternative to achieve re-use.

Darren: In addition to SteveR's comment, you're testing your LINQ (i assume) query against an in memory array. You'll run into all kinds of issues if you have string comparisons depending on your underlying ORM/DB. Some LINQ implementations are not case sensitive, but if you do in memory tests, they are. Plus some only implement a subset of operators. You'd really be better off running this as an integration test.

Sigh.

SteveR, the unit test does test what it says, because that's what the controller is doing. The controller is going to the place for determining what the best selling products are: The service that provides it.

It's no different than going to the product table directly, except that the calculations for determining the best products are stored away in a service responsible for handling it.

Does this mean that it's possible that the controller returns something other than the best selling products? Of course. But that can happen if you use an extension method or helpers or anything. So to solve that problem, you write integration tests (with SpecFlow, Cucumber, etc) that handle it, with the unit tests driving the classes that eventually meet the specs. It's BDD.

Plus, there's still the issue of the Single Responsibility Principle, which I haven't seen addressed yet. Plus, I haven't seen one unit test, as it seems some think it's ok to stick with integration-level tests on controllers.

Ryan,

That was a copy/paste typo in the code, which I just took out. The database setup is going to be all of what is done with the products. Which, now that I think about it, means that I can't test anything from the scope of the unit test because new objects will be instantiated by the ORM. -- Sorry, I don't do this type of testing :)

So I guess that makes my case even stronger: How are you going to test that the right objects came out, assert against the unique identifier on each object? And how do I assert that the objects come out of the ORM in the way that will work (including the right child objects, etc), since my controller is now doing the data access? I'd probably just do what many devs do in these types of situations: Ignore the detail, assert some trivial issue like the number of records returned, and move on.

I understand the problems of testing an in-memory set versus the live database, which is why I advocate for writing integration tests against the actual database in addition to the unit tests.

Darren, My opinion on testing queries is that your read model should me so simple that writing unit tests around it would have marginal value compared to the unit tests around your business logic. Thus I'd spend very little time testing the read side. Throw in a few integration tests to verify that no obvious exceptions are being thrown by the data access code, then use time you save by providing extra value and addressing issues as they arise.

I'd like to see DDD app written by you Oren. Not some one page blog engine.

@ Ryan: I'm not practicing DDD, I'm practicing non-ddd ;) I'm generating "top sales last moth" in realtime until it's fast enough (1st phase). When this process became not fast enough I will enable long-period caching (2nd phase). When cache became not enough I will use OLAP (3rd phase).

I see no point of using denormalization unil project reach 3rd phase. I will prefer to have as simple and clear code as possible, even if it not conforms DDD\CQRS\SOLID\IOC\ORM\ABCD\XYZ or any other acronym.

BTW. Only small number of projects reaches 3rd phase.

Darren: thanks for the condescending sigh. The first "test" is not a test of anything meaningful. It's busywork. Your test relies on a stub of the service to return canned results to the controller which it then returns, which proves what exactly? Whether you accept it or not, it simply does not test what the name of the test suggests that it does. This should be a clue that perhaps tests like that don't provide any value. Rename it "IndexShouldReturnWhateverItGotFromTheService" and that becomes clear. So if your argument is that having the service class allows you to write tests like that then my answer is, "So what?".

There's no "calculation" taking place, either. It's just a query, which is Ayende's point and why the service classes are redundant.

Just thinking how it would look if I applied this architecture to some larger application (let say it has 100 tables, workflows, complex domain logic). It would be nightmare to build and maintain. Been there, than that, and still learning from mistakes. Great app designs practices lies in simplicity of resolving complex problems.

Let me try to come with my humble view.... :)

All too often we see the traditional presentation -> service layer -> repository/ dal design, exactly as the provided example. We see people struggle with redundant code and strange "ohh-so-clever" abstractions over simple queries... Why?

The problem lies exactly in the abstraction as Oren points out, not in the queries. All queries doesn't have to be abstracted and can easily be executed without the service layer. The more complicated ones, that do need some sort of special logic, needs an abstraction and should reside in the business logic.

This sums up to... pass-through queries should just be written without any abstractions and carried out where needed, whereas business logic operations and should encapsulate complicated queuries.

Well, just my 2 cent.

Comment preview