I originally titled this post NHibernate Stupid Perf Tricks, but decided to remove that. The purpose of this post is to show some performance optimizations that you can take advantage of with NHibernate. This is not a benchmark, the results aren’t useful for anything except comparing to one another. I would also like to remind you that NHibernate isn’t intended for ETL scenarios, if you desire that, you probably want to look into ETL tools, rather than an OR/M developed for OLTP scenarios.

There is a wide scope for performance improvements outside what is shown here, for example, the database was not optimized, the machine was used throughout the benchmark, etc.

To start with, here is the context in which we are working. This will be used to execute the different scenarios that we will execute.

The initial system configuration was:

<hibernate-configuration xmlns="urn:nhibernate-configuration-2.2">

<session-factory>

<property name="dialect">NHibernate.Dialect.MsSql2000Dialect</property>

<property name="connection.provider">NHibernate.Connection.DriverConnectionProvider</property>

<property name="connection.connection_string">

Server=(local);initial catalog=shalom_kita_alef;Integrated Security=SSPI

</property>

<property name='proxyfactory.factory_class'>

NHibernate.ByteCode.Castle.ProxyFactoryFactory, NHibernate.ByteCode.Castle

</property>

<mapping assembly="PerfTricksForContrivedScenarios" />

</session-factory>

</hibernate-configuration>

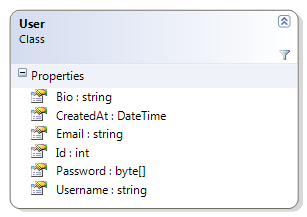

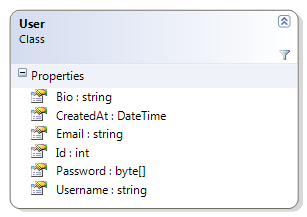

The model used was:

And the mapping for this is:

<class name="User"

table="Users">

<id name="Id">

<generator class="hilo"/>

</id>

<property name="Password"/>

<property name="Username"/>

<property name="Email"/>

<property name="CreatedAt"/>

<property name="Bio"/>

</class>

And each new user is created using:

public static User GenerateUser(int salt)

{

return new User

{

Bio = new string('*', 128),

CreatedAt = DateTime.Now,

Email = salt + "@example.org",

Password = Guid.NewGuid().ToByteArray(),

Username = "User " + salt

};

}

Our first attempt is to simply check serial execution speed, and I wrote the following (very trivial) code to do so.

const int count = 500 * 1000;

var configuration = new Configuration()

.Configure("hibernate.cfg.xml");

new SchemaExport(configuration).Create(false, true);

var sessionFactory = configuration

.BuildSessionFactory();

var stopwatch = Stopwatch.StartNew();

for (int i = 0; i < count; i++)

{

using(var session = sessionFactory.OpenSession())

using(var tx = session.BeginTransaction())

{

session.Save(GenerateUser(i));

tx.Commit();

}

}

Console.WriteLine(stopwatch.ElapsedMilliseconds);

Note that we create a separate session for each element. This is probably the slowest way of doing things, since it means that we significantly increase the number of connections open/close and transactions that we need to handle.

This is here to give us a base line on how slow we can make things, to tell you the truth. Another thing to note that this is simply serial. This is just another example of how this is not a true representation of how things happen in the real world. In real world scenarios, we are usually handling small requests, like the one simulated above, but we do so in parallel. We are also using a local database vs. the far more common remote DB approach which skew results ever furhter.

Anyway, the initial approach took: 21.1 minutes, or roughly a row every two and a half milliseconds, about 400 rows / second.

I am pretty sure most of that time went into connection & transaction management, though.

So the first thing to try was to see what would happen if I would do that using a single session, that would remove the issue of opening and closing the connection and creating lots of new transactions.

The code in question is:

using (var session = sessionFactory.OpenSession())

using (var tx = session.BeginTransaction())

{

for (int i = 0; i < count; i++)

{

session.Save(GenerateUser(i));

}

tx.Commit();

}

I expect that this will be much faster, but I have to explain something. It is usually not recommended to use the session for doing bulk operations, but this is a special case. We are only saving new instances, so the flush does no unnecessary work and we only commit once, so the save to the DB is done in a single continuous stream.

This version run for 4.2 minutes, or roughly 2 rows per millisecond about 2,000 rows / second.

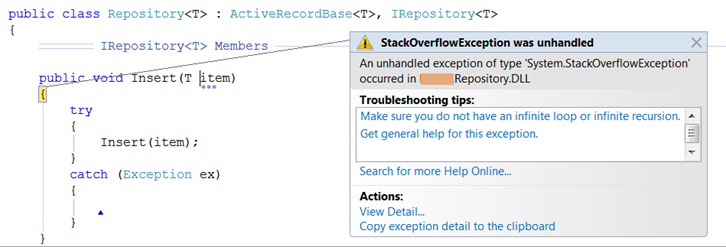

Now, the next obvious step is to move to stateless session, which is intended for bulk scenarios. How much would this take?

using (var session = sessionFactory.OpenStatelessSession())

using (var tx = session.BeginTransaction())

{

for (int i = 0; i < count; i++)

{

session.Insert(GenerateUser(i));

}

tx.Commit();

}

As you can see, the code is virtual identical. And I expect the performance to be slightly improved but on par with the previous version.

This version run at 2.9 minutes, about 3 rows per millisecond and close to 2,800 rows / second.

I am actually surprised, I expected it to be faster, but it was much faster.

There are still performance optimizations that we can make, though. NHibernate has a rich batching system that we can enable in the configuration:

<property name='adonet.batch_size'>100</property>

With this change, the same code (using stateless sessions) runs at: 2.5 minutes and at 3,200 rows / second.

This doesn’t show as much improvement as I hoped it would. This is an example of how a real world optimization is actually failing to show its promise in a contrived example. The purpose of batching is to create as few remote calls as possible, which dramatically improve performance. Since we are running on a local database, it isn’t as noticeable.

Just to give you some idea about the scope of what we did, we wrote 500,000 rows and 160MB of data in a few minutes.

Now, remember, those aren’t numbers you can take to the bank, their only usefulness is to know that by a few very simple acts we improved performance in a really contrived scenario by 90% or so. And yes, there are other tricks that you can utilize (preparing commands, increasing the batch size, parallelism, etc). I am not going to try to outline then, though. For the simple reason that performance should be quite enough for everything who is using an OR/M. That bring me back to me initial point, OR/M are not about bulk data manipulations, if you want to do that, there are better methods.

For the scenario outlined here, you probably want to make use of SqlBulkCopy, or the equivalent for doing this. Just to give you an idea about why, here is the code:

var dt = new DataTable("Users");

dt.Columns.Add(new DataColumn("Id", typeof(int)));

dt.Columns.Add(new DataColumn("Password", typeof(byte[])));

dt.Columns.Add(new DataColumn("Username"));

dt.Columns.Add(new DataColumn("Email"));

dt.Columns.Add(new DataColumn("CreatedAt", typeof(DateTime)));

dt.Columns.Add(new DataColumn("Bio"));

for (int i = 0; i < count; i++)

{

var row = dt.NewRow();

row["Id"] = i;

row["Password"] = Guid.NewGuid().ToByteArray();

row["Username"] ="User " + i;

row["Email"] = i + "@example.org";

row["CreatedAt"] =DateTime.Now;

row["Bio"] = new string('*', 128);

dt.Rows.Add(row);

}

using (var connection = ((ISessionFactoryImplementor)sessionFactory).ConnectionProvider.GetConnection())

{

var s = (SqlConnection)connection;

var copy = new SqlBulkCopy(s);

copy.BulkCopyTimeout = 10000;

copy.DestinationTableName = "Users";

foreach (DataColumn column in dt.Columns)

{

copy.ColumnMappings.Add(column.ColumnName, column.ColumnName);

}

copy.WriteToServer(dt);

}

And this ends up in 49 seconds, or about 10,000 rows / second.

Use the appropriate tool for the task.

But even so, getting to 1/3 of the speed of SqlBulkCopy (the absolute top speed you can get to) is nothing to sneeze at.

Davy Brion, one of NHibernate’s committers, is running a series of posts discussing building your own DAL. The reasons for doing so varies, in Davy’s case, he has clients who are adamantly against using anything but the Naked CLR. I have run into similar situations before, sometimes it is institutional blindness, sometimes it is legal reasons, sometimes there are actually good reasons for it, although it is rare.

Davy Brion, one of NHibernate’s committers, is running a series of posts discussing building your own DAL. The reasons for doing so varies, in Davy’s case, he has clients who are adamantly against using anything but the Naked CLR. I have run into similar situations before, sometimes it is institutional blindness, sometimes it is legal reasons, sometimes there are actually good reasons for it, although it is rare.