Continuing to shadow Davy’s series about building your own DAL, this post is about hydrating entities.

Hydrating entities is the process of taking a row from the database and turning it into an entity, while dehydrating is the reverse process: taking an entity and turning it into a flat set of values to be inserted or updated.
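To make the two terms concrete, here is a minimal sketch of both directions using reflection. It is neither Davy's code nor NHibernate's: the `Customer` class and the `EntityMapper` helper are names made up for illustration, and the sketch assumes that column names match property names exactly.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Reflection;

// Hypothetical entity, used only for illustration.
public class Customer
{
    public int Id { get; set; }
    public string Name { get; set; }
}

// Hypothetical helper; assumes column names equal property names.
public static class EntityMapper
{
    // Hydrate: copy the column values of a flat row onto a new entity.
    public static TEntity Hydrate<TEntity>(IDictionary<string, object> row)
        where TEntity : new()
    {
        var entity = new TEntity();
        foreach (PropertyInfo property in MappedProperties(typeof(TEntity)))
        {
            object value;
            if (!row.TryGetValue(property.Name, out value))
                continue;
            // The database reports NULL as DBNull; the entity expects null.
            property.SetValue(entity, value is DBNull ? null : value, null);
        }
        return entity;
    }

    // Dehydrate: flatten the entity back into column/value pairs.
    public static IDictionary<string, object> Dehydrate<TEntity>(TEntity entity)
    {
        return MappedProperties(typeof(TEntity)).ToDictionary(
            property => property.Name,
            property => property.GetValue(entity, null) ?? DBNull.Value);
    }

    private static IEnumerable<PropertyInfo> MappedProperties(Type type)
    {
        return type.GetProperties(BindingFlags.Public | BindingFlags.Instance)
                   .Where(p => p.CanRead && p.CanWrite);
    }
}
```

Passing a row with `Id` and `Name` entries to `EntityMapper.Hydrate<Customer>` gives back a populated `Customer`, and `EntityMapper.Dehydrate` turns that instance back into the same flat set of values.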

Here, again, Davy has chosen to parallel NHibernate’s method of doing so, which allows us to take a look at a very simplified version and see what the advantages of this approach are.

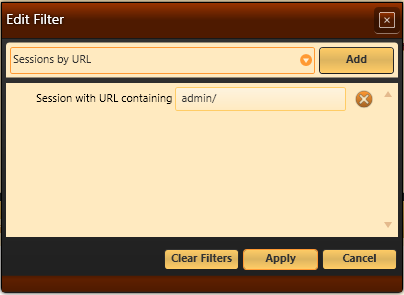

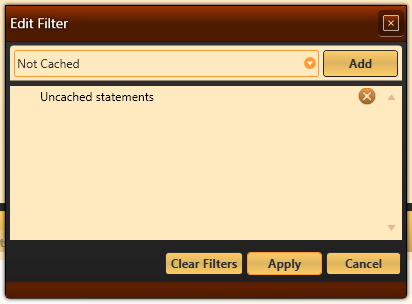

First, we can see how the session level cache is implemented, with the check being done directly in the entity hydration process. Davy has some discussion about the various options that you can choose at that point: whether to just use the values from the session cache, to update the entity with the new values, or to throw if there is a change conflict.

NHibernate’s decision at this point was to assume that the entity that we have is correct, and ignore any changes made in the meantime to the database. That turns out to be a good approach, because any optimistic concurrency checks that we might want will run when we commit the transaction, so there isn’t much difference from the result perspective, but it does simplify the behavior of NHibernate.
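As a rough sketch of that decision (my own simplification, not Davy's or NHibernate's actual code), the cache check during hydration could look like the following. The `TryToFind` signature mirrors the call that appears in Davy's code; `Store`, `Order` and `OrderHydrater` are hypothetical names introduced for the example.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical entity, used only for illustration.
public class Order
{
    public int Id { get; set; }
    public string Status { get; set; }
}

// Hypothetical session level cache keyed by (entity type, primary key).
public class SessionLevelCache
{
    private readonly Dictionary<Tuple<Type, object>, object> entities =
        new Dictionary<Tuple<Type, object>, object>();

    public object TryToFind(Type type, object id)
    {
        object entity;
        return entities.TryGetValue(Tuple.Create(type, id), out entity) ? entity : null;
    }

    public void Store(Type type, object id, object entity)
    {
        entities[Tuple.Create(type, id)] = entity;
    }
}

public class OrderHydrater
{
    private readonly SessionLevelCache sessionLevelCache = new SessionLevelCache();

    public Order Hydrate(IDictionary<string, object> row)
    {
        object id = row["Id"];

        // If the session already holds this entity, assume it is correct
        // and ignore whatever values the row carries.
        var cached = (Order)sessionLevelCache.TryToFind(typeof(Order), id);
        if (cached != null)
            return cached;

        var order = new Order { Id = (int)id, Status = (string)row["Status"] };
        sessionLevelCache.Store(typeof(Order), id, order);
        return order;
    }
}
```

Hydrating two rows with the same `Id` through one `OrderHydrater` returns the same instance both times, and any in-memory change made after the first load survives the second one.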

Next, there is the treatment of reference properties, what NHibernate calls many-to-one associations. Here is the relevant code (edited slightly so it can fit on the blog width):

    private void SetReferenceProperties<TEntity>(
        TableInfo tableInfo,
        TEntity entity,
        IDictionary<string, object> values)
    {
        foreach (var referenceInfo in tableInfo.References)
        {
            if (referenceInfo.PropertyInfo.CanWrite == false)
                continue;

            object foreignKeyValue = values[referenceInfo.Name];

            if (foreignKeyValue is DBNull)
            {
                referenceInfo.PropertyInfo.SetValue(entity, null, null);
                continue;
            }

            var referencedEntity = sessionLevelCache.TryToFind(
                referenceInfo.ReferenceType, foreignKeyValue);

            if (referencedEntity == null)
                referencedEntity = CreateProxy(tableInfo, referenceInfo, foreignKeyValue);

            referenceInfo.PropertyInfo.SetValue(entity, referencedEntity, null);
        }
    }

There are a lot of things going on here, so I’ll take them one at a time.

You can see the uniquing process at work. If we already have the referenced entity loaded, we will get it directly from the session cache, instead of creating a separate instance of it.

It also shows something that Davy has promised to touch on in a separate post: lazy loading. I had an early look at his implementation and it is pretty, so I’ll skip that for now.

This piece of code also demonstrates something that is very interesting: the lazy loaded inheritance many-to-one association conundrum, which I’ll touch on in a future post.

There are a few other implications of the choice of hydrating entities in this fashion. For a start, we are working with detached entities this way: the entity doesn’t have to have a reference to the session (except to support lazy loading). It also means that our entities are pure POCO, since we handle it all completely externally to the entity itself.

It also means that if we would like to handle change tracking (which Davy’s DAL currently doesn’t do), we have a much more robust way of doing so, because we can simply dehydrate the entity and compare its current state to its original state. That is exactly how NHibernate is doing it. This turns out to be a far more robust approach, because it is safe in the face of methods modifying state internally, without going through properties or invoking change tracking logic.
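Here is a small sketch of what that comparison could look like. It is an illustration of the idea only, not NHibernate's implementation: `SnapshotChangeTracker`, `Track`, `GetDirtyProperties` and the `Account` entity are all hypothetical names, and the dehydration is a naive reflection pass over the public properties.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical entity: Deposit changes state without any tracking hook.
public class Account
{
    public int Id { get; set; }
    public decimal Balance { get; private set; }

    public void Deposit(decimal amount) { Balance += amount; }
}

// Hypothetical tracker that remembers the dehydrated state of each entity.
// Entities are keyed by instance, assuming they do not override Equals.
public class SnapshotChangeTracker
{
    private readonly Dictionary<object, IDictionary<string, object>> snapshots =
        new Dictionary<object, IDictionary<string, object>>();

    // Remember the state of the entity as it was when loaded.
    public void Track(object entity)
    {
        snapshots[entity] = Dehydrate(entity);
    }

    // Dehydrate again and report every property whose value differs.
    public IList<string> GetDirtyProperties(object entity)
    {
        IDictionary<string, object> original = snapshots[entity];
        return Dehydrate(entity)
            .Where(current => !object.Equals(current.Value, original[current.Key]))
            .Select(current => current.Key)
            .ToList();
    }

    private static IDictionary<string, object> Dehydrate(object entity)
    {
        return entity.GetType().GetProperties()
            .Where(p => p.CanRead)
            .ToDictionary(p => p.Name, p => p.GetValue(entity, null));
    }
}
```

Calling `Deposit` never touches a public setter, yet after `Track(account)` and `account.Deposit(50m)` the tracker still reports `Balance` as dirty, because all it does is compare the two flat sets of values.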

I also wanted to touch on a few things that make the NHibernate implementation of the same thing a bit more complex. NHibernate supports reflection optimization and multiple ways of actually setting the values on the entity. It also supports things like components and multi-column properties, which means that there isn’t the neat ordering between properties and columns that makes Davy’s code so orderly.