Code ReviewWho Can Help Me

Originally posted at 3/22/2011

This time, taking on Who Can Help Me, I found some really interesting things there.

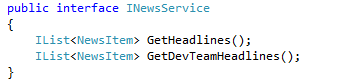

For one thing, we got this little guy:

I was all ready to discover INewsRepository –<> NewsRepository : Repository<NewsItem>, etc. What I found, instead was a real service:

That is how it is supposed to be, to be frank. There is a need to abstract something, and we write just enough code to make it work. I would argue with the implementation of this, however, because the approach for multi threading is wrong headed. We shouldn’t spin out a new thread pool work item just to execute the request when the FluentTwitter API already contains async version that can do quite well for us, but the overall concept is sound. And I was relieved not to find nested repositories in my first few steps there.

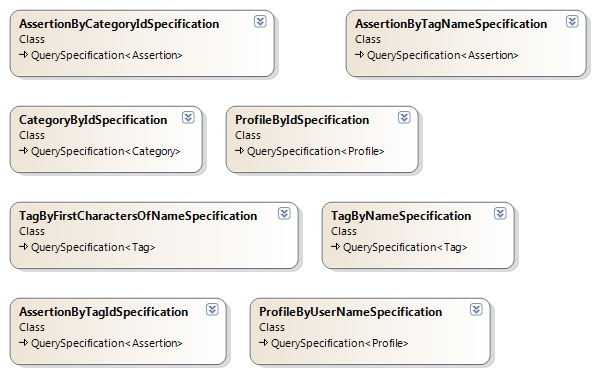

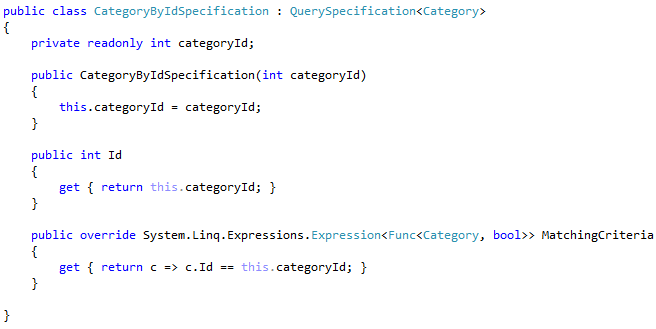

Of course, I then discovered that this nice service has some friends from the left side of the blanket:

I think that I expressed my opinion about code such as this:

A waste of keystrokes, and highly inefficient to boot. You don’t make queries by Id in NHibernate, you use Get or Load instead.

Overloading the infrastructure – After reviewing the code, I was surprised to see so much of it dedicated for caching:

What surprised me more is that in the entire application there were exactly two locations where a cache was used. In both cases, it led to the same service. Implementing a much simpler solution in that service would have chopped quite a bit of code out of this project.

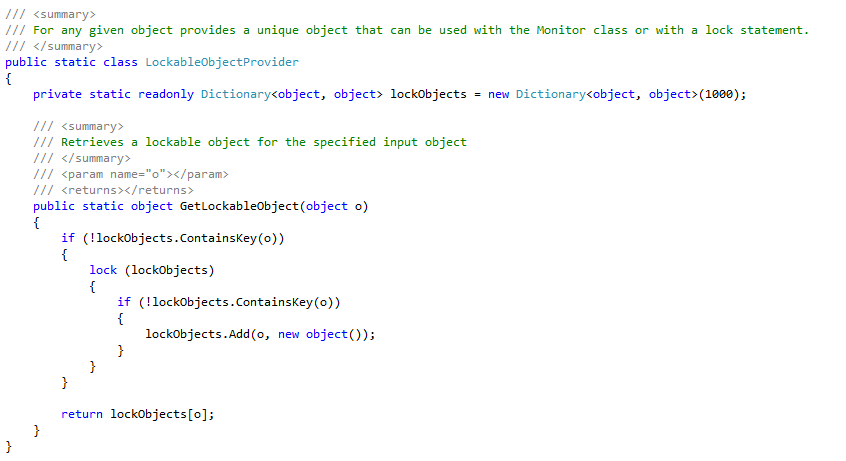

And then there was this:

Luckily, this is dead code, but I was quite amused by this code, in a “shake you head in disbelief” fashion.

First, for a code that is obviously meant to be used in a multi threaded fashion, it is not thread safe. Second, it is actually a memory leak waiting to happen, more than anything else. If you call that method, your items will never freed.

The next is a personal opinion, but I can’t help feeling that this is pretty heavy weight:

Well, that is true whenever you are looking at an XSD, but in this case, we just need to expose two properties, and I think that it would have been perfectly fine to stick that into the <appSettings/> section. There are actually several places where similar approach has been tried, but I don’t see this of any value if you aren’t writing a library that might require special configuration. If you are writing an app, using the default modes is usually more than enough.

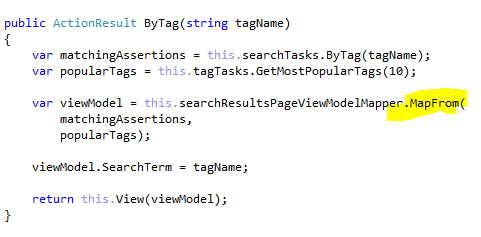

Reading the controllers code wasn’t a real surprise. One thing that did bother me is the amount of mapping that is going on there, and how much of that I was simply unable to follow. For example:

Which is then calling;

Which then goes into additional custom implementations and convention based ones.

The reason that this is important is that this is a prime location for Select N+1 issues, and indeed, we have several such occurrences of the problem just in this piece of code.

More posts in "Code Review" series:

- (26 Jun 2016) The bounded queue

- (15 Apr 2011) Who Can Help Me

- (01 Apr 2011) SharpArchitecture.MultiTenant

- (28 Nov 2007) PetShop 3.0

- (28 Nov 2007) The PetShop Application

Comments

As silly as LockableObjectProvider is (hard to believe the developer didn't spot the "memory leak" problem), I don't see why it's not thread safe? Looks like a standard double check lock - so won't work in Java land, but should work ok on the MS CLR.

Not that I'm advocating it, just curious :-)

@Steven Robbins

You can't use ContainsKey concurrently with Add. When Add changes the underlying structure, ContainsKey will break.

Oh, and the same goes for concurrently using Get and Add.

Although thinking about it, maybe ContainsKey won't break, but Get definitely can.

So, a ConcurrentDictionary ( msdn.microsoft.com/en-us/library/dd287191.aspx) could be used instead if the double-locking ...

@Patrick ah - it's a dictionary problem, fair enough, I was looking at the general structure rather than what it was actually doing. Thanks.

Out of interest, how does it "break" ? It should never (theoretically) be calling add and get concurrently if ContainsKey doesn't return true until the item is actually added, and not when it's halfway though adding it.

Yes, on the face of it, this is safe code, in the sense that we will never get DuplicateKeyException. But the implementation of ContainsKey() isn’t safe to run when Add() is also executing.

The actual behavior depends on the implementation, but it is easy enough to imagine a scenario where invariants that ContainsKey() relies on are broken for the duration of the Add() call.

I'm probably taking this off topic (into implementation details of dictionary and its internal data structures) .. probably sufficient to say "it's calling methods from multiple threads that aren't guaranteed to be thread safe" and leave it at that :-)

public static object GetLockableObject(object o)

{

return o;

}

surely?

Damien :: In general. no - you shouldn't be locking the actual object under usage, because it's not fine grained enough and if other consumers are also doing similar you can end up with deadlocks occurring over unrelated functionality that shouldn't involve deadlocks.

That said, the above implementation ALSO has that problem - you should really perform locks on specific data for specific functionality, and not perform locks on exposed functional objects.

Where is the memory leak?

"One thing that did bother me is the amount of mapping that is going on there, and how much of that I was simply unable to follow."

How would you prefer to handle the mapping? Is your concern that the mapping for the SearchResultsPageViewModel doesn't happen in one place?

The one question I've got is how does one avoid the ever growing service with an implementation like this? It seems like the NewsService could end up with quite a lot of code being so very procedural. I'm not arguing for abstraction.. but a little OO would be nice no?

@RJ They are storing objects as keys in a static dictionary. Even if every other reference to the object goes out of scope, they dictionary will still hold a reference as a key, and the dictionary is never cleared out. So anything added to the dictionary, which is any object you call LockableObjectProvider.GetLockableObject() on, will never be garbage collected.

It will be thread safe if the dictionary is declared as volatile... still I have no idea what this class suppose to do.

firefly,

No, it cannot be made thread safe as written

James,

My problem with the mapping is that we can't really see what is going on. It is broken all over the place.

I would rather see it all in one place so that I can follow it.

You are right... it's still breaking at the return... I was sloppy :)

What am I missing? The docs at msdn.microsoft.com/en-us/library/xfhwa508.aspx say "...where an enumeration contends with write accesses, the collection must be locked during the entire enumeration. To allow the collection to be accessed by multiple threads for reading and writing, you must implement your own synchronization." Are the docs wrong?

If not, this code seems to fulfil their requirements. (It can throw exceptions, but "threadsafe" doesn't mean "exception free". I'd assume that fail-fast is what we want.) The ContainsKey method will either get a true hit, a false miss, or a true miss. A true hit is no problem. Either kind of miss will enter the double-checked lock. Assuming we don't have a debugger attached (which can make "lock" theoretically non-thread-safe), the second ContainsKey evaluation will have its collection locked for the entire enumeration, per the docs.

There could be a problem if you pass in an object that overrides Equals() in a sketchy way, such that it's not safe for Dictionary use. If two different objects can appear equal, then they'll get the same synchronization object. But that sort of kludge is not "safe" for most uses anyhow.

Comment preview