Code ReviewPetShop 3.0

I got some feedback about my previous review, that the PetShop 2.0 was recognized as architecturely unsound, and that I should look at version 3.0 of the code, which is:

Version 3.x of the .NET Pet Shop addresses the valuable feedback given by reviewers of the .NET Pet Shop 2.0 and was created to ensure that the application is aligned with the architecture guideline documents being produced by the Microsoft.

I have to say, it looks like someone told the developers, we need an architecture, go build one. The result is... strange. It make my spider sense tingle. I can't really say that it is wrong, but it makes me uncomfortable.

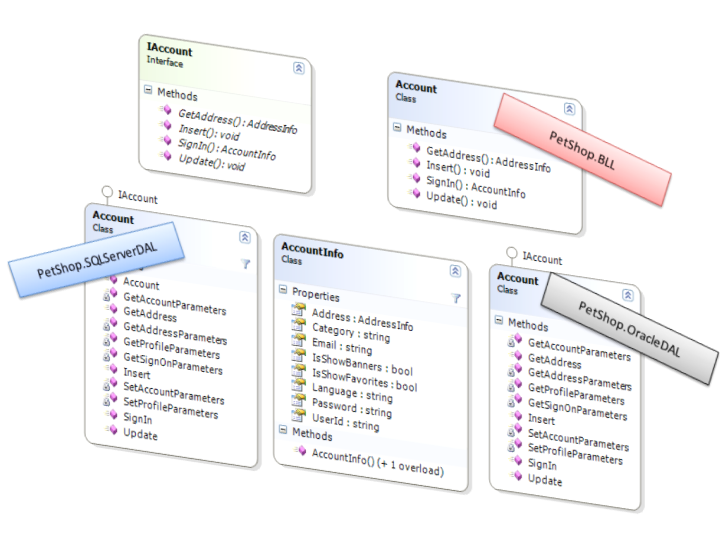

Take a look at the account class, and how it is structured:

Well, I don't know about you, but that is poor naming convention to start with. And I am seeing here an architecture by rote, if this makes any sort of sense.

Then there are such things as:

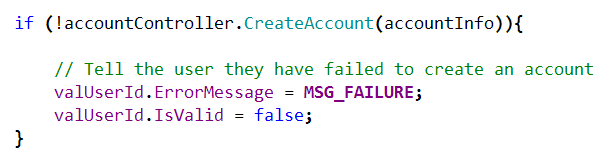

Which leads us to this:

The MSG_FAILURE is:

I am sorry, but while there was some effort made here over the previous version, I am not really impressed with it. As I said, the architecture is now probably sound, if suspicious because of lack of character, but the implementation is still not really one that I would call decent. I have to admit about a strong bias here, though. I don't like te naked CLR, but the code has missed a lot of opportunities to avoid unnecessary duplication and work.

I have been also asked what I would consider a good sample application, and I would like to recommend Cuyahoga, as the application that probably models my thinking the best. SubText is also good, but it is more interesting case, because I don't like its reliance on stored procedures. Nevertheless, it is a valid approach, and it certainly serving this blog very well.

More posts in "Code Review" series:

- (26 Jun 2016) The bounded queue

- (15 Apr 2011) Who Can Help Me

- (01 Apr 2011) SharpArchitecture.MultiTenant

- (28 Nov 2007) PetShop 3.0

- (28 Nov 2007) The PetShop Application

Comments

Hey Oren,

your comment about not using Stored Procs got me wondering. When you're using ORM tools like NHIbernate, how do you avoid not using stored procs? At some point, surely it would be better, performance wise, for a stored proc to perform some intense data calculation/manipulation, on a high performance server, than you retrieving the original data into domain objects, and calculating yourself?

Our current winforms project is based on market performance data, we have several stored procs that manipulate historical data to produce summary data (for example on yearly performance). Execution of these stored procs takes seconds on the server, where as retrieving all that yearly data and calculating it in our business layer would take up network bandwidth, user CPU, thus slower performance.

MarkK,

It really depends on the scenario.

OR/M can certainly support such things natively. With NHibernate for instance, you can ask it to do almost anything that SQL can do, and it will do that (on the server).

There are situations where you do want SP, but those are rare and far between.

Won't this approach lead to an "anemic" domain model? It looks like the model is just data with no behavior, and that the behavior has been delegated to the BLL. While it might be appropriate here, it seems to suggest that your design should be structured as

Model.MyThing (bag of setters and getters only)

BLL.MyThing (behaviors)

Data.MyThing (CRUD)

The .NET framework folks who figured out that a plethora of Exception information is a good thing need to spread the message throughout Microsoft and encourage that in their developer guidelines. Perhaps there should also be a SensitiveData IDictionary<string, object> to encourage logging of all information that could be potentially helpful.

Oren I agree the above snippets of code do seem to have a smell when you look at them in this isolated fashion.

I told you I was working on a sample project using activerecord, it is no small task! because there are many correct ways to build an enterprise app using activerecord etc depending on the goal of the application.

When you are new to some of these technologies, some of your old approaches must change and adapt to fit the new technologies. I am discovering this with activerecord/monorail integration. It has a much different architecture than my usual ASP.net/Nhibernate routine. I miss asp.net server controls, but things like the template engine and the scaffolding was love at first sight!

I am currently not impressed at my attempt to build some "best-practice" sample app, but I have a beginnings on google code, if you are ever have a need for something that encapsulates activerecord in a "supervising controller" style fashion.

Connor,

It sure does lead to an anemic domain model. Or, since their "domain classes" don't even have stuff like interception for lazy loading etc - i.e no behavior at all - one could argue that they don't really have a domain model, just a set of DTOs.

And while I agree with you that this approach is appropriate at times, it may not be so when one is trying to rewrite a demo app for the third time, this time to showcase an application architecture including a domain model...

I created a version of PetShop - a modified PetShop 2 - that used the O/R Mapper I developed at the time (Pragmatier) to show that a version actually using a real domain model and an O/R Mapper could scale just as well. You can read about the test results here: http://www.devx.com/vb2themax/Article/19867

Anyway, the thing is that without an O/R Mapper to do the heavy lifting for you, supporting the domain model in a demo application for which you are /also/ trying to publish a competitive Lines Of Code (LOC) count isn't going to be easy. PetShop 3 is pretty much what you end up with.

If MS had an O/R Mapper as part of their base library, meaning they wouldn't have to count the LOC for it, then they could publish a much nicer PetShop, that wouldn't have to have an anemic domain model. Perhaps they will when Linq2Sql or Linq2EF is out...

/Mats

The thing making your spidey sense tingle has a name, it's called a "component model"..and it's quite a different beast than a Domain Model apps..

One of the driving ideas behind it is the concept of separating object shape (state) and behavior. That's why you see an AccountInfo with all state, and an Account class with all behavior.

You will usually see that stateful class mapped directly from the database columns.

Their entities are not OO entities, they are the entites you would see on an Entity/Relationship Diagram (ie...database entities).

This style of design also seemed to be the direct target for the initial versions of the Entity Framework (replace the DALs with EF).

Just FYI...

Evan

Connor,

Yes, thank you for putting the finger on it.

Pete,

The reason that looked at that was that I wanted a legacy code base, I got more than I expected

Why not review PetShop 4.0?

http://msdn2.microsoft.com/en-us/library/aa479070.aspx

Hi Guys,

Why does the Alt.Net group not come out with a reference architecture of their own.

We don't need a complex application, in fact I would rather you clone the Pet Shop Demo itself. People would then be able to contrast the difference between your architecture and the one coming out of Redmond.

Also you could use all the tools you want Castle, Spring, NHibernate, MonoRails.

yup, I agree to [An Amateur Developer ] suggestion.

Take a look at this other unofficial PetShop implementation :

http://www.dotnetguru.org/modules.php?op=modload&name=Downloads&file=index&req=getit&lid=14

This one show many good application architecture practices.

Yann.

Comment preview