What happens behind the scenes: NHibernate, Linq to SQL, Entity Framework scenario analysis

One of the things that I began doing since starting to work on multiple OR/M Profilers is to compare how all of them are handling a particular task. This is by no means a comparative analysis, but it is an interesting data point.

The scenario in question is loading a blog with all its posts and comments.

Let us start with NHibernate:

var blogs = s.CreateQuery(

@"from Blog b

left join fetch b.Posts p

left join fetch p.Comments

where b.Id = :id")

.SetParameter("id", 1)

.List<Blog>();

Will generate the following SQL

select blog0_.Id as Id7_0_, posts1_.Id as Id0_1_, comments2_.Id as Id2_2_, blog0_.Title as Title7_0_, blog0_.Subtitle as Subtitle7_0_, blog0_.AllowsComments as AllowsCo4_7_0_, blog0_.CreatedAt as CreatedAt7_0_, posts1_.Title as Title0_1_, posts1_.Text as Text0_1_, posts1_.PostedAt as PostedAt0_1_, posts1_.BlogId as BlogId0_1_, posts1_.UserId as UserId0_1_, posts1_.BlogId as BlogId0__, posts1_.Id as Id0__, comments2_.Name as Name2_2_, comments2_.Email as Email2_2_, comments2_.HomePage as HomePage2_2_, comments2_.Ip as Ip2_2_, comments2_.Text as Text2_2_, comments2_.PostId as PostId2_2_, comments2_.PostId as PostId1__, comments2_.Id as Id1__ from Blogs blog0_ left outer join Posts posts1_ on blog0_.Id = posts1_.BlogId left outer join Comments comments2_ on posts1_.Id = comments2_.PostId where blog0_.Id = 1 /* @p0 */

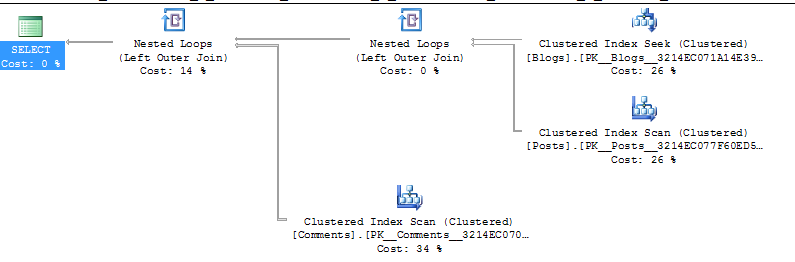

This result in a fairly simple query plan:

However, you should note that this also result in a Cartesian product, which may not be what you wanted.

Linq to SQL doesn’t really provide a good way to express what I wanted, but it does get the job done:

var dataLoadOptions = new DataLoadOptions(); dataLoadOptions.LoadWith<Blog>(x => x.Posts); dataLoadOptions.LoadWith<Post>(x => x.Comments); using (var db = new BlogModelDataContext(conStr) { LoadOptions = dataLoadOptions }) { db.Blogs.Where(x => x.Id == 1).ToList(); }

Interestingly enough, this does not generate a single query, but two queries:

-- statement #1 SELECT [t0].[Id], [t0].[Title], [t0].[Subtitle], [t0].[AllowsComments], [t0].[CreatedAt] FROM [dbo].[Blogs] AS [t0] WHERE [t0].[Id] = 1 /* @p0 */ -- statement #2 SELECT [t0].[Id], [t0].[Title], [t0].[Text], [t0].[PostedAt], [t0].[BlogId], [t0].[UserId], [t1].[Id] AS [Id2], [t1].[Name], [t1].[Email], [t1].[HomePage], [t1].[Ip], [t1].[Text] AS [Text2], [t1].[PostId], (SELECT COUNT(* ) FROM [dbo].[Comments] AS [t2] WHERE [t2].[PostId] = [t0].[Id]) AS [value] FROM [dbo].[Posts] AS [t0] LEFT OUTER JOIN [dbo].[Comments] AS [t1] ON [t1].[PostId] = [t0].[Id] WHERE [t0].[BlogId] = 1 /* @x1 */ ORDER BY [t0].[Id], [t1].[Id]

The interesting bit is that while there are two queries here, this method does not generate a Cartesian product, so I have to consider this a plus. What I would like to know is whatever this is intentionally so or just a result of the way Linq to SQL eager loading is structured.

The query plan for this is simple as well:

Finally, Entity Framework (both 3.5 and 4.0), using this code:

db.Blogs

.Include("Posts")

.Include("Posts.Comments")

.Where(x => x.Id == 1)

.ToList();

This code will generate:

SELECT [Project2].[Id] AS [Id], [Project2].[Title] AS [Title], [Project2].[Subtitle] AS [Subtitle], [Project2].[AllowsComments] AS [AllowsComments], [Project2].[CreatedAt] AS [CreatedAt], [Project2].[C1] AS [C1], [Project2].[C4] AS [C2], [Project2].[Id1] AS [Id1], [Project2].[Title1] AS [Title1], [Project2].[Text] AS [Text], [Project2].[PostedAt] AS [PostedAt], [Project2].[BlogId] AS [BlogId], [Project2].[UserId] AS [UserId], [Project2].[C3] AS [C3], [Project2].[C2] AS [C4], [Project2].[Id2] AS [Id2], [Project2].[Name] AS [Name], [Project2].[Email] AS [Email], [Project2].[HomePage] AS [HomePage], [Project2].[Ip] AS [Ip], [Project2].[Text1] AS [Text1], [Project2].[PostId] AS [PostId] FROM (SELECT [Extent1].[Id] AS [Id], [Extent1].[Title] AS [Title], [Extent1].[Subtitle] AS [Subtitle], [Extent1].[AllowsComments] AS [AllowsComments], [Extent1].[CreatedAt] AS [CreatedAt], 1 AS [C1], [Project1].[Id] AS [Id1], [Project1].[Title] AS [Title1], [Project1].[Text] AS [Text], [Project1].[PostedAt] AS [PostedAt], [Project1].[BlogId] AS [BlogId], [Project1].[UserId] AS [UserId], [Project1].[Id1] AS [Id2], [Project1].[Name] AS [Name], [Project1].[Email] AS [Email], [Project1].[HomePage] AS [HomePage], [Project1].[Ip] AS [Ip], [Project1].[Text1] AS [Text1], [Project1].[PostId] AS [PostId], CASE WHEN ([Project1].[C1] IS NULL) THEN CAST(NULL AS int) ELSE CASE WHEN ([Project1].[Id1] IS NULL) THEN CAST(NULL AS int) ELSE 1 END END AS [C2], CASE WHEN ([Project1].[C1] IS NULL) THEN CAST(NULL AS int) ELSE CASE WHEN ([Project1].[Id1] IS NULL) THEN CAST(NULL AS int) ELSE 1 END END AS [C3], [Project1].[C1] AS [C4] FROM [dbo].[Blogs] AS [Extent1] LEFT OUTER JOIN (SELECT [Extent2].[Id] AS [Id], [Extent2].[Title] AS [Title], [Extent2].[Text] AS [Text], [Extent2].[PostedAt] AS [PostedAt], [Extent2].[BlogId] AS [BlogId], [Extent2].[UserId] AS [UserId], [Extent3].[Id] AS [Id1], [Extent3].[Name] AS [Name], [Extent3].[Email] AS [Email], [Extent3].[HomePage] AS [HomePage], [Extent3].[Ip] AS [Ip], [Extent3].[Text] AS [Text1], [Extent3].[PostId] AS [PostId], 1 AS [C1] FROM [dbo].[Posts] AS [Extent2] LEFT OUTER JOIN [dbo].[Comments] AS [Extent3] ON [Extent2].[Id] = [Extent3].[PostId]) AS [Project1] ON [Extent1].[Id] = [Project1].[BlogId] WHERE 1 = [Extent1].[Id]) AS [Project2] ORDER BY [Project2].[Id] ASC, [Project2].[C4] ASC, [Project2].[Id1] ASC, [Project2].[C3] ASC

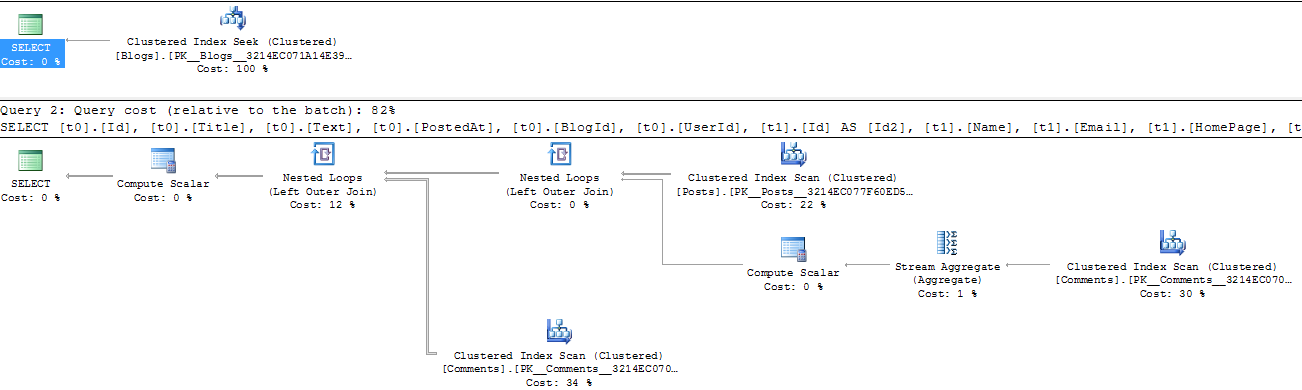

The query plan for this seems overly complicated:

If you’ll look closely, you’ll see that it generate a join between Blogs, Posts and Comments, essentially creating a Cartesian product between all three.

I am not going to offer commentary on the results, but open a discussion on them.

Comments

hmm - maybe I'm missing out on something, but in which case does the NH approache create a cartesian product?

As far as I know a cartesian product means to take all blogs, posts and comments and create a "matrix" of all possible combinations of blogs, posts and comments.

The execution plans are very similar in each case, so the query performance should be roughly the same.

I second Henning - Cartesian product is tableA x tableB without restricting join or where clauses.

In all of the 3 cases you need 1 blog and its associated posts and their associated comments.

For me the difference is that in the NHibernate case you get your Blog-entity redundantly back for every row returned. In the Linq-to-SQL you only get it once. So its more a matter of getting superfluous data from the database to the application (which can also important be important based on amount of rows and data in Blog ofcourse).

Both solutions are still getting superfluous data for the Posts (when more than one Comment per Post exists).

On the downside for the Linq-to-sql SQL there is the Count aggregation which takes around 31% in total (Rightmost scan of Comments in executionplan). This aggregation is completely missing (not necessary) in NHibernate.

Concerning EF, the query is pretty much identical to the one from NHibernate, apart from the expensive sorting (which is also the reason for the case logic - that prevents sorting on null's). Why they find it necessary to do the "outer" select I don't know - but could imagine is has to do with the way the SQL is constructed from the expression tree.

....which is why i just write the SQL I want in the first place...

Was this SQL generated from EF1 or EF4?

In a previous post of Ayende I have seen Entity Framework use a UNION ALL which is superior to any of the above approaches. I wonder

a) if NHibernate will support it in the future

b) why EF sometimes chooses to do such a bad job as it did in the example above

Curious what the logic i/o count is for the three?

It would be interesting to see what the equivalent ESQL comes up with, because I don't really trust the LINQ to produce a good equivalent given the history of the late addition of LINQ to the entity framework by the ADO.NET team.

SELECT VALUE

FROM

WHERE

I usually like to try the similar in ESQL, because of the rushed nature of LINQ in EF 1.0.

I'm really looking forward to some examples of there the new EF4 query output.

Just curious if it will be a bit nicer to look at.

LINQ to SQL is not very good at eager loading one-to-many associations. It often generates multiple queries or even select n+1 scenarios.

The output of the NHibernate sample is exactly what I would expect. Why does it need to be any more complicated?

So Ayende, which is better?

What version of Entity Framework is being used here - 3.5SP1 or 4.0? There was a lot of query optimization work done in 4.0.

blogs.msdn.com/.../...ed-sql-in-net-4-0-beta1.aspx

[)amien

I think this is EFv1. Assuming I have that correct, I would be interested to see the same SQL and query plan with EFv4 when it is finally released.

As much as I want EF to have the favourable SQL and query plan my gut reaction is to shy away from anything that is more complicated than it needs to be.

There is an argument to say "it's a tool, don't worry about it" but sooner or later you will need to and having something nicely formatted and concise to wade through will be easier!

Damien,

It is 3.5, I'll post about the difference between 3.5 & 4.0 shortly

Damien,

I just tested that with EF 4.0

The queries are basically the same, the only difference in the aliases used.

May be I'm wrong, bot all the cases seems nearly equally bad for me:

The only index seek operation there happens for PK_Blogs. Other indexes, that are normally quite large, are scanned. May be this is acceptable in exactly your case (SQL Server makes such decision based on statistics), but in general, this is quite bad. Are there any indexes allowing to do this better at all? E.g. I'd create indexes for Post.Blog and Comment.Post properties.

L2S plan seems the strangest one. It scans 3 indexes. Why? Because it computes count(*) aggregate, that actually isn't really necessary in your case (count of items in the collection can be computed completely on the client).

EF and NH plans are very similar, the only difference is in sequence of nested loop joins - in NH case it is much better (potential number of rows @ left & right side in both nested loops must be much lower). But this isn't something that is intrinsically dependent on NH on EF - it's just the result of SQL Server query optimizer work. I.e. in your particular case (data, I mean) the second plan might really look more attractive for it.

Btw, I'd like to see the same, but this appropriate indexes. There is nothing to compare without them - i.e. SQL Server already did the best while decided to make a single index seek at least ;)

Too many mistypings again ;)

" NH or EF"

"with appropriate ..."

Or more data, if they are there. SQL Server might really prefer scan, if there are just few hundreds of records in Posts and Comments tables (i.e. very few pages).

What would the execution plan be if you were to do say (with LINQ to SQL)

var result = from p in ctx.Posts

Because you would be doing a join, and not two seperate queries.

One thing that I find problematic in NHibernate is doing server-side paging while eager-loading many-to-one associations. DistinctEntityRoot transformer operates in memory so you will end with less items per page than expected.

It looks like the options are using batch loading instead of eager loading or having a subquery that returns distinct ids. Is there a more elegant way of doing this?

You could do a transformation (in the query convert to a DTO containing all the information you want). That way, you don't have to think about eager and lazy loading, for display purposes.

Until there are 2 index scans and 2 nested loop joins, I''m not sure if there are any reasons for optimizing SQL here. I.e. indexes and properly defined foreign key constraints must be established first.

Based on the costs in the query plan I'm guessing there is virtually no data in the test database? How else would a clustered index seek and a clustered index scan (on what should be a single item in the nested loop) have the same cost?

What happens when there is significantly more data loaded?

My initial thought was the question the database design, but now I'm more concerned about the sample data size and discussing optimization on such a small data set that it would really never matter.

John Chapman is right but in case of EF you can see clearly that it is inferior to the other two. The SQL is ridiculous.

Most likely. One more evidence: estimated amounts of data are large, there must be hash or merge join instead of nested loops.

+1.

Sorry, "One more evidence: IF estimated amounts"...

I agree with Alex for the most part. My initial reaction was to gawk at the number of clustered index scans (e.g. table scans) in each of the query plans. Perhaps this is somewhat of a misnomer in this case if there isn't much data in the tables or a lack of indexes, though.

Tossing that aside for the moment, it does seem LINQ to SQL is the worst case of the three and I don't understand why it needs COUNT(*) from Comments. I may be mistaken (I haven't peeked at the internal LINQ to SQL code), but this could probably be removed and handled more efficiently on the client side.

As far as the amount of data passed across the network, LINQ to SQL is probably somewhat more efficient. NHibernate and Entity Frameworks isn't exactly returning a Cartesian product, but it's close enough I think I understand why you say it is.

Just curious here, but have hierarchical resultsets fallen out of favor? I haven't dealt with them in years and have one VB6 project using them that I need to rewrite in C# at some point.

Other than the needless sort (not sure why that's there) and the compute scalars (which are negligible in the query plan) the EF and NH query plans are equivalent.

Someone's already mentioned that a true head to head match would be NQL vs ESQL (or Linq to NHibernate vs Linq to Entities) right now you're comparing apples to oranges.

Also, without a large dataset and database optimization (indices where they need to be). The query plan tells you nothing. That sort accounts for 47% of the operation, but if the total execution time is under 5 milliseconds for 10k rows who cares? The database isn't your bottleneck in that case, the network will be.

Everything needs to be considered in context. The benefit of EF and NH is that you don't have to worry about these things until they become a problem. If you're noticing response issues, that's when you pop open EFProf/NHProf (or the built in SQL Profiler for SQL Server) and look what's going on. Heck SQL Server even comes with an analysis tool that will recommend ways to improve the performance of the database from your usage. Make your load tests, run it against your database with the analysis tool on and look at the results.

Finally, after looking at all of these, if I still see a problem, I have the option of wrapping that call in a sproc with EF or NH.

It's a good thing that you're providing these tools for devs to understand what's going on behind the scenes. But without a skilled DBA who can provide true guidance on whether that should give pause, or if it's a code or Database concern it's like having an MRI and no Doctor to read it.

Comment preview