RavenDB 5.3 New Features: Incremental time series

In RavenDB 5.0 we had a major new feature, native time series support. Using this feature, you can store values over time, query and aggregate them, store them efficiently, produce rollups, etc.

The classic example for time series data in RavenDB is data coming from sensors. For example, a Fitbit monitoring heart rate or a stock exchange feed giving you stock values. You don’t care about a particular value, you care about the value over time. It turns out that there are quite a lot of use cases for this kind of data. We have seen a major pickup in IoT-related fields in particular.

However, the API we provided for inserting time series data, Append(), has a limitation.

The API gives you the ability to record a value (or a set of values) at a particular point in time, with an optional tag for additional meaning. What is the problem with this API, then?

Well, it works great if you are processing data from a single source (the stock exchange feed, or a medical device), but it fails to do its job if you need to record multiple values for the same timestamp.

Huh? What does that even mean? If we are storing a value per timestamp, obviously there should be one value for that timestamp. How can there be multiple values? Note that I’m not talking about something like location (with latitude and longitude coordinates) here; that is covered by storing an array of values on the same timestamp.

The issue happens when you have the need to record multiple different values at the same timestamp. Typical time series are things like Heartrate, Location, StockPrice, etc. Having multiple values for the same thing at the same time frame doesn’t really work. In the Location time series, if I’m both here and there, you can expect trouble (if only because the paradox cops will show up). A stock may have different prices at the same time in different exchanges, sure, but that is not the same value, by its very nature.

There is a common scenario where this does happen: when what I’m recording is not the full value, but part of that value. The classic example is tracking page views. Let’s say that I want to know how many people are looking at this blog post. I cannot use the Append() API for that purpose, because each individual operation belongs to a particular timestamp. What happens if I have two views on this post at the exact same millisecond? For that matter, what happens in the more “interesting” case of having writes to the same millisecond on two different nodes in the cluster?
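To make the problem concrete, here is a minimal sketch in plain Python of what overwrite-on-append does to a view counter. It is a toy model, not the RavenDB client API: the `append` helper, the `views` dictionary, and the timestamp are all made up for illustration.

```python
from datetime import datetime, timezone


def append(series: dict, timestamp: datetime, value: float) -> None:
    """Toy append: one entry per timestamp, a later write replaces the earlier one."""
    series[timestamp] = value


views: dict = {}
same_instant = datetime(2021, 11, 12, 10, 0, 0, 123000, tzinfo=timezone.utc)

append(views, same_instant, 1)  # first visitor
append(views, same_instant, 1)  # second visitor, same millisecond

# Two people viewed the post, but only one entry survives.
total_views = sum(views.values())
```

In this model `total_views` ends up as 1, even though two views were recorded, which is exactly the kind of silent loss that makes append a poor fit for counting.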

With time series as we envisioned them for the 5.0 release, that wasn’t an issue: a time series had a single value at a particular timestamp. But a scenario such as tracking views, or any scenario where we want to record partial data and have RavenDB take care of everything else, isn’t served well by this model.

Note that RavenDB already has the notion of distributed counters, which are intended specifically for this kind of thing. It is trivial in RavenDB to implement a counter that tracks the overall views on a post. It will also handle concurrency, distributing data between nodes, and everything else that needs to be handled. So why can’t I use that?

It turns out that I typically want to know more than just the total number of views on the post, I want to know when they happened. Counters are only a partial answer for that.

That is why incremental time series were created. They marry the ability of time series to track a value over time with the distributed counters’ ability to aggregate information concurrently and in a safe distributed manner. The new API for incremental time series is the Increment() method.

The changes are apparent at the API level: Increment() does not set the value, it increments it by a delta. So two increments on the same timestamp will give you the right result. Note that we no longer have a way to tag the entry. That is no longer meaningful, because a single timestamp may have multiple different values. The method is called Increment(), but note that you can also pass negative values if you want to reduce the amount.

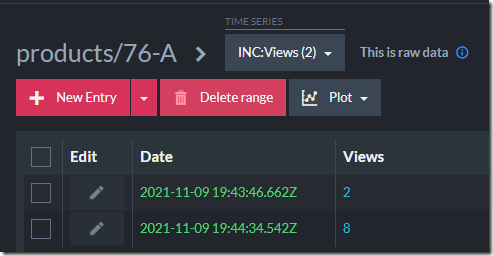

You can see in the image what this looks like in the Studio. An incremental time series is one that has the “INC:” prefix in its name. Such a time series accepts only increment operations and will reject attempts to append values to it. In the same sense, a non-incremental time series will not allow you to increment a value, only append new entries. We wanted a strong separation between the two time series modes because mixing them up resulted in a huge mess of edge cases that are really hard to solve.
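The separation between the two modes can be pictured with a small toy store in plain Python. This is only a sketch of the rule described above, not how RavenDB implements it: the `ToyTimeSeriesStore` class, its methods, and the use of `ValueError` are hypothetical, and the case-sensitive prefix check is an assumption.

```python
INCREMENTAL_PREFIX = "INC:"  # assumption: a plain, case-sensitive prefix check


class ToyTimeSeriesStore:
    """Hypothetical in-memory store that enforces one write mode per series name."""

    def __init__(self) -> None:
        self._series: dict = {}

    def append(self, name: str, timestamp, value: float) -> None:
        if name.startswith(INCREMENTAL_PREFIX):
            raise ValueError(f"'{name}' is incremental, it accepts only increments")
        # Append mode: a write at an existing timestamp replaces the entry.
        self._series.setdefault(name, {})[timestamp] = value

    def increment(self, name: str, timestamp, delta: float) -> None:
        if not name.startswith(INCREMENTAL_PREFIX):
            raise ValueError(f"'{name}' is not incremental, it accepts only appends")
        # Increment mode: a write at an existing timestamp is added to the entry.
        entries = self._series.setdefault(name, {})
        entries[timestamp] = entries.get(timestamp, 0) + delta

    def get(self, name: str, timestamp) -> float:
        return self._series[name][timestamp]
```

With this toy store, `store.increment("INC:Views", t, 1)` and `store.append("Heartrate", t, 72)` both succeed, while swapping the two series names raises an error in each case.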

I probably should explain the terminology here, because it reflects an important distinction:

- Append – add a new timestamp and the value(s) for that time. This appends a new entry to the time series. Appending an entry at a time that is already in the time series will overwrite the existing entry.

- Increment – add a new timestamp and its values. If there is already a value for that time in the time series, we’ll add the new value and the existing value together, writing their sum as the new value.

- That isn’t actually how it works internally, but that is the conceptual model.
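The conceptual model of Increment can be sketched in a few lines of plain Python. As noted, this is only the mental model and says nothing about the internal implementation; the `increment` helper and the sample timestamp are made up for illustration and are not part of the RavenDB client API.

```python
from collections import defaultdict
from datetime import datetime, timezone


def increment(series, timestamp: datetime, delta: float) -> None:
    """Conceptual increment: add the delta to whatever is already stored at that time."""
    series[timestamp] += delta


views = defaultdict(float)  # a missing timestamp starts at 0.0
t = datetime(2021, 11, 12, 10, 0, 0, 123000, tzinfo=timezone.utc)

increment(views, t, 1)  # first visitor
increment(views, t, 1)  # second visitor, same millisecond
after_two_views = views[t]

increment(views, t, -1)  # a negative delta reduces the amount
after_correction = views[t]
```

Here `after_two_views` is 2.0 and `after_correction` is 1.0: both writes to the same timestamp are kept, and a negative delta takes the value back down.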

Aside from using increment to set the values, incremental time series behave just like any other time series. You can query over them, aggregate, index, apply retention policies, and do everything else that you can do with a time series. They can also produce rollups, but note that a rolled-up incremental time series is a normal time series, not an incremental one: the special behavior of incremental time series does not extend to its rolled-up versions.

Here is a full example of how you can use this feature:

As usual, this is transactional with any other operation you may want to do, so you can increment a time series alongside uploading an attachment and modifying a document, as a single atomic transaction.

And now we can ask about view counts on an hourly basis for the last week, like so:
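Conceptually, such a query groups the entries into hourly buckets and sums each one. Below is a minimal sketch of that grouping in plain Python. It is not RQL and not the RavenDB client API: the `hourly_totals` function, its parameters, and the sample data are made up for illustration.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone


def hourly_totals(entries: dict, now: datetime, window: timedelta = timedelta(days=7)) -> dict:
    """Sum the values per hour for entries that fall within [now - window, now]."""
    cutoff = now - window
    buckets = defaultdict(float)
    for timestamp, value in entries.items():
        if cutoff <= timestamp <= now:
            # Truncate the timestamp to the start of its hour to get the bucket key.
            hour = timestamp.replace(minute=0, second=0, microsecond=0)
            buckets[hour] += value
    return dict(sorted(buckets.items()))


utc = timezone.utc
now = datetime(2021, 11, 12, 12, 0, tzinfo=utc)
entries = {
    datetime(2021, 11, 12, 10, 5, tzinfo=utc): 3,
    datetime(2021, 11, 12, 10, 40, tzinfo=utc): 2,
    datetime(2021, 11, 12, 11, 15, tzinfo=utc): 4,
    datetime(2021, 11, 1, 9, 0, tzinfo=utc): 7,  # older than a week, ignored
}

totals = hourly_totals(entries, now)
```

In this sample, `totals` holds two buckets: 5.0 views for the hour starting at 10:00 and 4.0 for the hour starting at 11:00, while the entry from 1 Nov falls outside the one-week window.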

This feature is going to be available in all editions of RavenDB 5.3, expected for release in mid-November. I have so many ideas about what you can use this for.

More posts in "RavenDB 5.3 New Features" series:

- (26 Nov 2021) Revisions includes

- (25 Nov 2021) JSON Patch

- (24 Nov 2021) Studio & Query improvements

- (23 Nov 2021) TCP Compression

- (18 Nov 2021) Experimental PostgreSQL wire protocol

- (17 Nov 2021) Elasticsearch ETL

- (15 Nov 2021) Incremental time series & implementing lambda based accounting

- (12 Nov 2021) Incremental time series

- (11 Nov 2021) Concurrent Subscriptions & Serial operations

- (10 Nov 2021) Concurrent subscriptions

Comments

Are you also thinking about adding multiple time-series for a single timestamp? Because at the moment we can't seem to be able to store these efficiently (/batch-wise)

The use-case for this is for example a system-monitoring tool like telegraf which monitors multiple time series per system and then sends them periodically. So it will send a cpu-usage, but at the same time the used-perc per disk (and usually it comes out to 32+ values / tags /time series per system)

The only way I can find for this to store it is by storing single time-series in a for-loop.

ChristiaanS,

I'm not sure that I follow your intent here. You can store more than a single value per timestamp, yes. However, there is a max of 32 values, as you noted.

What data are you recording, and is it meaningful as a single wide time series? Or are the values independent? Also, I’m not sure what you mean WRT the for loop?
